Abstract

In this article we propose a novel framework for the modelling of non-stationary multivariate lattice processes. Our approach extends the locally stationary wavelet paradigm into the multivariate two-dimensional setting. As such the framework we develop permits the estimation of a spatially localised spectrum within a channel of interest and, more importantly, a localised cross-covariance which describes the localised coherence between channels. Associated estimation theory is also established which demonstrates that this multivariate spatial framework is properly defined and has suitable convergence properties. We also demonstrate how this model-based approach can be successfully used to classify a range of colour textures provided by an industrial collaborator, yielding superior results when compared against current state-of-the-art statistical image processing methods.


1 Introduction

Wavelet methods have long been popular in statistical data analysis owing to their ability to provide efficient representations of signals and images. For an overview of wavelet techniques, see for example Vidakovic (1999) or Nason (2008). Amongst many notable contributions, a significant body of work has recently emerged in the area of locally stationary wavelet time series, stemming from the seminal work of Nason et al. (2000). Notable contributions include work on forecasting (Fryzlewicz et al. 2003), changepoint analysis (Killick et al. 2013), signal classification (Fryzlewicz and Ombao 2009) and alias detection (Eckley and Nason 2014).

In two dimensions, the analogous analysis of spatial data falls into a class of problems known as texture analysis. Historically, much of the work in this area has targeted greyscale (i.e. univariate) textured images. In recent years various contributions have been made to this area by the statistical community. These have typically sought to develop short-memory, multiscale models of the spatial covariance structure within textured images, see for example Eckley et al. (2010) or Mondal and Percival (2012). Within such contributions, model-based estimates are used to distinguish between subtly different texture types. The multiscale framework is particularly appealing in this setting since it is well documented that the mammalian visual system operates in such a manner, see for example Daugman (1990) or Field (1999). See Petrou and Sevilla (2006) for a comprehensive introduction to this field.

In this article we adopt a formal, model-based approach to the analysis of multivariate two-dimensional random fields. Specifically, we develop a modelling framework which permits a multiscale decomposition of the second-order structure of a colour image. The novelty of the approach is that our proposed model considers both (i) within-component spatial covariance, and (ii) the spatially-localised coherence between components of a multivariate process. This latter quantity provides a local, multiscale measure of the (linear) dependence between components, and thus enables the model to accommodate realistic spatial non-stationarity whilst also representing the inherent multiscale structure of texture. Such multiscale measures of dependence have already been shown to be beneficial in other contexts, see for example Park et al. (2014) or Sanderson et al. (2010). An associated estimation scheme for model quantities is also developed.

Within the image analysis setting, our model lends itself to the analysis of colour textured images. Such images are typically multivariate in nature, containing three channels of spatial data, for example red, green and blue (RGB) or hue, saturation and value (HSV). These channels may or may not exhibit cross-channel dependence. Of course, we are by no means the first to consider this problem; see, for example, work in the image processing community by Van de Wouwer et al. (1999) and Sengur (2008) and references therein. Some existing methods fail to describe both the multichannel (colour) and textural aspects of “colour texture”, whilst others do not represent its inherent multiscale nature. We hence use our model estimation scheme as the basis of an example application of our modelling framework: a colour texture classification method. Both simulated and industrially motivated examples demonstrate that our approach is able to differentiate between multivariate spatial processes which exhibit subtly different textural and colour properties, outperforming state-of-the-art competitor methods.

The remainder of the article is organised as follows. In Sect. 2 we introduce the multivariate locally stationary 2D modelling framework and also define key measures which describe the second-order structure within such processes: the locally stationary wavelet cross- and auto-spectra and locally stationary wavelet coherence. The estimation theory for such processes is established in Sect. 3 together with a simulated example which demonstrates the potential of our spectral estimators to describe localised structure. Finally in Sect. 4 we consider the application of our approach to two related texture classification challenges introduced by an industrial collaborator.

2 The multivariate 2D locally stationary wavelet model

The locally stationary two-dimensional wavelet (LS2W) model for spatial fields has been proposed as a formal framework to represent spatial inhomogeneity whilst also capturing the inherent multiscale structure of texture (Eckley et al. 2010). Loosely speaking, by incorporating the multiscale structure of (non-decimated) wavelets within a spatial context it is possible to describe a spatially evolving structure which allows both for stationary structure (when sufficiently localised) and spatially diverse structures (when looked at from a distance).

In this section we introduce the multivariate 2D locally stationary wavelet process model, which extends earlier work by Eckley et al. (2010) to the multivariate setting. We also introduce various scale-based measures of variation which describe the spectral and cross-spectral behaviour locally within non-stationary images.

2.1 The locally stationary wavelet model of a multivariate spatial process

We start by considering an m-dimensional spatial process, which we denote \(\mathbf {X}_{\mathbf {r;R}} = [X_{\mathbf {r;R}}^{(1)}; X_{\mathbf {r;R}}^{(2)}; \ldots ; X_{\mathbf {r;R}}^{(m)}]'\). Here each element is an individual channel (i.e. spatial plane) of the multivariate image. We seek to describe the cross-channel dependence. To this end we define the multivariate 2D locally stationary wavelet process (LS2Wmv) model as follows.

Definition 1

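An m-variate 2D locally stationary wavelet process, \(\mathbf {X}_{\mathbf {r;R}}\), is defined to have the following form:

$$\begin{aligned} X_{\mathbf {r;R}}^{(i)} = \sum _{\eta } \sum _{\mathbf {u}} W_{\eta }^{(i)}(\mathbf {u}/\mathbf {R})\, \xi ^{(i)}_{\eta ,\mathbf {u}}\, \psi _{\eta ,\mathbf {u}}(\mathbf {r}), \qquad i = 1, \ldots , m. \end{aligned} \tag{1}$$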

Here \(\mathbf {r} = (r,s) \in \{0, \ldots , R-1\} \times \{0, \ldots , S-1\} = \mathcal {R}\) and \(R=2^{k}\), \(S=2^{n}\) for some \(k, n \in \mathbb {N}\). The \(\{W_{\eta }^{(i)}(\mathbf {u}/\mathbf {R})\}\) can be thought of as scale-direction-location dependent transfer functions in rescaled space, where the index i represents a particular channel of the multivariate image. The random variables \(\{\xi ^{(i)}_{\eta ,\mathbf {u}}\}\) for \(\mathbf {u} \in \mathcal {R}\) are a collection of zero-mean random orthonormal increments which encapsulate the cross-channel dependence of the process through Eq. (3) (see below) and \(\{\psi _{\eta ,\mathbf {u}}\}\) are two-dimensional discrete non-decimated wavelets as defined in Eckley et al. (2010).

Note that each individual channel can be seen as a (univariate) LS2W process on a regular lattice. Note also that in the above we adopt a simplified notation for a scale-direction pair. Instead of having two separate indices representing scale and direction (i.e. j and l), a single index \(\eta \) combines both, with each value representing a particular decomposition scale in a given direction. More specifically, \(\eta :=\eta (j,l) = j + g(l)\) for \(j=1,\ldots ,J\) and \(l \in \{v,h,d\}\), where \(g(v)=0\), \(g(h)=J\) and \(g(d)=2J\), so that \(\eta \) takes values in \(\{1,\ldots ,3J\}\).
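The index map above can be illustrated with a short Python sketch. The helper names `eta` and `scale_direction` are our own and purely hypothetical; the code simply encodes \(\eta = j + g(l)\) with \(g(v)=0\), \(g(h)=J\), \(g(d)=2J\), together with its inverse.

```python
# Position of each direction in the ordering v, h, d, so that g(l) = position * J.
DIRECTION_INDEX = {"v": 0, "h": 1, "d": 2}


def eta(j, l, J):
    """Combined scale-direction index eta = j + g(l), with g(v)=0, g(h)=J, g(d)=2J."""
    if not 1 <= j <= J:
        raise ValueError("scale j must lie in 1..J")
    return j + DIRECTION_INDEX[l] * J


def scale_direction(e, J):
    """Inverse map: recover (j, l) from eta in 1..3J."""
    if not 1 <= e <= 3 * J:
        raise ValueError("eta must lie in 1..3J")
    block, j_minus_1 = divmod(e - 1, J)
    return j_minus_1 + 1, "vhd"[block]
```

For example, with \(J=3\) the vertical scales occupy \(\eta = 1,2,3\), the horizontal scales \(\eta = 4,5,6\) and the diagonal scales \(\eta = 7,8,9\).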

2.1.1 Modelling assumptions for the LS2Wmv model

In order that a principled estimation theory for LS2Wmv processes can be developed, we require several modelling assumptions to control the degree of non-stationarity of the process and enable the identification of the local spectral structure. Specifically:

1. \(\mathbb {E}[\xi ^{(i)}_{\eta ,\mathbf {u}}] = 0\) for all \(i\), \(\eta \) and \(\mathbf {u}\); hence \(\mathbb {E}(\mathbf {X}_{\mathbf {r;R}})=\mathbf {0}\).

2. The increments of the process, \(\{\xi ^{(i)}_{\eta ,\mathbf {u}}\}\), have the following properties:

$$\begin{aligned} \text{ Cov }[\xi _{\eta ,\mathbf {k}}^{(i)}, \xi _{\eta _{1},\varvec{m}}^{(i)}] = \delta _{\eta ,\eta _{1}} \delta _{\mathbf {k},\varvec{m}}, \end{aligned} \tag{2}$$

$$\begin{aligned} \text{ Cov }[\xi _{\eta ,\mathbf {k}}^{(p)}, \xi _{\eta _{1},\varvec{m}}^{(q)}] = \delta _{\eta ,\eta _{1}} \delta _{\mathbf {k},\varvec{m}}\, \rho ^{p,q}_{\eta }(\mathbf {k}/\mathbf {R}), \end{aligned} \tag{3}$$

where \(\delta \) denotes the Kronecker delta. As a consequence of extending to a multivariate setting, \(\rho ^{p,q}_{\eta }(\cdot )\) represents the possible coherence structure between each pair (p, q) of image channels, where we assume the images are in phase. This quantity is the LS2Wmv coherence between channels p and q, which we discuss in more detail in Sect. 2.2.

3. The LS2Wmv coherence in (3) is assumed to be Lipschitz continuous for each scale-direction \(\eta \), with Lipschitz constants \(R^{(p,q)}_{\eta }\) satisfying

$$\begin{aligned} \sum _{\eta } 2^{2j(\eta )}R^{(p,q)}_{\eta } < \infty . \end{aligned}$$

4. Additionally, for each scale-direction \(\eta \), the transfer function \(W_{\eta }^{(i)}(\mathbf {z})\) is Lipschitz continuous with constants \(L^{(i)}_{\eta }\) which are uniformly bounded in \(\eta \) and satisfy

$$\begin{aligned} \sum _{\eta } 2^{2j(\eta )} L^{(i)}_{\eta } < \infty . \end{aligned}$$
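To make Assumption 2 concrete, the following minimal Python sketch draws the increments \((\xi ^{(1)}_{\eta ,\mathbf {u}}, \ldots , \xi ^{(m)}_{\eta ,\mathbf {u}})\) at a single scale-direction and location so that each has unit variance and \(\text {Cov}[\xi ^{(p)}, \xi ^{(q)}] = \rho ^{p,q}_{\eta }(\mathbf {u}/\mathbf {R})\); drawing independently across \((\eta ,\mathbf {u})\) then gives the Kronecker delta structure in (2) and (3). It assumes Gaussian increments and a positive-definite matrix of coherences; the Cholesky construction and all function names are our own illustrative choices rather than part of the model definition.

```python
import math
import random


def cholesky(corr):
    """Lower-triangular L with L L' = corr, for symmetric positive-definite corr."""
    n = len(corr)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for k in range(i + 1):
            s = sum(L[i][t] * L[k][t] for t in range(k))
            if i == k:
                d = corr[i][i] - s
                if d <= 0:
                    raise ValueError("coherence matrix is not positive definite")
                L[i][i] = math.sqrt(d)
            else:
                L[i][k] = (corr[i][k] - s) / L[k][k]
    return L


def draw_increments(corr, rng):
    """One Gaussian draw of (xi^(1), ..., xi^(m)) at a fixed (eta, u) with unit
    variances and Cov[xi^(p), xi^(q)] = corr[p][q], i.e. rho^{p,q}_eta(u/R)."""
    L = cholesky(corr)
    z = [rng.gauss(0.0, 1.0) for _ in range(len(corr))]
    return [sum(L[i][k] * z[k] for k in range(i + 1)) for i in range(len(corr))]


def sample_correlation(xs, ys):
    """Pearson correlation of two equal-length samples (used to check the draws)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)
```

Repeating `draw_increments` many times at a fixed \((\eta ,\mathbf {u})\) and applying `sample_correlation` to the resulting columns should recover the specified coherences up to sampling error.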

2.2 Measuring local power and cross-channel coherence in LS2Wmv processes

It is well-known that the spectral structure of a signal or image can be used to describe its second-order behaviour (see for example Priestley (1981); Broughton and Bryan (2011)). For the multivariate setting on which we focus in this article, this leads to the consideration of (i) spatially localised wavelet spectra which represent the structure within a single channel, and (ii) cross-spectra which capture the structure across channels.

Definition 2

Let \(X_{\mathbf {r}}^{(p)}\) and \(X_{\mathbf {r}}^{(q)}\) be two channels of an LS2Wmv process with amplitude functions \(W_{\eta }^{(p)}(\mathbf {z})\) and \(W_{\eta }^{(q)}(\mathbf {z})\) respectively. Then the local wavelet cross-spectrum (LWCS) of the two channels \(X_{\mathbf {r}}^{(p)}\) and \(X_{\mathbf {r}}^{(q)}\) is given by

$$\begin{aligned} S^{p,q}_{\eta }(\mathbf {z}) = W_{\eta }^{(p)}(\mathbf {z})\, W_{\eta }^{(q)}(\mathbf {z})\, \rho ^{p,q}_{\eta }(\mathbf {z}) \end{aligned} \tag{4}$$

for \(\mathbf {z} \in (0,1)^{2}\) and scale-direction \(\eta \).

For the case where \(p=q\), Eq. (4) gives the auto-spectra (the local wavelet spectrum (LWS) for each channel) as given in Eckley et al. (2010). The LWS provides a measure of the local contribution to the variance of each channel. Conversely, in the case where \(p\ne q\) the local wavelet cross-spectra quantify the cross-covariance between channels at a specific (rescaled) location \(\mathbf {z}\) and scale-direction \(\eta \). To quantify the dependence between channels we first introduce the local cross-covariance (LCCV) and consider its relationship to the local cross-spectrum.

Definition 3

Let \(c^{(p,q)}(\mathbf {z},\varvec{\tau })\) denote the local cross-covariance between channels p and q from an LS2Wmv process at lag \(\varvec{\tau } \in \mathbb {Z}^{2}\). We define this function in terms of the local cross-spectrum by

$$\begin{aligned} c^{(p,q)}(\mathbf {z},\varvec{\tau }) = \sum _{\eta } S^{p,q}_{\eta }(\mathbf {z})\, \varPsi _{\eta }(\varvec{\tau }) \end{aligned} \tag{5}$$

for \(\varvec{\tau } \in \mathbb {Z}^{2}\), where \(\varPsi _{\eta }(\varvec{\tau })= \sum _{\mathbf {v} \in \mathbb {Z}^2} \psi _{\eta ,\mathbf {v}}(\mathbf {0}) \psi _{\eta ,\mathbf {v}}(\mathbf {\varvec{\tau }})\) are the two-dimensional autocorrelation wavelets as defined in Eckley and Nason (2005).
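As an illustration of Definition 3, the Python sketch below computes two-dimensional autocorrelation wavelets \(\varPsi _{\eta }(\varvec{\tau })\) for finest-scale Haar wavelets, built as tensor products of the one-dimensional Haar filters, and evaluates the local cross-covariance at a single location. Two assumptions should be flagged: the assignment of tensor products to the directions v, h and d is a convention (references differ), and the cross-spectrum is taken to be non-zero only at the finest scale, so the sum over \(\eta \) has just three terms.

```python
import math

S2 = 1.0 / math.sqrt(2.0)
PHI = [S2, S2]   # finest-scale Haar scaling filter
PSI = [S2, -S2]  # finest-scale Haar wavelet filter


def outer(a, b):
    """Tensor (outer) product of two 1D filters, giving a 2D filter."""
    return [[x * y for y in b] for x in a]


# Assumed direction convention; references differ on which product is 'v' or 'h'.
HAAR_2D = {"v": outer(PHI, PSI), "h": outer(PSI, PHI), "d": outer(PSI, PSI)}


def autocorrelation_wavelet(f, tau):
    """Psi(tau) = sum_v f(v) f(v + tau) for a finitely supported 2D filter f."""
    a, b = tau
    rows, cols = len(f), len(f[0])
    return sum(f[i][k] * f[i + a][k + b]
               for i in range(rows) for k in range(cols)
               if 0 <= i + a < rows and 0 <= k + b < cols)


def local_cross_covariance(spectrum, tau):
    """c(z, tau) = sum_eta S_eta(z) Psi_eta(tau) at one location z, where
    spectrum maps the finest-scale directions 'v', 'h', 'd' to S^{p,q}_eta(z)."""
    return sum(S * autocorrelation_wavelet(HAAR_2D[l], tau)
               for l, S in spectrum.items())
```

Since each autocorrelation wavelet satisfies \(\varPsi _{\eta }(\mathbf {0}) = 1\), the cross-covariance at lag zero is simply the sum of the cross-spectra over \(\eta \); with a spectrum of 2 in each direction it equals 6.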

It can be shown that, given a choice of wavelet, this cross-spectral representation is unique. Moreover, it can be established that the LS2Wmv process cross-covariance, given by \(c_{\mathbf {R}}^{(p,q)}(\mathbf {z},\varvec{\tau }) = \text{ Cov }\left( X_{[\mathbf {zR}]}^{(p)},X_{[\mathbf {zR}+\varvec{\tau }]}^{(q)}\right) \), asymptotically tends to the local cross-covariance of each pair of channels.

Theorem 1

The LWCS for each p and q is uniquely defined given the corresponding LS2Wmv process. Moreover, let \(c^{(p,q)}(\mathbf {z},\varvec{\tau })\) denote the local cross-covariance for two channels p and q of an LS2Wmv process from Definition 3. Then

$$\begin{aligned} c_{\mathbf {R}}^{(p,q)}(\mathbf {z},\varvec{\tau }) \rightarrow c^{(p,q)}(\mathbf {z},\varvec{\tau }) \end{aligned}$$

for \(\varvec{\tau } \in \mathbb {Z}^{2}\) and \(\mathbf {z} \in (0,1)^2\) as \(R, S \rightarrow \infty \).

The proof of this result can be found in the appendix.

The LS2Wmv process quantity \(\rho ^{p,q}_{\eta }(\mathbf {z})\) in Definition 2, which we term the LS2Wmv coherence between two channels p and q, is a direct measure of the linear dependence between the innovation sequences of the two channels at scale-direction \(\eta \). This measure can be defined as

$$\begin{aligned} \rho ^{p,q}_{\eta }(\mathbf {z}) = \frac{S^{p,q}_{\eta }(\mathbf {z})}{\sqrt{S_{\eta }^{(p)}(\mathbf {z})\, S_{\eta }^{(q)}(\mathbf {z})}}, \end{aligned} \tag{6}$$

where the individual LWS of each channel, \(S_{\eta }^{(p)}(\mathbf {z})\), together with the LWCS \(S^{p,q}_{\eta }(\mathbf {z})\), provide a normalised measure of the relationship between two channels. The coherence takes values in \([-1,1]\): values of \(+1\) and \(-1\) indicate perfect positive and negative linear dependence respectively, whilst a value of zero indicates no linear dependence at scale-direction \(\eta \) and rescaled location \(\mathbf {z}\).
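A minimal Python sketch of the relationship between the cross-spectrum and the coherence at a single \((\eta , \mathbf {z})\) follows. It assumes the product form \(S^{p,q}_{\eta }(\mathbf {z}) = W^{(p)}_{\eta }(\mathbf {z}) W^{(q)}_{\eta }(\mathbf {z}) \rho ^{p,q}_{\eta }(\mathbf {z})\), so that \(S^{(p)}_{\eta } = (W^{(p)}_{\eta })^2\), together with strictly positive transfer functions; if one transfer function were negative, the normalised quantity would return \(-\rho ^{p,q}_{\eta }\) instead. The function names are hypothetical.

```python
import math


def lwcs(W_p, W_q, rho_pq):
    """Local wavelet cross-spectrum at one (eta, z) under the product form
    S^{p,q} = W^(p) W^(q) rho^{p,q}; with p = q and rho = 1 this is the LWS."""
    return W_p * W_q * rho_pq


def coherence(S_pq, S_pp, S_qq):
    """Coherence rho^{p,q} = S^{p,q} / sqrt(S^(p) S^(q)) at one (eta, z)."""
    if S_pp <= 0 or S_qq <= 0:
        raise ValueError("auto-spectra must be strictly positive")
    return S_pq / math.sqrt(S_pp * S_qq)
```

Normalising by the auto-spectra removes the transfer functions entirely, which is why the coherence isolates the dependence between the increments.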

In the next section we develop a rigorous estimation theory for the LS2Wmv process quantities introduced in this section, establishing desirable asymptotic properties of the estimators. These properties are analogous to those for univariate LS2W processes in Eckley et al. (2010) but consider the crucial cross-channel dependence in the LS2Wmv model.

3 Estimation theory for LS2Wmv processes

Recall from standard Fourier theory that the classical (raw) estimate of the spectrum, the periodogram, is the squared modulus of the Fourier coefficients of the process. In a similar fashion, we base our estimate of the cross-spectra on products of empirical wavelet coefficients \(d_{\eta ,\mathbf {u}}^{(i)}\) from individual channels, where \(d_{\eta ,\mathbf {u}}^{(i)} = \sum _{\mathbf {r}} X_{\mathbf {r}}^{(i)} \psi _{\eta , \mathbf {u-r}}\). Using these empirical coefficients, we estimate the cross-spectrum of each pair of channels \(X_{\mathbf {r}}^{(p)}\) and \(X_{\mathbf {r}}^{(q)}\) of an LS2Wmv process by the local (raw) wavelet cross-periodogram \(I_{\eta , \mathbf {u}}^{(p,q)} = d_{\eta ,\mathbf {u}}^{(p)}d_{\eta ,\mathbf {u}}^{(q)}.\)
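The raw cross-periodogram can be sketched in Python for the finest scale only. This is an illustrative stand-in rather than the LS2W implementation: it uses finest-scale Haar filters, an assumed assignment of directions, and periodic boundary handling (also an assumption), computing \(d^{(i)}_{\eta ,\mathbf {u}} = \sum _{\mathbf {r}} X^{(i)}_{\mathbf {r}} \psi _{\eta ,\mathbf {u}-\mathbf {r}}\) and then the product \(I^{(p,q)}_{\eta ,\mathbf {u}} = d^{(p)}_{\eta ,\mathbf {u}} d^{(q)}_{\eta ,\mathbf {u}}\).

```python
import math

S2 = 1.0 / math.sqrt(2.0)
PHI, PSI = [S2, S2], [S2, -S2]  # finest-scale Haar scaling and wavelet filters


def outer(a, b):
    """Tensor (outer) product of two 1D filters, giving a 2D filter."""
    return [[x * y for y in b] for x in a]


# Assumed direction convention (references differ on which product is 'v' or 'h').
FILTERS = {"v": outer(PHI, PSI), "h": outer(PSI, PHI), "d": outer(PSI, PSI)}


def finest_ndwt(X, f):
    """Non-decimated coefficients d_u = sum_t f[t] X[u - t] for a 2x2 filter f,
    with periodic boundary handling (an assumption)."""
    R, S = len(X), len(X[0])
    return [[sum(f[a][b] * X[(r - a) % R][(s - b) % S] for a in range(2) for b in range(2))
             for s in range(S)] for r in range(R)]


def raw_cross_periodogram(Xp, Xq, direction):
    """I^{(p,q)}_{eta,u} = d^{(p)}_{eta,u} * d^{(q)}_{eta,u} at the finest scale."""
    f = FILTERS[direction]
    dp, dq = finest_ndwt(Xp, f), finest_ndwt(Xq, f)
    return [[a * b for a, b in zip(row_p, row_q)] for row_p, row_q in zip(dp, dq)]
```

Unlike the auto-periodogram (the case \(p=q\)), which is a square and hence non-negative, the cross-periodogram can take negative values, reflecting negative local dependence between channels.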

We now establish the statistical properties of the (raw) wavelet cross-periodogram as an estimator of the LWCS. In order to estimate the relationship between channels we use a pairwise approach. The proofs of the results of this section can be found in the appendix.

Theorem 2

Let \(X_{\mathbf {r}}^{(p)}\) and \(X_{\mathbf {r}}^{(q)}\) be two channels of an LS2Wmv process. Then asymptotically, the expectation of the (raw) cross-periodogram between these two channels, \(I_{\eta , \mathbf {s}}^{(p,q)}\), is given by

where \(\mathbf {R} = (R, S)\), with \(R=2^k, S=2^n\) for some \(k, n \in {\mathbb N}\). Similarly, the variance is given by

Here \(\eta \) is fixed and \(j(\eta )\) denotes the scale associated with the scale-direction \(\eta \).

The theorem demonstrates that the local raw cross-periodogram is a biased estimator of the cross-spectrum. However, rather helpfully, (7) indicates that an asymptotically unbiased estimator can be obtained by transforming the raw periodogram by \(A^{-1}_{J}\), where \(A_J\) denotes the (curtailed) two-dimensional discrete autocorrelation wavelet inner product matrix [see Eckley and Nason (2005) for more details, including results establishing the invertibility of \(A_{J}\)]. Theorem 2 also shows that the variance of the raw cross-periodogram does not vanish asymptotically, so smoothing is required to achieve consistency. We use the Nadaraya-Watson kernel estimator (Nadaraya 1964; Watson 1964) to smooth the cross-spectra. The estimator is given by the weighted average

$$\begin{aligned} \widetilde{I}^{(p,q)}_{\eta ,\mathbf {s}} = \sum _{\mathbf {u}} w_{\mathbf {u}}\, I^{(p,q)}_{\eta ,\mathbf {u}}, \end{aligned}$$

where the lattice weights are given by \(w_{\mathbf {u}}=\frac{K_{h}(||\mathbf {s-u}||)}{\sum _{\mathbf {u}}K_{h}(||\mathbf {s-u}||)}\). In this expression \(K_{h}(\cdot )\) is a (two-dimensional) kernel function with bandwidth h.
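A direct and deliberately unoptimised Python sketch of this smoother is given below. The Gaussian kernel \(K_h(d) = \exp \{-d^2/(2h^2)\}\) is our assumption, since the text does not fix a kernel, and the double loop over the lattice costs \(O((RS)^2)\) operations, which is only reasonable for small illustrative fields.

```python
import math


def nw_smooth(I, h):
    """Nadaraya-Watson smoothing of a lattice field I (list of rows):
    tilde_I[s] = sum_u w_u I[u], with w_u = K_h(||s - u||) / sum_u K_h(||s - u||).
    The Gaussian kernel K_h(d) = exp(-d^2 / (2 h^2)) is an assumed choice."""
    R, S = len(I), len(I[0])
    out = [[0.0] * S for _ in range(R)]
    for r in range(R):
        for s in range(S):
            num = den = 0.0
            for a in range(R):
                for b in range(S):
                    k = math.exp(-((r - a) ** 2 + (s - b) ** 2) / (2.0 * h * h))
                    num += k * I[a][b]
                    den += k
            out[r][s] = num / den
    return out
```

Because the weights are non-negative and sum to one, each smoothed value is a convex combination of raw values; in practice one would truncate the kernel to a local window, but the full sum is kept here so that the code mirrors the weights \(w_{\mathbf {u}}\) exactly.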

The asymptotic properties of the resulting smoothed cross-periodogram, \(\widetilde{I}\), are established in the following theorem.

Theorem 3

The (asymptotic) expectation of \(\widetilde{I}^{(p,q)}_{\eta , \mathbf {s}}\) is given by,

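Furthermore, the variance of the smoothed cross-periodogram vanishes:

$$\begin{aligned} \text{ Var }\left( \widetilde{I}^{(p,q)}_{\eta , \mathbf {s}}\right) \rightarrow 0 \end{aligned}$$

as \(h,R, S \rightarrow \infty \) with \((h/\min \{R,S\}) \rightarrow 0\).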

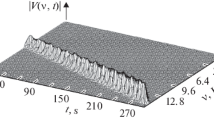

Plots of the coherence at the finest scale: a corresponds to the coherence between the first and second channel \(\rho ^{1,2}_{(1,v)}(u_1,u_2)=\rho ^{1,2}_{(1,v)}(\mathbf {z})\), whereas b the coherence between the second and third channel, \(\rho ^{2,3}_{(1,v)}(\mathbf {z})\); c shows the average estimation error at the finest scale between the first and second channel; d corresponds to the the average estimation error at the finest scale between the second and third channel

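Defining the smoothed corrected cross-periodogram \(\widehat{I}\) by bias-correcting the spectrum estimate with \(A^{-1}_J\), that is,

$$\begin{aligned} \widehat{\mathbf {I}}^{(p,q)}_{\mathbf {s}} = A^{-1}_{J}\, \widetilde{\mathbf {I}}^{(p,q)}_{\mathbf {s}}, \end{aligned}$$

where \(\widetilde{\mathbf {I}}^{(p,q)}_{\mathbf {s}}\) is the vector of smoothed cross-periodograms \(\widetilde{I}^{(p,q)}_{\eta ,\mathbf {s}}\) over all scale-directions \(\eta \), we obtain the result

$$\begin{aligned} \mathbb {E}\left[ \widehat{I}^{(p,q)}_{\eta ,\mathbf {s}}\right] - S^{p,q}_{\eta }(\mathbf {s}/\mathbf {R}) \rightarrow 0 \quad \text {under the conditions of Theorem 3.} \end{aligned}$$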

In other words, the estimator is asymptotically unbiased. This expression holds for any pair of channels p and q of the multivariate image. Taken together, Theorems 2 and 3 establish the consistency of the estimator of the LWCS for each pair of channels. Note that this estimate, along with the estimate of the LWS, can be used in Eq. (6) to estimate the coherence.
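The bias correction and the plug-in coherence estimate at a single location can be sketched as follows. The 3×3 matrix `A` below is an assumption made for illustration: it is our own hand calculation of the inner products \(\sum _{\varvec{\tau }} \varPsi _{\eta }(\varvec{\tau }) \varPsi _{\nu }(\varvec{\tau })\) for a single (finest) Haar scale under one choice of direction convention, not a value taken from the LS2W package. Gaussian elimination is used in place of forming \(A^{-1}_J\) explicitly.

```python
import math

# Illustrative A for a single (finest) Haar scale, directions ordered (v, h, d).
# Hand-computed entries sum_tau Psi_eta(tau) Psi_nu(tau); treat as an assumption.
A = [[2.25, 0.25, 0.75],
     [0.25, 2.25, 0.75],
     [0.75, 0.75, 2.25]]


def matvec(M, x):
    """Matrix-vector product M x."""
    return [sum(m * v for m, v in zip(row, x)) for row in M]


def solve(M, b):
    """Solve M x = b by Gaussian elimination with partial pivoting."""
    n = len(b)
    aug = [list(row) + [b[i]] for i, row in enumerate(M)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(aug[r][c]))
        aug[c], aug[piv] = aug[piv], aug[c]
        for r in range(c + 1, n):
            factor = aug[r][c] / aug[c][c]
            for k in range(c, n + 1):
                aug[r][k] -= factor * aug[c][k]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        x[r] = (aug[r][n] - sum(aug[r][k] * x[k] for k in range(r + 1, n))) / aug[r][r]
    return x


def estimated_coherence(I_pq, I_pp, I_qq, A=A):
    """Bias-correct smoothed periodograms (vectors over eta) with A^{-1}, then
    form S^{p,q} / sqrt(S^(p) S^(q)) for each eta; nan where undefined."""
    S_pq, S_pp, S_qq = solve(A, I_pq), solve(A, I_pp), solve(A, I_qq)
    return [spq / math.sqrt(spp * sqq) if spp > 0 and sqq > 0 else float("nan")
            for spq, spp, sqq in zip(S_pq, S_pp, S_qq)]
```

If the smoothed periodograms equal their asymptotic expectations, i.e. `matvec(A, S)` for true spectra `S`, the sketch recovers the true coherence exactly. In finite samples the corrected auto-spectra are not guaranteed to be positive, so the sketch returns `nan` at such locations; how these should be handled is a practical choice it does not address.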

3.1 Example

In order to demonstrate our multivariate model and coherence measure, we now present an example of data simulated from a (square) trivariate LS2Wmv process with given spectral and coherence structure. The spectrum is constant at all scales and directions, i.e. \(S^{1,1}_{\eta }(\mathbf {u/R}) = S^{2,2}_{\eta }(\mathbf {u/R}) = S^{3,3}_{\eta }(\mathbf {u/R}) =2\). Further, the coherence between the first and second channels is \(\rho ^{1,2}_{\eta }(\mathbf {u/R})=0.2\), and the coherence between the second and third channels increases linearly along the horizontal axis from 0 to 0.8, as shown in Fig. 1. The LS2Wmv process realisations in this and subsequent sections were generated using Gaussian increments.
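Not every specification of coherences is admissible: at each location and scale-direction the matrix of coherences must be a valid (positive-definite) correlation matrix. The Python sketch below builds the two coherence surfaces of this example and checks this condition using Sylvester's criterion. Two assumptions are ours: the horizontal axis is taken to be the second (column) index, and the coherence between the first and third channels, which is not specified above, is set to zero.

```python
def coherence_surfaces(n, rho12=0.2, rho23_max=0.8):
    """n x n grids (row index u1, column index u2) of rho^{1,2} and rho^{2,3}.
    Assumption: the 'horizontal axis' is the column index, along which rho^{2,3}
    rises linearly from 0 to rho23_max; the same values apply at every eta."""
    rho_12 = [[rho12] * n for _ in range(n)]
    rho_23 = [[rho23_max * c / (n - 1) for c in range(n)] for _ in range(n)]
    return rho_12, rho_23


def is_valid_correlation(r12, r13, r23):
    """Sylvester's criterion: all leading principal minors of
    [[1, r12, r13], [r12, 1, r23], [r13, r23, 1]] must be positive."""
    minor2 = 1.0 - r12 ** 2
    det = 1.0 + 2.0 * r12 * r13 * r23 - r12 ** 2 - r13 ** 2 - r23 ** 2
    return minor2 > 0 and det > 0
```

With \(\rho ^{1,3} = 0\) the determinant is \(1 - (\rho ^{1,2})^2 - (\rho ^{2,3})^2\), which stays positive (at least 0.32) over the whole surface, so the specification is admissible everywhere.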

We estimate this coherence using the methods described in Sect. 3. Results in this section, as well as subsequent examples in this article, were produced in the R statistical computing environment (R Core Team 2013), using modifications to the code from the LS2W package (Eckley and Nason 2011a, b). Figure 1 shows the difference between the true and estimated coherence at the finest scale in the vertical direction, based on averaging over \(K=100\) simulations. We observe similar results for the other directions. The estimate of the coherence between the first two channels is very good, as indicated by the low estimation error. We also obtain a reasonable estimate for the more difficult coherence specification between the second and third channels, with the estimate capturing the general structure. Other simulated examples show a similar degree of estimation accuracy.

We now consider the application of our modelling approach to colour texture.

4 Application of the LS2W model to colour image classification

In this section we apply our modelling framework to synthetic colour textures as well as to images arising from an industrial application of product evaluation. We start by introducing a feature vector based on the LS2Wmv model (Sect. 2) which forms the basis of our colour image classification procedure. The key to our approach is estimating the coherence between the colour channels, as we believe the additional information provided by the coherence will allow more subtle differentiation between visually similar images. The feature vector we suggest comprises the location-averaged auto- and cross-spectral structure and the location-averaged local wavelet coherence at each scale-direction pair, since these measures encapsulate changes in the second-order (textural) structure of a colour image. Algorithm 1 describes the method which we use to obtain a feature vector for an LS2Wmv process with three channels.
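Since Algorithm 1 is not reproduced here, the following Python sketch should be read as our own approximation of the feature vector described above, not as the authors' procedure. Given (bias-corrected) local spectrum estimates for every channel pair and scale-direction, it averages the auto- and cross-spectra over locations and appends the location average of the local coherence. The input layout, the feature ordering and the requirement of strictly positive auto-spectra (otherwise `math.sqrt` raises an error) are all assumptions.

```python
import math
from itertools import combinations, combinations_with_replacement


def mean2d(F):
    """Average of a 2D field over all locations."""
    return sum(map(sum, F)) / (len(F) * len(F[0]))


def feature_vector(spectra, m=3):
    """spectra[(p, q)] with p <= q (channels 0..m-1) is a list over eta of 2D
    fields of estimated local (cross-)spectra. Returns the location-averaged
    auto-/cross-spectra, followed by the location-averaged local coherence."""
    n_eta = len(spectra[(0, 0)])
    features = []
    for p, q in combinations_with_replacement(range(m), 2):
        features += [mean2d(spectra[(p, q)][e]) for e in range(n_eta)]
    for p, q in combinations(range(m), 2):
        for e in range(n_eta):
            Spq, Spp, Sqq = spectra[(p, q)][e], spectra[(p, p)][e], spectra[(q, q)][e]
            coh = [[Spq[r][s] / math.sqrt(Spp[r][s] * Sqq[r][s])
                    for s in range(len(Spq[0]))] for r in range(len(Spq))]
            features.append(mean2d(coh))
    return features
```

For three channels this gives six spectral blocks and three coherence blocks, i.e. nine features per scale-direction.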

In order to show the full potential of our method, we compare it against three approaches. In particular, we compare our technique against the wavelet-based method of Van de Wouwer et al. (1999), as it is a popular approach using a fast algorithm which specifically focusses on cross-correlation measures. The alternative wavelet approach of Sengur (2008) is included in our study since its author reports better classification results than Van de Wouwer et al. (1999) for the examples considered there. Due to the ubiquity of Fourier methods in the literature, we also compare against an alternative method based on the Fourier spectrum and Fourier coherence (Van Heel et al. 1982; Saxton and Baumeister 1982). From now on we refer to the approach of Sengur (2008) as ‘Sengur’, that of Van de Wouwer et al. (1999) as ‘VdW’ and the Fourier approach as ‘Fourier’.

In what follows, we consider colour images with three channels corresponding to an RGB colour representation, but the method can equally be applied to other colour space specifications. For a recent review of feature extraction and colour texture classification, see for example Prats-Montalbán et al. (2011).

Fig. 2 Examples of visually similar simulated colour textures, generated with the LS2Wmv model, for classification using the procedure in Sect. 4

4.1 The classification procedure

To demonstrate the power of the LS2Wmv approach against the alternative methods described above, we classify a test set of images representing a number of colour textures. To perform the classification experiment we use a nearest centroid classifier based on linear discriminant analysis (LDA), one of many approaches commonly used in practice to classify such data; see for example Hastie et al. (2001) or Parker (2010) for more details on this classification method. The classification experiment is as follows. We sample fifty sub-images of dimension \(m \times m\) from the upper half of each image in the set; these sub-images comprise our training set. Another fifty sampled sub-images are used as test images, which we classify in order to assess the performance of each method. We perform LDA on the training set using the feature vector highlighted in Algorithm 1. For each test sub-image, the LDA-transformed feature vector is calculated, and the image is assigned to the texture class whose centroid is closest in Euclidean distance. This is then repeated for the competitor Fourier and wavelet classification feature vectors. We assess the classification performance of each method using the average classification rate over the fifty test sub-images.
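The classification step can be sketched in plain Python as below. To keep the example short, the fitted LDA transformation is represented by a hypothetical `project` argument (the identity by default), so without a real LDA projection the sketch reduces to nearest-centroid classification in the raw feature space. Squared Euclidean distance is used, which selects the same centroid as Euclidean distance.

```python
def class_centroids(features, labels):
    """Mean feature vector of each class in the training set."""
    sums, counts = {}, {}
    for x, y in zip(features, labels):
        acc = sums.setdefault(y, [0.0] * len(x))
        for i, v in enumerate(x):
            acc[i] += v
        counts[y] = counts.get(y, 0) + 1
    return {y: [v / counts[y] for v in acc] for y, acc in sums.items()}


def nearest_centroid(x, centroids):
    """Class whose centroid is closest to x (squared Euclidean distance)."""
    return min(centroids, key=lambda y: sum((a - b) ** 2 for a, b in zip(x, centroids[y])))


def classification_rate(train_X, train_y, test_X, test_y, project=lambda v: v):
    """Proportion of test vectors assigned to their true class.
    `project` is a hypothetical placeholder for the fitted LDA transformation."""
    cents = class_centroids([project(x) for x in train_X], train_y)
    hits = sum(nearest_centroid(project(x), cents) == y for x, y in zip(test_X, test_y))
    return hits / len(test_y)
```

Passing a fitted LDA projection as `project` would apply the same transformation to training and test vectors before the centroids are compared, as in the procedure described above.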

4.2 Synthetic Examples

To illustrate the potential of the LS2Wmv feature vector described in Algorithm 1 in texture classification tasks, we simulate a number of colour textures of dimension \(256 \times 256\) with different colour texture structure, and then use the classification procedure described above (Sect. 4.1) to classify 50 sampled sub-images of each texture, using another 50 sub-images as a training set.

For the first example, we use the LS2Wmv model (1) to simulate a number of colour textures for classification. The three LS2Wmv processes in the classification experiment are defined as follows. The first process has a spectrum which is constant at all scales and directions (\(S^{1,1}_{\eta }(\mathbf {u/R}) = S^{2,2}_{\eta }(\mathbf {u/R}) = S^{3,3}_{\eta }(\mathbf {u/R}) =2\)), with the coherence between the first and second channels being \(\rho ^{1,2}_{\eta }(\mathbf {u/R})=0.2\); the coherence between the second and third channels is the same at all scales and directions and is set to 0.4. The second process is similar in structure to the first, except that we specify a non-stationary coherence between channels 2 and 3: it is set to 0.4 for half the pixels and \(-0.4\) for the other half, i.e. \(\rho ^{2,3}_{\eta }((u_1,u_2)/R)=0.4\) for \(u_1\in \{1,\dots ,128\}\) and \(\rho ^{2,3}_{\eta }((u_1,u_2)/R)=-0.4\) for \(u_1\in \{129,\dots ,256\}\). The third process has the same coherence structure as the second process, but has a non-stationary spectral structure at all scales and directions. In particular, the spectrum increases linearly from 0 to 0.8, in the manner depicted in Fig. 1. Example realisations of the three processes can be seen in Fig. 2.

Fig. 3 Examples of visually similar simulated colour textures, generated with the linear model of coregionalization, for classification using the procedure in Sect. 4

The experiment attempts to classify the sub-images from the three processes. The resulting proportion of correctly classified images is shown in Table 1. Although the processes are difficult to distinguish visually, the LS2Wmv feature vector permits a substantially better classification of them, owing to its ability to model potential non-stationarity in both the coherence and the spectral structure.

To examine the classification potential of our modelling framework further, we repeated the classification experiment using a second example of colour textures. Specifically, we simulate colour textures from a linear model of coregionalization. In this class of models, each channel of a colour texture is defined as a linear combination of independent univariate random fields [see e.g. Wackernagel (2003) or Gelfand et al. (2004)]. In other words,

$$\begin{aligned} X^{(i)}_{\mathbf {u}} = \sum _{j} a_{ij}\, Y_{j,\mathbf {u}}, \end{aligned}$$

for independent random fields \(Y_{j,\mathbf {u}}\) defined at spatial locations \(\mathbf {u}\), where the \(a_{ij}\) are fixed weights. For the processes in this example, we specify that the \(Y_{j}\) have stationary, anisotropic covariance functions \(C_{\kappa , \mu , \phi }(\cdot )\) from the Gneiting (1999) class of models, as described in Schlather et al. (2015). In these functions, the parameters \(\kappa \) and \(\mu \) characterise the form and smoothness of the covariance, whilst \(\phi \) is an angle representing the degree of the anisotropy. In particular, for the experiment we define three different processes

Moreover, we specify that \(Y^{A}_{1,\mathbf {u}}\), \(Y^{B}_{1,\mathbf {u}}\) and \(Y^{C}_{1,\mathbf {u}}\) all have Gneiting covariance functions \(C_{0, 2, 0}(\cdot )\); \(Y^{A}_{2,\mathbf {u}}\), \(Y^{B}_{2,\mathbf {u}}\) and \(Y^{C}_{2,\mathbf {u}}\) have covariances \(C_{3, 2, \frac{\pi }{4}}(\cdot )\), \(C_{2, 2, \frac{\pi }{4}}(\cdot )\) and \(C_{2, 1, \frac{\pi }{4}}(\cdot )\) respectively. More explicit forms for these functions for particular values of \(\kappa \) can be found in Schlather et al. (2015). See also Wendland (2004) for more details on these processes. The textures were simulated using the RandomFields R package (Schlather 2014). The textures used in the experiment can be seen in Fig. 3. Visually, these textures can be seen to exhibit vertical and diagonal structure, but it is difficult to distinguish between them.
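For a linear model of coregionalization, the cross-covariance between channels i and k at lag \(\varvec{\tau }\) is \(\sum _j a_{ij} a_{kj} C_j(\varvec{\tau })\), where \(a_{ij}\) denotes the weight of field \(Y_j\) in channel i (our notation) and \(C_j\) is the covariance function of \(Y_j\); this follows directly from the independence of the zero-mean, stationary fields \(Y_j\). The Python sketch below evaluates this matrix. An isotropic exponential covariance is used purely as a stand-in for the Gneiting class, which we do not reproduce, and the weights in any usage are hypothetical.

```python
import math


def lmc_cross_covariance(a, covs, tau):
    """Matrix with (i, k) entry Cov(X^(i)_u, X^(k)_{u+tau}) = sum_j a[i][j] a[k][j] C_j(tau)
    for X^(i) = sum_j a[i][j] Y_j, with independent, zero-mean, stationary fields Y_j
    whose covariance functions are covs[j]."""
    c = [cov(tau) for cov in covs]
    m = len(a)
    return [[sum(a[i][j] * a[k][j] * c[j] for j in range(len(c))) for k in range(m)]
            for i in range(m)]


def exp_cov(scale):
    """Isotropic exponential covariance with unit variance: a stand-in, not Gneiting."""
    return lambda tau: math.exp(-math.hypot(*tau) / scale)
```

Channels that share a latent field (non-zero weights on the same \(Y_j\)) are cross-correlated, whilst channels built from disjoint fields are uncorrelated at every lag, which is how this model induces cross-channel dependence.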

As in the first example, the experiment attempts to classify the 50 sub-images from each of the three processes. The proportion of correctly classified sub-images is shown in Table 1. The Fourier method performs poorly in this experiment. The Sengur and VdW methods offer an improvement, whilst the LS2Wmv feature vector achieves good classification via its ability to model the differing structure in the processes, despite the similarities between the images.

4.3 Application of the LS2W model to the classification of hair images

We now consider an application of our modelling framework to a colour texture analysis problem encountered by an industrial collaborator. In many industrial settings, it is of considerable interest to be able to discriminate between different hair images based on their textural properties, for example to indicate the age of a product or its variability under different conditions. Until recently such images were analysed by experienced image analysts. However, such a task can be challenging even for the human eye and, as noted by Liang et al. (2012), manual image inspection is subjective, depending on human vision. A more principled approach for classifying such images is therefore required, and it is of interest to see which of the four methods performs well in the two experiments below. A typical swatch of hair analysed in the experiments is depicted in Fig. 4.

4.3.1 Hair analysis: different colours

In the first industrial colour texture experiment, each image represents a sample of hair which has undergone one of three different treatments, each of which results in a subtly different colouration of the hair sample. In other words, the swatches we analyse show a change in colour between the images but the same texture. More specifically, we wish to classify hair swatches representing three subtly different hair colours, denoted A, B and C, as shown in Fig. 5 (see Note 1). We follow the classification approach outlined in Sect. 4.1 for each of the four methods, sampling fifty sub-images from the upper half of each image as training data and fifty sub-images from the lower half of each image as the test set. We choose our sub-samples to have dimension \(64 \times 64\).

Table 1 shows that all methods achieve high classification rates; of these, LS2Wmv achieves the highest rate of correct classification. In this case the physical texture is the same across all images, but the colour changes. Hence, as we would anticipate, the two methods which take coherence into consideration, namely the Fourier- and LS2Wmv-based feature classification methods, produce the best results. The VdW method is not competitive, failing to capture all of the structure within the colour textures.

4.3.2 Hair analysis: different preparation processes

Our next example analyses images from an experiment in which, after colourant B has been applied, the hair swatches independently undergo one of three different processes (Fig. 6; see Note 2). In other words, our aim is to distinguish between the three different physical texture processes, where the colour remains the same for each swatch.

Again we follow the classification approach outlined in Sect. 4.1. Table 1 shows the classification results for this example, where again the LS2Wmv method achieves the highest classification rate. As may be expected, VdW is reasonably competitive in this case, since its feature vector contains wavelet correlation signatures which take into account the textural change across processes. As all the images were treated with colourant B, they exhibit less variation across the colour planes and differ instead in their textural properties; consequently the Fourier approach does not fare well.

5 Conclusion

In this article we have introduced the multivariate 2D locally stationary wavelet process model and proposed a measure of the dependence between two locally stationary channels, namely the locally stationary wavelet coherence. We then detailed a full estimation procedure and established its asymptotic properties, in particular the asymptotic unbiasedness and consistency of the estimators of the local wavelet cross-spectra. We demonstrated the high accuracy of our approach through simulated examples.

We also applied the LS2Wmv modelling approach to a colour texture analysis task motivated by images encountered in an industrial setting. Both the simulated and industrial image examples show that the LS2Wmv approach has improved performance over other state-of-the-art approaches, owing to its ability to cope well with changes in both colour and texture features. In these examples the coherence contributes to the higher classification rate, which underlines the importance of coherence in a colour texture setting.

Notes

1. Note that these images have been artificially lightened for display purposes.

2. Note that these images have been artificially lightened for display purposes.

References

Broughton, S.A., Bryan, K.M.: Discrete Fourier Analysis and Wavelets: Applications to Signal and Image Processing. Wiley, Hoboken (2011)

Daugman, J.G.: An information-theoretic view of analog representation in striate cortex. In: Schwartz, E. (ed.) Computational Neuroscience, pp. 403–424. MIT Press, Cambridge (1990)

Eckley, I.A., Nason, G.P.: Efficient computation of the discrete autocorrelation wavelet inner product matrix. Stat. Comput. 15(2), 83–92 (2005)

Eckley, I.A., Nason, G.P.: LS2W: Locally stationary wavelet fields in R. J. Stat. Softw. 43(3), 1–23 (2011a)

Eckley, I.A., Nason, G.P.: LS2W: Software implementation of locally stationary wavelet fields. http://CRAN.R-project.org/package=LS2W (2011b)

Eckley, I.A., Nason, G.P.: Spectral correction for locally stationary Shannon wavelet processes. Electron. J. Stat. 8(1), 184–200 (2014)

Eckley, I.A., Nason, G.P., Treloar, R.L.: Technical appendix to locally stationary wavelet fields with application to the modelling and analysis of image texture. Technical Report 09:12, University of Bristol (2009)

Eckley, I.A., Nason, G.P., Treloar, R.L.: Locally stationary wavelet fields with application to the modelling and analysis of image texture. J. R. Stat. Soc. Ser. C (Appl. Stat.) 59(4), 595–616 (2010)

Field, D.J.: Wavelets, vision and the statistics of natural scenes. Philos. Trans. R. Soc. Lond. A 357(1760), 2527–2542 (1999)

Fryzlewicz, P., Ombao, H.: Consistent classification of non-stationary signals using stochastic wavelet representations. J. Am. Stat. Assoc. 104, 299–312 (2009)

Fryzlewicz, P., Van Bellegem, S., von Sachs, R.: Forecasting non-stationary time series by wavelet process modelling. Ann. Inst. Stat. Math. 55, 737–764 (2003)

Gelfand, A., Schmidt, A., Banerjee, S., Sirmans, C.: Nonstationary multivariate process modeling through spatially varying coregionalization. Test 13(2), 263–312 (2004)

Gneiting, T.: Correlation functions for atmospheric data analysis. Q. J. R. Meteorol. Soc. 125(559), 2449–2464 (1999)

Hastie, T., Tibshirani, R., Friedman, J.H.: The Elements of Statistical Learning, vol. 1. Springer, New York (2001)

Killick, R., Eckley, I., Jonathan, P.: A wavelet-based approach for detecting changes in second order structure within nonstationary time series. Electron. J. Stat. 7, 1167–1183 (2013)

Liang, Z., Xu, B., Chi, Z., Feng, D.: Intelligent characterization and evaluation of yarn surface appearance using saliency map analysis, wavelet transform and fuzzy ARTMAP neural network. Expert Syst. Appl. 39(4), 4201–4212 (2012)

Mondal, D., Percival, D.B.: Wavelet variance analysis for random fields on a regular lattice. IEEE Trans. Image Process. 21(2), 537–549 (2012)

Nadaraya, E.: On estimating regression. Theory Probab. Appl. 9(1), 141–142 (1964)

Nason, G.P.: Wavelet Methods in Statistics with R. Springer, Berlin (2008)

Nason, G.P., von Sachs, R., Kroisandt, G.: Wavelet processes and adaptive estimation of the evolutionary wavelet spectrum. J. R. Stat. Soc. Ser. B (Stat. Methodol.) 62(2), 271–292 (2000)

Park, T., Eckley, I., Ombao, H.: Estimating time-evolving partial coherence between signals via multivariate locally stationary wavelet processes. IEEE Trans. Signal Process. 62(20), 5240–5250 (2014). doi:10.1109/TSP.2014.2343937

Parker, J.R.: Algorithms for Image Processing and Computer Vision. Wiley, New York (2010)

Petrou, M., Sevilla, P.G.: Image Processing: Dealing with Texture. Wiley, New York (2006)

Prats-Montalbán, J., De Juan, A., Ferrer, A.: Multivariate image analysis: a review with applications. Chemom. Intell. Lab. Syst. 107(1), 1–23 (2011)

Priestley, M.B.: Spectral Analysis and Time Series. Academic Press, Cambridge (1981)

R Core Team: R: A Language and Environment for Statistical Computing. R Foundation for Statistical Computing, Vienna, Austria. http://www.R-project.org (2013)

Sanderson, J., Fryzlewicz, P., Jones, M.: Estimating linear dependence between nonstationary time series using the locally stationary wavelet model. Biometrika 97(2), 435–446 (2010)

Saxton, W.O., Baumeister, W.: The correlation averaging of a regularly arranged bacterial cell envelope protein. J. Microsc. 127(2), 127–138 (1982)

Schlather, M.: RandomFields: Simulation and analysis of random fields. R package version 3.0.44. http://CRAN.R-project.org/package=RandomFields (2014)

Schlather, M., Malinowski, A., Menck, P.J., Oesting, M., Strokorb, K.: Analysis, simulation and prediction of multivariate random fields with package RandomFields. J. Stat. Softw. 63(8), 1–25 (2015)

Sengur, A.: Wavelet transform and adaptive neuro-fuzzy inference system for color texture classification. Expert Syst. Appl. 34(3), 2120–2128 (2008)

Taylor, S.L.: Wavelet methods for the statistical analysis of image texture. PhD thesis, Lancaster University, UK. http://eprints.lancs.ac.uk/79607/1/SLTaylorThesis2013 (2013)

Van de Wouwer, G., Scheunders, P., Livens, S., Van Dyck, D.: Wavelet correlation signatures for color texture characterization. Pattern Recognit. 32(3), 443–451 (1999)

Van Heel, M., Keegstra, W., Schutter, W., van Bruggen, E.J.F.: Arthropod hemocyanin structures studied by image analysis. Life Chem. Rep. Suppl. 1, 69–73 (1982)

Vidakovic, B.: Statistical Modelling by Wavelets. Wiley, New York (1999)

Wackernagel, H.: Multivariate Geostatistics, 3rd edn. Springer, Berlin (2003)

Watson, G.: Smooth regression analysis. Sankhyā 26, 359–372 (1964)

Wendland, H.: Scattered Data Approximation, vol. 17. Cambridge University Press, Cambridge (2004)

Acknowledgments

The authors gratefully acknowledge the financial support of Unilever Research Ltd. Taylor also acknowledges funding from EPSRC. We thank Robert Treloar and Eric Mahers for many interesting discussions which have helped stimulate this research. Code to implement the methods in this article, together with the non-commercial data used in Sects. 3.1 and 4.1, can be found in the supplementary material.

A Proofs

In this appendix we provide proofs of the results in Sect. 3 of the article. The proofs are similar in spirit to those in Eckley et al. (2010), but extend those results to account specifically for the cross-channel dependence in the spectral quantities.

A.1 Proof of Theorem 1

We first establish uniqueness of the local wavelet cross-spectra of an LS2Wmv process. Uniqueness of the auto-spectra follows as in the univariate case considered by Eckley et al. (2010), and the structure of the proof below is very similar. Hence, to prove uniqueness of the multivariate spectral representation, it suffices to consider the cross-spectra.

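Suppose that there exist two cross-spectral representations of the same LS2Wmv process which also possess the same cross-covariance structure. In other words,

\[ c^{(p,q)}(\mathbf {z},\varvec{\tau }) = \sum _{\eta } S^{(1)(p,q)}_{\eta }(\mathbf {z})\, \varPsi _{\eta }(\varvec{\tau }) = \sum _{\eta } S^{(2)(p,q)}_{\eta }(\mathbf {z})\, \varPsi _{\eta }(\varvec{\tau }) \quad \forall \varvec{\tau }, \]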

where \(c^{(p,q)}(\mathbf {z},\varvec{\tau })\) is defined in Eq. (3) and \(S^{(i)(p,q)}_{\eta }(\mathbf {z}) = W_{\eta }^{(i)(p)}(\mathbf {z}) W_{\eta }^{(i)(q)}(\mathbf {z}) \rho _{\eta }^{(i)(p,q)}(\mathbf {z})\) for \(i=1,2\) and \(p,q \in \{1, \ldots , m\}\) with \(p \ne q\). Let \(\varDelta ^{(p,q)}_{\eta }(\mathbf {z}) \equiv S^{(1)(p,q)}_{\eta }(\mathbf {z})-S^{(2)(p,q)}_{\eta }(\mathbf {z})\) be the difference between the two representations. To establish uniqueness we must show that

\[ \sum _{\eta } \varDelta ^{(p,q)}_{\eta }(\mathbf {z})\, \varPsi _{\eta }(\varvec{\tau }) = 0 \quad \forall \varvec{\tau } \;\Longrightarrow \; \varDelta ^{(p,q)}_{\eta }(\mathbf {z}) = 0 \quad \forall \eta . \]

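What we actually show is the equivalent implication

\[ \sum _{\eta } 2^{2j(\eta )}\, \tilde{\varDelta }^{(p,q)}_{\eta }(\mathbf {z})\, \varPsi _{\eta }(\varvec{\tau }) = 0 \quad \forall \varvec{\tau } \;\Longrightarrow \; \tilde{\varDelta }^{(p,q)}_{\eta }(\mathbf {z}) = 0 \quad \forall \eta , \]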

where \(\tilde{\varDelta }^{(p,q)}_{\eta }(\mathbf {z}) = 2^{-2j(\eta )} \varDelta ^{(p,q)}_{\eta } (\mathbf {z})\).

Under this assumption, for a given pair of channels p and q, we have that

Additionally, using Parseval’s relation, the discrete autocorrelation wavelet inner product matrix can be expressed as

where \(\hat{\varPsi }_{\eta }(\varvec{\omega })\), the Fourier transform of \(\varPsi _{\eta }\), satisfies \(\hat{\varPsi }_{\eta }(\varvec{\omega }) = |\hat{\psi }_{\eta }(\varvec{\omega })|^{2}\) (Eckley et al. 2010).
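The Parseval representation of the inner product matrix can be checked numerically. The Python sketch below is illustrative only: it assumes 2D Haar wavelets built as tensor products of the 1D discrete Haar filters, ordered so that \(\eta = 1,\ldots,J\), \(J+1,\ldots,2J\) and \(2J+1,\ldots,3J\) index the three directions, and it takes \(A_{\eta,\nu} = \sum_{\varvec{\tau}} \varPsi_{\eta}(\varvec{\tau})\varPsi_{\nu}(\varvec{\tau})\). The wavelet construction, the ordering and all function names (`haar_2d_wavelets`, `autocorrelation_2d`, and so on) are hypothetical and are not the LS2W implementation. The matrix is computed once in the spatial domain and once from \(\hat{\varPsi}_{\eta} = |\hat{\psi}_{\eta}|^{2}\) via a DFT large enough that the discrete frequency average equals the integral exactly.

```python
import numpy as np


def haar_2d_wavelets(J):
    """Hypothetical 2D discrete Haar wavelets psi_eta, eta = 1..3J.

    eta = 1..J: detail in the first coordinate, J+1..2J: detail in the
    second coordinate, 2J+1..3J: diagonal (an assumed ordering).
    """
    out = []
    for kind in range(3):
        for j in range(1, J + 1):
            n = 2 ** j
            phi = np.full(n, 2.0 ** (-j / 2))  # unit-norm scaling filter
            psi = np.concatenate([np.ones(n // 2), -np.ones(n // 2)]) * 2.0 ** (-j / 2)
            a, b = [(psi, phi), (phi, psi), (psi, psi)][kind]
            out.append(np.outer(a, b))
    return out


def autocorrelation_2d(w):
    """Psi(tau) = sum_k w(k) w(k + tau), computed directly in the spatial domain.

    Returned on a (2n-1) x (2n-1) grid with tau = 0 at the centre.
    """
    n1, n2 = w.shape
    out = np.zeros((2 * n1 - 1, 2 * n2 - 1))
    for t1 in range(-(n1 - 1), n1):
        for t2 in range(-(n2 - 1), n2):
            a = w[max(0, -t1):n1 - max(0, t1), max(0, -t2):n2 - max(0, t2)]
            b = w[max(0, t1):n1 - max(0, -t1), max(0, t2):n2 - max(0, -t2)]
            out[t1 + n1 - 1, t2 + n2 - 1] = np.sum(a * b)
    return out


def inner_product_matrix(J):
    """A[eta, nu] = sum_tau Psi_eta(tau) Psi_nu(tau) (spatial domain)."""
    size = 2 ** J
    rows = []
    for w in haar_2d_wavelets(J):
        pad = size - w.shape[0]  # centre every Psi_eta on a common lag grid
        rows.append(np.pad(autocorrelation_2d(w), pad).ravel())
    P = np.array(rows)
    return P @ P.T


def inner_product_matrix_parseval(J):
    """Same matrix from Psi_hat_eta = |psi_hat_eta|^2 via the DFT (Parseval).

    With N = 2^(J+1) - 1 there is no wrap-around, so the average over the
    N x N Fourier frequencies equals (2 pi)^-2 times the integral over [-pi, pi]^2.
    """
    N = 2 ** (J + 1) - 1
    H = np.array([(np.abs(np.fft.fft2(w, s=(N, N))) ** 2).ravel()
                  for w in haar_2d_wavelets(J)])
    return H @ H.T / N ** 2


A = inner_product_matrix(3)
print(np.allclose(A, inner_product_matrix_parseval(3)))
```

Computing the matrix both ways, rather than through a single FFT routine, keeps the check honest: the spatial-domain sum never touches the Fourier transform.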

Since \(\sum _{\eta } |S_{\eta }^{(i)(p,q)} (\mathbf {z})| < \infty \) uniformly in \(\mathbf {z}\) for \(i=1,2\), we have \(\sum _{\eta }|\varDelta ^{(p,q)}_{\eta }(\mathbf {z})| < \infty \) and hence \(\sum _{\eta } 2^{2j(\eta )}|\tilde{\varDelta }^{(p,q)}_{\eta }(\mathbf {z})| < \infty \). Moreover, each \(\hat{\varPsi }_{\eta }(\varvec{\omega })\) is continuous on \([-\pi ,\pi ]^{2}\), and in turn \(\sum _{\eta } \tilde{\varDelta }^{(p,q)}_{\eta } \hat{\varPsi }_{\eta }(\varvec{\omega }) \) is continuous on \([-\pi ,\pi ]^{2}\) as a function of \(\varvec{\omega }\). Hence Eq. (16) implies that,

To complete the proof, we return to the Fourier properties of \(\varPsi \) discussed in Eckley et al. (2009). Recall that for \(\varvec{\omega }=(\omega _{1},\omega _{2})\),

Let \(\tilde{\varDelta }^{(p,q)}_{\eta }:= \tilde{\varDelta }^{(p,q)}_{\eta }(\mathbf {z})\) at some fixed point \(\mathbf {z} \in (0,1)^{2}\). Then using Eq. (18) we can rewrite Eq. (17) as follows:

The right-hand side of Eq. (19) is a continuous function of \(\varvec{\omega }\) and so must vanish for all \(\varvec{\omega } \in [-\pi ,\pi ]^{2}\). To show that \(\tilde{\varDelta }^{(p,q)}_{1}\) is zero, we insert \(\varvec{\omega }=(\pi ,0)\) into Eq. (19). This gives,

since \(|m_{1}(\pi )|^{2}=1\) and \(|m_{0}(0)|^{2}=1\), and therefore \(|m_{1}(2 \pi n)|^{2}=0\) (Eckley et al. 2009). In other words, we have \(\tilde{\varDelta }_{1}^{(p,q)}=0\). To show that \(\tilde{\varDelta }_{2J+1}^{(p,q)}=0\), we return to Eq. (19): taking \(\varvec{\omega }=(\pi ,\pi )\) and using \(\tilde{\varDelta }_{1}^{(p,q)}=0\), we have,

giving \(\tilde{\varDelta }_{2J+1}^{(p,q)}=0\). Finally we insert \(\varvec{\omega }=(0,\pi )\) into Eq. (19). Given that \(\tilde{\varDelta }_{1}^{(p,q)}=0\) and \(\tilde{\varDelta }_{2J+1}^{(p,q)}=0\) we can also derive that \(\tilde{\varDelta }_{J+1}^{(p,q)}=0\).

By setting \(\varvec{\omega }=(\pi /2,0)\) in Eq. (19),

and thus \(\tilde{\varDelta }_{2}^{(p,q)}=0\). Proceeding in a similar manner, we can show that \(\tilde{\varDelta }_{2J+2}^{(p,q)}=0\) and \(\tilde{\varDelta }_{J+2}^{(p,q)}=0\). Continuing recursively, setting the non-zero components of \(\varvec{\omega }\) equal to \(\pi /2^{j-1}\) for successive scales \(j\), we can show that \(\tilde{\varDelta }_{\eta }^{(p,q)}(\mathbf {z})=0\) for all \(\eta \) and all \(\mathbf {z} \in (0,1)^{2}\).

Since the difference between the two cross-spectra is zero, the cross-spectral representation of an LS2Wmv process is unique.
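The recursion in this proof works because, at each chosen frequency, only a few of the \(\hat{\varPsi }_{\eta }(\varvec{\omega })\) are non-zero. The Python sketch below makes this visible for hypothetical tensor-product Haar wavelets (an assumption for illustration only), ordered so that \(\eta = 1\), \(J+1\) and \(2J+1\) are the three directions at the finest scale, as the proof's indexing suggests. The helper names are illustrative. For each frequency used above it reports the 0-based indices \(\eta - 1\) at which \(\hat{\varPsi }_{\eta }(\varvec{\omega }) = |\hat{\psi }_{\eta }(\varvec{\omega })|^{2}\) is non-zero.

```python
import numpy as np


def haar_2d_wavelets(J):
    """Hypothetical 2D discrete Haar wavelets, ordered eta = 1..J (first
    coordinate), J+1..2J (second coordinate), 2J+1..3J (diagonal)."""
    out = []
    for kind in range(3):
        for j in range(1, J + 1):
            n = 2 ** j
            phi = np.full(n, 2.0 ** (-j / 2))
            psi = np.concatenate([np.ones(n // 2), -np.ones(n // 2)]) * 2.0 ** (-j / 2)
            a, b = [(psi, phi), (phi, psi), (psi, psi)][kind]
            out.append(np.outer(a, b))
    return out


def psi_hat(w, omega):
    """Psi_hat_eta(omega) = |sum_k psi_eta(k) exp(-i omega . k)|^2."""
    k1, k2 = np.indices(w.shape)
    phase = np.exp(-1j * (omega[0] * k1 + omega[1] * k2))
    return np.abs(np.sum(w * phase)) ** 2


def active_indices(J, omega, tol=1e-10):
    """0-based indices (eta - 1) at which Psi_hat_eta(omega) exceeds tol."""
    vals = np.array([psi_hat(w, omega) for w in haar_2d_wavelets(J)])
    return np.flatnonzero(vals > tol).tolist()


J = 3
for omega in [(np.pi, 0.0), (np.pi, np.pi), (0.0, np.pi), (np.pi / 2, 0.0)]:
    print(omega, active_indices(J, omega))
```

For J = 3 and this Haar construction, each of \((\pi ,0)\), \((\pi ,\pi )\) and \((0,\pi )\) activates a single index (0, 6 and 3, i.e. \(\eta = 1\), \(2J+1\) and \(J+1\)), while \((\pi /2,0)\) activates indices 0 and 1. This is the triangular structure the argument exploits: once \(\tilde{\varDelta }_{1}^{(p,q)}=0\) is known, \((\pi /2,0)\) isolates \(\tilde{\varDelta }_{2}^{(p,q)}\).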

To establish the asymptotic properties of the empirical cross-covariance, we first note that

By the modelling assumptions of LS2Wmv, \(\mathbb {E}(X_{\mathbf {r}})=0\) for all \(\mathbf {r}\). Hence, using Definition 1, \(c_{\mathbf {R}}^{(p,q)}(\mathbf {z},\varvec{\tau })\) can be written as

From Eq. (3) we have,

If \(p=q\), then \(\rho ^{(p,p)}_{\eta }(\mathbf {u}/\mathbf {R})=1\) and so the proof follows as in Eckley et al. (2009). For the case \(p \ne q\), \(c_{\mathbf {R}}^{(p,q)}(\mathbf {z},\varvec{\tau })\) becomes

We now consider the absolute difference between the true cross-covariance and the local cross-covariance, \(|c_{\mathbf {R}}^{(p,q)}(\mathbf {z_{1}},\varvec{\tau }) - c^{(p,q)}(\mathbf {z_{2}},\varvec{\tau })|\). This difference is equal to

Using the Lipschitz continuity of the cross-spectrum \(S^{(p,q)}_{\eta }\) (Taylor 2013, Chapter 5, Lemma 1), the difference can be bounded by

and since \(\varPsi _{\eta }(\varvec{\tau }) = {\mathcal O}(1)\) uniformly in \(\varvec{\tau }\) and the support of \(\varPsi _{\eta }(\varvec{\tau })\) is bounded by \(K2^{2j(\eta )},\) the distance \(||\mathbf {u-r}||\) is also bounded by this amount. Finally we obtain

since the Lipschitz constants \(B_{\eta }\) are uniformly bounded in \(\eta \) with \(\sum _{\eta } B_{\eta }2^{2j(\eta )} < \infty \) (as stated in Definition 1). \(\square \)
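Both this proof and the proof of Theorem 2 rely on the product \(S^{(p,q)}_{\eta } = W^{(p)}_{\eta }W^{(q)}_{\eta }\rho ^{(p,q)}_{\eta }\) inheriting Lipschitz continuity from its factors (Taylor 2013, Chapter 5, Lemma 1). As a sketch of why this holds, write \(L^{(p)}_{\eta }\) and \(R^{(p,q)}_{\eta }\) for the Lipschitz constants of \(W^{(p)}_{\eta }\) and \(\rho ^{(p,q)}_{\eta }\), and assume additionally that \(|W^{(p)}_{\eta }(\mathbf {z})| \le M^{(p)}_{\eta }\) for all \(\mathbf {z}\) and that \(|\rho ^{(p,q)}_{\eta }| \le 1\), as for a correlation. The bounds \(M^{(p)}_{\eta }\) are introduced here purely for illustration. A telescoping decomposition then gives, for any \(\mathbf {z}_1, \mathbf {z}_2\),

\[
\begin{aligned}
\bigl|S^{(p,q)}_{\eta }(\mathbf {z}_1) - S^{(p,q)}_{\eta }(\mathbf {z}_2)\bigr|
&\le \bigl|W^{(p)}_{\eta }(\mathbf {z}_1) - W^{(p)}_{\eta }(\mathbf {z}_2)\bigr|\,\bigl|W^{(q)}_{\eta }(\mathbf {z}_1)\bigr|\,\bigl|\rho ^{(p,q)}_{\eta }(\mathbf {z}_1)\bigr| \\
&\quad + \bigl|W^{(p)}_{\eta }(\mathbf {z}_2)\bigr|\,\bigl|W^{(q)}_{\eta }(\mathbf {z}_1) - W^{(q)}_{\eta }(\mathbf {z}_2)\bigr|\,\bigl|\rho ^{(p,q)}_{\eta }(\mathbf {z}_1)\bigr| \\
&\quad + \bigl|W^{(p)}_{\eta }(\mathbf {z}_2)\bigr|\,\bigl|W^{(q)}_{\eta }(\mathbf {z}_2)\bigr|\,\bigl|\rho ^{(p,q)}_{\eta }(\mathbf {z}_1) - \rho ^{(p,q)}_{\eta }(\mathbf {z}_2)\bigr| \\
&\le \bigl(L^{(p)}_{\eta } M^{(q)}_{\eta } + M^{(p)}_{\eta } L^{(q)}_{\eta } + M^{(p)}_{\eta } M^{(q)}_{\eta } R^{(p,q)}_{\eta }\bigr)\,\|\mathbf {z}_1 - \mathbf {z}_2\| ,
\end{aligned}
\]

so under these assumptions \(S^{(p,q)}_{\eta }\) is Lipschitz with a constant built from those of its factors.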

A.2 Proof of Theorem 2

Using the LS2Wmv model (Definition 1), the expectation of the local wavelet raw cross-periodogram is given by

Recalling the assumptions on the model innovations (3), the expectation reduces to

Substituting \(\mathbf {u_{1}}=\mathbf {x+s}\) into the equation above we obtain

By the assumptions of the model given in Definition 1, \(W_{\eta }^{(p)}\) and \(\rho _{\eta }^{(p,q)}\) are Lipschitz continuous with constants \(L^{(p)}_{\eta }\) and \(R^{(p,q)}_{\eta }\) respectively. It follows that the product \(S_{\eta }^{(p,q)}=W_{\eta }^{(p)}W_{\eta }^{(q)}\rho ^{(p,q)}_{\eta }\) is also Lipschitz continuous (see Taylor 2013, Chapter 5, Lemma 1). In other words

for Lipschitz constants \(CB^{(p,q)}_{\eta }\), with some constant \(C \in \mathbb {R}\). Hence

Incorporating this Lipschitz property of the \(S^{(p,q)}_{\eta }\) into Eq. (20), we obtain

since \(\sum _{\eta } B^{(p,q)}_{\eta } < \infty \) and \(\left\{ \sum _{\mathbf {r}} \psi _{\eta _{1}, \mathbf {x-r}} \psi _{\eta , \mathbf {-r}}\right\} ^{2}\) is finite. Expanding the square and then substituting \(\mathbf {r_{0}=r_{2}-r_{1}}\) gives

Finally, we note that each of the summations within the brackets is simply an autocorrelation wavelet (see Eckley et al. 2010). Hence

where A is the discrete autocorrelation wavelet inner product matrix (see Eckley and Nason 2005 for more details). Therefore, correcting the raw cross-periodogram by the inverse of A yields an asymptotically unbiased estimator of the cross-spectrum.

The result for the variance can be established using a similar strategy; see Taylor (2013) for details.
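The last steps of the bias argument can be checked numerically: that the bracketed squared sum \(\sum _{\mathbf {x}}\{\sum _{\mathbf {r}} \psi _{\eta , \mathbf {x-r}} \psi _{\nu , \mathbf {-r}}\}^{2}\) equals the entry \(A_{\eta ,\nu } = \sum _{\varvec{\tau }}\varPsi _{\eta }(\varvec{\tau })\varPsi _{\nu }(\varvec{\tau })\), and that applying \(A^{-1}\) to the leading term \(\sum _{\eta } A_{\nu ,\eta } S^{(p,q)}_{\eta }\) of the expected raw cross-periodogram recovers the cross-spectrum. In the Python sketch below, the tensor-product Haar wavelets, their ordering, the helper names and the cross-spectrum values are all illustrative assumptions. The values are arbitrary draws and may be negative, as cross-spectra can be.

```python
import numpy as np


def haar_2d_wavelets(J):
    """Hypothetical 2D discrete Haar wavelets, ordered eta = 1..J (first
    coordinate), J+1..2J (second coordinate), 2J+1..3J (diagonal)."""
    out = []
    for kind in range(3):
        for j in range(1, J + 1):
            n = 2 ** j
            phi = np.full(n, 2.0 ** (-j / 2))
            psi = np.concatenate([np.ones(n // 2), -np.ones(n // 2)]) * 2.0 ** (-j / 2)
            a, b = [(psi, phi), (phi, psi), (psi, psi)][kind]
            out.append(np.outer(a, b))
    return out


def cross_correlation_2d(a, b):
    """c(x) = sum_s a(x + s) b(s) for every lag x with overlapping supports.

    Shift-and-add implementation; output index i corresponds to x = i - (n_b - 1).
    """
    (na1, na2), (nb1, nb2) = a.shape, b.shape
    out = np.zeros((na1 + nb1 - 1, na2 + nb2 - 1))
    for s1 in range(nb1):
        for s2 in range(nb2):
            i0, j0 = nb1 - 1 - s1, nb2 - 1 - s2
            out[i0:i0 + na1, j0:j0 + na2] += b[s1, s2] * a
    return out


J = 3
size = 2 ** J
wavelets = haar_2d_wavelets(J)

# A via autocorrelation wavelets Psi_eta, zero-padded onto a common centred grid.
P = np.array([np.pad(cross_correlation_2d(w, w), size - w.shape[0]).ravel()
              for w in wavelets])
A = P @ P.T

# Bracketed term in the proof: sum_x (sum_r psi_eta(x - r) psi_nu(-r))^2.
# Substituting s = -r, the inner sum is the cross-correlation of psi_eta and psi_nu.
B = np.array([[np.sum(cross_correlation_2d(wa, wb) ** 2) for wb in wavelets]
              for wa in wavelets])

# Hypothetical cross-spectrum values S_eta^{(p,q)} at one location.
rng = np.random.default_rng(0)
S_true = rng.uniform(-1.0, 1.0, size=len(wavelets))
expected_raw = A @ S_true                       # leading term of E(raw cross-periodogram)
S_corrected = np.linalg.solve(A, expected_raw)  # A^{-1} correction

print(np.allclose(A, B), np.allclose(S_corrected, S_true))
```

The solve step succeeds because A is the Gram matrix of the autocorrelation wavelets, which are linearly independent by the uniqueness argument of Theorem 1.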

A.3 Proof of Theorem 3

Let K be a bounded, integrable kernel on \(\mathbb {R}^{2}\); that is, \(K:\mathbb {R}^{2} \rightarrow \mathbb {R}\) satisfies \((i)\ \int K(\mathbf {x})\, d\mathbf {x} < \infty \) and \((ii)\ |K(\mathbf {x})|< K_{M} < \infty \ \forall \mathbf {x}\). Recall that we use a Nadaraya–Watson kernel estimator to smooth the cross-periodogram. Hence we focus on the asymptotic properties of:

where \(w_{\mathbf {u}}=\frac{K_{h}(\mathbf {s-u})}{\sum _{\mathbf {u}}K_{h}(\mathbf {s-u})}\), with \(K_{h}(\mathbf {s-u}) = K(\frac{\mathbf {s-u}}{h})\) for bandwidth \(h > 0\). Defining \(\lambda = \sum _{\mathbf {u}}K_{h}(\mathbf {s-u})\) and recalling Theorem 2, we have

Setting \(\mathbf {u}=\mathbf {s}+\varvec{\tau }\) we obtain

As discussed in the proof of Theorem 2, \(S_{\eta }^{(p,q)}\) is Lipschitz continuous with respect to the \(L_{1}\) norm, with constant \(CB^{(p,q)}_{\eta }\). Hence the expectation of the smoothed cross-periodogram is

where \(A_{\eta _{1},\eta }= {\mathcal O}(2^{2j(\eta _{1})})\), and we have used \(\sum _{\eta _{1}} B_{\eta _{1}}^{(p,q)} 2^{2j(\eta _{1})} < \infty \). Therefore,

where \(\tilde{I}_{1}= \frac{1}{\lambda } \sum _{\varvec{\tau }} K_{h}(\varvec{\tau })\Big ( \sum _{\eta _{1}} S^{(p,q)}_{\eta _{1}}\left( \frac{\mathbf {s}}{\mathbf {R}}\right) A_{\eta _{1},\eta }\Big )\) and \(\tilde{I}_{2} = \frac{1}{\lambda } \sum _{\varvec{\tau }} K_{h}(\varvec{\tau })\bigg ((||\varvec{\tau }||+1) {\mathcal O}\left( \frac{1}{\min \{R,S\}}\right) \bigg )\). First we focus on \(\tilde{I}_{1}\):

since \(\sum _{\mathbf {u}} w_{\mathbf {u}} = \frac{1}{\lambda } \sum _{\varvec{\tau }} K_{h}(\varvec{\tau })=1\). To evaluate the second term in Eq. (21), we divide both its numerator and its denominator by \((2 \lfloor h \rfloor + 1)^{2}\), the number of lattice points in the support of a kernel of bandwidth h. This gives the following expression for \(\tilde{I}_{2}\):

We now analyse the numerator and denominator of \(\tilde{I}_{2}\) separately, denoting these by \(\tilde{I}_{2,N}\) and \(\tilde{I}_{2,D}\) respectively. The numerator is given by,

since

and \(||\varvec{\tau }||_{1}=|\tau _{1}|+|\tau _{2}| < \lfloor h \rfloor \). The denominator of \(\tilde{I}_{2}\) is given by \(\tilde{I}_{2,D} = \frac{\lambda }{(2 \lfloor h \rfloor + 1)^{2}}\), where \(\lambda = \sum _{\varvec{\tau }}K_{h}(\varvec{\tau })\). We have,

since \(K(\mathbf {x}) \le K_{M} < \infty \), where \(K_{M}\) is an upper bound on the kernel. Combining the two terms of Eq. (21), the expectation of the smoothed cross-periodogram \(\mathbb {E}(\tilde{I}^{(p,q)}_{\eta , \mathbf {s}})\) is thus

\(\square \)
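The smoothing step and the counting argument used for \(\tilde{I}_{2}\) can be illustrated with a short Python sketch. The proof only requires K to be bounded and integrable. The product Epanechnikov kernel below (supported on \((-1,1)^{2}\), with \(K_{M} = 0.75^{2}\)) and the linear surface standing in for a raw cross-periodogram are assumptions made purely for illustration, and the function names are hypothetical. The sketch computes the Nadaraya–Watson weights \(w_{\mathbf {u}} = K_{h}(\mathbf {s-u})/\lambda \), counts the lattice points in the kernel's support, and checks that \(\tilde{I}_{2,D} = \lambda /(2 \lfloor h \rfloor + 1)^{2}\) does not exceed \(K_{M}\).

```python
import numpy as np


def epanechnikov_2d(x):
    """Hypothetical product Epanechnikov kernel on R^2 (bounded, integrable).

    x has shape (..., 2); the kernel is 0.75^2 at the origin and 0 outside (-1, 1)^2.
    """
    x = np.asarray(x, dtype=float)
    return np.prod(0.75 * np.clip(1.0 - x ** 2, 0.0, None), axis=-1)


def nadaraya_watson(raw, s, h, kernel=epanechnikov_2d):
    """Nadaraya-Watson value sum_u w_u raw[u] at lattice location s.

    w_u = K_h(s - u) / lambda with K_h(x) = K(x / h) and lambda = sum_u K_h(s - u).
    Returns the smoothed value, the kernel values K_h(s - u) and lambda.
    """
    n1, n2 = raw.shape
    u = np.stack(np.meshgrid(np.arange(n1), np.arange(n2), indexing="ij"), axis=-1)
    k = kernel((np.asarray(s, dtype=float) - u) / h)
    lam = k.sum()
    return float(np.sum(k * raw) / lam), k, lam


# Illustrative linear surface in place of a raw cross-periodogram.
u1, u2 = np.meshgrid(np.arange(21), np.arange(21), indexing="ij")
raw = 1.0 + 2.0 * u1 - 0.5 * u2

h, s = 3.5, (10, 10)
est, k, lam = nadaraya_watson(raw, s, h)

n_support = np.count_nonzero(k)                # lattice points with K_h(s - u) != 0
n_expected = (2 * int(np.floor(h)) + 1) ** 2   # (2 floor(h) + 1)^2
K_M = 0.75 ** 2
print(est, n_support, n_expected, lam / n_expected <= K_M)
```

Because this kernel is symmetric and its support lies inside the grid, the estimate reproduces the linear surface at s exactly (16 here). For this kernel and non-integer bandwidth the support contains \((2 \lfloor h \rfloor + 1)^{2} = 49\) lattice points; with an integer bandwidth the open support of this particular kernel would contain fewer.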

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Taylor, S.L., Eckley, I.A. & Nunes, M.A. Multivariate locally stationary 2D wavelet processes with application to colour texture analysis. Stat Comput 27, 1129–1143 (2017). https://doi.org/10.1007/s11222-016-9675-9

DOI: https://doi.org/10.1007/s11222-016-9675-9