Abstract

People like variety and often prefer to choose from large item sets. However, large sets can cause a phenomenon called “choice overload”: they are more difficult to choose from, and as a result decision makers are less satisfied with their choices. It has been argued that choice overload occurs because large sets contain more similar items. To overcome this effect, the present paper proposes that increasing the diversity of item sets might make them more attractive and satisfactory, without making them much more difficult to choose from. To this end, we study diversification based on the latent features of a matrix factorization recommender model, analyzing the results with structural equation modeling. Study 1 diversifies a set of recommended items while controlling for the overall quality of the set, and tests it in two online user experiments with a movie recommender system. Study 1a tests the effectiveness of the latent feature diversification, and shows that diversification increases the perceived diversity and attractiveness of the item set, while at the same time reducing the perceived difficulty of choosing from the set. Study 1b subsequently shows that diversification can increase users’ satisfaction with the chosen option, especially when they are choosing from small, diverse item sets. Study 2 extends these results by testing our diversification algorithm against traditional Top-N recommendations, and finds that diverse, small item sets are just as satisfying as Top-N recommendations and less effortful to choose from. Our results suggest that, at least for the movie domain, diverse small sets may be the best thing one could offer a user of a recommender system.

1 Introduction

Every day we are confronted with an abundance of decisions. The large assortments found in supermarkets and online stores ensure that all tastes are catered for, allowing each individual to maximize his or her utility. However, some people argue that these large assortments result in a Tyranny of Choice (Schwartz 2004) and that in the end they do not contribute to our overall happiness. Indeed, research in consumer and social psychology has shown that there are major drawbacks in offering large lists of attractive items, especially when customers do not have strong preferences, resulting in what has been labeled Choice Overload (Iyengar and Lepper 2000; Chernev 2003; Scheibehenne et al. 2010).

Scheibehenne et al. (2010) indicate that item similarity constitutes an important potential moderator of choice overload: Choice overload is more likely to occur for item sets with many items that are equally attractive. This might especially apply to recommender systems, which explicitly try to provide the user with highly attractive recommendations (Bollen et al. 2010). Large item sets are often inherently less varied, because most real-life assortments have limits to the potential variety they can offer. This, too, makes choosing from large item sets more difficult.

These results suggest that the diversity of the set of items under consideration is an important determinant of choice difficulty and satisfaction, which might have important implications in the domain of recommender systems (Bollen et al. 2010). In pioneering work on case-based recommender systems, Smyth and McClave (2001) already argued that there is a tradeoff between attaining the highest accuracy and providing sufficient diversity. Similarly, Ziegler et al. (2005) showed that topic diversification can enhance users’ satisfaction with a list of book recommendations. Knijnenburg et al. (2012, Sect. 4.8) reproduced Ziegler et al.’s findings in a movie recommender and, using their user-experience framework, showed that the positive effect of diversification on user satisfaction could be explained by an increase in perceived recommendation quality, a decrease in choice difficulty, and an increase in perceived system effectiveness.

In the present paper, we will expand on this earlier work by applying insights from the psychological literature on choice overload to the study of diversification in recommender systems. Specifically, the net result of choice overload is typically reduced choice satisfaction, because of two counteracting forces: larger item sets increase the attractiveness but also increase the difficulty of choosing from the set. Arguably, this problem can be solved by increasing the diversity of the set, all else remaining equal. Therefore, we will discuss and test latent feature diversification, a diversification method that integrates insights from psychological research into the core mechanism of a matrix factorization recommendation algorithm. Because latent feature diversification provides maximum control over item quality and item set variety on an individual level, it can increase the diversity (and thus reduce the choice difficulty) of an item set while maintaining perceived attractiveness and satisfaction. We will test this algorithm in two studies, for a total of three user experiments, employing the user-centric framework of Knijnenburg et al. (2012).

Our primary research question asks whether latent feature diversification applied in a recommender system can help users to find appropriate items in a satisfactory way, with minimal choice difficulty. Diversification might reduce the average predicted quality of recommendation lists compared to standard Top-N lists, but we expect that the increased diversity might still result in higher satisfaction because of the reduced difficulty. We also consider the length of the recommendation list, and investigate the effect of list length as well as the interplay of diversification and list length on choice satisfaction. Finally, the user-centric framework allows us to investigate both how and why diversification influences choice difficulty and satisfaction, by providing better insights into the psychological mechanisms driving these effects.

2 Theory and existing work

Choice overload as described in the literature (Iyengar and Lepper 2000; Scheibehenne et al. 2010) is the phenomenon of choice difficulty reducing choice satisfaction because the difficulty of choosing from a larger set of options outweighs the increased attractiveness of the larger set. In the current paper we focus not so much on the phenomenon of choice overload but on its underlying mechanisms: how do choice difficulty and perceived attractiveness influence satisfaction and what is the role of diversity? We will first discuss existing literature on attractiveness and choice difficulty (Sect. 2.1) and diversification (Sect. 2.2) before introducing our latent feature diversification method in Sect. 2.3 and an overview of the studies in Sect. 2.4.

2.1 Attractiveness and choice difficulty in large item sets

In the psychological literature it is argued that larger assortments offer important advantages for consumers (Chernev 2003; Scheibehenne et al. 2010). They can serve a larger target group, satisfying the needs of a diverse set of customers. They also allow customers to seek variety and maintain flexibility during the purchase process (Simonson 1990). Moreover, the variety of products offered by a specific brand is perceived as a cue for the quality of the brand (Berger et al. 2007). In other words, larger item sets are often perceived to be more attractive.

However, these beneficial aspects of large sets only seem to occur when people have stable and explicit preferences. Chernev (2003) labels this as having an “ideal point”: Only when people hold clear preferences for particular attribute values (an “ideal point”) can they assign specific weights to the different attributes that define a choice. Indeed, under such circumstances the likelihood of finding an option that matches one’s preferences is greater for larger item sets, because larger sets often contain a larger variety of attributes and span a wider range of attribute values. However, when people lack knowledge of the underlying attribute structures of a decision domain, they instead construct their preferences on the fly, i.e., while making the decision (Bettman et al. 1998). In such cases they are much less capable of articulating their preferences, and a larger item set might thus only hinder their decision process by making the preference construction process more difficult. In a series of studies Chernev (2003) showed that people without ideal points are indeed more likely to change their minds after a decision (i.e., they showed less confidence in their decision) when confronted with a large assortment compared to a small assortment.

Recommender systems could potentially reduce this choice difficulty as such systems help users to find a limited set of items that fit their personal preferences from a much larger set of potential alternatives. However, choice overload effects also occur in item lists generated by recommender systems. Bollen et al. (2010) performed a user experiment with a matrix factorization movie recommender, comparing three conditions: a small Top-5 list, a large Top-20 list, and a large lower quality 20-item list, composed of the Top-5 plus 15 lower-ranked movies. Users experienced significantly more choice difficulty when presented with the high quality Top-20 item list, compared to the other two lists. The increased difficulty counteracted the increased perceived attractiveness of the Top-20 list, showing that in the end, choice satisfaction in all three conditions was about the same. Behavioral data corroborated these findings, as users spent more effort evaluating the items of the Top-20 list compared to the other two lists.

Effort may provide one possible explanation for the increased choice difficulty of larger item sets. The amount of presented information and the required number of comparisons are larger for larger sets (the number of pairs of items to compare increases with \(O(n^{2})\)). Therefore, more effort will be required to construct a preference when the number of items increases, especially for cases where the decision maker has no ideal point (Chernev 2003). But besides this objective effort, the primary driver of choice difficulty seems to be the cognitive effort due to considerations underlying the comparisons to be made. One important determinant of cognitive effort is the similarity between the items (Scheibehenne et al. 2010). Fasolo et al. (2009) studied real world assortments and showed that as the number of items in a set increases, the density of the item set grows, i.e., the differences between the items on their underlying attributes become smaller. This increases the similarity of the items and likewise the required cognitive effort to make a decision, as the number of potential candidates that are close to each other increases. For example, in such a dense set, the second best option is typically also very attractive, causing potential regret with the chosen option (Schwartz 2004). Moreover, people prefer to make decisions they can easily justify (Shafir et al. 1993), especially when item sets become larger (Sela et al. 2009). Fasolo et al. (2009) argue that in large sets attribute levels are so close to one another that it is hard to decide which option is better, and the final decision is subsequently harder to justify. This difficulty due to the increase in cognitive effort is what we expect to drive the perceived choice difficulty.
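The quadratic growth in pairwise comparisons can be made concrete with a small calculation. The sketch below is purely illustrative; the function name is hypothetical, and the set sizes 5 and 20 are chosen only because they match the list lengths in Bollen et al. (2010).

```python
def pairwise_comparisons(n):
    """Number of unordered item pairs in a set of n items: n(n-1)/2."""
    if n < 0:
        raise ValueError("n must be non-negative")
    return n * (n - 1) // 2

pairs_top5 = pairwise_comparisons(5)    # 10 pairs
pairs_top20 = pairwise_comparisons(20)  # 190 pairs
```

Quadrupling the list length thus multiplies the number of possible pairwise comparisons by 19, even before any cognitive effort due to item similarity is taken into account.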

Scheibehenne et al. (2010) observed in their meta-analysis of the choice overload phenomenon that previous research has not controlled for similarity/diversity and the number of tradeoffs between options in the set, thereby making it difficult to disentangle the effects of item set diversity and size on the ease of comparison and thus choice overload. In the experimental paradigms employed in existing work such control is indeed difficult, because there is typically no knowledge of individual-level preferences of the participants to be able to individually control for levels of diversity and tradeoffs. To wit: increasing diversity without accounting for individual-level preferences may simply render some of the choice options irrelevant to the participant’s taste.

In the current paper we will use a matrix factorization recommender algorithm to diversify based on the latent features calculated by the algorithm and used for predicting ratings. These latent features allow us to manipulate the diversity of a set of items while controlling for attractiveness (by keeping the quality of the item set constant). For example, a set of items with the same predicted ratings that have very similar scores along these latent features would create a uniform set with a high density, potentially causing much choice difficulty. A different set—again with the same predicted ratings—that maximizes the distance between the features would create a more varied set with a lower density. Without reducing the overall quality of the item set, this diversification method can potentially reduce the cognitive effort in choosing from an item set (i.e. choice difficulty) for the same number of items.

However, there may be a negative side to diversification, one that has received little attention in the literature: As options become more varied, they may encompass more difficult tradeoffs. Such tradeoffs generate conflicts that require a lot of effort to resolve, as they require one to sacrifice something when choosing one item over another. In other words, there might be a limit to how much diversification can reduce choice difficulty. Scholten and Sherman (2006) proposed a double mediation model, which shows a U-shaped relation between the size of the tradeoffs in a set and the amount of conflict that is generated by the set. They suggest that not only very uniform sets (i.e., sets with high density) are difficult to choose from (as we argued earlier), but also very diverse sets are difficult because of tradeoff difficulty: tradeoffs are larger, which makes it more difficult to make the decision because greater sacrifices need to be incurred. In other words, there might be an optimal (medium) level of diversification that has the lowest choice difficulty.

In the next section we will review relevant literature on diversification and explain why for our particular research goal we employed diversification on the latent features of a matrix factorization algorithm.

2.2 Diversifying recommendations

The ultimate goal of a recommender system is to produce a personalized set of items that are the most relevant to a user. However, only relying on highest predicted relevance can result in ignoring other factors that influence user satisfaction. In line with this, Smyth and McClave (2001) already argued that there is a tradeoff between attaining the highest accuracy and providing sufficient diversity. Their (bounded) greedy diversity algorithm tried to find the items that are the most relevant to the current user but that are maximally diverse from the other candidate items. McGinty and Smyth (2003) subsequently argued that the optimal level of diversity depends on the users’ phase in the decision process. In the initial stage of interaction with a recommender system, diversity helps a user to find a set of relevant items sooner, thereby speeding up the recommendation process. Later in the process too much diversity reduces the recommendation efficiency, as relevant cases might be lost due to the diversification process. However, the case-based recommenders studied in these papers differ from the collaborative filtering algorithms we employ in the current paper, so these findings cannot readily be generalized to our work.

Bridge and Kelly (2006) similarly investigated the role of diversification and showed that different methods of diversifying collaborative filtering recommendations make simulated users reach their target items in a conversational recommender system more quickly. Their diversification was based on item-item similarity calculated from rating patterns. Recently, Ribeiro et al. (2014) also proposed a diversification method using item similarity based on rating patterns. A simulation showed that their diversification method results in recommendations that are simultaneously accurate, diverse and novel.

Existing research such as discussed above typically evaluates diversity by performing simulations to show that enhancing diversity improves the accuracy or efficiency with which simulated users interact with a recommender system. Ge et al. (2010) propose a number of metrics that are more suitable for the evaluation of diversity: Coverage, describing the number of items a recommender system can and does recommend; and Serendipity, describing to what extent the recommended items are unexpected and satisfactory. However, to better understand how real users perceive and evaluate diversity, and to be able to answer our question of whether diversification can reduce choice difficulty, we need to go beyond these simulations and study actual users’ diversity perceptions, choice difficulty, and satisfaction with diversified item sets. We will briefly review relevant work that did employ user experiments to study the effect of item set diversity.

Ziegler et al. (2005) showed that topic diversification (using an ontology acquired separately from the data used to calculate recommendations) can enhance the perceived attractiveness of a list of book recommendations. In a user experiment they demonstrated that despite the lower precision and recall of the diversified recommendations, diversification had a positive effect on users’ perception of the quality of item sets produced by their recommender algorithm. The effects as established in their study were small, and overall perceived quality reached a plateau at some level of diversification, after which it actually decreased (cf. Scholten and Sherman 2006). As Ziegler et al. did not measure any potential moderating or mediating variables, their study provides no insight into the psychological mechanisms underlying this interesting effect. In addition, they acknowledge that the use of an external ontology may have led to a mismatch between the diversity calculated by the algorithm and the diversity perceived by their users.

Knijnenburg et al. (2012, Sect. 4.8) attempted to reproduce Ziegler et al.’s findings in a movie recommender. In this study, recommendation sets were diversified based on movie genre information. Going beyond the original study by Ziegler et al., this study made use of the user-centric evaluation framework by Knijnenburg et al. to provide insight into the underlying mechanisms that allow diversification to increase choice satisfaction. The study confirmed Ziegler et al.’s positive effect of diversification on perceived quality, and showed that this in turn decreased choice difficulty and increased perceived system effectiveness, ultimately leading to a higher choice satisfaction.

In the present paper, we will expand on this earlier work to gain more insight into which factors affect choice difficulty and satisfaction in recommender systems. For this purpose we will test a diversification method that does not require external sources such as an external ontology (Ziegler et al. 2005) or a genre list (Knijnenburg et al. 2012), but that provides direct control over item quality and item set diversity on the individual level, using latent feature diversification.

2.3 Latent feature diversification

Matrix factorization algorithms (Koren et al. 2009) are widely used in recommender systems. These algorithms are based on singular value decomposition that reduces a high-dimensional user/item rating matrix into two lower dimensional matrices that describe users and items as vectors in a latent feature space in such a way that the relative positions of a user and item vector can be used to calculate the predicted ratings. In essence, the rating a user is predicted to assign to a specific item is equal to the inner product of the corresponding user- and item-vectors.
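The inner-product prediction rule can be illustrated with a minimal sketch. The vectors below are made-up values rather than output of a fitted model, and the function name is hypothetical; practical models often add a global mean and user/item bias terms, which are omitted here.

```python
def predict_rating(user_vec, item_vec):
    """Predicted rating as the inner product of a user and an item vector."""
    if len(user_vec) != len(item_vec):
        raise ValueError("vectors must have the same number of latent features")
    return sum(u * x for u, x in zip(user_vec, item_vec))

# Illustrative (made-up) vectors in a 3-dimensional latent feature space.
user = [1.0, 0.5, -0.5]
item_a = [2.0, 1.0, 0.0]   # scores high on features the user weighs positively
item_b = [0.0, 2.0, 2.0]   # its score on feature 2 is cancelled by feature 3

rating_a = predict_rating(user, item_a)  # 2.0 + 0.5 + 0.0 = 2.5
rating_b = predict_rating(user, item_b)  # 0.0 + 1.0 - 1.0 = 0.0
```

An item receives a high predicted rating when it scores high on the features for which the user has large positive weights.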

This approach is mathematically analogous to the multi-attribute utility theory (MAUT) framework (Bettman et al. 1998) used in decision making psychology. Matrix factorization models share with existing theories of MAUT the idea that the utility of an option is a sum of the utility of its attributes weighted by an individual decision maker’s attribute weights. The difference is that the dimensions in matrix factorization models describe abstract (latent) features, while in MAUT they describe concrete (interpretable) features.

The simplified choice of a camera can serve as an example of how decisions are described in MAUT and how we can diversify options while controlling for attractiveness. Imagine Peter, who is considering buying a camera, described along two attribute dimensions: zoom and resolution. Peter thinks that resolution and zoom are equally important, so his user vector is \(\overline{w}_{{ peter}}=(0.5,0.5)\). If we assume a linear utility function for attribute values (i.e. doubling an attribute value doubles the utility for the corresponding attribute), the utility for a 10 MP/10\(\times \) zoom camera, \(u_{peter,10\,MP/10\times } =\overline{w}_{peter} \cdot \overline{x}_{10\,MP/10\times } =\left( {0.5\,{*}\,10} \right) +\left( {0.5\,{*}\,10} \right) =10\), is higher than that of a 12 MP/7\(\times \) zoom camera, \(u_{peter,12\,MP/7\times } =\overline{w}_{peter} \cdot \overline{x}_{12\,MP/7\times } =\left( {0.5\,{*}\,12} \right) +\left( {0.5\,{*}\,7} \right) =9.5\), so Peter would prefer the first option. Similarly, we can calculate the utility for an 8 MP/12\(\times \) zoom and a 15 MP/5\(\times \) zoom camera and see that Peter would like these just as much as the 10 MP/10\(\times \) alternative.

This example illustrates that there exist equipreference hyperplanes of items, orthogonal to the user vector, that are all equally attractive for that user (their inner products are the same) but that might differ a lot in terms of their features. The three cameras (10 MP/10\(\times \), 8 MP/12\(\times \) and 15 MP/5\(\times \)) are in such a hyperplane (or more precisely on a line in this 2-dimensional example), see Fig. 1. To test the effect of diversification independent of attractiveness, our goal is to choose items on this equipreference line that are either close to each other (low diversity, e.g. 11 MP/9\(\times \), 10 MP/10\(\times \) and 9 MP/11\(\times \)) or far apart from each other (high diversity, e.g. the 8 MP/12\(\times \), 10 MP/10\(\times \) and 15 MP/5\(\times \) cameras in the figure). In terms of Fasolo et al. (2009), the first set has a higher density than the second set because the inter-attribute differences are smaller, and we therefore expect the first set of similar items to require more cognitive effort (and thus cause more choice difficulty). However, if the items are too far apart then the increased trade-off difficulty may increase the choice difficulty again (cf. Scholten and Sherman’s (2006) U-shaped relationship).
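The camera example can be checked numerically. The following sketch recomputes Peter's utilities for the cameras mentioned above and compares how spread out the low- and high-diversity sets are. The summed city-block distance used here as a spread measure is only an illustrative choice for this example, and all function names are hypothetical.

```python
from itertools import combinations

weights = (0.5, 0.5)  # Peter's weights for (resolution in MP, zoom factor)

def utility(weights, item):
    """Weighted additive (MAUT-style) utility of one item."""
    return sum(w * x for w, x in zip(weights, item))

def total_pairwise_distance(items):
    """Sum of city-block distances over all unordered pairs of items."""
    return sum(
        sum(abs(a - b) for a, b in zip(p, q))
        for p, q in combinations(items, 2)
    )

low_diversity = [(11, 9), (10, 10), (9, 11)]
high_diversity = [(8, 12), (10, 10), (15, 5)]

utilities_low = [utility(weights, c) for c in low_diversity]    # all 10.0
utilities_high = [utility(weights, c) for c in high_diversity]  # all 10.0
utility_12mp_7x = utility(weights, (12, 7))                     # 9.5

spread_low = total_pairwise_distance(low_diversity)    # 2 + 4 + 2 = 8
spread_high = total_pairwise_distance(high_diversity)  # 4 + 14 + 10 = 28
```

Both sets lie on the same equipreference line (utility 10 for every camera), yet the second set is considerably more spread out along the attributes.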

2.3.1 Latent features in matrix factorization

Matrix factorization models are similar to MAUT in the way that items have higher predicted ratings if they score high on (latent) features that an individual user has higher weights for. A difference is that in MAUT attributes describe concrete, identifiable properties of choice alternatives, while in matrix factorization they describe abstract, ‘latent’ features. Though it is hard to ascribe a simple unidimensional meaning to these features (cf. Graus 2011), they are related to the dimensionality of users’ preferences (Koren et al. 2009 suggest they might encompass composite attributes such as ‘escapist’ movies). Using these latent features in a way similar to how attributes are treated in MAUT allows us to construct sets of items of the same quality (i.e. predicted rating) that are either highly diverse (with alternatives that have larger differences on latent feature scores, i.e. low density) or more similar (with alternatives that have smaller differences on latent feature scores, i.e. high density).

More specifically, we will construct such sets in the movie domain, for which large existing datasets allow us to construct a good matrix factorization model (Bollen et al. 2010). Our ideal goal is to construct sets of movies that differ in diversity, while controlling for quality. This means we would like to select a diverse (or non-diverse) set of movies from a hyperplane (orthogonal to the user vector) of movies with similar predicted ratings, analogous to our camera example above. However, as we are bound by existing sets of movies and their vectors, it is in practice impossible to select a sufficiently large and diverse set that will have all the movies on exactly the same hyperplane. Our requirement to manipulate recommendation set diversity while controlling for quality thus requires us to tolerate some variation in predicted ratings. This implies that we have to find a small range \(({\Delta }R)\) in predicted ratings that allows us to extract both high and low diversity sets. Such sets are illustrated in Fig. 2 for two dimensions.

As the factors in a matrix factorization model tend to be normally distributed, the latent feature space represents a multidimensional hypersphere, or simply a circle in the two-dimensional case. The Top-N predicted items of a user are those that are farthest in the direction of the user vector. We are interested in selecting a set of recommendations with high predicted ratings, but with sufficient diversity. To achieve this, we select our items to diversify from a Top-N set of highest predicted ratings for a user (light-grey area). From that area we select both low-diversity and high-diversity subsets of items (darker grey areas). Our constraints are that the width of the set (or the maximum distance perpendicular to the user vector) is maximal to achieve high diversity, and the height of the set (or the maximum distance parallel to the user vector, or \({\Delta }R\): the differences in ratings) is minimal to achieve low variation in item ratings (i.e. attractiveness), while still providing enough items to allow extracting both types of sets.

2.3.2 Diversification algorithm

The algorithm from Ziegler et al. (2005) was adopted and altered to meet the requirements of the current study. The algorithm greedily adds items to the set of recommendations, maximizing at each addition the total distance between all items in the set. This section will elaborate on the algorithm and will check the validity of the algorithm through simulation.

The applied algorithm (see Fig. 3) performs a greedy selection from an initial set (Candidates) to extract a maximally diverse recommendation set (R) of size k. Implementing the algorithm requires deciding on two parameters: the distance metric to use, and which set to take as the initial set of candidate items. In this specific application, we used the first order Minkowski distance (\(d(a,b)=\sum \nolimits _{k} \left| {a_k -b_k} \right| \), with a, b as items and k iterating over the latent features), also known as Manhattan, City Block, Taxi Cab or \(L_{1}\) distance, as the distance metric d(i, l). This was done to ensure that differences along different latent features are considered in an additive way, and large distances along one feature cannot be compensated by shorter distances along other features. This means that two items differing one unit on two dimensions are considered as different as two items differing two units along one dimension (using Euclidean distance, these differences would be \(\sqrt{2}\) and 2 respectively). This is more in line with how people perceive differences between choice alternatives with real attribute dimensions. Additionally, initial analyses showed that we obtained about 15% higher diversity for City Block distance than for common Euclidean distances.
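The difference between the two metrics can be verified with a short sketch that reproduces the example in the text: one item pair differing by one unit on each of two features, and one pair differing by two units on a single feature. Function and variable names are hypothetical.

```python
import math

def manhattan(a, b):
    """First order Minkowski (city-block, L1) distance."""
    return sum(abs(x - y) for x, y in zip(a, b))

def euclidean(a, b):
    """Second order Minkowski (L2) distance."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

origin = (0.0, 0.0)
one_unit_on_two_features = (1.0, 1.0)
two_units_on_one_feature = (2.0, 0.0)

l1_a = manhattan(origin, one_unit_on_two_features)   # 2.0
l1_b = manhattan(origin, two_units_on_one_feature)   # 2.0
l2_a = euclidean(origin, one_unit_on_two_features)   # sqrt(2)
l2_b = euclidean(origin, two_units_on_one_feature)   # 2.0
```

Under the city-block metric both pairs are equally distant, whereas the Euclidean metric treats the pair that differs on two features as closer together.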

To ensure that quality is kept equal for different levels of diversity, the diversification starts from the centroid of the initial recommendation set. This allows for selecting items with both higher and lower predicted ratings, and as a result every set will have approximately the same average predicted rating. Note that selecting the centroid is for the sole purpose of experimental control. From a more practical perspective this approach might be suboptimal, as in many cases it will exclude the highest predicted items from the recommended set. We will address this issue in study 2 through a slight adaptation of our algorithm.

Diversity can be manipulated by restricting the sets from which items are chosen in the diversification algorithm. For our high level of diversity, the algorithm selected the N most diverse items among the 200 items with the highest predicted rating. For medium diversity, the algorithm selected the N most diverse items from the 100 items closest to the centroid of these 200 items. For low diversity, the algorithm simply selected the N items closest to the centroid. To test our algorithm we ran several simulations and tests using the MovieLens 10M dataset (Harper and Konstan 2015). As an initial starting set, the Top-200, i.e., the 200 items with the highest predicted rating, was found to provide a good balance between maximum range in predicted rating and maximum diversity. The range in predicted rating was lower than the mean absolute error (MAE) of the predictions of our matrix factorization model, implying that even the difference in rating between the highest and lowest prediction in the set falls within the error margin of predictions for a particular user. The attractiveness differences \(({\Delta }R)\) would therefore most likely not be perceived by the user.
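The procedure described above can be sketched as follows. This is a reconstruction from the prose description only (Fig. 3 is not reproduced here), so details such as tie-breaking and taking the item nearest the centroid as the first pick are assumptions; the names `greedy_diversify`, `build_set` and `medium_pool` are hypothetical. Because the distances among already selected items are fixed, maximizing the total distance in the set at each step is equivalent to picking the candidate with the largest summed distance to the selected items.

```python
def manhattan(a, b):
    return sum(abs(x - y) for x, y in zip(a, b))

def centroid(vectors):
    n = len(vectors)
    return [sum(col) / n for col in zip(*vectors)]

def closest_to(point, vectors, n):
    """Indices of the n vectors closest to point (ties keep input order)."""
    order = sorted(range(len(vectors)), key=lambda i: manhattan(vectors[i], point))
    return order[:n]

def greedy_diversify(vectors, candidate_ids, k, start_point):
    """Start with the candidate nearest start_point, then repeatedly add the
    candidate with the largest summed distance to the items selected so far."""
    remaining = list(candidate_ids)
    first = min(remaining, key=lambda i: manhattan(vectors[i], start_point))
    selected = [first]
    remaining.remove(first)
    while len(selected) < k and remaining:
        best = max(
            remaining,
            key=lambda i: sum(manhattan(vectors[i], vectors[j]) for j in selected),
        )
        selected.append(best)
        remaining.remove(best)
    return selected

def build_set(top_vectors, k, level, medium_pool=100):
    """top_vectors: latent vectors of the user's top-predicted items
    (the Top-200 in the text). Returns indices into top_vectors."""
    c = centroid(top_vectors)
    if level == "low":
        return closest_to(c, top_vectors, k)
    if level == "medium":
        pool = closest_to(c, top_vectors, min(medium_pool, len(top_vectors)))
        return greedy_diversify(top_vectors, pool, k, c)
    if level == "high":
        return greedy_diversify(top_vectors, range(len(top_vectors)), k, c)
    raise ValueError("level must be 'low', 'medium' or 'high'")

# Toy 2-dimensional data (made up): five central items and four outliers.
toy = [(0, 0), (1, 0), (-1, 0), (0, 1), (0, -1),
       (5, 5), (-5, -5), (5, -5), (-5, 5)]
low_set = build_set(toy, 3, "low")    # three items nearest the centroid
high_set = build_set(toy, 3, "high")  # centroid item plus two far-apart items
```

On the toy data the low-diversity set stays near the centroid, while the high-diversity set reaches out to opposite corners of the feature space.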

To measure the success of the diversification algorithm, we measured two properties: the average rating of the recommendation set (which should be similar across diversification levels to ensure equal quality of the item sets), and the diversity of the recommendation set. There are many measures of diversity available, see for example the recent overview by Castells et al. (2015) that provides a number of metrics. However, as we aim for a specific type of diversity related to distances between options on the features, we base our measure on the density measure of Fasolo et al. (2009) that was used in earlier work on choice overload. We define the AFSR (Average Feature Score Range) of a recommendation set X as the average difference per feature \((i_{k})\) between the highest and lowest scoring items along that feature (Eq. 1).

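\[ AFSR_X =\frac{1}{D}\sum _{k=1}^{D} \left( {\max _{i\in X} i_k -\min _{i\in X} i_k} \right) \qquad (1) \]

where \(i\in X\), \(i_k\) is the score of item i on feature k, and D is the number of dimensions.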
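The AFSR definition translates directly into a few lines of code. The sketch below follows the verbal definition (per-feature range between the highest and lowest scoring items, averaged over features); the function name is hypothetical, and the two camera sets from Sect. 2.3 serve as stand-ins for latent feature vectors.

```python
def afsr(item_vectors):
    """Average Feature Score Range: the mean, over features, of the
    difference between the highest and lowest item score on that feature."""
    if not item_vectors:
        raise ValueError("the recommendation set must not be empty")
    n_features = len(item_vectors[0])
    ranges = [max(col) - min(col) for col in zip(*item_vectors)]
    return sum(ranges) / n_features

low_diversity = [(11, 9), (10, 10), (9, 11)]
high_diversity = [(8, 12), (10, 10), (15, 5)]

afsr_low = afsr(low_diversity)    # (2 + 2) / 2 = 2.0
afsr_high = afsr(high_diversity)  # (7 + 7) / 2 = 7.0
```

A higher AFSR indicates a less dense, more diverse set in the sense of Fasolo et al. (2009).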

We ran a simulation to verify that these recommendation sets meet the requirements. For 200 users randomly selected from the original MovieLens dataset, the recommendation sets for three different diversification levels were calculated and compared in terms of AFSR and average predicted rating. The results for recommendation sets with 5 (representing smaller recommendation sets) and 20 (large item sets) items can be found in Table 1. The numbers show that while the average predicted rating only differed minimally (about 0.06 stars between low and high, with more variance for higher diversity) over the diversity levels, the AFSR does differ a lot. This shows that the algorithm succeeds in manipulating diversity while maintaining equal levels of quality.

2.4 Overview of our user studies

In what follows, we present two studies that test latent feature diversification. In the first study, we test the user perceptions and experiences with recommendation lists for different levels of diversification and different numbers of items in the list. This study has two parts. The first part, study 1a, was published in an earlier version as a workshop paper (Willemsen et al. 2011) and encompasses a basic test of our diversification algorithm. It asks users to inspect 3 lists with different levels of diversification, and measures subjective perceptions of attractiveness, diversity and difficulty. In this study users only reported their perceptions and did not choose an item from the recommendations. In the second part, study 1b, users also choose an item, thus allowing us to directly test the impact of diversification on actual choice difficulty and choice satisfaction. Combined, the results of study 1 show that users indeed perceive the diversification generated by the algorithm, and that it is beneficial for reducing choice difficulty and improving satisfaction, especially for smaller item sets.

In study 1 the diversification starts from the centroid of the top-200. This way we controlled the average predicted rating of the lists (as described in Sect. 2.3). This means, though, that the lists used in study 1 are different from standard Top-N lists. The second study tests how the lists produced by our diversification algorithm compare against Top-N lists (i.e., lists that are optimized for predicted ratings). For this we modified our diversification algorithm slightly, starting the diversification from the top-predicted item (rather than the centroid), and manipulating diversity by varying the balance between predicted rating and diversity. Study 2 replicates the main result of study 1, showing that there is a benefit in diversifying recommendations based on the latent features to reduce choice difficulty and improve satisfaction, especially for small item sets.
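The modified procedure for study 2 can be sketched as a greedy selection. This is an illustrative sketch only, not the algorithm as implemented: the linear weight `alpha`, the summed city-block distance, and the function name `greedy_diversify` are assumptions introduced here, and in practice ratings and distances would need to be brought to a comparable scale.

```python
import numpy as np

def greedy_diversify(features, predicted, n, alpha):
    """Greedily pick n items, starting from the top-predicted item.

    alpha is a hypothetical weight in [0, 1]: alpha = 1 reduces to a plain
    Top-N list, alpha = 0 selects purely on distance to the chosen items.
    """
    features = np.asarray(features, dtype=float)
    predicted = np.asarray(predicted, dtype=float)
    chosen = [int(np.argmax(predicted))]  # start from the top-predicted item
    while len(chosen) < n:
        # Assumed diversity term: summed city-block distance of every
        # candidate to the items that are already in the list.
        diff = features[:, None, :] - features[chosen][None, :, :]
        dist = np.abs(diff).sum(axis=(1, 2))
        score = alpha * predicted + (1.0 - alpha) * dist
        score[chosen] = -np.inf  # never pick an item twice
        chosen.append(int(np.argmax(score)))
    return chosen

# Made-up latent features and predicted ratings for four items.
toy_features = [[0.0, 0.0], [0.1, 0.0], [5.0, 5.0], [1.0, 1.0]]
toy_predicted = [4.5, 4.4, 4.3, 4.0]
print(greedy_diversify(toy_features, toy_predicted, n=3, alpha=1.0))
print(greedy_diversify(toy_features, toy_predicted, n=2, alpha=0.0))
```

With `alpha = 1.0` the sketch returns the items in order of predicted rating; lowering `alpha` trades predicted rating for distance on the latent features.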

3 Study 1

3.1 Goals of study 1

The first study aims to test how our latent feature diversification affects user perceptions of and experiences with recommendation lists. We test this in two steps. Study 1a \((\mathrm{N}=97)\) tested whether our diversification is perceived at all by the user and whether diversity can be linked to tradeoff difficulty and choice difficulty. To do this, the level of diversification was manipulated within subjects (i.e., all subjects were asked to assess three lists with different diversification levels). List length was manipulated between subjects, i.e., each user only saw one list length. After each list, users’ perceptions of the list were queried. Users were not asked to choose an item from the lists, and no choice satisfaction was measured. In study 1b \((\mathrm{N}=78)\) participants were given only one set of personalized recommendations (list length and diversification were both manipulated between subjects), but this time they were asked to make a choice, and they also reported their choice satisfaction (along with choice difficulty, diversity and attractiveness). Study 1b thus allowed us to see if diversification can indeed reduce choice difficulty and increase satisfaction.

3.2 System used in study 1

Both studies 1a and 1b used a movie recommender with a web interface that was used previously in the MyMedia project. The software is currently being developed as MyMediaLite (footnote 3). A standard Matrix Factorization algorithm was used for the calculation of the recommendations. The 10M MovieLens dataset was used for the experiment, which, after removing movies from before 1994, contained 5.6 million ratings by 69,820 users on 5,402 movies. We further enriched the MovieLens dataset with a short synopsis, cast, director, and a thumbnail image of the movie cover taken from the Internet Movie Database.

The Matrix Factorization algorithm used 10 latent features, a maximum iteration count of 100, a regularization constant of 0.0001 and a learning rate of 0.01. In a 5-fold cross-validation on this dataset, this specific combination of data and algorithm resulted in an RMSE of 0.854 and an MAE of 0.656, which is up to standard. An overview of performance metrics is given by Herlocker et al. (2004).
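To make these settings concrete, the following is a minimal sketch of a matrix factorization model trained with stochastic gradient descent, together with the two reported accuracy metrics. It is not the MyMediaLite implementation: the global-mean offset, the initialization, and the names `train_mf` and `rmse_mae` are assumptions made for illustration; only the default parameter values are taken from the text.

```python
import numpy as np

def train_mf(ratings, n_users, n_items, n_features=10, n_iter=100,
             reg=0.0001, lr=0.01, seed=0):
    """Plain SGD matrix factorization on (user, item, rating) triples."""
    rng = np.random.default_rng(seed)
    P = rng.normal(0.0, 0.1, (n_users, n_features))  # user latent features
    Q = rng.normal(0.0, 0.1, (n_items, n_features))  # item latent features
    mu = float(np.mean([r for _, _, r in ratings]))   # assumed global offset
    for _ in range(n_iter):
        for u, i, r in ratings:
            err = r - (mu + P[u] @ Q[i])
            pu = P[u].copy()  # keep the old user vector for the item update
            P[u] += lr * (err * Q[i] - reg * pu)
            Q[i] += lr * (err * pu - reg * Q[i])
    return mu, P, Q

def rmse_mae(ratings, mu, P, Q):
    """Root mean squared error and mean absolute error on a set of triples."""
    errs = np.array([r - (mu + P[u] @ Q[i]) for u, i, r in ratings])
    return float(np.sqrt(np.mean(errs ** 2))), float(np.mean(np.abs(errs)))
```

In a cross-validation setup, `train_mf` would be fitted on four folds and `rmse_mae` evaluated on the held-out fold, with the metrics averaged over the five folds. The rows of `Q` are the item scores on the latent features that the diversification operates on.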

Below we will discuss the setup and results of study 1a and 1b separately.

3.3 Study 1a: setup and expectations

The diversification algorithm manipulated the density of the set of recommendations while keeping the overall attractiveness of the set (in terms of the predicted ratings) constant. By using three levels of diversification, we were able to investigate whether the relation between diversity and difficulty is linear (higher diversity always reduces choice difficulty because it makes it easier to find reasons to choose one item over another; the predominant view in the literature) or U-shaped (diversity only helps up to a certain level, but very high diversity might result in large tradeoffs between items that are difficult to resolve due to the sacrifices that need to be made when choosing one item over the other; the view proposed by Scholten and Sherman 2006). As our main interest was in assessing differences in perceptions of diversity between the lists, we employed a within-subject design in which each participant was presented (sequentially) with a low, medium and high diversification set. This increases statistical power by allowing us to test the effect of diversification within subjects. To prevent possible order effects, the order of these sets was randomized over participants. We explicitly did not ask people to choose an item from each list, as we considered it unnatural to have to choose a movie three times, and spill-over effects might occur from one choice to another (i.e., the perception of diversity might be influenced by the preferences constructed for the movie chosen in the previous task).

We also varied the number of items in the set on 5 levels (5, 10, 15, 20 or 25; a between-subjects manipulation, each user saw all three lists in one of these lengths), as the literature suggests that diversification might have a stronger impact on larger sets. However, given that recommender systems output personalized and highly attractive sets of items, we might find that even for small sets diversification has a strong impact on experienced difficulty.

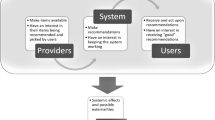

To measure the subjective perceptions and experiences of these recommendations after the presentation of each set, we employed the user-centric framework for user experience of recommender systems (Knijnenburg et al. 2012). This framework models how objective systems aspects (OSA) can influence user experience (EXP) and user interactions (INT) and how these relations are mediated by users’ perceptions as measured by Subjective System Aspects (SSA). The framework also models the role of situational characteristics (SC) and personal characteristics (PC).

Based on this framework we expected that the effect of diversification (OSA) on users’ subjective evaluation of the set (EXP) (i.e., how difficult it is to make tradeoffs and choose from the set) is mediated by subjective perceptions (SSA) of the diversity and attractiveness of the set. In particular, we expect that item sets that are more diverse (i.e., that have a lower density on the attributes) are perceived as more varied and potentially also as more attractive, and that these two factors affect the experience of tradeoff difficulty and expected choice difficulty (because we did not ask participants to actually choose an item, we asked how difficult they would expect it to be to choose from the list).

3.3.1 Research design and procedure

Participants for this study were gathered using an online participant database that primarily consists of (former) students who occasionally participate in online studies and in lab studies of the HTI group. Participants were compensated with 3 euro (about 4 US dollars) for participating. 97 participants completed the study (mean age: 29.2 years, sd: 10.3; 52 females and 45 males).

The study consisted of three parts. In the first part, participants answered a set of questions to measure a number of personal characteristics (PC). In their meta-analysis, Scheibehenne et al. (2010) show that expertise and prior preferences are important moderators of choice overload. Therefore, we constructed a set of items to measure movie expertise and strength of preferences. We also measured the maximizing tendency of our participants, using the short 6-item version (Nenkov et al. 2008) of the maximization questionnaire by Schwartz et al. (2002). Schwartz et al. define people who always try to make the best possible choice as maximizers, and people who settle for “good enough” as satisficers. Maximizers consider more options, whereas satisficers stop looking when they have found an item that meets their standards. Therefore the search costs of maximizers are higher, and consequently it is suggested that they are more prone to experience choice difficulty.

After these questions, the second part of the study was used to gather rating information from the participant to be able to calculate and provide personalized recommendations. In this phase the participants were asked to rate a total of ten movies, which provided adequate recommendations without too much effort in earlier experiments (Bollen et al. 2010; Knijnenburg et al. 2012). They were presented with ten randomly selected movies at a time, with the instruction to rate only the movies they were familiar with (ratings were entered on a scale from 1 to 5 stars). After inspecting and (possibly) rating some of the ten movies shown, users could get a new list of movies by pressing a button. When the participant had entered ten ratings (or more if on this particular page a user would cross the 10 ratings threshold) in total, this button would guide them to the third part.

In the third part the participant sequentially (and in randomized order) received three sets of recommendations, each time with a different level of diversification (OSA). List length (OSA) was manipulated between subjects: each participant was either shown a rank-ordered list of \(5\,(\mathrm{N}=19), 10\,(\mathrm{N}=22), 15\,(\mathrm{N}=22), 20\,(\mathrm{N}=16)\,\mathrm{or}\,25\,(\mathrm{N}=18)\) movies; each movie represented by its title (see Fig. 4). The predicted rating (in stars and one-point decimal value) was displayed next to the title. If the participant hovered over one of the titles, additional information would appear in a separate preview panel. This additional information consisted of the movie cover, the title of the movie, a synopsis, the name of the director(s) and part of the cast. Before moving to the next list, participants were presented with a short questionnaire of 16 items, measuring perceived choice difficulty and tradeoff difficulty (EXP) and their perceived diversity and perceived attractiveness of the presented list (SSA). Participants thus answered these questions for each of the three lists.

3.3.2 Measures

The items in the questionnaires were submitted to a confirmatory factor analysis (CFA, as suggested by Knijnenburg et al. 2012; Knijnenburg and Willemsen 2015). The CFA used repeated ordinal dependent variables and a weighted least squares estimator, estimating 5 factors. Items with low factor loadings, high cross-loadings, or high residual correlations were removed from the analysis. Factor loadings of included items are shown in Table 2, as well as Cronbach’s alpha and average variance extracted (AVE) for each factor. The values of AVE and Cronbach’s alpha are good, indicating convergent validity. The square roots of the AVEs are higher than any of the factor correlations, indicating good discriminant validity. For expected choice difficulty, a single indicator was used. Based on the CFA, in which no stable construct for tradeoff difficulty could be fitted due to high cross-loadings with other constructs, we selected a single indicator for tradeoff difficulty as well.
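The validity checks described above can be illustrated with a short sketch. It assumes the common definitions: AVE as the mean of the squared standardized loadings of a factor, Cronbach’s alpha computed from raw item scores, and the comparison of the square root of the AVE with the factor correlations (often called the Fornell-Larcker criterion). The function names and example values are hypothetical and are not taken from Table 2.

```python
import numpy as np

def ave(loadings):
    """Average variance extracted: mean of squared standardized loadings."""
    l = np.asarray(loadings, dtype=float)
    return float(np.mean(l ** 2))

def cronbach_alpha(items):
    """Cronbach's alpha for an (n_respondents, k_items) score matrix."""
    x = np.asarray(items, dtype=float)
    k = x.shape[1]
    item_var = x.var(axis=0, ddof=1).sum()   # sum of the item variances
    total_var = x.sum(axis=1).var(ddof=1)    # variance of the sum score
    return float(k / (k - 1) * (1.0 - item_var / total_var))

def discriminant_ok(ave_by_factor, factor_corr):
    """True if sqrt(AVE) of every factor exceeds its correlations with others."""
    roots = np.sqrt(np.asarray(ave_by_factor, dtype=float))
    corr = np.abs(np.asarray(factor_corr, dtype=float))  # new array, safe to edit
    np.fill_diagonal(corr, 0.0)  # ignore each factor's correlation with itself
    return bool(np.all(roots > corr.max(axis=1)))

# Made-up loadings for one factor and correlations between two factors.
print(ave([0.8, 0.9, 0.7]))
print(discriminant_ok([0.64, 0.49], [[1.0, 0.5], [0.5, 1.0]]))
```

Note that with ordinal indicators, as used here, the loadings come from the CFA itself; the alpha computation on raw scores is shown only to make the reported statistic concrete.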

3.3.3 Results

Before we construct a structural equation model (SEM) that shows how the measured constructs relate to each other and to the diversification manipulation, we first check if our diversification algorithm indeed results in different levels of attribute diversity.

3.3.3.1 Manipulation checks

To check our diversification algorithm, we compared the resulting diversity, predicted ratings and variance of the predicted ratings in our data (analyzed across list lengths). Table 3 shows that our diversification algorithm indeed increases the average range of the scores on the 10 matrix factorization features (AFSR, see Sect. 2.3). At the same time, the predicted average rating does not differ significantly between the three levels of diversification (differences are smaller than the standard error), showing that we manipulated diversity independent of (predicted) quality. The standard deviation of the predicted ratings for the three sets does increase slightly with increasing diversity.

3.3.3.2 Structural model

The subjective constructs from the CFA were organized into a path model using a confirmatory structural equation modeling (SEM, as suggested by Knijnenburg et al. 2012; Knijnenburg and Willemsen 2015) approach with repeated ordinal dependent variables and a weighted least squares estimator. In the resulting model, the subjective constructs are structurally related to each other and to the experimental manipulations (i.e. the OSAs list length and diversification level). The model was constructed based on the user-centric framework for user experience of recommender systems (Knijnenburg et al. 2012). For analysis, we followed the recommendations by Knijnenburg and Willemsen (2015), creating a saturated model (following the framework’s core pathway linking OSAs to EXP variables via SSAs) and then pruning non-significant effects from this model.

In the final model, the maximizer scale did not relate to any other variable, and was therefore removed from the analysis. The manipulation “list length” (whether participants were shown 5, 10, 15, 20 or 25 recommendations) also did not have a significant influence on the other variables, nor did it interact with diversification. The results are therefore collapsed over these conditions. We also did not observe any effect of the order in which the three sets were presented.

The final model had a good model fit (footnote 4) \((\upchi ^{2}(179) = 256.5\), \(\hbox {p }< 0.001\), CFI = 0.989, TLI = 0.987, RMSEA = 0.041, 90 % CI [0.030, 0.051]). Figure 5 displays the effects found in this model. Path coefficients in the final model are standardized. The medium and high diversification conditions are compared to the low diversification baseline condition: for arrows \((\hbox {A }\rightarrow \hbox { B})\) that originate in these conditions, the number denotes the estimated mean difference in B, measured in standard deviations, between the medium or high diversification list and the low diversification list. For all other arrows, the number denotes the standardized increase or decrease in B, given a one standard deviation increase or decrease in A. The number in parentheses denotes the standard error of this estimate, and the p-value below these two numbers denotes the statistical significance of the effect. As per convention, only effects with \(\hbox {p }<.05\) are included in the model.

To better understand the effects, we plotted the marginal effects of the medium and high diversification condition relative to low diversification condition on the subjective constructs in Fig. 6. Our diversification algorithm affects the perceived diversity in a seemingly linear fashion (see Fig. 6), with medium and high diversification resulting in significantly higher perceived diversity than the low diversification condition. Higher perceived diversity subsequently increases the perceived attractiveness of the recommendations. The medium diversification condition also has a direct positive effect on attractiveness, making medium diversification as attractive as the high diversification (and both are significantly more attractive than low diversification; see Fig. 6). There is also a direct effect of expertise, a personal characteristic, on attractiveness, showing that expert participants report higher perceived attractiveness.

In terms of tradeoff difficulty, we observe that this is significantly (and negatively) influenced by the high diversification condition, as well as a main effect of strength of preferences (consistent with the work of Chernev (2003) on ideal point availability). So both high diversification and a high self-reported strength of preferences cause people to experience lower tradeoff difficulty. The negative effect of diversification on tradeoff difficulty goes against the expectation (Scholten and Sherman 2006) that higher diversification leads to options that encompass larger tradeoffs between the attributes. Potentially, the high diversification setting of our algorithm does not generate items that encompass difficult tradeoffs, at least not in the specific domain of movies.

These constructs together influence the expected choice difficulty experienced by the user, which goes up with increased tradeoff difficulty, but goes down with increased diversity and attractiveness. The net result of our diversification manipulation on expected choice difficulty is negative: the higher the diversity of the set, the more attractive and diverse, and the less difficult participants expect it to be to choose from the set (the marginal effects in Fig. 6 suggest that choice difficulty decreases almost linearly with diversification level).

3.3.4 Discussion of study 1a results

The results of study 1a show how expected choice difficulty and tradeoff difficulty associated with a set of recommendations are influenced by the diversity of the items on the underlying latent features. This is a noteworthy result, as it shows that these latent features have a psychological meaning to the participant, providing support for our basic assumption that a parallel can be drawn between attribute spaces in regular decision making domains and the latent features in a matrix factorization space. By diversifying the items on these latent features, we increase the perceived diversity and attractiveness of the set, and subsequently reduce expected choice difficulty. Our net result thus is not a U-shaped relation between diversity and choice difficulty, but rather a simple downward trend.

Though intuitively one would expect an effect of list length on the perceived diversity or the experienced difficulty, we do not observe such an effect. Given that our diversification algorithm finds items that are maximally spaced out from each other on the latent features within a set of options with the same quality (predicted ratings), this might not be very surprising: when all items are good, diversification helps for both small and large sets. We would thus expect the effect of diversification to be roughly equal for different list lengths. Moreover, as the 5 different list lengths were manipulated between subjects, we have limited statistical power to detect small differences (footnote 5). Finally, in study 1a we did not ask participants to make a choice from the item sets. This might be one reason why no effect of list length on choice difficulty was observed, as the participants did not have to commit to any of the options.

Study 1a has established an effect of our diversification algorithm on perceived diversity and expected choice difficulty. In study 1b, we further investigated our diversification algorithm, but now we explicitly asked participants to choose an item from the list of recommendations, so that we could measure their actual choice difficulty as well as their satisfaction with the chosen item (as in Bollen et al. 2010).

3.4 Study 1b

3.4.1 Setup and expectations

The goal of study 1b was to measure the effect of diversification on users’ satisfaction with the chosen option by using an experimental design in which the participants choose an item from the presented set. Study 1b therefore allows us to measure to what extent actual (rather than perceived) choice difficulty might be reduced by diversifying a large recommendation set. Furthermore, we expected that even for small sets diversification might be beneficial: in Study 1a, the number of items did not seem to affect the perceived diversity, showing that diversification enhances the attractiveness of both large and small item sets. In Study 1b we wanted to find out whether such diversified small item sets can render large item sets obsolete. For non-personalized item sets, this would be a daunting task: it seems impossible to create a small set of items that would fit everyone’s needs. However, a personalized diversified small item set might be just as satisfying as a large set, since this set is tailored to the user. In that case, the additional benefits of more items may not outweigh the increased difficulty.

To accomplish these goals, we conducted a study in which we manipulate both diversity and item set size. Study 1a showed no detrimental effects of the high level of diversification due to large tradeoffs; this condition just showed stronger effects than medium diversification. In the current study we thus only include low and high levels of diversification, as this will show us the most pronounced effect on choice satisfaction. Besides varying the level of diversification, we also vary the number of items in the set on three levels, 5, 10 and 20 items. The extreme levels (5 and 20) are identical to those used by Bollen et al. (2010). As most literature on choice overload (e.g., Reutskaja and Hogarth 2009) predicts an inverted U-shaped relation between satisfaction and number of items, we explicitly included an intermediate level but we did not use 5 levels (as in study 1a) as this would require two additional (between-subjects) conditions and thus many additional participants. Different from study 1a, and to prevent spill-over effects from participants’ previous decisions, participants only received one list of recommendations and we manipulate both diversification (2 levels) and list length (3 levels) between subjects.

3.4.2 Research design and procedure

Participants for this study were gathered using the same online participant database as study 1a, but participants from study 1a were excluded from participation. Participants were compensated with 3 euro (about 4 US dollars) for participating. 87 participants completed the study (mean age: 29.0 years, sd: 8.91; 41 females and 46 males).

Like study 1a, study 1b consisted of three parts. Part 1 (measuring personal characteristics) and part 2 (asking for ratings to train the system) were identical to study 1a. In the third part participants received one set of recommendations, which, depending on the experimental condition, consisted of 5, 10 or 20 items that were of high or low diversification. See Table 5 for the number of participants in each condition. Recommended movie titles were presented list-wise, with the predicted rating (in stars and one-point decimal value) displayed next to the title. If the participant hovered over one of the titles, additional information appeared in a separate preview panel, identical to study 1a. In study 1b, however, we asked participants to choose one item from the list (the movie they would most like to watch) before proceeding to the final questionnaire. The final questionnaire presented 22 items, measuring the perceived diversity and attractiveness of the recommendations, the choice difficulty and participants’ satisfaction with the chosen item. Some items of these questionnaires were slightly reworded from study 1a (e.g., we reverse coded one item of the diversity scale to have a more balanced set of items). We did not measure tradeoff difficulty, because we did not require this aspect after finding in study 1a that the hypothesized U-shaped effect of diversity (which was hypothesized to result from a positive effect of diversity on tradeoff difficulty) did not hold (a negative effect of diversity on tradeoff difficulty was found instead).

3.4.3 Measures

After initial inspection of the process data, we excluded 9 participants who clearly put little effort into the experiment. These participants went through the entire experiment unrealistically quickly and inspected only 1 or 2 movies for a very short time (less than 500 ms) during the decision. The remaining analysis thus includes 78 participants.

The items in the questionnaires were submitted to a confirmatory factor analysis (CFA). The CFA used ordinal dependent variables and a weighted least squares estimator, estimating 7 factors. Items with low factor loadings, high cross-loadings, or high residual correlations were removed from the analysis. Factor loadings of included items are shown in Table 4, as well as Cronbach’s alpha and average variance extracted (AVE) for each factor. The values of AVE and Cronbach’s alpha are good, indicating convergent validity. The square roots of the AVEs are higher than any of the factor correlations, indicating good discriminant validity. In Table 4 we only report the 4 factors that we used in the final SEM. Maximization tendency, strength of preference and expertise showed loadings similar to study 1a. However, in the resulting SEM, none of these constructs significantly contributed to the model, except for a small effect of preference strength on perceived difficulty that did not affect the other relations in the model. This effect is left out because it does not contribute to our overall argument.

Besides these subjective measures we also included a behavioral variable representing the log-transformed total number of hovers users made on the movie titles. Hovering on a movie title was required to read the description of that movie. A large number of hovers means that participants switch back and forth (footnote 6) to compare different movies (to exclude accidental or transitional hovers, only hovers longer than 1 second were counted). This measure is a behavioral indicator of the amount of effort users put into making the decision. As discussed in the introduction, this measure reflects the objective effort a user puts into choosing, whereas the choice difficulty questions measure the cognitive effort of the user.
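The hover measure can be sketched in a few lines. The input format (a list of hover durations in seconds per participant) and the function name `log_hover_count` are assumptions for illustration. The use of log(1 + n), which keeps the measure defined for participants without any counted hover, is likewise an assumption; the exact transformation is not specified here.

```python
import math

def log_hover_count(hover_durations_s, min_duration_s=1.0):
    """Log-transformed number of hovers longer than min_duration_s seconds."""
    # Drop accidental or transitional hovers (1 second or shorter).
    n = sum(1 for d in hover_durations_s if d > min_duration_s)
    # Assumption: log(1 + n) so that n = 0 does not produce log(0).
    return math.log1p(n)

# Made-up hover log of one participant: two hovers pass the threshold.
example_hovers = [0.2, 1.5, 3.0, 0.9]
print(log_hover_count(example_hovers))
```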

3.4.4 Results

3.4.4.1 Manipulation check

For completeness, we checked again if the diversification algorithm impacts the actual diversity of the latent features without affecting the predicted ratings. Higher diversity indeed causes a significantly higher average feature score range (AFSR; see Table 5, differences much larger than the standard error of the mean), while the average predicted rating of the items remains equal between the two diversity levels (differences smaller than standard error of the mean). As in study 1a, we do observe a slight increase in the standard deviation of the predicted ratings with increasing diversity.

3.4.4.2 Structural model

The subjective constructs from the CFA (see Table 4) were organized into a path model using a confirmatory SEM approach with ordinal dependent variables and a weighted least squares estimator. In the resulting model, the subjective constructs are structurally related to each other, to the hover measure and to the experimental manipulations (list length and diversification level). As we already noted, the maximizer scale, strength of preference, and expertise did not relate to any other variable, and were therefore removed from the analysis. The final model had a good model fit (\(\upchi ^{2}(167) = 198.9\), p \(=\) 0.046, CFI \(=\) 0.99, TLI \(=\) 0.99, RMSEA \(=\) 0.050, 90 % CI [0.007, 0.074]). Figure 7 displays the effects found in this model.
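As a worked illustration of how the reported fit indices relate to each other, the RMSEA point estimate can be approximated from the chi-square statistic, its degrees of freedom and the sample size. The sketch below uses the common textbook formula and assumes N = 78 (the number of participants retained in Sect. 3.4.3); SEM software may use a variant of this formula for weighted least squares estimators, so this is a plausibility check rather than a reproduction of the analysis.

```python
import math

def rmsea(chi2, df, n):
    """Point estimate: sqrt(max(chi2 - df, 0) / (df * (n - 1)))."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))

# Values reported for the final model of study 1b; N = 78 is an assumption.
estimate = rmsea(chi2=198.9, df=167, n=78)
print(round(estimate, 3))
```

Under these assumptions the estimate is approximately 0.050, in line with the reported value.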

The model shows that choice satisfaction increases with perceived attractiveness and perceived diversity, but decreases with the experienced choice difficulty and the number of hovers made. The two manipulations (diversity and list length) are represented by the two purple OSA boxes at the top of the display. The three levels of list length are tested simultaneously as well as separately (footnote 7). As expected, longer lists (the 10- and 20-item sets) increase the number of hovers, as well as the choice difficulty. They also have a direct positive effect on satisfaction compared to the 5-item set. Finally, there is an interaction effect between diversification and list length on perceived diversity: high diversification of the items increases the perceived diversity (perceived diversity is 1.08 higher in the high diversity condition than in the low diversity condition; see footnote 8), but only for small item sets, as this main effect is attenuated by an interaction with larger sets (–1.252 for 10 items and –0.864 for 20 items). The interaction effects of our two manipulations can best be understood by looking at the marginal effects of these conditions on perceived diversity, as presented in Fig. 8. Only the high-diversity 5-item set is perceived to be more diverse; all other sets hardly differ in perceived diversity from each other and from the baseline (low diversity, 5 items). In other words, in larger item sets participants do not perceive the effect of diversification. We will elaborate on this effect in the next section.
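The attenuation described above follows directly from adding the interaction coefficients to the main effect. The short calculation below uses only the three coefficients reported in the text; the variable names are hypothetical, and the calculation ignores the standard errors, so it shows the size but not the significance of the net effects.

```python
# Effect of high (vs. low) diversification on perceived diversity,
# estimated for the 5-item baseline.
main_effect_high = 1.08

# Interaction of high diversification with list length (5 items is the baseline).
interaction = {5: 0.0, 10: -1.252, 20: -0.864}

# Net effect of high diversification within each list length.
net_effect = {n: round(main_effect_high + coef, 3)
              for n, coef in interaction.items()}
print(net_effect)
```

The net effect of diversification is thus 1.08 for the 5-item set, but only about -0.17 for the 10-item set and about 0.22 for the 20-item set, which is why only the diversified 5-item set stands out.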

For perceived attractiveness we see similar results. Attractiveness is higher when diversity is perceived to be higher, and therefore only the highly diverse 5-item set has a significantly higher attractiveness (see Fig. 8) compared to the low diverse 5-item set.

In our model choice difficulty is a function of perceived attractiveness, perceived diversity and the length of the list. Consistent with the literature, difficulty is higher in larger and more attractive item sets. Both the 10- and 20-item lists are significantly more difficult to choose from than the 5-item list; the effect on choice difficulty shows that this difficulty is not just objective effort, but also cognitive effort. Like in study 1a we observe a negative effect of diversity on difficulty: controlling for perceived attractiveness and list length, lists that are perceived to be more diverse are perceived to be less difficult to choose from. In total, for both low and high diversity, the difficulty increases monotonically with list length, and we see that difficulty is on its lowest level for the highly diverse 5-item set (see Fig. 8).

Finally, we investigate the resulting choice satisfaction. There is a direct effect of set size on satisfaction, showing higher satisfaction with longer lists. However, satisfaction is also influenced positively by perceived attractiveness and diversity, and negatively by higher choice difficulty and increased hovers. Note that the effect of hovers is independent of choice difficulty: the number of hovers increases with list length, and the more hovers people make, the lower their satisfaction. So both the objective effort (hovers) and the cognitive effort (choice difficulty) incurred by longer lists reduce satisfaction.

The marginal effects on satisfaction (Fig. 8) reveal that 10- and 20-item sets are perceived as more satisfying than the non-diversified 5-item set, but that there are no significant differences between the 10- and 20-item sets. The most interesting result is that the highly diverse 5-item set stands out, because it is perceived to be as satisfying as the 10- and 20-item sets. Our model also explains why: the diversified 5-item list excels in terms of perceived diversity and attractiveness, while at the same time being less difficult to choose from. These three effects completely offset the direct negative effect of short list length on satisfaction. Note that the study does not reveal a choice overload effect, as we do not observe that longer item lists are less satisfying than shorter ones (despite the increased choice difficulty).

3.4.5 Discussion of study 1b results

Study 1b reveals an important role of diversity and list length on the choice satisfaction of decision makers using a recommender system. Consistent with Study 1a we see that diversification increases the attractiveness of an item set, while at the same time reducing the choice difficulty. Together these effects can increase the satisfaction with the chosen item, but we observe this effect for small item sets only; diversification does not seem to matter much for larger item sets, as these sets are satisfying even with low diversification.

Unlike study 1a, where diversification increased perceived diversity regardless of set size, we now observe that diversification increases perceived diversity in small sets only. This limited effect in study 1b is not entirely surprising, because study 1b differs from study 1a in many aspects. Most importantly, in study 1a we asked participants to carefully inspect the entire set of items before asking them about their perceptions of the list. In study 1b, on the other hand, we asked them to choose from the set, without asking them explicitly to inspect the entire list. This means that participants might have stopped inspecting the set earlier (i.e., they may have ignored some items altogether), and that they are therefore less able to assess its overall diversity, especially for the larger sets. Inspecting the hovers corroborates our expectation that participants behave differently: in study 1a the percentages of unique acquisitions are relatively high; between 80 and 90 % of the items are actually looked at. These numbers do not differ much between 5, 10 and 20 items. In study 1b the percentages of unique acquisitions drop substantially with increasing list length, from 80 % in the 5-item set to only 45 % in the 20-item set. In other words, if the participant is asked to choose (rather than to inspect), she is likely to inspect fewer items, and might therefore have less opportunity to get a good impression of the overall diversity of the set. The resulting assessment on the diversity scale might therefore regress towards the middle, showing lower values for the 10- and 20-item sets compared to the 5-item set.
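The percentage of unique acquisitions referred to above can be computed from the hover log as the share of list items that were inspected at least once. The sketch below assumes that each hover is logged with the identifier of the hovered item; the function name and the example logs are hypothetical.

```python
def unique_acquisition_pct(hovered_item_ids, list_length):
    """Percentage of the items in the list that were inspected at least once."""
    return 100.0 * len(set(hovered_item_ids)) / list_length

# Made-up hover logs: repeated hovers on the same item count only once.
small_list_log = [3, 1, 3, 7, 1, 9]             # 4 distinct items out of 5
large_list_log = [0, 4, 4, 8, 2, 11, 15, 17, 19, 6]  # 9 distinct items out of 20

print(unique_acquisition_pct(small_list_log, 5))
print(unique_acquisition_pct(large_list_log, 20))
```

The example values were chosen to mirror the reported pattern, with a much lower share of inspected items in the longer list.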

Consistent with previous research (Bollen et al. 2010), our model shows that satisfaction with the chosen item is an interplay of choice difficulty and attractiveness. However, unlike Bollen et al., we also observe a direct effect of perceived diversity on satisfaction, and a direct effect of list length on satisfaction. These effects might explain why we do not observe a choice overload effect in this study, as people seem to derive inherent satisfaction from choosing from longer lists, which outweighs the difficulty of choosing from such lists.

In terms of attractiveness and satisfaction, item sets with a length of at least 10 seem to be optimal when creating a recommender system. However, this study shows that small but diverse item sets can be just as satisfying as larger sets, and more importantly, that they result in lower choice difficulty. Diverse small sets may thus be the best thing one could offer a user of a recommender system.

3.5 Overall discussion of study 1

The goal of study 1 was primarily to show that a diversification based on psychological principles can be effective in enhancing subjective experiences with a recommender system. The two experiments of study 1 clearly show that latent feature diversification can reduce choice difficulty and, especially for short item lists, increase choice satisfaction. However, the precise experimental control we enforced in study 1 has a drawback for more practical applications. Most importantly, in study 1 we diversified our item set from the centroid of the Top-200 latent feature space. This allowed us to attain maximal diversity while controlling for the average quality in each list, but it also implied that our lists did not excel in terms of predicted accuracy, as they did not necessarily include items with the highest predicted ratings. So although we show that diversified lists are more attractive, less difficult and in some cases more satisfying, we do not yet know whether such diversified lists can hold up against standard lists such as the Top-N lists that exclusively present the items with the highest predicted rating, as typically provided by recommender algorithms.

For a practical test, we need to adapt our diversification algorithm so that it can trade off the most accurate/relevant items against the most diverse items. This allows for a realistic comparison against the pure Top-N lists that are pervasive in today’s recommender systems research and applications. In the next section we discuss this adaptation to our diversification approach in detail, and compare it against the centroid diversification approach used in study 1. We then present study 2, a study similar to study 1b, in which we hypothesize that latent feature diversification can hold up against Top-N recommendations (by reducing choice difficulty and increasing attractiveness and satisfaction), even though the recommended items have lower predicted ratings than undiversified Top-N lists.

4 Diversification versus Top-N

Because the diversification in study 1 was started from the centroid of a Top-N set, there was no guarantee that the items with the highest predicted rating were included in the actual recommendation set. This raises the question of how diversification compares to a ‘standard’ Top-N recommendation list.

To make this comparison, the diversification algorithm needs to be altered slightly. For a direct comparison to Top-N, the diversification process needs to start from the item with the highest predicted rating instead of the item closest to the centroid of the Top-200. To make sure that this alteration did not reduce the effectiveness of the diversification process, its consequences in terms of AFSR were studied by performing a simulation. For 100 randomly selected users from the MovieLens dataset, AFSR was calculated for recommendation sets that started either from the item with the highest predicted rating (Top-1) or from the centroid. Rather than a decreased AFSR, Table 6 even shows a marginal increase in AFSR when starting from the top predicted item rather than the centroid, indicating that starting from the top does not result in lower diversity than the diversification method starting from the centroid employed in studies 1a and 1b. Thus, we can safely assume that the high diversification condition created in study 2 (where we start from Top-1) is similar to the high diversification condition used in study 1 (where we started from the centroid).

As study 2 aims to investigate the effect of different levels of diversification compared to ‘standard’ Top-N recommendations, we needed a method to vary the level of diversification along a scale ranging from ‘standard’ Top-N recommendations (no diversification) to recommendations with high diversification, which, for a better comparison, start from the item with highest predicted rating (Top-1). This was done by introducing a weighting parameter \(\upalpha \) (similar to Ziegler et al. 2005), that controls the relative importance of Top-N ranking versus diversity.

At every iteration in the diversification, all candidate items are ranked on two criteria: 1) the distance of this item to all other items in the recommendation set, and 2) the predicted rating. The weighting factor \(\upalpha \) defines the relative weight that is given to either ranking (\(\upalpha \) for the diversification ranking and \(1-\upalpha \) for the predicted rating ranking), resulting in a weighted combined ranking. This allows for a continuous range of diversification, where \(\upalpha =0\) results in the Top-N recommendation set (diversity is not considered at all), \(\upalpha =1\) results in the high diversity recommendation set (predicted rating is not considered at all), and any value in between results in a mix of diversity and predicted rating.
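The weighted ranking procedure can be illustrated with a minimal Python sketch. It is not the implementation used in the studies: the function names, the toy data, the city-block distance summed over the items already selected, and the tie-breaking rules are all assumptions made for illustration. Only the overall scheme (start from Top-1, rank candidates on distance and on predicted rating, combine the two ranks with weights \(\upalpha \) and \(1-\upalpha \)) follows the description above.

```python
from typing import Dict, List, Sequence

Vector = Sequence[float]


def city_block(a: Vector, b: Vector) -> float:
    """City-block (L1) distance; the choice of metric is an assumption."""
    return sum(abs(x - y) for x, y in zip(a, b))


def rank_desc(scores: Dict[str, float]) -> Dict[str, int]:
    """Map each item to its rank (0 = highest score); ties broken by item id."""
    ordered = sorted(scores, key=lambda item: (-scores[item], item))
    return {item: position for position, item in enumerate(ordered)}


def diversify(features: Dict[str, Vector], ratings: Dict[str, float],
              n: int, alpha: float) -> List[str]:
    """Greedy selection of n items, starting from the Top-1 item.

    alpha = 0 reproduces the Top-N list; alpha = 1 ignores predicted rating
    after the first item and only maximizes distance to the selected set.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    candidates = set(ratings)
    first = min(candidates, key=lambda item: (-ratings[item], item))
    selected = [first]
    candidates.remove(first)
    while candidates and len(selected) < n:
        # Criterion 1: summed distance to the items already in the set.
        distance = {c: sum(city_block(features[c], features[s]) for s in selected)
                    for c in candidates}
        diversity_rank = rank_desc(distance)
        # Criterion 2: predicted rating.
        rating_rank = rank_desc({c: ratings[c] for c in candidates})
        combined = {c: alpha * diversity_rank[c] + (1 - alpha) * rating_rank[c]
                    for c in candidates}
        # Lowest combined rank wins; ties fall back to predicted rating.
        best = min(candidates, key=lambda c: (combined[c], rating_rank[c], c))
        selected.append(best)
        candidates.remove(best)
    return selected


# Hypothetical two-dimensional latent feature scores and predicted ratings.
features = {"a": (0.0, 0.0), "b": (0.1, 0.0), "c": (0.2, 0.1),
            "d": (3.0, 3.0), "e": (-3.0, 2.0)}
ratings = {"a": 4.9, "b": 4.8, "c": 4.7, "d": 4.0, "e": 3.9}

top_n = diversify(features, ratings, n=3, alpha=0.0)
high_diversity = diversify(features, ratings, n=3, alpha=1.0)
```

In this toy example, \(\upalpha =0\) returns the three highest-rated items, whereas \(\upalpha =1\) keeps the Top-1 item and then adds the two items that lie farthest from the set in the feature space. Combining ranks rather than raw scores keeps the two criteria on the same scale, so that \(\upalpha \) does not depend on the units of the distance or the rating.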

Whereas in study 1b we only used two levels of diversification, in this study at least three levels of diversification are needed to test whether a pure Top-N \((\upalpha =0)\), a pure diversification \((\upalpha =1)\) (see footnote 9), or a mix of both is preferred. For this latter “medium diversity” condition, we try to balance average predicted rating and AFSR, as taking diverse items comes at the expense of items with high predicted ratings. In order to find the right point in this trade-off, AFSR and average rating were plotted for several levels of \(\upalpha \) in Fig. 9a–d. These figures show for AFSR an inflection point at \(\upalpha =0.3\) (see Fig. 9a, b), indicating that the highest relative gain in AFSR occurs going from \(\upalpha =0\hbox { to }\upalpha =0.3\). Fig. 9c, d show linearly decreasing average ratings for increasing \(\upalpha \). These findings hold for both short (left panels) and long (right panels) recommendation lists.

Based on these findings, the optimal \(\upalpha \)-level for the medium diversification condition was determined to be 0.3. The mean AFSR and average predicted rank (the position of the recommended item in a list ordered on decreasing predicted rating) of the resulting recommendation sets are displayed in Table 7 for recommendation lists of 5 and 20 items. The table shows that the medium diversification condition lies approximately halfway between the high and low diversification condition in terms of AFSR. We also inspect the average rank, which is more informative than the mean rating as we want to know how close the new medium lists are to the Top-N list. The average rank of the medium diversification list is still quite low (close to the Top-N), showing a nice balance between AFSR and rank (i.e. predicted rating) for this medium diversification.
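The two summary measures used in this comparison can be sketched in a few lines of Python. The sketch assumes that AFSR is computed as the range (maximum minus minimum) of the item scores on each latent feature, averaged over features; this should be checked against the definition given earlier in the paper. The average predicted rank follows the description above: the position of each recommended item in the list ordered on decreasing predicted rating, averaged over the set. All names and data are hypothetical.

```python
from typing import Dict, List, Sequence


def afsr(selected: List[str], features: Dict[str, Sequence[float]]) -> float:
    """Mean per-feature score range within the set (assumed AFSR definition)."""
    vectors = [features[item] for item in selected]
    # zip(*vectors) yields one tuple of scores per latent feature.
    ranges = [max(column) - min(column) for column in zip(*vectors)]
    return sum(ranges) / len(ranges)


def average_predicted_rank(selected: List[str], ratings: Dict[str, float]) -> float:
    """Mean 1-based position of the selected items in the rating-ordered list."""
    ordered = sorted(ratings, key=lambda item: (-ratings[item], item))
    position = {item: index + 1 for index, item in enumerate(ordered)}
    return sum(position[item] for item in selected) / len(selected)


# Hypothetical latent feature scores and predicted ratings for five items.
features = {"a": (0.0, 0.0), "b": (0.1, 0.0), "c": (0.2, 0.1),
            "d": (3.0, 3.0), "e": (-3.0, 2.0)}
ratings = {"a": 4.9, "b": 4.8, "c": 4.7, "d": 4.0, "e": 3.9}

top_n_list = ["a", "b", "c"]    # the three highest-rated items
diverse_list = ["a", "d", "e"]  # Top-1 plus two distant items

summary = {
    "top_n": (afsr(top_n_list, features), average_predicted_rank(top_n_list, ratings)),
    "diverse": (afsr(diverse_list, features), average_predicted_rank(diverse_list, ratings)),
}
```

For a Top-N list of \(n\) items the average predicted rank is always \((n+1)/2\), the lowest value possible, so the distance of a diversified list from that value indicates how far it has moved away from the Top-N.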

5 Study 2

5.1 Setup and expectations

Study 2 investigates the trade-off between conventional Top-N sets and diversified recommendation sets for small and larger item sets. For this purpose, study 2 manipulated diversification on three levels and list length on two levels. For diversification, we compared non-diversified Top-N lists with highly diversified lists similar to the high diversification condition from Study 1, and one medium diversification list that trades off accurate Top-N lists with diverse lists, as described in Sect. 4. For item set size we used 5 and 20 items, the two outer levels of study 1b. We did not include the 10-item lists, since there were no significant differences between 10 versus 20 items in the previous studies. Additionally, this allowed us to limit the number of between-subjects conditions to 6 (3 \(\times \) 2).

We expect that despite the fact that diversified lists in study 2 contain theoretically less attractive items than Top-N lists, such diverse lists will be perceived to be less difficult to choose from, as study 1 showed strong effects of diversification on choice difficulty. Both experiments in study 1 also showed that diversified lists were perceived to have a higher quality. However, in study 1 we controlled for equal attractiveness between diversified lists, whereas in study 2 we exert less control over the average predicted rating in order to compare against Top-N lists. By design, the Top-N lists will have items with higher predicted ratings than the diversified lists, which select items with a lower predicted rating (ranking) in order to improve diversity. So for study 2 we do not have strong predictions about the effect of diversity on perceived attractiveness, as on the one hand the Top-N lists should be theoretically the most attractive (highest predicted rating) but more diverse lists were perceived to be attractive in study 1. As choice satisfaction is an interplay between difficulty and perceived attractiveness, we expect that diversification will overcome the difficulty associated with choosing from high-quality item lists, so that in the end diversified lists, despite having lower predicted ratings, might be as satisfactory or even more satisfactory to choose from than traditional Top-N lists. Study 1b also showed that the effect of diversification was more pronounced for small item sets. Similarly, we expect to find a more positive effect of diversification for short lists in Study 2.

5.2 Research design and procedure

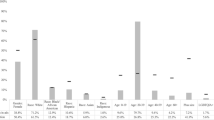

Participants for this study were gathered using the JFS participant database from our department, a different database with different participants from those in study 1. Participants were compensated through a lottery that paid 15 euro to 1 out of every 5 participants (an expected return of 3 euro). 165 participants completed the study (mean age: 25.6 years, SD \(=\) 9.8, 59 % male). After initial inspection of the data, 6 participants were excluded because they clearly put little effort into the experiment (short response times, inconsistent responses in the survey).

The procedure of study 2 was similar to study 1b, with a few minor changes. Because study 1b did not find any effects of the maximization scale, we dropped this scale from the experiment. The remaining items measuring personal characteristics (i.e., preference strength and movie expertise) were moved to after the choice task, as part of the larger survey evaluating diversity, attractiveness, difficulty and satisfaction. Study 2 thus consisted of two parts. Participants started by rating at least 15 items (see footnote 10), using the same movie recommender system as in study 1. In the second part, participants received a set of recommendations, which, depending on the experimental condition, consisted of 5 or 20 movie titles that were of high, medium or no (Top-N) diversification (the number of participants per condition is given in Table 9). The predicted ratings were displayed next to the movie titles. If the participant hovered over one of the titles, additional information appeared in a separate preview panel, identical to study 1. Participants were asked to choose one item from the list before proceeding to the final questionnaire. The final questionnaire presented 36 questions measuring the perceived diversity and attractiveness of the recommendations, the choice difficulty, and participants’ satisfaction with the chosen item, as well as preference strength and movie expertise, and a new set of questions that measured familiarity with the movies (which was included as an additional control, as Top-N lists might contain more popular/familiar movies).

5.3 Measures