Abstract

Metacognitive skills have been shown to be strongly associated with academic achievement and serve as the basis of many therapeutic treatments for mental health conditions. Thus, it is likely that training metacognitive skills can improve academic skills as well as health and well-being. Because metacognition is an awareness of one’s own thoughts and, as such, is not directly observable, it is often measured by self-report. This study reviews and critiques the use of self-report in evaluating metacognition by conducting systematic reviews and a meta-analysis of studies assessing metacognitive skills. Keyword searches were performed in EbscoHost, ERIC, PsycINFO, PsycArticles, Scopus, Web of Science, and WorldWideScience.org to locate all articles evaluating metacognition through self-report. In total, 24,396 articles published from 1982 through 2018 were screened for inclusion in the study. First, a systematic review of 22 articles was conducted to assess the ability of self-report measures to evaluate a proposed taxonomy of metacognition. Second, a systematic review and meta-analyses of 37 studies summarize the ability of self-report to relate to metacognitive behavior and the possible effects of differences in research methods. Results suggest that self-reports provide a useful overview of two factors: metacognitive knowledge and metacognitive regulation. However, the metacognitive processes measured by self-report subscales are unclear. Conversely, the two factors of metacognition do not adequately relate to metacognitive behavior, but subscales strongly correlate across self-reports and metacognitive tasks. Future research should carefully consider the role of self-reports when designing research evaluating metacognition.


Importance.

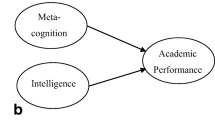

Flavell (1979) was the first to use the term metacognition. He defined it as “thinking about thinking” and described metacognition as one’s awareness and understanding of one’s own and others’ thoughts. Since then, a variety of interpretations of and adjustments to Flavell’s original definition have been made. Currently, most researchers subscribe to the notion that metacognition involves processes that monitor and increase the efficiency of cognitive procedures (Akturk and Sahin 2011; Bonner 1998; Van Zile-Tamsen 1996). In other words, metacognition encapsulates an awareness of one’s own learning and comprehension, the capacity to evaluate the demands of a task and subsequently choose the appropriate strategy for task completion, the ability to monitor one’s progress towards a goal and adjust strategy usage, the ability to reflect on one’s decision-making process, and the ability to discern the mental states of others (Beran 2012; Flavell 1979; Lai 2011). Metacognition, then, is essential for learning, and training metacognitive skills has been repeatedly shown to increase academic achievement (e.g. Brown 1978; Bryce et al. 2015; Flavell 1979; Perry et al. 2018; van der Stel and Veenman 2010; van der Stel and Veenman 2014; Veenman and Elshout 1994; Veenman and Spaans 2005; Wang et al. 1993). Furthermore, therapies grounded in metacognition have been successful in treating people with mental health conditions (Wells 2011).

Because metacognition is defined as an awareness of one’s own thought processes and, as such, is not easily observed, it is difficult to measure. The most cost-effective and efficient way to evaluate metacognitive skills is through a self-report questionnaire. Currently, no self-report questionnaire is considered the industry standard. Instead, there is a wide range of questionnaires that measure a variety of components of metacognition (see Table 1 for a complete list of the evaluated self-reports). Employing such a wide range of self-report assessments, each evaluating different metacognitive components, results in an inconsistent understanding of the concept of metacognition and may affect how lay personnel, such as teachers and therapists, work directly with the metacognitive skills of those in their care. Therefore, the aim of this work is to critique the value of self-reports in metacognitive research by summarizing their ability to measure metacognition in two interrelated but distinct reviews:

1) a systematic review of the entire body of metacognitive literature that evaluates whether self-report can adequately measure the distinct components of metacognition being assessed by the researcher’s purported taxonomy

2) a separate systematic review and meta-analysis that analyzes the ability of self-report to adequately measure all aspects of purported taxonomies and the ability of self-report scales to relate metacognitive components to metacognitive behavior.

To our knowledge, this is the first systematic review and meta-analysis to comprehensively investigate the use of self-report measures and their utility as a valid measure of distinct metacognitive components.

This review and meta-analysis were conducted and reported in accordance with the Preferred Reporting Items for Systematic Reviews and Meta-Analyses (PRISMA) statement (Moher et al. 2009). Because neither the systematic review nor the meta-analyses were medical in nature or investigated interventions, published scales for assessing risk of bias were not applicable. Consequently, bias was assessed following The Cochrane Collaboration’s (2011) recommendation of a domain-based evaluation.

Study 1: Systematic review: Can self-report assess distinct components of metacognition?

Introduction

Flavell’s original theory and definition

Metacognition is widely used as an “umbrella term” to refer to a range of different cognitive processes, all of which crucially involve forming a representation of one’s own mental states and/or cognitive processes. Whilst Flavell (1979) originally proposed a taxonomy of metacognition (Fig. 1), a range of other taxonomies are used within the field (e.g. Brown 1978; Pedone et al. 2017; Schraw and Dennison 1994). This has resulted in a wide variety of self-report questionnaires being used within the field, many of which are based on different taxonomies of metacognition. Flavell’s (1979; Fig. 1) original theory divides metacognition into four areas: metacognitive knowledge, metacognitive experiences, goals, and actions. Metacognitive knowledge refers to the knowledge one has gained regarding cognitive processes, both in oneself and in others. Metacognitive experiences describe the actual usage of strategies to monitor, control, and evaluate cognitive processes. For example, knowing study strategies would be metacognitive knowledge, whereas using a strategy while studying would exemplify a metacognitive experience. Flavell (1979) also subdivides metacognitive knowledge into three areas: person, task, and strategy. Knowledge of person is the understanding of one’s own learning style and methods of processing information, as well as a general understanding of humans’ cognitive processes. The understanding of a task, as well as its requirements and demands, is designated as knowledge of task. Lastly, knowledge of strategy includes the understanding of strategies and the manner in which each strategy can be employed (Livingston 1997). The remaining two areas of Flavell’s description of metacognition are goals (one’s intentions when completing a cognitive task) and actions (the behaviors or cognitive functions engaged in fulfilling a goal). Because actions are generally cognitive tasks, they are rarely addressed in more recent metacognitive theories, as they blur the necessary divide between cognitive and metacognitive activities.

Flavell’s (1979) proposed taxonomy of metacognition

Modifications to Flavell’s taxonomy

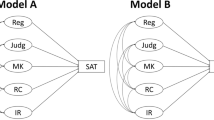

From Flavell’s pioneering work, many other theories of metacognition have been posited. Brown (1978) divided metacognition into knowledge of cognition (KOC) and regulation of cognition (ROC) and referred to subcomponents of regulation such as planning, monitoring, and evaluating, or reflecting. Much like Flavell’s theory, Brown’s (1978) two factors comprise an understanding of one’s ability to learn and remember (KOC) and one’s ability to regulate one’s learning and memory (ROC). Paris and colleagues (1984) took Brown’s model and divided knowledge of cognition into declarative, procedural, and conditional knowledge. Again, similar to Flavell, these subcomponents refer to one’s knowledge of one’s own processing abilities (declarative), ability to solve problems (procedural), and knowledge of when and how to use specific strategies (conditional). Schraw and Dennison (1994; Fig. 2) further refined this model by adding information management and debugging to planning, monitoring, and evaluation as subcomponents of regulation of cognition.

Schraw and Dennison’s (1994) proposed structure of metacognition

Additional taxonomies

In contrast, some researchers view metacognition in terms of self versus other skills (Pedone et al. 2017; Semerari et al. 2012). In other words, they separate metacognitive awareness and understanding of one’s own thoughts and actions from awareness and understanding of others’ thoughts and actions. Thus, subcomponents of self include monitoring and integrating, and subcomponents of others are defined as differentiating and decentring. Some researchers posit a third factor of metacognitive beliefs or attributions (Desoete et al. 2001) in addition to KOC and ROC. This factor encompasses individuals’ attributions of their failures and successes, for example citing poor instructions as a reason for failure. However, it is debated whether attribution can be considered a true metacognitive process, and some researchers define it as an aspect of motivation rather than metacognition. Still other taxonomies build on those mentioned above by making slightly different distinctions, identifying more subcomponents, eliminating some subcomponents, and/or modifying the factors (see Pena-Ayala and Cardenas 2015 for a full comparison of all models of metacognition). Clearly, there is a lack of consensus regarding a theoretical organization of metacognition, and available self-report questionnaires reflect this lack of consensus. A review of statistical representations of the structure of metacognitive self-reports may bring some clarity to this theoretical debate.

Methods

Searches and reviews were conducted in June and July of 2018 using EbscoHost, ERIC, PsycArticles, PsycINFO, Scopus, Web of Science, WorldWideScience.org, and bibliography reviews. The PRISMA chart in Fig. 3 details the searches as well as the inclusion and exclusion of papers. An initial search of all years of publication for the terms model, factor analysis and the various forms of metacognition (metacognition, metacognitive, meta-cognition) was conducted. To evaluate a generalizable structure of metacognition, participants must represent the general population. Therefore, articles were included only if:

- they were from a peer-reviewed journal or a chapter in a published book of articles
- they statistically evaluated metacognition in the general population
- the questionnaire used was widely applicable and not for a specific subset (thus research conducted in a mathematics class was included if the measures of metacognition were widely applicable and not specific to numeracy)

Articles were excluded if:

- participants had a condition or disability (e.g. schizophrenia, Parkinson’s disease, learning disability)
- the questionnaire used was built for a specific subset of the population (e.g. patients, firefighters, chemistry students)
- the questionnaire used went beyond the scope of metacognition (e.g. included motivation or memory as part of the scales)
- the article could not be obtained in English.

If an article was in another language or could not be located, the authors of the research were contacted and a copy of the article in English was requested. Thanks to responses from authors, only two articles were eliminated due to language barriers.

Thus, after a title search, 170 articles were further reviewed. Fifty-five articles were excluded as duplicates, and another 65 were excluded based on analysis of the article abstracts using the inclusion and exclusion criteria. The remaining 50 full articles were read, and 28 more were excluded (see Fig. 3 for an itemized exclusion record with justification). A table was created to encapsulate the following data from each article: authors and year, evaluated structure as measured by questionnaire scales or confirmatory factor analysis, measures employed, narrative results, statistical analysis, and any items of note (see Table 1). Each of the 22 remaining articles was reviewed for statistical analysis of internal consistency, validity, and fit indices. Measures were reviewed to ensure they were evaluating only metacognition. Finally, participants were reviewed to ensure compliance with inclusion and exclusion criteria and to note possible drawbacks with participant pools.
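The screening counts reported above can be checked with a few lines of arithmetic. The sketch below is illustrative only. The stage labels are our own shorthand rather than the wording used in Fig. 3, and the helper `run_prisma_flow` is hypothetical. It simply subtracts each reported exclusion from the running total.

```python
# Screening counts for Study 1 as reported in the text. The stage labels are
# illustrative shorthand, not the labels used in Fig. 3.
screening_stages = [
    ("excluded as duplicates", 55),
    ("excluded after abstract review", 65),
    ("excluded after full-text review", 28),
]


def run_prisma_flow(start: int, stages: list[tuple[str, int]]) -> int:
    """Hypothetical helper: subtract each stage's exclusions and report what remains."""
    remaining = start
    for label, excluded in stages:
        remaining -= excluded
        if remaining < 0:
            raise ValueError(f"more exclusions than records at stage: {label}")
        print(f"{label}: -{excluded} -> {remaining} remaining")
    return remaining


included = run_prisma_flow(170, screening_stages)
print(f"articles included: {included}")  # prints: articles included: 22
```

Running the flow stage by stage (170 to 115 to 50 to 22) shows that the reported exclusions account exactly for the 22 included articles.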

Results

Two-factor structure

In total, 22 articles spanning 25 years (1993–2018) of research were included (Table 1). All 22 articles evaluated the structure of metacognition using a self-report questionnaire, self-report through an interview, or a task that included self-report questions. Twelve of the articles employed either confirmatory factor analysis (CFA) or exploratory factor analysis (EFA) on the same measure: the Metacognitive Awareness Inventory (MAI; Schraw and Dennison 1994). The remaining ten examined the factor structures proposed by the Metacognition in Multiple Contexts Inventory (MMCI), Metacognitive Skills Scale (MSS), Awareness of Independent Learning Inventory (AILI), the state form of a measure of metacognition as state and trait, Metacognition Self-Assessment Scale (MSAS), COMEGAM-ro, Metacognition Assessment Interview (MAI), Metacognition Scale (MS), and the Turkish Metacognitive Inventory. Of the 22 studies, 10 confirmed, either through factor analysis or theoretical reasoning, the existence of two overarching factors: a measure of metacognitive knowledge (Knowledge of Cognition or Metacognitive Knowledge; henceforth KOC) and a measure of metacognitive regulation (Regulation of Cognition or Metacognitive Experiences; henceforth ROC; see Table 1 and Figs. 1 and 2). The MS questionnaire (Yildiz et al. 2009) first loaded on 6 factors, but the researchers could not adequately name the factors based on item loadings. Therefore, the items were adjusted and finally loaded on the 8 subfactors defined by Schraw and Dennison (1994; Fig. 2). The Turkish and Persian versions of the MAI (Akin et al. 2007; Pour and Ghanizadeh 2017) loaded onto Schraw and Dennison’s 8 subcomponents. Schraw and Dennison’s taxonomy defines metacognition as a two-factor structure of KOC and ROC with 8 subcomponents, and their MAI loads consistently on KOC and ROC as factors. Thus, it is likely that all three of these studies would also load on KOC and ROC. In total, then, 13 studies support a two-factor structure of metacognition separating knowledge from regulation.

Three-factor structure

In contrast, the AILI (Meijer et al. 2013) yielded three factors. Their wide applicability was established using the generalizability coefficient G, and the measure was validated by correlating it with the Motivated Strategies for Learning Questionnaire (MSLQ). No factor analysis was run. The three factors, defined as knowledge, regulation, and metacognitive responsiveness, significantly correlated (all rs > .34) with all the subscales of the MSLQ except Test Anxiety. It should be noted that the MSLQ measures motivation as well as metacognition. In fact, the subscales of the AILI significantly correlated with the value scale (rs > .57), a motivational scale of the MSLQ. Additionally, the AILI included statements like “I think it’s important that there are also personal aims linked to assignments”. Therefore, motivation may help explain the third factor. Teo and Lee (2012) also confirmed a three-factor solution using a Chinese version of the MAI. However, as Harrison and Vallin (2018) aptly point out, no theoretical explanation for three factors was provided, and only 21 of the original 52 items were used. Additionally, there was no comparison of their structure with Schraw and Dennison’s (1994) two-factor findings for the MAI. Teo and Lee did report some fit indices for a two-factor structure (see Table 1), which ranged from statistically acceptable to just below the cutoff for acceptability. Thus, Teo and Lee’s research can also be interpreted as lending some support to the two-factor structure.

Other structures

The MMCI (Allen & Amour-Thomas 1993) loaded on 6 factors, and both the state metacognitive measure (O’Neil and Abedi 1996) and the MI (Çetinkaya & Erktin, 2002) loaded on 4 factors (see Table 1). In all three cases, all of the resultant factors would align with only one of the overarching factors, suggesting the factors are all subcomponents of ROC. Similarly, the MSAS (Pedone et al. 2017) and MAI (Semerari et al. 2012) loaded on 4 and 2 factors, respectively. Again, all of the resultant factors would align with only one of the overarching factors defined in the two-factor structure, but in this case, it is KOC. Thus, these 5 studies also support the existence of a two-factor structure that distinguishes between knowledge and regulation. This suggests that the MMCI is best considered a self-report measure of metacognitive regulation, whilst the MSAS and MAI are best considered self-report measures of metacognitive knowledge. None of these three self-reports provides suitable measures of both knowledge and regulation.

Unidimensional

Two studies did not support the two factors of knowledge and regulation, but instead found a unidimensional structure (Altindağ & Senemoğlu, 2013; Immekus and Imbrie 2008). However, the single factor was reported after large adjustments to the original measures. In one study, almost half of the original items were eliminated, and in the other, scores on one end of the Likert scale were collapsed. Additionally, neither study reported fit indices other than chi-square. The statistics that were reported were not ideal: for instance, a unidimensional model explaining 35.74% of the variance (Altindağ & Senemoğlu, 2013) and a unidimensional model with χ²(1409) = 26,396.72, p < .001 (Immekus and Imbrie 2008).
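One way to make “not ideal” concrete for the Immekus and Imbrie (2008) result is the normed chi-square, which divides χ² by its degrees of freedom. This heuristic is our assumption and was not applied in the reviewed studies. Upper limits of roughly 2 to 5 are often cited for acceptable fit, although cut-offs vary by author and χ² is sensitive to sample size. The function name below is hypothetical.

```python
def normed_chi_square(chi_square: float, df: int) -> float:
    """Return chi-square divided by its degrees of freedom (the 'normed' chi-square)."""
    if df <= 0:
        raise ValueError("degrees of freedom must be positive")
    return chi_square / df


# Values reported by Immekus and Imbrie (2008) for the unidimensional model.
ratio = normed_chi_square(26_396.72, 1409)

# The cut-off of 5 is an assumed, commonly cited upper bound, not a universal rule.
ASSUMED_CUTOFF = 5.0
print(f"chi2/df = {ratio:.2f}; within assumed cut-off: {ratio <= ASSUMED_CUTOFF}")
# prints: chi2/df = 18.73; within assumed cut-off: False
```

A ratio near 19 lies well above any of the commonly cited cut-offs. This is consistent with the review's reading that the unidimensional model fitted poorly, even allowing for the inflation of χ² in large samples.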

Ability based structure

In addition to the 2017 study reported above that suggested a two-factor structure for the JrMAI, Ning (2016) completed another study with the JrMAI. In that study, Ning examined the structure of metacognition based on respondents’ scores. Participants were given the JrMAI and then divided into two groups: those with high scores and those with low scores. A factor analysis of participants who self-reported weaker metacognitive skills by scoring lower on the questionnaire revealed a unidimensional structure of metacognition. Analysis of those with higher metacognitive scores found a two-factor structure that aligned with Schraw and Dennison’s (1994) KOC and ROC. Ning’s research suggests that the level of metacognitive ability may play a role in the factor structure of metacognition, lending credibility to both a two-factor and a unidimensional structure. As the JrMAI is for adolescents, Ning’s research may also suggest that age could affect factor structure, as younger individuals have less sophisticated metacognitive skills (Dermitzaki 2005). However, there is no discernible pattern of factor results based on age among the studies in this review. No other study attempted to divide participants by self-reported metacognitive abilities.

Subcomponent analysis

In sharp contrast to the strong support for a two-factor structure, the subcomponents of the factors are much more debatable. Component analysis results varied widely both across measures and across repeated assessments of the same measure. Structures with two, three, four, five, six, eight, and nine components were found (see Table 1). For the MAI alone, four, five, six, and eight subcomponents were found. As with the factor analyses, the number of components varied widely across ages, with no discernible pattern of age influencing the number of subcomponents found.

Discussion

Despite the variance in results, the papers systematically reviewed lend strong support for the ability of various self-report measures to evaluate a two-factor structure. However, due to the wide range of results, no conclusion can be drawn regarding whether distinct subcomponents of these factors can be accurately assessed using a self-report measure. Of particular note is that neither the JrMAI nor the MAI produced the same factor structure across studies. Ning’s structural equation modelling of metacognition according to participant skill level offers a possible explanation for the diverse results. Participants in the studies ranged widely in age, from primary school to university. The extent of abilities across this large spread in age, coupled with the range of results reported in this paper, lends support to Ning’s supposition that reduced metacognitive skill operates with a less complex structure of metacognition. More research is required to determine whether varying metacognitive abilities affect the underlying structure of metacognition and are thus responsible for the wide variety of results. Regardless, taking all findings into consideration, it can be inferred that when participants self-report on their own metacognitive abilities, they provide an overview of their knowledge and their experiences or ability to regulate cognition. However, self-reports do not seem able to reliably reveal the more complex relationships in the metacognitive process when subscales are evaluated.

Based on fit indices, the most statistically noteworthy self-report analyses include the bifactor structure from the JrMAI (see Fig. 4; Ning 2017) and the two-factor structure with 6 subcomponents from the COMEGAM-ro (see Fig. 5; Porumb and Manasia 2015). Both had multiple indices (see Table 1) indicating that the models were a good fit for the corresponding questionnaire, as well as strong theoretical support. Ning’s structure was evaluated on version A of the JrMAI, which has produced varying results. This study was the first attempt to compare several different theoretical structures alongside a bifactor structure. Results showed a bifactor structure of general metacognition along with KOC and ROC to be the best fit (Fig. 4). However, given the reported Akaike and Bayesian information criteria, it is questionable whether the bifactor structure is actually a better fit than the two-factor structure. In contrast, the COMEGAM-ro model has strong statistical support in all areas (Porumb and Manasia 2015; Table 1). The results for the COMEGAM-ro revealed a two-factor structure of KOC and ROC with 6 subcomponents (Fig. 5). However, Porumb and Manasia’s article is the only published analysis of the factor structure of the COMEGAM-ro, so the structure has not been replicated.
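The model comparison above rests on information criteria, which trade model fit against the number of estimated parameters. The sketch below uses the general likelihood-based definitions, AIC = 2k - 2 ln L and BIC = k ln(n) - 2 ln L. SEM software may report rescaled or χ²-based variants of these indices. All numbers in the usage example are hypothetical and are not Ning’s (2017) values. They only illustrate how a bifactor model with a higher likelihood can still lose to a more parsimonious two-factor model once its extra parameters are penalized.

```python
import math


def aic(log_likelihood: float, n_params: int) -> float:
    """Akaike information criterion, 2k - 2 ln L (lower is better)."""
    return 2 * n_params - 2 * log_likelihood


def bic(log_likelihood: float, n_params: int, n_obs: int) -> float:
    """Bayesian information criterion, k ln(n) - 2 ln L (lower is better)."""
    return n_params * math.log(n_obs) - 2 * log_likelihood


# Hypothetical fits for illustration only (not values from any reviewed study).
N_OBS = 500
models = {
    "two-factor": {"log_likelihood": -5000.0, "n_params": 40},
    "bifactor": {"log_likelihood": -4990.0, "n_params": 58},
}

for name, fit in models.items():
    a = aic(fit["log_likelihood"], fit["n_params"])
    b = bic(fit["log_likelihood"], fit["n_params"], N_OBS)
    print(f"{name}: AIC = {a:.1f}, BIC = {b:.1f}")
```

In this made-up example, the bifactor model has the higher log-likelihood. Even so, both criteria favor the two-factor model, because the 18 extra parameters cost more than the likelihood they gain.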

Ning’s (2017) bifactor structure of metacognition

Porumb and Manasia’s (2015) metacognitive structure

Based on the systematic review, no single self-report can be recommended as the industry standard (i.e. reliable and replicable). However, results suggest that self-reports, in particular the COMEGAM-ro, are best suited to evaluating two distinct metacognitive factors. Alternatively, Ning’s (2016) novel approach of dividing participants by skill level may be a better method of evaluating self-reported metacognition. As both Ning’s and Porumb and Manasia’s results are each based on only one study, more research is clearly needed to determine the best method for using self-reports. Furthermore, based on the wide variety of subcomponent results, using a self-report to delineate the complexities of each factor may not be feasible. Thus, further research is also needed to explore the efficacy of measuring subcomponents with self-reports. Regardless, the results of the review suggest that a self-report analysis included as part of a study can evaluate general skills on two factors distinguishing knowledge from regulation. It cannot, however, adequately measure distinct subcomponents within the two factors.

If, as the systematic review suggests, knowledge and regulation can be adequately measured as distinct factors by self-reports, the subsequent question is whether those factors relate to participant behavior on experimental measures of knowledge and regulation.

Study 2: Systematic review and meta-analysis: Can self-report assess distinct components of metacognition and do those components relate to metacognitive behavior?

Introduction

Study 1 indicated that self-reports mostly measure two main factors of metacognition: knowledge and regulation. To date, the relationship between knowledge and regulation is not clear; in other words, knowledge of metacognitive skills may not relate to metacognitive behaviors. Much of the data seems to indicate that knowledge and regulation do not significantly correlate with each other, particularly when knowledge is compared with experimental measures of regulation (Jacobse and Harskamp 2012; Veenman 2005; Veenman 2013). Van Hout-Wolters & Schellings (2009) report rs ranging from −.07 to .22 between self-report questionnaires and think-aloud protocols. Think-aloud protocols measure metacognition by asking participants to verbalize their thought processes as they complete a task. Correlations between retrospective task-specific questionnaires and think-aloud protocols fare a little better, with rs ranging from .10 to .42 (Van Hout-Wolters & Schellings 2009).

In contrast, correlations of subcomponents within each factor reveal larger effect sizes, albeit still with a range of results. Correlations between different metacognitive behaviors (e.g. planning or monitoring) range from .64 to .98, and correlations between components of metacognitive knowledge (e.g. task or strategy knowledge) range from .02 to .80 (Schellings 2011; Van Hout-Wolters & Schellings 2009). The strength of the top end of these within-factor correlations appears to support the existence of two factors, but the low to moderate correlations between the factors call into question the relationship between knowledge and behavior. The apparent contradictions in the results are often attributed to a variety of methodological choices, including the type of instrument used, the timing of the instruments, participant ages, and analyses that compare full-scale scores instead of corresponding subscale scores.

Type of instrument

Because metacognition is not directly observable, measurement tends to involve either a mechanism for self-report or performance on a task (e.g. Akturk & Sahin, 2011; Georghiades 2004; Schraw and Moshman 1995; Veenman et al. 2005; Veenman et al. 2006). The measurements typically employed can be divided into two types: on-line and off-line. On-line measurement occurs during the performance of a task or during learning, for example evaluating one’s judgement of learning or having a participant speak their strategies aloud as they complete a task. Off-line measurement occurs either before or after a task or learning has finished, such as interviewing a participant about the strategies they employed on the task they just completed or surveying participants about the general strategies they use to prepare for an exam. Due to its nature, knowledge is most often measured by self-report questionnaires or prospective interviews (off-line), whereas regulation is often measured with a task (on-line). Because on-line measures generally correlate only weakly with off-line measures (Veenman 2005), one explanation for the varied effect sizes is that the type of instrument (questionnaire versus task) may impact the results. Researchers agree that a multi-method approach using both on-line and off-line tasks is required to truly understand the relationships between components of metacognition (e.g. Desoete 2008; Schellings et al. 2013; Van Hout-Wolters & Schellings, 2009; Veenman 2005; Veenman et al. 2014). It is therefore important to determine what off-line data (self-report) add to the understanding of metacognition and metacognitive behaviors.

Timing

A related explanation for the variability in correlations is the timing of measurement. Metacognition can be measured prior to performing a task (prospectively), during a task (concurrently), or following the completion of a task (retrospectively). It has been hypothesized that assessing metacognitive knowledge prospectively allows for too much bias. Participants may compare themselves to others, consider what the teacher or supervisor thinks, or succumb to social desirability (Schellings et al. 2013; Veenman 2005). A retrospective questionnaire allows participants to rely more heavily on the behaviors they have just performed when evaluating the statements. Concurrent measures, like on-line measures, tend to obtain stronger correlations because they are administered during a task. However, not all skills are easily measured concurrently. For example, evaluating one’s performance must, by its nature, be measured retrospectively. Thus, some researchers suggest employing concurrent and retrospective task-specific measures to ensure more reliable measurement (Schellings et al. 2013; Van Hout-Wolters & Schellings, 2009).

Age and full score versus scale scores

The age of the participants and the manner of statistical analysis may also impact effect sizes. As Dermitzaki (2005) reports, students in primary school have likely not fully developed their metacognitive skills and therefore may not know how to apply their knowledge to a task or be fully aware of their own strategy use. Therefore, the variation in correlation coefficients could be due to a lack of experience associated with chronological age. It has also been suggested that multiple measures of metacognition, when compared, may be evaluating different subcomponents of the factors (e.g. planning and monitoring correlated with evaluation and reflection), resulting in weaker effect sizes. Thus, it has been suggested that correlational analyses be carried out on corresponding subscales instead of overall scores (Van Hout-Wolters & Schellings, 2009).
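If age is treated as a moderator, one common way to test it is a subgroup comparison on Fisher’s z scale. The sketch below is a fixed-effect version for two subgroups, for example a hypothetical primary-school group versus an older group. It assumes each study contributes one correlation r with sample size n > 3 and uses inverse-variance weights n - 3. It then computes Q_between with 1 degree of freedom. This is not necessarily the procedure used in this meta-analysis. Mixed-effects subgroup analysis or meta-regression is frequently preferred when heterogeneity is high. The function names and data are hypothetical.

```python
import math


def pooled_fisher_z(pairs):
    """Fixed-effect pooled Fisher z and total weight for (r, n) pairs; each n must exceed 3."""
    if any(n <= 3 for _, n in pairs):
        raise ValueError("each sample size must exceed 3")
    weights = [n - 3 for _, n in pairs]      # 1 / var(z), since var(z) = 1 / (n - 3)
    zs = [math.atanh(r) for r, _ in pairs]   # Fisher r-to-z transform
    total = sum(weights)
    return sum(w * z for w, z in zip(weights, zs)) / total, total


def q_between_two_groups(group_a, group_b):
    """Return (Q_between, p) for two subgroups of (r, n) pairs, with df = 1."""
    z_a, w_a = pooled_fisher_z(group_a)
    z_b, w_b = pooled_fisher_z(group_b)
    z_all = (w_a * z_a + w_b * z_b) / (w_a + w_b)  # pooled estimate across both groups
    q = w_a * (z_a - z_all) ** 2 + w_b * (z_b - z_all) ** 2
    p = math.erfc(math.sqrt(q / 2))                # chi-square survival function for df = 1
    return q, p


# Hypothetical (r, n) pairs, for illustration only.
younger = [(0.05, 60), (0.15, 46)]
older = [(0.35, 80), (0.45, 26)]
q, p = q_between_two_groups(younger, older)
print(f"Q_between = {q:.2f}, p = {p:.3f}")
```

A small p-value would suggest that the pooled correlation differs between the age groups. With only two subgroups, the χ² survival function for 1 degree of freedom reduces to `math.erfc(sqrt(Q / 2))`, so no statistics library is needed.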

Meta-analysis

To our knowledge, there has never been a meta-analysis of the various relationships between and within factors of metacognition as assessed by self-reports and experimental procedures. Thus, based on the results of Study 1, this systematic review and meta-analysis will evaluate two factors of metacognition by summarizing the relationships between knowledge and regulation. The first aim is to determine the ability of self-report to measure proposed taxonomies, and the second is to determine whether self-report relates to metacognitive behavior. Subcomponent correlations will be evaluated not only to determine relationships between self-report and behavior, but also to re-examine whether self-report can capture more than a general overview of two factors. Furthermore, given the current wide range of results, meta-analysis results are likely to be high in heterogeneity. High heterogeneity indicates that effect sizes vary across studies more than sampling error alone would predict. In that case, the pooled effect size estimate cannot be interpreted on its own, because another factor may be moderating the results. Therefore, this analysis will also examine possible effects of moderators. When elevated heterogeneity is found, the timing and type of instruments, as well as age, will be evaluated for their impact.
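The pooling and heterogeneity statistics described above can be sketched for correlations as follows. This is an illustration under stated assumptions, not the authors’ analysis code. It uses the Fisher r-to-z transform and Cochran’s Q, along with I² = max(0, (Q - df) / Q) × 100. It also uses the DerSimonian-Laird estimate of the between-study variance τ², which is one common random-effects estimator among several. The function name `pool_correlations` and the example data are hypothetical.

```python
import math


def pool_correlations(rs, ns):
    """Random-effects (DerSimonian-Laird) pooling of correlations.

    rs: study correlations in (-1, 1); ns: study sample sizes (each > 3).
    Returns (pooled r, Cochran's Q, I^2 in percent, tau^2 on the Fisher-z scale).
    """
    if len(rs) != len(ns) or len(rs) < 2:
        raise ValueError("need at least two studies with matching sample sizes")
    if any(n <= 3 for n in ns):
        raise ValueError("each sample size must exceed 3")
    zs = [math.atanh(r) for r in rs]    # Fisher r-to-z transform
    ws = [n - 3 for n in ns]            # fixed-effect weights, 1 / var(z)
    sum_w = sum(ws)
    z_fixed = sum(w * z for w, z in zip(ws, zs)) / sum_w
    q = sum(w * (z - z_fixed) ** 2 for w, z in zip(ws, zs))  # Cochran's Q
    df = len(rs) - 1
    c = sum_w - sum(w * w for w in ws) / sum_w
    tau2 = max(0.0, (q - df) / c)       # between-study variance estimate
    i2 = max(0.0, (q - df) / q) * 100 if q > 0 else 0.0
    ws_re = [1 / (1 / w + tau2) for w in ws]  # random-effects weights
    z_re = sum(w * z for w, z in zip(ws_re, zs)) / sum(ws_re)
    return math.tanh(z_re), q, i2, tau2       # back-transform to r


# Hypothetical study correlations and sample sizes, for illustration only.
r_pooled, q, i2, tau2 = pool_correlations([0.10, 0.25, 0.42], [50, 120, 80])
print(f"pooled r = {r_pooled:.2f}, Q = {q:.2f}, I^2 = {i2:.0f}%, tau^2 = {tau2:.3f}")
```

Pooling on the z scale and back-transforming with `tanh` keeps the estimate within (-1, 1). When τ² is zero, the random-effects weights reduce to the fixed-effect weights.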

Methods

Searches and reviews were conducted in July and August of 2018 using EbscoHost, ERIC, PsycArticles, PsycINFO, Scopus, Web of Science, WorldWideScience.org, and bibliography reviews. The PRISMA chart in Fig. 6 details the searches and inclusion and exclusion criteria.

The aim of Study 2 is to determine the relationships between the various components of metacognition, and whether measures of metacognitive knowledge relate to measures of metacognitive behavior (regulation). Consequently, several searches covering all years of publication were performed. Since off-line measures generally assess knowledge and on-line measures generally assess regulation, searches for these terms, as well as the term multi-method, were performed. The various forms of metacognition (metacognition, metacognitive, meta-cognition) were paired with the terms online, on-line, offline, off-line, and multi-method, both individually and in combination (see the appendix for the specifics of the search).

Articles were included only if they compared at least two measures of pure metacognition. Thus, a comparison of the total scores of the Motivated Strategies for Learning Questionnaire (MSLQ) and a think-aloud protocol would be excluded, because total scores on the MSLQ are generally accepted to measure both participants’ metacognitive abilities and their motivation profile. However, a comparison of the metacognitive subscale of the MSLQ and a think-aloud protocol would be included. Unlike the first search, which looked for an overall structure of metacognition, one of the aims of this search was to understand the extent to which self-report scales correlate with behavioral measures of metacognition. Thus, task-specific correlations were not excluded. Additionally, one task could measure two components, provided the scales were listed separately and statistically compared. Therefore, articles were included if:

- they statistically compared components of metacognition using a within-participants design,
- correlational effect sizes (e.g. Pearson’s r, Kendall’s tau) were provided, and
- the measures of metacognition employed did not include other skills (e.g. motivation).

Articles were excluded if:

- participants had a condition or disability (e.g. schizophrenia, Parkinson’s disease, learning disability),
- there were no statistical data comparing components of metacognition (e.g. means and standard deviations were listed, but no correlations were run),
- the correlational data were between participants rather than within participants (i.e. they compared the abilities of distinct groups of participants instead of components of an underlying structure), or
- the article could not be obtained in English.

As in the first systematic review, if an article was in another language or could not be located, its authors were contacted and an English copy of the article was requested. Thanks to the authors of the requested research, only 8 articles were excluded for lack of access.

Ultimately, 320 articles were retained for review following a title search. One hundred sixty were excluded as duplicates, and another 94 were excluded after their abstracts were reviewed for relevance. The remaining 66 full articles were read, and 29 were excluded based on the inclusion and exclusion criteria (see Fig. 6 for an itemized exclusion record with justification). A total of 37 articles spanning 33 years of research (1982–2015) were analyzed. A table was created summarizing authors and year, measures employed, components evaluated, age of participants, narrative results, statistical analysis, and any items of note (see Table 2). In addition, the type (on-line, off-line) and timing (prospective, concurrent, retrospective) of each instrument were recorded. Each of the 37 articles was thus reviewed for statistical relationships and to ensure that participant pools and metacognitive measures complied with the inclusion and exclusion criteria. Any possible drawbacks of each study were also noted.
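The screening counts in this paragraph can be checked with simple arithmetic. The Python sketch below uses only the numbers reported above; the variable names are our own illustrative labels, not terms from the PRISMA chart.

```python
# Screening counts as reported in the text (variable names are illustrative).
retained_after_title_search = 320
duplicates = 160
excluded_on_abstract = 94
excluded_on_full_text = 29

# Articles left for full-text reading, then articles finally analyzed
full_text_read = retained_after_title_search - duplicates - excluded_on_abstract
included = full_text_read - excluded_on_full_text

# Span of publication years covered by the included articles
year_span = 2015 - 1982

print(full_text_read, included, year_span)  # 66 37 33
```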

Statistical analysis

As recommended in the literature, most of the 37 articles either used a multi-method approach to examine relationships or analyzed results by correlating corresponding subscales of measures. Thus, one article could contribute several pieces of data to the meta-analysis. In total, the 37 articles reported 328 correlations between factors and/or subcomponents of metacognition. Because only one statistic per population could be included in the meta-analysis, specific criteria for choosing that statistic were necessary. Correlations were chosen using the following hierarchy:

- from on-line measures: on-line measures such as think-aloud protocols are less subject to bias and misinterpretation than off-line measures (Schellings et al. 2013),
- from correlations between two different measures rather than within one measure (e.g. correlations between subscales of a questionnaire), because these provide a more robust picture of relationships between metacognitive skills,
- from measures whose factor structure, according to the systematic review, was closest to Porumb and Manasia’s (2015) model (see Fig. 6 above), thus lessening possible interference from other factors, such as motivation,
- from measures with higher Cronbach’s alpha scores, that is, more reliable measures,
- the median statistic: if an even number of statistics was reported, the range of each half of the data was calculated, and the central value belonging to the half with the larger range was chosen (e.g. from the set {.27, .27, .28, .38}, .28 was chosen; from {.40, .45, .55, .63, .68, .72}, .55 was selected).
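The final selection rule is easiest to see as a small function. The following Python sketch is one reading of the rule, inferred from the two worked examples; the function name `select_median` is hypothetical, and because the text does not say what happens when both halves have the same range, returning the lower central value in that case is an assumption.

```python
def select_median(stats):
    """Pick one correlation from a set of candidate statistics.

    Odd-length sets: return the ordinary median.
    Even-length sets: split the sorted values into a lower and an upper half,
    compare the range (max - min) of each half, and return the central value
    that belongs to the half with the larger range.
    Assumption: on a tie between the two ranges, the lower central value is kept.
    """
    values = sorted(stats)
    n = len(values)
    if n == 0:
        raise ValueError("need at least one statistic")
    mid = n // 2
    if n % 2 == 1:
        return values[mid]
    lower, upper = values[:mid], values[mid:]
    lower_range = lower[-1] - lower[0]
    upper_range = upper[-1] - upper[0]
    # The half with the larger spread determines which central value is kept
    return upper[0] if upper_range > lower_range else lower[-1]


print(select_median([.27, .27, .28, .38]))            # 0.28
print(select_median([.40, .45, .55, .63, .68, .72]))  # 0.55
```

The earlier criteria in the hierarchy would be applied first, so a function like this only resolves whichever candidate statistics remain after those criteria.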

All correlations were reported as either Pearson’s r or Kendall’s tau, which cannot be directly compared. Thus, all Kendall’s tau statistics were first converted to r using Kendall’s formula, r = sin(0.5πτ) (Walker 2003). The data were then read into R (R Core Team 2018) and analyzed using a random-effects model with the Hunter and Schmidt (2004) method in the metafor package (Viechtbauer 2010). Because of the small number of studies, Knapp and Hartung’s (2003) adjustment was also applied.
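The conversion and pooling steps can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors’ R code: it pools raw correlations with sampling variance (1 − r²)²/(n − 1), estimates the between-study variance with a Hunter-Schmidt-type estimator, τ² = max(0, (Q − k)/Σw), which we believe corresponds to metafor’s `method = "HS"` option (readers should confirm against the metafor documentation), and rescales the standard error with the Knapp-Hartung factor. Whether the original analysis used raw or Fisher-z transformed correlations is not stated, so the raw-r choice is an assumption, and the function names and example data are hypothetical.

```python
import math


def kendall_tau_to_r(tau):
    """Convert Kendall's tau to Pearson's r with r = sin(0.5 * pi * tau)."""
    return math.sin(0.5 * math.pi * tau)


def pool_correlations(rs, ns):
    """Random-effects pooled correlation with a Knapp-Hartung standard error.

    Assumptions (illustrative, not taken from the paper's code):
    - raw correlations with sampling variance (1 - r^2)^2 / (n - 1)
    - tau^2 = max(0, (Q - k) / sum(w)), a Hunter-Schmidt-type estimator
    - Knapp-Hartung: variance scaled by the weighted residual mean square
    Returns (pooled_r, tau2, se_kh).
    """
    k = len(rs)
    if k < 2:
        raise ValueError("need at least two correlations")
    v = [(1 - r * r) ** 2 / (n - 1) for r, n in zip(rs, ns)]
    w = [1 / vi for vi in v]
    # Fixed-effect estimate and Cochran's Q
    r_fe = sum(wi * ri for wi, ri in zip(w, rs)) / sum(w)
    q = sum(wi * (ri - r_fe) ** 2 for wi, ri in zip(w, rs))
    tau2 = max(0.0, (q - k) / sum(w))
    # Random-effects weights add tau^2 to every sampling variance
    w_re = [1 / (vi + tau2) for vi in v]
    pooled = sum(wi * ri for wi, ri in zip(w_re, rs)) / sum(w_re)
    # Knapp-Hartung rescaling (confidence intervals would use t with k - 1 df)
    s2 = sum(wi * (ri - pooled) ** 2 for wi, ri in zip(w_re, rs)) / (k - 1)
    se_kh = math.sqrt(s2 / sum(w_re))
    return pooled, tau2, se_kh


# Hypothetical example: one Kendall's tau converted, then pooled with two Pearson r values
rs = [kendall_tau_to_r(0.20), 0.35, 0.41]
ns = [60, 120, 85]
pooled, tau2, se = pool_correlations(rs, ns)
print(round(pooled, 3), round(tau2, 4), round(se, 3))
```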

For the purposes of this study, all measures were labeled by their factor and/or subcomponent (e.g. metacognitive knowledge, planning), the timing of the measure (prospective, concurrent, retrospective), and assessment type (on-line, off-line). These labels allowed moderators to be analyzed where necessary, and specific variables to be meta-analyzed. Off-line is defined as a measure occurring before or after the learning task (Veenman 2005). Accordingly, overall confidence judgments made after the completion of the entire task were categorized as off-line. Confidence judgments made after each problem or question were classified as on-line, since learning was still occurring in a way that could affect the next judgment. By the same reasoning, overall confidence judgments were labeled retrospective, and judgments made after each problem or question were labeled concurrent.
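The labeling rule for confidence judgments can be written as a small lookup. The Python sketch below is a simplified, hypothetical encoding: it assumes each measure can be placed before, during, or after the learning task, maps those phases to prospective, concurrent, and retrospective timing, and treats only measures taken during the task as on-line. Real instruments (for example, retrospective interviews) may need manual coding beyond this rule.

```python
from enum import Enum


class Phase(Enum):
    """When a measure is taken relative to the learning task (illustrative)."""
    BEFORE = "before"
    DURING = "during"
    AFTER = "after"


# Timing label for each phase, following the prospective/concurrent/retrospective scheme
TIMING = {
    Phase.BEFORE: "prospective",
    Phase.DURING: "concurrent",
    Phase.AFTER: "retrospective",
}


def label_measure(phase):
    """Return (assessment type, timing); off-line means before or after the task."""
    assessment_type = "on-line" if phase is Phase.DURING else "off-line"
    return assessment_type, TIMING[phase]


def label_confidence_judgment(per_item):
    """Per-item judgments fall during learning; one overall judgment falls after it."""
    phase = Phase.DURING if per_item else Phase.AFTER
    return label_measure(phase)


print(label_confidence_judgment(per_item=True))   # ('on-line', 'concurrent')
print(label_confidence_judgment(per_item=False))  # ('off-line', 'retrospective')
```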

Results

Knowledge and regulation

Thirteen articles analyzed correlations between knowledge and regulation, contributing 20 correlations to the meta-analysis. Measures of knowledge evaluated declarative, procedural, conditional, person, task, and/or strategy knowledge as defined by Flavell (1979) and Schraw and Dennison (1994). Knowledge was assessed by prospective judgments of metacognitive abilities made before commencing a task, interviews, the Index of Reading Awareness (IRA; Van Kraayenoord and Schneider 1999), the Wurzburg Metamemory Test (WMMTOT; Van Kraayenoord and Schneider 1999), and the total score or metacognitive subscale scores of self-report questionnaires (see Table 2 for a complete list of measures). Regulation was evaluated by metacognitive tasks involving orientation, planning, prediction, organization, monitoring, regulation, control, systematic orderliness, debugging, evaluation, and reflection. Regulation was assessed through retrospective interviews, confidence judgments (CJ), think-aloud protocols (TAP), PrepMate (Winne and Jamieson-Noel 2002), the Index of Reading Awareness (IRA; Van Kraayenoord and Schneider 1999), the Meta-comprehension Strategies Index (MSI; Sperling et al. 2002), Cognitive Developmental aRithmetics (CDR; Desoete 2009), and the total score or metacognitive subscale scores of self-report questionnaires (see Table 2). All questionnaires reported good internal consistency except for 3 subscales of the task-specific questionnaire employed in both of Schellings’ studies (Schellings 2011; Schellings et al. 2013). Correlations for subscales with poor Cronbach’s alpha scores were included neither in Schellings’ articles nor in this meta-analysis.

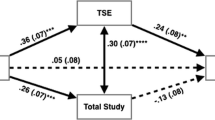

The 13 studies included a total of 2697 participants, ranging in age from primary (604) and secondary (1317) to university students (776). Participants also varied nationally, as research was conducted in America, Canada, Germany, the Netherlands, Nigeria, and Turkey. Pearson’s r correlations ranged widely, from −0.03 to 0.93. A positive correlation indicates that greater knowledge of metacognition was associated with more accurate metacognitive regulation; in other words, greater metacognitive knowledge was related to better metacognitive skills. The pooled effect size estimate for the data is r = 0.34 (95% CI, 0.22–0.46; see Table 3 for full meta-analysis results). However, this value cannot be meaningfully interpreted because of the elevated heterogeneity (I² = 96.26%). Due to this heterogeneity, measures of regulation, timing of the assessment, type of assessment, age, and nationality were evaluated as moderators. These moderators lowered the heterogeneity to 37.07%, 72.96%, 91.66%, 92.04%, and 92.61%, respectively. Of particular note, the instruments used to measure knowledge accounted for 100% of the heterogeneity, leaving 0% residual heterogeneity (see Fig. 7). Additionally, measuring knowledge with an interview was a significant positive moderator, indicating higher effect sizes than other measures. Retrospective instruments (timing) and the CPQ (measure of regulation) were also significant positive moderators. However, the Pearson’s correlation between the CPQ and a retrospective interview was r = 0.93. Timing (retrospective), measures of regulation (CPQ), and interviews therefore appear as moderators because of a single extreme outlier. Since the outlier did not affect measures of knowledge, the results indicate that the choice of instrument for measuring knowledge is most responsible for variation in effect sizes.
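The I² values reported here summarize how much of the observed variation in correlations exceeds what sampling error alone would produce. The Python sketch below shows two common definitions under stated assumptions: Higgins and Thompson’s Q-based I² = (Q − (k − 1))/Q, and the τ²-based form I² = τ²/(τ² + s²), where s² is a "typical" within-study variance; as we understand it, the latter is the form metafor reports for random-effects models. The sampling variances (1 − r²)²/(n − 1) and the Hunter-Schmidt-type τ² estimator are assumptions, the function name is hypothetical, and the example correlations are made up rather than taken from the included studies.

```python
def i_squared(rs, ns):
    """Return (Q, I2 from Q, I2 from tau^2), both I2 values in percent.

    Assumptions: sampling variance (1 - r^2)^2 / (n - 1) for each raw r, and
    tau^2 = max(0, (Q - k) / sum(w)) (a Hunter-Schmidt-type estimator).
    """
    k = len(rs)
    if k < 2:
        raise ValueError("need at least two correlations")
    w = [(n - 1) / (1 - r * r) ** 2 for r, n in zip(rs, ns)]  # inverse variances
    sw = sum(w)
    sw2 = sum(wi * wi for wi in w)
    r_fe = sum(wi * ri for wi, ri in zip(w, rs)) / sw
    q = sum(wi * (ri - r_fe) ** 2 for wi, ri in zip(w, rs))
    # Higgins and Thompson: share of Q in excess of its degrees of freedom
    i2_q = max(0.0, (q - (k - 1)) / q) if q > 0 else 0.0
    # tau^2-based form with a "typical" within-study variance s2
    tau2 = max(0.0, (q - k) / sw)
    s2 = (k - 1) * sw / (sw * sw - sw2)
    i2_tau = tau2 / (tau2 + s2)
    return q, 100 * i2_q, 100 * i2_tau


# Made-up correlations spanning a wide range, loosely mimicking the spread described above
q, i2_q, i2_tau = i_squared([-0.03, 0.34, 0.93], [100, 100, 100])
print(round(q, 1), round(i2_q, 1), round(i2_tau, 1))
```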

Knowledge and regulation as off-line and on-line

Brown (1987) posited that all off-line measures of metacognition are actually measures of knowledge, even if their statements query regulation. This supposition has merit, as a questionnaire does not measure participants’ skills; rather, it evaluates their awareness or knowledge of regulation. Consequently, following the hierarchy detailed above, a new set of data was selected comprising correlations between on-line (regulation) and off-line (knowledge) instruments. This alternate classification yielded 21 studies that contributed 23 correlations. The studies comprised 1691 American, Canadian, Chinese, Dutch, German, Greek, and Turkish participants. Similar instruments were employed, apart from the IRA and with the addition of the Interactive Multi-Media Exercises (IMMEX; Cooper et al. 2008) and Sokoban tasks (Li et al. 2015). Primary (390), secondary (156), and university (1145) students volunteered to take part in research that found correlations ranging from −0.39 to 0.63. This selection of studies resulted in a pooled effect size estimate of r = 0.22 (95% CI, 0.14–0.31) with heterogeneity of I² = 58.78%. Because of this moderate heterogeneity, a meta-regression was also run on these data. As in the previous results, measures of knowledge accounted for 100% of the heterogeneity, leaving 0% residual heterogeneity, and were a significant moderator. Measures of regulation lowered the heterogeneity to 22.34%, and nationality and timing of the instruments lowered it to 38.14% and 43.78%, respectively. Age was a significant moderator: correlation coefficients from university students significantly increased the pooled effect size estimate, and age lowered the heterogeneity to 32.93%. When age groups were evaluated as subgroups, age was not significant for primary and secondary students. Additionally, the secondary and university subgroups still showed moderate heterogeneity (see Fig. 8).

Thus, in general, older participants show stronger correlations between knowledge and regulation, but the results still vary widely depending on the instrument used to measure knowledge. Taken together, then, self-reports of metacognitive knowledge and metacognitive regulation relate poorly to actual performance on metacognitive tasks. Of note, some self-reports appear to correlate more strongly than others (Fig. 7).
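Evaluating age as a grouping variable can be illustrated with a simple subgroup analysis. The Python sketch below is a swapped-in, simplified alternative to the meta-regression described in this section, not the authors’ procedure: it pools correlations separately within each age group (random effects with a Hunter-Schmidt-type τ² and sampling variance (1 − r²)²/(n − 1), both assumptions) and computes a between-subgroup Q statistic with G − 1 degrees of freedom. The function names and example data are hypothetical.

```python
from collections import defaultdict


def pool_random_effects(rs, ns):
    """Return (pooled r, variance of pooled r) for one subgroup.

    Assumptions: var(r) = (1 - r^2)^2 / (n - 1); tau^2 = max(0, (Q - k) / sum(w)).
    """
    v = [(1 - r * r) ** 2 / (n - 1) for r, n in zip(rs, ns)]
    w = [1 / vi for vi in v]
    sw = sum(w)
    r_fe = sum(wi * ri for wi, ri in zip(w, rs)) / sw
    q = sum(wi * (ri - r_fe) ** 2 for wi, ri in zip(w, rs))
    tau2 = max(0.0, (q - len(rs)) / sw)
    w_re = [1 / (vi + tau2) for vi in v]
    pooled = sum(wi * ri for wi, ri in zip(w_re, rs)) / sum(w_re)
    return pooled, 1 / sum(w_re)


def subgroup_analysis(rs, ns, groups):
    """Pool within each subgroup, then test whether subgroup estimates differ.

    Returns (subgroup estimates, Q_between, degrees of freedom).
    """
    data = defaultdict(lambda: ([], []))
    for r, n, g in zip(rs, ns, groups):
        data[g][0].append(r)
        data[g][1].append(n)
    pooled = {g: pool_random_effects(r_list, n_list) for g, (r_list, n_list) in data.items()}
    weights = {g: 1 / var for g, (_, var) in pooled.items()}
    grand = sum(weights[g] * est for g, (est, _) in pooled.items()) / sum(weights.values())
    # Q_between: inverse-variance weighted spread of subgroup estimates around their mean
    q_between = sum(weights[g] * (est - grand) ** 2 for g, (est, _) in pooled.items())
    estimates = {g: est for g, (est, _) in pooled.items()}
    return estimates, q_between, len(pooled) - 1


# Hypothetical data for three age groups
estimates, q_b, df = subgroup_analysis(
    rs=[0.10, 0.18, 0.05, 0.22, 0.40, 0.55],
    ns=[40, 55, 60, 80, 150, 120],
    groups=["primary", "primary", "secondary", "secondary", "university", "university"],
)
print(estimates, round(q_b, 2), df)
```

Q_between would then be compared with a chi-square distribution on df degrees of freedom; that p-value is left out here to keep the sketch free of external dependencies.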

Subcomponents of knowledge and regulation

Few studies examine the relationship between the subcomponents of regulation and knowledge, and those that do often correlate subscales instead of overall instrument scores. Because the subcomponents of metacognition operate jointly in the completion of a task, it is rare to see one subcomponent evaluated by one instrument. The studies found for this meta-analysis reflect this rarity, as all of them used subscale measures to evaluate relationships between subcomponents of metacognition. Thirteen studies employing 2278 participants compared two different measures evaluating subcomponents of knowledge and regulation. Participants ranged in age from primary (403) and secondary (1270) to university (605). As in the previous analyses, the measures varied widely and included both on-line tasks and off-line questionnaires. Additionally, measures were given at different points in time and in a variety of countries, including America, Canada, Germany, Greece, the Netherlands, and Romania.

Meta-analyses of subcomponents of knowledge revealed pooled effect sizes ranging from 0.41 to 0.43. Pooled effect sizes for subcomponents of regulation ranged from 0.42 to 0.63 (see Table 3). Four of the six estimates displayed elevated heterogeneity. Meta-regressions revealed that in all but one case, measures of knowledge accounted for 100% of the heterogeneity. In the remaining case, the five correlations between planning and monitoring came from five different measures, so measures of knowledge could not be evaluated as a moderator; instead, nationality accounted for 100% of the heterogeneity. Also of note, in four of the six meta-regressions age was a significant moderator, indicating that older participants had significantly stronger effect sizes than primary-aged participants. Although age was a significant moderator, it did not meaningfully lower the heterogeneity. Meta-analyses of subcomponents across factors found pooled effect sizes that varied from 0.32 to 0.48 (see Table 3). Three of the nine meta-analyses found non-significant pooled effect sizes. Pooled effect sizes that were significant had moderate to no heterogeneity. Because of the small number of studies examining these relationships, meta-regressions either could not be run or their moderators did not meaningfully decrease the heterogeneity.

Three other subcomponents of metacognition were evaluated at the subscale level in three studies. Elaboration (Muis et al. 2007) obtained moderate to strong effect sizes with other subcomponents of regulation (planning 0.38–0.67; monitoring 0.34–0.70; evaluation 0.42–0.66). Prediction (Desoete et al. 2008) obtained small effect sizes with subcomponents of knowledge (declarative 0.16; procedural 0.10; conditional 0.18) and small to strong effect sizes with other subcomponents of regulation (planning 0.12–0.55; monitoring 0.39–0.84; evaluation 0.08–0.89). Finally, attribution (Desoete et al. 2001) was characterized by small to moderate effect sizes with subcomponents of knowledge (rs 0.01 to 0.24) and small effect sizes with subcomponents of regulation (rs −0.04 to 0.18). Because each of these components was evaluated in only one study, and thus in only one population, meta-analyses could not be run. Taking all the meta-analyses into consideration, it appears that subscales relate more strongly to behavior, across and within measures, than do the overarching factors (knowledge and regulation) of metacognition.

Discussion

Results of the meta-analyses within the factors of knowledge and regulation (Table 3: Within Factor Relationships) reveal moderate to large effect sizes, confirming the existence of the two overarching factors. Conversely, the data show only small to moderate pooled effect size estimates between knowledge and regulation, confirming previous research finding that on-line and off-line measures do not strongly correlate. The smaller pooled effect size of 0.22 from measures categorized as on-line and off-line is similar to the average of r = 0.17 estimated by Veenman and Van Hout-Wolters (2002, as reported in Jacobse and Harskamp 2012). The pooled effect size is greater (r = .34) when measures are not categorized as on-line and off-line assessments. Thus, the data indicate that while self-reports consistently provide a broad overview of participants’ understanding of their own metacognitive knowledge and metacognitive regulation, the reports only weakly correlate with participants’ metacognitive behavior.

It is important to note that the resulting estimates in this study must be treated with caution because of the high heterogeneity. The heterogeneity can be explained by the wide range and variety of measures used to assess knowledge. One may therefore question whether the measures of knowledge are assessing the same underlying construct, which would make their ability to predict behavior on a metacognitive task variable. Measures of regulation also meaningfully decrease heterogeneity, though with less impact than measures of knowledge. Consequently, the effect size varies with the instruments chosen to measure metacognition. This may be because tasks tend to measure one specific metacognitive skill (e.g. monitoring), while self-reports give an overview of many metacognitive skills. Thus, the data appear to reinforce the importance of carefully choosing an appropriate measure.

Even when the data are sorted by measure of knowledge and meta-analyzed again, some heterogeneity remains within the results (see Fig. 7). The MAI, for example, revealed multiple factor structures in the systematic review. Similarly, correlational results are wide ranging when the MAI is employed (rs 0.07 to 0.70). This may be explained by age, as it was a significant factor in the on-line versus off-line meta-regression. Age also appears frequently as a significant moderator among the subcomponents. Meta-regressions with age as a moderator generally suggest that older participants achieve stronger effect sizes. But again, forest plots and meta-analyses show that heterogeneity still exists when the data are sorted by age (see Fig. 8). Thus, both age and choice of instrument appear to meaningfully affect results, reinforcing the importance of carefully choosing a self-report and lending support to Ning’s suggestion that questionnaire factor structure is related to self-reported metacognitive ability.

Meta-analyses assessing components of knowledge and regulation find strong correlations that lack heterogeneity (rs 0.46–0.51; Table 3: Between Factor Relationships). This supports the existence of two factors. Only attribution failed to show substantial relationships with other possible subcomponents; like the systematic review, this argues against a third factor based on motivation or attribution. In addition, the meta-analyses suggest that the subscale level of self-reports may strongly relate to behavior on metacognitive tasks. Thus, self-reports of knowledge and regulation may be more useful for correlating with behavior at the subcomponent level than at the factor level.

However, as at the factor level, many of these results must be interpreted with caution. Here again, variation in the instruments used to measure knowledge was most responsible for the wide range of results. Age also appeared as a significant moderator but, again, had less impact than the diversity of measures of knowledge. Thus, the subcomponent meta-analyses reinforce the importance of choosing the best instrument for a study’s specific questions. Furthermore, choice of instrument appeared more critical than the timing or type of instrument. The studies varied widely in their use of on-line and off-line assessments and in the timing of the assessments (prospective, retrospective, and concurrent). Yet timing appeared only once as a significant moderator, and type did not significantly moderate the results at all. This does not mean that researchers’ emphasis (Sperling et al. 2004; Van Hout-Wolters and Schellings 2009; Veenman 2005) on the need for both on-line and off-line assessments across time should be ignored. Rather, the data seem to indicate that, with multi-method approaches widely used across studies, no single type or timing of assessment is superior. Thus, multi-method assessments are likely to provide a more detailed picture of metacognition.

General discussion

Current research that analyzes the factor structure of self-reported metacognition varies widely, from reporting a unidimensional structure to a structure with nine components. The first systematic review of factor analyses indicates that self-reports of metacognition are best suited to measuring two factors, characterized as regulation and knowledge, but does not support the distinct measurement of additional factors or subcomponents of metacognition. Likewise, the second systematic review and associated meta-analysis did not support the inclusion of additional factors, as shown by weaker fit indices and small effect sizes between attribution and subcomponents of knowledge and regulation. Meta-analyses of subcomponents (person, task, strategies, planning, monitoring, evaluation, elaboration) tend towards moderate and strong pooled effect size estimates, again supporting the ability of self-reports to measure a two-factor structure of regulation and knowledge. It is important to note that this review is not evidence that only two factors of metacognition exist, but rather that two broad factors of metacognition are robustly found with available self-report measures.

Overall, the meta-analyses indicate that correlating subcomponents of knowledge with subcomponents of regulation yields considerably stronger estimates than the pooled effect sizes found between the broad factor measurements of knowledge and regulation (Table 3), indicating that subcomponents may relate better to each other and to behavior than the overall factors do. Thus, Van Hout-Wolters and Schellings’ (2009) contention that metacognitive relationships should be measured at the subscale level appears to have strong merit. It also lends support to the presence and importance of the subcomponents. The lack of heterogeneity in some of the pooled estimates of subcomponent relationships lends further credibility to the supposition that choice of measure may contribute to the wide range of somewhat contradictory results. Of note, every pooled estimate that lacked heterogeneity included the COMEGAN-ro as one of the instruments in the correlational analysis. The systematic review also found the COMEGAN-ro to report some of the strongest fit indices for a two-factor model.

While self-reports do not adequately measure the nuances of metacognitive behaviours, there is still a place for them in metacognitive research. Because of the variation among self-reports, the systematic reviews and meta-analyses do not indicate any one self-report as the “gold” standard. Thus, the choice of instrument, and how the resulting data are used to measure metacognitive knowledge, must be carefully considered. The data do suggest that self-reports are useful in obtaining a broad overview of participants’ knowledge and regulation. To correlate with metacognitive behavior, self-reports should be chosen carefully according to the subscales the research is evaluating. Furthermore, self-reports provide a broad understanding of how participants view their own metacognitive abilities. Therefore, the strength of self-reports may lie in their inability to reflect behaviour, allowing researchers to explore why participants tend towards inaccurate self-reporting. For example, research questions such as “Are those with autism or anxiety more accurate self-reporters than neurotypicals or healthy controls?” or “Do participants with more accurate metacognitive skills on tasks self-report less metacognitive ability than their peers?” would be valuable explorations for which self-reports are necessary assessment instruments.

It is important to note that choice of instrument could not explain 100% of the heterogeneity in every instance. Age also had a meaningful impact on the results but, like choice of instrument, cannot account for all of the heterogeneity. Ning’s (2016) study, described in Study 1, poses an alternative interpretation based on respondents’ self-reported metacognitive abilities. It is plausible that the heterogeneity found throughout the meta-analyses is due to participants’ metacognitive capabilities. In other words, Ning’s study suggests that those with stronger metacognitive expertise use multiple, more sophisticated strategies, thus employing multiple factors and subcomponents of metacognition. Those with weaker or minimal metacognitive capabilities may use only one or two simple strategies, revealing a simplified, or unidimensional, structure of metacognition. Under this hypothesis, it may be possible to adequately measure subcomponents with a self-report, but only in those with strong metacognitive skills.

The difference in nuance of metacognitive skills caused by expertise could affect the relationships between subcomponents and account for the widely ranging scores that appear across and even within instruments. This interpretation of differences in expertise is supported by the results showing age as a significant moderator while still showing a range of results within each age cohort. Future studies collecting self-report data may want to divide the results by participant capabilities to explore the possibility of stronger relationships and a more complex underlying structure resulting from more developed metacognitive skill. Accordingly, it may be possible to identify weak metacognitive areas based on differences in structure (unidimensional versus two-factor) and on the ability of subcomponents to relate to metacognitive behavior. Metacognitive skill can be taught (Perry et al. 2018). It may therefore also be possible to train individuals in specific subcomponents of metacognition in pursuit of academic achievement as well as better health and well-being.

Strengths and limitations

Study 1 and Study 2 are the first to comprehensively evaluate the use of self-reports to measure metacognition. Because the term metacognition came into use in the 1970s (Flavell 1979), there are 40 years of available research to analyse. Hence, given the range of studies analysed, the results are likely to be fairly representative of the general population and provide a rich pool of data from which an understanding of metacognition can be developed. In addition, because measuring metacognition in the general population is not dependent on randomization, order of measures, or even participant sample characteristics (as evidenced by the wide range of results within age groupings), there is little risk of bias within the studies included in both reviews. Bias could result from participant response bias on the self-report questionnaires, but this concern is addressed by the comparison of on-line and off-line methods of measuring metacognition. The studies selected for both reviews are certainly subject to publication bias. However, because analysis of factor structure does not depend on specific thresholds of findings, and because correlational analysis between metacognitive measures and subscales is generally part of a larger statistical question, a substantial quantity of both non-significant and robust results was reported within and across studies. A funnel plot would help to further analyze publication bias, but the elevated heterogeneity, due to the wide range of results, renders funnel plot data unreliable (Terrin et al. 2003).

As stated throughout the analysis and discussion, the amount of heterogeneity found within the meta-analyses limits firm conclusions based on statistical analyses. This review was also limited to published studies that appeared in English. While we greatly appreciate the help of authors in providing some of these studies in an accessible format, we were unable to acquire all the inaccessible studies. In addition, the substantial volume of correlational data that had to be eliminated to prevent oversampling of participant populations is also a limitation. It is possible that an alternate hierarchy would obtain different results for the meta-analysis. The study tried to mitigate this by giving precedence to measures created specifically on the basis of a theory of metacognition and lesser status to measures designed for specific venues (e.g. the classroom or therapeutic setting). The results clearly revealed that the choice of instrument to measure metacognitive knowledge has a meaningful impact. Thus, a hierarchy with an alternative focus could plausibly find substantially different results. To explore this concern, a meta-analysis was run with the entirety of statistical results culled from the systematic review. This meta-analysis of all results provided pooled estimates very similar to those reported in Study 2.

Conclusion

Self-reports can be problematic for a variety of reasons, such as the effects of participant mood at the time the report is completed, social desirability bias, and central tendency bias with Likert scale responses. Furthermore, the correlations between participant self-reports and participants’ corresponding quantifiable behaviour are generally weak (Carver and Scheier 1981; Veenman 2005). Metacognitive self-reports are not exempt from these challenges, as shown by the finding that the self-reports analysed for this review cannot adequately measure the nuances of metacognitive behaviour. However, metacognitive self-reports can still be used purposefully in research. Current self-reports can provide a general overview of knowledge and regulation skills, and the relationships between subscales of self-reports and participant behaviour can be measured. Furthermore, the very act of completing a self-report requires metacognition and, as such, can give researchers insights into how metacognitive knowledge can differ from metacognitive behaviour.

The studies analysed in this review support the use of self-report to measure participants’ general metacognitive abilities in knowledge and regulation as two distinct, albeit relatively basic, metacognitive factors. However, metacognitive knowledge measured as a broad factor is not strongly related to behavior on metacognitive tasks. Both factors can be divided into subcomponents that work jointly to achieve a goal or complete a task. Self-reports cannot reveal the complex processes that occur at the subscale level; they do, however, seem able to correlate strongly with behavior when subscales are used. Nevertheless, data exploring the relationships between factors and components vary widely. This appears to be caused predominantly by the choice of instrument to measure knowledge, and secondarily by age and the choice of instrument to evaluate regulation. Thus, it is imperative that future research using self-reports systematically identify the purpose of the self-report and choose the report carefully based on that purpose. For example, if only a broad measure of knowledge and regulation is needed, then a variety of self-reports are effective. However, to evaluate the relationship between self-report and behavior, the method of self-report should align closely with the skills being measured by an experimental task. Alternatively, self-report may be used to further understand when, and in what type of participant, people are more accurate in predicting or understanding their own metacognitive behavior.

A challenge for researchers is to determine whether metacognitive capabilities affect the underlying structure of metacognition, and how the findings from this exploration can inform settings such as schools and therapeutic environments where metacognitive skills are essential. Metacognition can be taught. If, as one interpretation of the data suggests, weak metacognitive skills, as captured by self-report, function as a broad unidimensional construct, then teaching specific metacognitive components prior to academic instruction or mental health therapy may allow individuals to benefit more fully from both learning and therapeutic interventions. Future research should work towards establishing a framework of metacognition that can be used across settings to advance achievement, mental health, and well-being, and then define how self-reports are best used towards that purpose.

References

Akin, A., Abaci, R., & Cetin, B. (2007). The validity and reliability of the Turkish version of the metacognitive awareness inventory. Educational Sciences: Theory and Practice, 7(2), 671–678.

Akturk, A. O., & Sahin, I. (2011). Literature review on metacognition and its measurement. In Procedia - Social and Behavioral Sciences (Vol. 15, pp. 3731–3736). https://doi.org/10.1016/j.sbspro.2011.04.364.

Allen, B. A., & Armour-Thomas, E. (1993). Construct validation of metacognition. The Journal of Psychology, 127(2), 203–211. https://doi.org/10.1080/00223980.1993.9915555.

Altindağ, M., & Senemoğlu, N. (2013). Metacognitive skills scale. Hacettepe University Journal of Education, 28(1), 15–26.

Artelt, C. (2000). Wie prädiktiv sind retrospektive Selbstberichte über den Gebrauch von Lernstrategien für strategisches Lernen? [How predictive are retrospective self-reports about the use of learning strategies for strategic learning?] Zeitschrift für Pädagogische Psychologie, 14(2–3), 72–84. https://doi.org/10.1024//1010-0652.14.23.72.

Aydin, U., & Ubuz, B. (2010). Turkish version of the junior metacognitive awareness inventory: An exploratory and confirmatory factor analysis. Education and Science, 35(157), 32–47.

Bannert, M., & Mengelkamp, C. (2008). Assessment of metacognitive skills by means of instruction to think aloud and reflect when prompted. Does the verbalisation method affect learning? Metacognition and Learning, 3(1), 39–58. https://doi.org/10.1007/s11409-007-9009-6.

Beran, M. J. (2012). Foundations of metacognition. Oxford University Press. https://doi.org/10.1093/acprof:oso/9780199646739.001.0001.

Bonner, J. (1988). Implications of cognitive theory for instructional design: Revisited. Educational Communication and Technology, 36(1), 3–14. https://doi.org/10.1007/BF02770012.

Bong, M. (1997). Congruence of measurement specificity on relations between academic self-efficacy, effort, and achievement indexes. In AERA 1997.

Brown, A. (1978). Knowing when, where, and how to remember: A problem of metacognition. In R. Glaser (Ed.), Advances in instructional psychology (Vol. 1, pp. 77–165). Mahwah, NJ: Erlbaum.

Brown, A. (1987). Metacognition, executive control, self-regulation, and other more mysterious mechanisms. In F. E. Weinert & R. H. Kluwe (Eds.), Metacognition, motivation and understanding (pp. 65–116). Mahwah, NJ: Erlbaum.

Bryce, D., Whitebread, D., & Szűcs, D. (2015). The relationships among executive functions, metacognitive skills and educational achievement in 5 and 7 year-old children. Metacognition and Learning, 10(2), 181–198. https://doi.org/10.1007/s11409-014-9120-4.

Carver, C. S., & Scheier, M. F. (1981). Relationship between self-report and behavior. In Attention and self-regulation (SSSP Springer Series in Social Psychology, pp. 269–285). New York: Springer.

Çetinkaya, P., & Erktin, E. (2002). Assessment of metacognition and its relationship with reading comprehension achievement and aptitude. Bogazici University Journal of Education, 19(1), 1–11.

Chen, P. P. (2003). Exploring the accuracy and predictability of the self-efficacy beliefs of seventh-grade mathematics students. Learning and Individual Differences, 14(1), 79–92. https://doi.org/10.1016/j.lindif.2003.08.003.

Cooper, M. M., Sandi-Urena, S., & Stevens, R. (2008). Reliable multi method assessment of metacognition use in chemistry problem solving. Chemistry Education Research and Practice, 9(1), 18–24. https://doi.org/10.1039/b801287n.

R Core Team. (2018). R: A language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing. Retrieved from https://www.r-project.org/.

Cromley, J. G., & Azevedo, R. (2006). Self-report of reading comprehension strategies: What are we measuring? Metacognition and Learning, 1(3), 229–247. https://doi.org/10.1007/s11409-006-9002-5.

Dermitzaki, I. (2005). Preliminary investigation of relations between young students’ self-regulatory strategies and their metacognitive experiences. Psychological Reports, 97, 759–768.

Desoete, A. (2007). Electronic journal of research in Educational Psychology. Electronic Journal of Research in Educational Psychology, 5(3), 705–730.

Desoete, A. (2008). Multi-method assessment of metacognitive skills in elementary school children: How you test is what you get. Metacognition and Learning, 3(3), 189–206. https://doi.org/10.1007/s11409-008-9026-0.

Desoete, A. (2009). Metacognitive prediction and evaluation skills and mathematical learning in third-grade students. Educational Research and Evaluation, 15(5), 435–446. https://doi.org/10.1080/13803610903444485.

Desoete, A., Roeyers, H., & Buysse, A. (2001). Metacognition and mathematical problem solving in grade 3. Journal of Learning Disabilities, 34(5), 435–449.

Elshout, J. J., Veenman, M. V. J., & Van Hell, J. G. (1993). Using the computer as a help tool during learning by doing. Computers and Education, 21(1–2), 115–122. https://doi.org/10.1016/0360-1315(93)90054-M.

Favieri, A. G. (2013). General metacognitive strategies inventory (GMSI) and the metacognitive integrals strategies inventory (MISI). Electronic Journal of Research in Educational Psychology, 11(3), 831–850. https://doi.org/10.14204/ejrep.31.13067.

Flavell, J. H. (1979). Metacognition and cognitive monitoring: A new area of cognitive-developmental inquiry. American Psychologist, 34(10), 906–911. https://doi.org/10.1037/0003-066x.34.10.906.

Georghiades, P. (2004). From the general to the situated: Three decades of metacognition. International Journal of Science Education, 26(3), 365–383. https://doi.org/10.1080/0950069032000119401.

Hadwin, A. F., Winne, P. H., Stockley, D. B., Nesbit, J. C., & Woszczyna, C. (2001). Context moderates students’ self-reports about how they study. Journal of Educational Psychology, 93(3), 477–487. https://doi.org/10.1037/0022-0663.93.3.477.

Harrison, G. M., & Vallin, L. M. (2018). Evaluating the metacognitive awareness inventory using empirical factor-structure evidence. Metacognition and Learning, 13(1), 15–38. https://doi.org/10.1007/s11409-017-9176-z.

Hunter, J. E., & Schmidt, F. L. (2004). Methods of meta-analysis: Correcting error and bias in research findings (2nd ed.). Thousand Oaks, CA: Sage.

Immekus, J. C., & Imbrie, P. K. (2008). Dimensionality assessment using the full-information item bifactor analysis for graded response data: An illustration with the state metacognitive inventory. Educational and Psychological Measurement, 68(4), 695–709. https://doi.org/10.1177/0013164407313366.

Jacobse, A. E., & Harskamp, E. G. (2012). Towards efficient measurement of metacognition in mathematical problem solving. Metacognition and Learning, 7(2), 133–149. https://doi.org/10.1007/s11409-012-9088-x.

Kim, B., Zyromski, B., Mariani, M., Lee, S. M., & Carey, J. C. (2017). Establishing the factor structure of the 18-item version of the junior metacognitive awareness inventory. Measurement and Evaluation in Counseling and Development, 50(1–2), 48–57. https://doi.org/10.1080/07481756.2017.1326751.

Knapp, G., & Hartung, J. (2003). Improved tests for a random effects meta-regression with a single covariate. Statistics in Medicine, 22(17), 2693–2710. https://doi.org/10.1002/sim.1482.

Lai, E. R. (2011). Metacognition: A literature review research report. Pearson’s Research Reports, (April), 41. https://doi.org/10.2307/3069464.

Li, J., Zhang, B., Du, H., Zhu, Z., & Li, Y. M. (2015). Metacognitive planning: Development and validation of an online measure. Psychological Assessment, 27(1), 260–271. https://doi.org/10.1037/pas0000019.

Livingston, J. A. (1997). Metacognition: An overview. Psychology.

Magno, C. (2010). The role of metacognitive skills in developing critical thinking. Metacognition and Learning, 5(2), 137–156. https://doi.org/10.1007/s11409-010-9054-4.

Meijer, J., Sleegers, P., Elshout-Mohr, M., van Daalen-Kapteijns, M., Meeus, W., & Tempelaar, D. (2013). The development of a questionnaire on metacognition for students in higher education. Educational Research, 55(1), 31–52. https://doi.org/10.1080/00131881.2013.767024.

Merchie, E., & Van Keer, H. (2014). Learning from text in late elementary education: Comparing think-aloud protocols with self-reports. Procedia - Social and Behavioral Sciences, 112, 489–496. https://doi.org/10.1016/j.sbspro.2014.01.1193.

Minnaert, A., & Janssen, P. J. (1997). Bias in the assessment of regulation activities in studying at the level of higher education. European Journal of Psychological Assessment, 13(2), 99–108. https://doi.org/10.1027/1015-5759.13.2.99.

Moher, D., Liberati, A., Tetzlaff, J., Altman, D. G., Altman, D., Antes, G., et al. (2009). Preferred reporting items for systematic reviews and meta-analyses: The PRISMA statement. PLoS Medicine, 6(7). https://doi.org/10.1371/journal.pmed.1000097.

Muis, K. R., Winne, P. H., & Jamieson-Noel, D. (2007). Using a multitrait-multimethod analysis to examine conceptual similarities of three self-regulated learning inventories. British Journal of Educational Psychology, 77(1), 177–195. https://doi.org/10.1348/000709905X90876.

Ning, H. K. (2016). Examining heterogeneity in student metacognition: A factor mixture analysis. Learning and Individual Differences, 49, 373–377. https://doi.org/10.1016/j.lindif.2016.06.004.

Ning, H. K. (2017). The Bifactor model of the junior metacognitive awareness inventory (Jr. MAI). Current Psychology, 1–9. https://doi.org/10.1007/s12144-017-9619-3.

O’Neil, H. F., & Abedi, J. (1996). Reliability and validity of a state metacognitive inventory: Potential for alternative assessment. Journal of Educational Research, 89(4), 234–245. https://doi.org/10.1080/00220671.1996.9941208.

Ofodu, G. O., & Adepipe, T. H. (2011). Assessing ESL students’ awareness and application of metacognitive strategies in comprehending academic materials. Journal of Emerging Trends in Educational Research and Policy Studies (JETERAPS), 2(5), 343–346.

Paris, S. G., Cross, D. R., & Lipson, M. Y. (1984). Informed Strategies for Learning: A program to improve children’s reading awareness and comprehension. Journal of Educational Psychology, 76(6), 1239–1252. https://doi.org/10.1037/0022-0663.76.6.1239.

Pedone, R., Semerari, A., Riccardi, I., Procacci, M., Nicolo, G., & Carcione, A. (2017). Development of a self-report measure of metacognition: The metacognition self-assessment scale (MSAS) instrument description and factor structure. Clinical Neuropsychiatry, 14(3), 185–194.

Pena-Ayala, A., & Cardenas, L. (2015). Personal self-regulation, self-regulated learning and coping strategies, in university context with stress. In A. Peña-Ayala (Ed.), Metacognition: Fundaments, applications, and trends (Vol. 76, pp. 39–72). London: Springer. https://doi.org/10.1007/978-3-319-11062-2_9.

Perry, J., Lundie, D., & Golder, G. (2018). Metacognition in schools: What does the literature suggest about the effectiveness of teaching metacognition in schools? Educational Review, 1–18. https://doi.org/10.1080/00131911.2018.1441127.

Peterson, P. L., Swing, S. R., Braverman, M. T., & Buss, R. R. (1982). Students’ aptitudes and their reports of cognitive processes during direct instruction. Journal of Educational Psychology, 74(4), 535–547. https://doi.org/10.1037/0022-0663.74.4.535.

Porumb, I., & Manasia, L. (2015). A clusterial conceptualization of metacognization in students. In O. Clipa & G. Cramariuc (Eds.), Educatia in Societatea Contemporana. Aplicatii (pp. 33–44). London: Lumen Publishing House.

Pour, A. V., & Ghanizadeh, A. (2017). Validating the Persian version of metacognitive awareness inventory and scrutinizing the role of its components in IELTS academic reading achievement. Modern Journal of Language Teaching Methods, 7(3), 46–63.

Saraç, S., & Karakelle, S. (2012). On-line and off-line assessment of metacognition improving metacognitive monitoring accuracy in the classroom. International Electronic Journal of Elementary Education, 4(2), 301–315.

Schellings, G. (2011). Applying learning strategy questionnaires: Problems and possibilities. Metacognition and Learning, 6(2), 91–109. https://doi.org/10.1007/s11409-011-9069-5.

Schellings, G. L. M., Van Hout-Wolters, B. H. A. M., Veenman, M. V. J., & Meijer, J. (2013). Assessing metacognitive activities: The in-depth comparison of a task-specific questionnaire with think-aloud protocols. European Journal of Psychology of Education, 28(3), 963–990. https://doi.org/10.1007/s10212-012-0149-y.

Schraw, G. (1994). The effect of metacognitive knowledge on local and global monitoring. Contemporary Educational Psychology, 19, 143–154.

Schraw, G. (1998). On the development of adult metacognition. In C. M. Smith & T. Pourchot (Eds.), Adult learning and development: Perspectives from educational psychology (pp. 89–106). Mahwah, NJ: Erlbaum.

Schraw, G., & Dennison, R. S. (1994). Assessing metacognitive awareness. Contemporary Educational Psychology, 19(4), 460–475. https://doi.org/10.1006/ceps.1994.1033.

Schraw, G., & Moshman, D. (1995). Metacognitive theories. Educational Psychology Review, 7(4), 351–371. https://doi.org/10.1007/BF02212307.