Abstract

In this paper, an artificial neural network (ANN) model was developed to predict the downhole density of oil-based muds under high-temperature, high-pressure conditions. Six performance metrics, namely goodness of fit (R2), mean square error (MSE), mean absolute error (MAE), mean absolute percentage error (MAPE), sum of squares error (SSE) and root mean square error (RMSE), were used to assess the developed model. Overall, the model achieved an MSE of 0.000477, an MAE of 0.017, an R2 of 0.9999, a MAPE of 0.127, an RMSE of 0.022 and an SSE of 0.056, and all its predictions were in excellent agreement with the measured results. To assess the generalization capability of the model, a new dataset of 34 data points that was not part of the training process was then used. On this unseen data, the model achieved an R2 of 0.9997, an MSE of 0.0159, an MAE of 0.101, an RMSE of 0.126, an SSE of 0.54 and a MAPE of 0.7. The ANN model developed in this study also performed better than existing AI-based models. The sensitivity analysis shows that the initial mud density has the greatest impact on the downhole mud density. This modelling technique and the resulting model represent a significant step towards fully automated downhole mud density estimation. Furthermore, the method eliminates the need for surface measurement equipment while representing the downhole mud density at any given pressure and temperature more accurately.


Introduction

The oil and gas industry is no longer finding oil in friendly terrains; those discoveries were made a century ago, and, by implication, the industry no longer drills and completes wells the way it did in the past century (Sidle 2015). It is also safe to say that the industry will look different again in the next decade. The most probable reasons for this technological and methodological shift are (1) declining reserves in conventional fields and (2) a global increase in demand for oil and gas products. The new environments where oil and gas are found are more often than not hostile, and the technologies used to probe formations in these terrains are still being fine-tuned to get the best out of them. As a result, the oil and gas community is constantly searching for ways to tackle the new challenges these terrains present, moving away from old approaches and towards technologies that improve the performance, productivity and efficiency of drilling operations. Among the myriad challenges the driller grapples with while drilling ahead is maintaining optimal mud performance downhole. This challenge is especially acute in unforgiving environments such as high-temperature, high-pressure (HTHP) wells and deepwater wells. In general, HTHP wells are wells whose static bottom-hole temperature ranges between 300 °F and 500 °F and whose expected shut-in pressure ranges between 10,000 psi and 25,000 psi (Conn and Roy 2004). A good understanding of the downhole environment, and of how it affects mud properties, gives the driller a better grip on wellbore pressure control (Ahmadi et al. 2018; Erge et al. 2016; Peng et al. 2016; Kutasov and Eppelbaum 2015).

A basic property of drilling muds that the drilling fluid engineer must keep within allowable limits is the mud density. The drilling mud provides hydrostatic pressure, as a function of vertical depth, to counter the pore pressure in each section of the formation being drilled. It thereby provides assurance that no kicks, continuous mud losses into fractures or wellbore instability events occur during the drilling operation (Aird 2019). Maintaining this indispensable property becomes harder at the high temperatures and pressures of the formations being drilled: while high downhole pressure increases the drilling fluid density, increased temperature reduces it (An et al. 2015; Hussein and Amin 2010; Babu 1996; McMordie Jr et al. 1982). If mud density is not kept at its optimum, especially in highly porous and permeable formations, the likely consequences are wellbore pressure management problems that ultimately give rise to non-productive time (Aird 2019). Hence, if drillers are to take charge of what happens downhole and make informed decisions about the safety of the rig and rig crew, they need accurate, measured and timely formation insight along every foot drilled. Proper planning and execution of drilling operations, particularly for HTHP wells, therefore require complete and accurate knowledge of how the drilling fluid density behaves as pressure and temperature change during drilling (Ahad et al. 2019). Such information can be obtained accurately only by measuring the drilling fluid density at the desired pressures and temperatures. This, however, requires special equipment, and the procedure takes many man-hours.

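
To make the stakes concrete, the short sketch that follows uses the standard field-unit relation for hydrostatic pressure, P (psi) = 0.052 × mud weight (lb/gal) × vertical depth (ft), and assumes a constant-density mud column, which is a simplification. The densities are hypothetical: 12.0 lb/gal measured at the surface versus an assumed 11.6 lb/gal downhole at 15,000 ft.

```python
# Field-unit hydrostatic pressure: P [psi] = 0.052 * mud weight [lb/gal] * depth [ft].
# 0.052 psi/ft per lb/gal is the standard oilfield conversion factor.
PSI_PER_FT_PER_PPG = 0.052


def hydrostatic_pressure_psi(mud_weight_ppg: float, tvd_ft: float) -> float:
    """Hydrostatic pressure of a mud column of constant density (simplifying assumption)."""
    return PSI_PER_FT_PER_PPG * mud_weight_ppg * tvd_ft


# Hypothetical case: surface-measured density vs an assumed lower downhole density
surface_based = hydrostatic_pressure_psi(12.0, 15_000)
downhole_based = hydrostatic_pressure_psi(11.6, 15_000)
print(f"{surface_based:.0f} psi vs {downhole_based:.0f} psi "
      f"(overestimate of {surface_based - downhole_based:.0f} psi)")
```

Even a 0.4 lb/gal difference between surface and downhole density shifts the computed bottom-hole pressure by several hundred psi in this example, which is why the density actually present downhole matters for pressure control.
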
The most practical way to determine this property downhole is therefore to build a high-fidelity model that accounts for the effects of downhole temperature and pressure on mud density. Numerous models exist in the literature. Some of them estimate how mud density varies with pressure and downhole temperature without considering the initial density of the mud. The contributions of previous researchers to predictive models in this area merit a historical perspective; a snapshot of these models is presented in Table 1. Tried and true as some of the models in Table 1 have proven to be, there is an ongoing effort to retire them because of the large errors associated with their predictions. This is another challenge, but it also brings opportunities, one of which is the development of new technologies to tackle it. The most current, and by far the most pervasive, technology with crossover appeal across industries is artificial intelligence (AI). The reasons are easy to see: AI-based models offer numerous advantages. According to Bahiraei et al. (2019), AI-based models can learn from patterns and, once trained, generalize and estimate at great speed; they are fault tolerant in that they can handle noisy data; and they can capture relationships among nonlinear parameters. A summary of research efforts that use AI techniques to model downhole mud density is presented in Table 2.

Materials and methods

Database sources and range of input and output variables

The dataset used to develop the model in this study was obtained from the work of McMordie et al. (1982) and consists of 117 data points. It has three input parameters, namely downhole pressure, downhole temperature and initial mud density, and one output parameter, the final (downhole) mud density. The minimum and maximum values of each parameter, together with the units of measurement, are shown in Table 3.

Table 4 describes the collected data statistically, giving the mean, standard deviation and range of each input and output variable.

Overview of artificial neural network

Artificial neural network (ANN) is an artificial intelligence technique inspired by the neural networks of the human nervous system. Simply put, an ANN is a set of interconnected simulated neurons, each receiving several input signals weighted by synaptic weights. Each neuron sums the products of its inputs and their corresponding connection weights (w), passes the sum through a transfer (activation) function to obtain the output of that layer, and feeds this output as input to the next layer. A bias term is added to the summation to raise or lower the input received by the activation function. The activation function applies a nonlinear transformation to its input, which enables the network to learn and perform more complex tasks. The general relationship between input and output in an ANN model with one hidden layer can be expressed as shown in Eq. 1 (Fazeli et al. 2013).
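
\[ y_k = f_{\text{o}}\left[\sum_{j} w_{kj}\, f_{\text{h}}\left(\sum_{i} w_{ji}\, x_i + b_j\right) + b_k\right] \tag{1} \]
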
where x is the input vector; \(w_{ji}\) denotes the connection weight from the ith neuron in the input layer to the jth neuron in the hidden layer; \(b_j\) represents the threshold value (bias) of the jth hidden neuron; \(w_{kj}\) stands for the connection weight from the jth neuron in the hidden layer to the kth neuron in the output layer; \(b_k\) refers to the bias of the kth output neuron; and \(f_{\text{h}}\) and \(f_{\text{o}}\) are the activation functions of the hidden and output neurons, respectively. For brevity, comprehensive details of the ANN methodology are not presented here; the interested reader is referred to Ghaffari et al. (2006), Jorjani et al. (2008) and Mekanik et al. (2013) for more elaborate treatments.
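
Eq. 1 can be sketched in a few lines of plain Python for a single hidden layer. This is an illustrative implementation, not the MATLAB code used in this work: the function and argument names are hypothetical, the default activations (tanh in the hidden layer, linear at the output) mirror the tansig/purelin choice discussed for Eq. 2, and the weights in the usage line are made up.

```python
import math
from typing import Callable, List, Sequence

Activation = Callable[[float], float]


def forward(
    x: Sequence[float],
    w_ji: Sequence[Sequence[float]],  # hidden weights: one row per hidden neuron j
    b_j: Sequence[float],             # hidden biases
    w_kj: Sequence[Sequence[float]],  # output weights: one row per output neuron k
    b_k: Sequence[float],             # output biases
    f_h: Activation = math.tanh,      # hidden activation (tanh, equivalent to tansig)
    f_o: Activation = lambda n: n,    # output activation (linear, like purelin)
) -> List[float]:
    """One-hidden-layer forward pass following Eq. 1."""
    # Hidden layer: weighted sum of the inputs plus bias, passed through f_h
    hidden = [f_h(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(w_ji, b_j)]
    # Output layer: weighted sum of the hidden outputs plus bias, passed through f_o
    return [f_o(sum(w * h for w, h in zip(row, hidden)) + b) for row, b in zip(w_kj, b_k)]


# Toy 2-1-1 network with made-up weights (not the weights of the paper's model)
y = forward([1.0, 2.0], w_ji=[[0.5, 0.0]], b_j=[0.0], w_kj=[[2.0]], b_k=[1.0])
print(y)
```
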

Implementation of the artificial neural network

In this paper, the Neural Network Toolbox of MATLAB R2015a was used to predict the downhole density of oil-based muds in HTHP wells. The settings chosen for the ANN model are presented in Table 5. In MATLAB, the dataset was partitioned into three sets: a training set (60%), a test set (20%) and a validation set (20%). The training data are used to adjust the network weights, the validation data are used to monitor the generalization of the network during training, and the test data are used to examine the network once training is complete. Training usually stops when a preset error index (such as the mean square error, MSE) is reached, when the validation error stops improving, or when the number of epochs reaches the maximum, which defaults to 1000. In this study, the maximum number of epochs was kept at 1000.
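
A random 60/20/20 partition of the 117 data points can be sketched as follows. This is a plain-Python stand-in for MATLAB's data division, not a reproduction of it: the function name, rounding rule and seed are assumptions, so MATLAB's subset sizes may differ by a point or so.

```python
import random
from typing import List, Tuple


def split_indices(n: int, train: float = 0.6, val: float = 0.2,
                  seed: int = 0) -> Tuple[List[int], List[int], List[int]]:
    """Randomly partition indices 0..n-1 into training, validation and test subsets."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)  # reproducible shuffle
    n_train = round(train * n)
    n_val = round(val * n)
    # Whatever remains (about 20%) becomes the test set
    return idx[:n_train], idx[n_train:n_train + n_val], idx[n_train + n_val:]


train_idx, val_idx, test_idx = split_indices(117)
print(len(train_idx), len(val_idx), len(test_idx))
```

With 117 points this rounding gives 70 training, 23 validation and 24 test points.
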

Performance of the ANN model

This section discusses the training, testing and validation performance of the candidate network architectures. Because prediction is the primary objective of a trained ANN, the performance of a network on the test data was taken as the yardstick for selecting the best architecture. The number of neurons in the hidden layer influences the generalization ability of an ANN model, so a trial-and-error approach was used to select the optimum number of hidden neurons. To this end, a series of topologies was examined in which the number of hidden neurons was varied from 1 to 20. The mean square error (MSE) was used as the error function, and the optimum topology was chosen on the basis of the minimum testing error. Each topology was trained 25 times to avoid spurious results caused by the random initialization of the weights. After repeated trials, a network with five hidden neurons gave the best performance, with a validation MSE of 8.4 × 10⁻⁴. The optimal architecture of the ANN is shown in Fig. 1.
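
The selection procedure can be sketched as a search loop. Here `train_and_test` is a hypothetical callback standing in for the MATLAB training run; it is assumed to return the test-set MSE of a network trained from a seeded random initialization. The sketch keeps the best of the 25 runs for each topology, which is one reading of the procedure; averaging the runs is a common, more conservative alternative. The toy error function in the usage lines is made up.

```python
from typing import Callable, Tuple


def select_hidden_neurons(
    train_and_test: Callable[[int, int], float],
    max_neurons: int = 20,
    repeats: int = 25,
) -> Tuple[int, float]:
    """Return (number of hidden neurons, test MSE) of the best topology found."""
    best_n, best_mse = 0, float("inf")
    for n_hidden in range(1, max_neurons + 1):
        # Retrain with several seeds to reduce the influence of random weight
        # initialization; this sketch keeps the lowest test MSE over the runs.
        mse = min(train_and_test(n_hidden, seed) for seed in range(repeats))
        if mse < best_mse:
            best_n, best_mse = n_hidden, mse
    return best_n, best_mse


# Toy stand-in for real training (hypothetical): error is lowest at 5 hidden neurons
toy = lambda n_hidden, seed: abs(n_hidden - 5) * 1e-3 + 1e-3 + seed * 1e-6
print(select_hidden_neurons(toy))
```
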

For this model, training stopped at 130 epochs for the 3-5-1 architecture, with a validation MSE of 8.4 × 10⁻⁴. The 3-5-1 architecture is therefore considered the best network for the present problem because of its superior prediction capability. Figure 2 shows scatter plots of the ANN-predicted downhole density of oil-based muds versus the experimental values for the training, testing and validation sets. The predictions fit the actual values closely for all three sets, as shown by correlation coefficients (R) of 0.99998, 0.99995 and 0.99995 for the training, testing and validation data, respectively.

The model generated by applying the Levenberg–Marquardt (LM) algorithm is given in Eq. 2.

Equation 2 represents the trained ANN model in MATLAB, correlating the three input parameters with the final downhole mud density. Here, ‘purelin’ and ‘tansig’ are MATLAB transfer functions that calculate a layer’s output from its net input. Purelin is a linear transfer function, purelin(n) = n, whereas tansig is the hyperbolic tangent sigmoid transfer function, which is mathematically equivalent to ‘tanh’. Tansig runs faster than tanh in MATLAB, which is why it is used in neural networks. The tansig relation is defined by Eq. 3.

\[ \text{tansig}(n) = \frac{2}{1 + e^{-2n}} - 1 \tag{3} \]

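As a quick numerical check of Eq. 3 and of its stated equivalence with tanh, the following Python sketch re-implements MATLAB-style tansig and purelin for illustration (this is not MATLAB's own code). The guard for very negative inputs is an addition that avoids floating-point overflow in `math.exp`; it does not change the result, because the function has already saturated at −1 there.

```python
import math


def tansig(n: float) -> float:
    """Hyperbolic tangent sigmoid, Eq. 3: 2 / (1 + exp(-2n)) - 1."""
    if n < -350.0:
        # exp(-2n) would overflow a float here; the function has saturated at -1
        return -1.0
    return 2.0 / (1.0 + math.exp(-2.0 * n)) - 1.0


def purelin(n: float) -> float:
    """Linear transfer function: purelin(n) = n."""
    return n


# tansig agrees with tanh up to floating-point rounding
points = (-400.0, -3.0, -0.5, 0.0, 0.5, 3.0, 400.0)
max_diff = max(abs(tansig(n) - math.tanh(n)) for n in points)
print(max_diff)
```
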
IW and LW are the weights of the connections from the input layer to the hidden layer and from the hidden layer to the output layer, respectively. In Eq. 2, x_j is the value of the jth input variable for a given data point, the input variables being downhole pressure, downhole temperature and initial mud density; N is the number of hidden neurons (five in this case); j indexes the input variables, of which there are three; b1 is the bias of the hidden layer; and b2 is the bias of the output layer. The weights and biases of the developed empirical correlation (Eq. 2) are listed in Table 6 and are used to predict the downhole mud density.

For example, when the downhole mud density is predicted from downhole pressure, downhole temperature and initial mud density, the weights in W1 are taken at j = 1 for downhole pressure, j = 2 for downhole temperature and j = 3 for initial mud density; correspondingly, x1 is the downhole pressure, x2 the downhole temperature and x3 the initial mud density. For the first hidden neuron (i = 1), the term \(\sum\nolimits_{j = 1}^{3} w1_{i,j} x_{j}\) is calculated from Table 6 as \(\sum\nolimits_{j = 1}^{3} w1_{1,j} x_{j} = w1_{1,1} x_{1} + w1_{1,2} x_{2} + w1_{1,3} x_{3}\), where w1_{1,1}, w1_{1,2} and w1_{1,3} are −2.33494, 1.6557 and 3.604944, respectively. This is repeated for each of the five hidden-neuron rows of the matrix, using the corresponding values from the table. Figure 3 compares the actual values with the output predicted by the developed neural network model.
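
The term above can be evaluated as in the following sketch, which uses the three first-row weights quoted in the text. One assumption matters for replication: MATLAB's feedforward networks apply `mapminmax` scaling to inputs and targets by default, so if the published weights were obtained with that default, raw pressures, temperatures and densities must first be scaled to [−1, 1] using the training ranges, and the network output must be scaled back. The paper does not state whether this was done. The ranges and operating point in the sketch are hypothetical (not the Table 3 values), and the bias is omitted because the term excludes it.

```python
# First-row input weights w1_{1,j} quoted in the text (pressure, temperature, initial density)
W1_ROW1 = (-2.33494, 1.6557, 3.604944)


def mapminmax(x: float, x_min: float, x_max: float,
              y_min: float = -1.0, y_max: float = 1.0) -> float:
    """Scale x linearly from [x_min, x_max] to [y_min, y_max] (mapminmax formula)."""
    return (y_max - y_min) * (x - x_min) / (x_max - x_min) + y_min


def weighted_sum(weights, inputs) -> float:
    """The term sum_j w1_{i,j} x_j for one hidden neuron i (bias not included)."""
    return sum(w * x for w, x in zip(weights, inputs))


# HYPOTHETICAL training ranges and operating point, for illustration only
ranges = [(0.0, 20_000.0), (70.0, 400.0), (7.0, 18.0)]  # psig, degF, lb/gal
raw = [10_000.0, 250.0, 12.0]
x = [mapminmax(v, lo, hi) for v, (lo, hi) in zip(raw, ranges)]
term = weighted_sum(W1_ROW1, x)
print(x, term)
```
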

Figure 3 shows that the ANN output agrees well with the experimental data. To quantify how well the model's predictions match the actual values, the performance metrics R2, MSE, RMSE and SSE are used; they are summarized in Table 7.

The assessment in Table 7 is based on the testing data only. A combination of low MSE, SSE and RMSE values with a high R2 value (close to 1) indicates a good model.
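
The metrics named above, together with the MAE, MAPE and correlation coefficient R reported elsewhere in the paper, can be computed as in the following sketch. The paper does not give its formulas, so two conventions here are assumptions: R2 is computed as the coefficient of determination, 1 − SSE/SST, and MAPE is expressed in percent. The sample densities are made up.

```python
import math
from typing import Dict, Sequence


def regression_metrics(actual: Sequence[float], predicted: Sequence[float]) -> Dict[str, float]:
    """Error and goodness-of-fit metrics for paired actual/predicted values."""
    n = len(actual)
    errors = [a - p for a, p in zip(actual, predicted)]
    sse = sum(e * e for e in errors)                 # sum of squares error
    mse = sse / n                                    # mean square error
    mae = sum(abs(e) for e in errors) / n            # mean absolute error
    mape = 100.0 * sum(abs(e / a) for e, a in zip(errors, actual)) / n  # percent
    mean_a = sum(actual) / n
    mean_p = sum(predicted) / n
    sst = sum((a - mean_a) ** 2 for a in actual)     # total sum of squares
    cov = sum((a - mean_a) * (p - mean_p) for a, p in zip(actual, predicted))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    return {
        "SSE": sse, "MSE": mse, "RMSE": math.sqrt(mse), "MAE": mae, "MAPE": mape,
        "R2": 1.0 - sse / sst,                       # coefficient of determination
        "R": cov / math.sqrt(sst * var_p),           # Pearson correlation coefficient
    }


# Made-up densities (lb/gal) purely to exercise the function
m = regression_metrics([10.0, 12.0, 14.0], [10.1, 11.9, 14.2])
print({k: round(v, 4) for k, v in m.items()})
```
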

Relative importance of independent variables in the ANN model

Every model is only an approximate representation of the system under study, and the debate about the opacity of AI-based models persists, so it is vital to learn about the information hidden in the data as extracted by the modelling technique used. Sensitivity analysis varies the input variables of a model and assesses the associated changes in the model output; it is particularly useful for identifying weak points of the model (Lawson and Marion 2008). Here, sensitivity analysis is used to explain the degree to which each input variable contributes to the network. The contribution of each input variable to the prediction of the dependent variable is referred to as the relative importance of that variable. Many methods for calculating the relative importance of input variables exist in the literature, including Garson’s algorithm, the connection weights algorithm, partial derivatives and Lek’s profile method. The connection weights algorithm was chosen for this study because Olden et al. (2004), comparing different techniques for assessing input variable contributions in ANNs, found it to be the least biased; this finding was corroborated by Watts and Worner (2008). The connection weights algorithm proposed by Olden and Jackson (2002) calculates, for each input neuron, the sum of the products of the final weights of the connections from that input neuron to the hidden neurons and the weights of the connections from the hidden neurons to the output neuron. The input-to-hidden connection weights are presented in columns 2–4 of Table 8, and the hidden-to-output connection weights in column 5 of Table 8. The relative importance of a given input variable is defined in Eq. 4.

\[ RI_x = \sum_{y=1}^{m} w_{xy}\, w_{yz} \tag{4} \]

where \(RI_x\) is the relative importance of input variable x; \(\mathop \sum \nolimits_{y = 1}^{m} w_{xy} w_{yz}\) is the sum of the products of the final weights of the connections from input neuron x to the hidden neurons and from the hidden neurons to the output neuron; y indexes the hidden neurons, m is the total number of hidden neurons, and z denotes the output neuron. The sums of the products of the connection weights and the resulting ranks of the input variables are presented in Table 9.
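
Eq. 4 and the ranking rule described in the next paragraph can be sketched as follows. The weights are made up for illustration (they are not the Table 8 values), and the layout, one row per input with one column per hidden neuron, is an assumption; a table whose rows are hidden neurons must be transposed first.

```python
from typing import List, Sequence, Tuple


def connection_weights(w_in_hidden: Sequence[Sequence[float]],
                       w_hidden_out: Sequence[float]) -> List[float]:
    """Eq. 4: RI_x = sum over hidden neurons y of w_xy * w_yz, one value per input x.

    w_in_hidden[x][y] is the weight from input x to hidden neuron y;
    w_hidden_out[y] is the weight from hidden neuron y to the single output z.
    """
    return [sum(w_xy * w_yz for w_xy, w_yz in zip(row, w_hidden_out)) for row in w_in_hidden]


def rank_inputs(names: Sequence[str], ri: Sequence[float]) -> List[Tuple[str, float]]:
    """Rank inputs by |RI| (importance); the sign of RI gives the direction of the effect."""
    return sorted(zip(names, ri), key=lambda item: abs(item[1]), reverse=True)


# Made-up weights for 3 inputs and 2 hidden neurons (not the Table 8 values)
ri = connection_weights([[0.5, -1.0], [-2.0, 0.5], [3.0, 1.0]], [1.0, 2.0])
print(rank_inputs(["x1", "x2", "x3"], ri))
```
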

Figure 4 shows the relative importance of the input parameters. A large sensitivity to a parameter suggests that the system’s output can change drastically with a small variation in that parameter, and vice versa. On this basis, the initial mud density has the greatest impact on the downhole mud density, followed by the downhole temperature and then the downhole pressure. In Fig. 4, however, the relative importance of downhole temperature is negative. In the connection weights algorithm, absolute values are used to rank the relative importance of the input variables, while the sign (positive or negative) indicates the direction in which each input affects the output: a positive sign indicates that increasing the input is likely to increase the output, and a negative sign indicates that increasing the input is likely to decrease it. Figure 4 therefore indicates that increases in initial mud density and downhole pressure lead to increased downhole mud density, whereas increasing the downhole temperature tends to decrease the downhole mud density. These findings agree with the literature, which reports that pressure increases and temperature reduces drilling fluid density (An et al. 2015; McMordie Jr et al. 1982).

Comparison of ANN model’s performance with existing AI models

Several recent studies have used artificial intelligence to predict the density of oil-based muds. Table 10 lists some of these studies and compares their results with those of this study.

Table 10 shows that prediction accuracy differs significantly among the studies and that the model developed in this work outperforms all the other models while also being less complex, judging by the number of neurons in its hidden layer. Considering the accuracy indicators, the ANN model developed in this study is the most appropriate for predicting downhole mud density owing to its low MAE and MAPE and high R2 compared with the other models.

Comparison of the generalization capacity of the developed model with existing models

The usefulness of any model, irrespective of the modelling technique used, depends on how well it generalizes, that is, whether it predicts consistently when supplied with new data (Kronberger 2010). Using a new, independent dataset is considered the “gold standard” for evaluating the generalization ability of models (Alexander et al. 2015). Hence, the most convincing way to test a model is to use it to predict data that have no connection with the data used to estimate its parameters. Reusing the data from model development would give the erroneous impression that the model predicts better than it really can (Lawson and Marion 2008); independent data reduce to a minimum the possibility of a deceptively good match between model predictions and measured data. The oil-based mud density measurements of Peters et al. (1990) were therefore used as unseen data to test the oil-based mud density model: this dataset of 34 data points was fed to the ANN model to predict the downhole mud density. Table 11 lists several existing models for predicting the downhole density of drilling muds and their performance metrics on this dataset. Five statistical performance metrics (R2, MSE, MAE, MAPE and RMSE) were used to assess the generalization capacity of the developed model and the existing models.

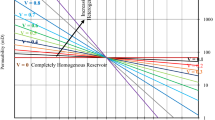

Considering the results in Table 11 against these accuracy indicators, the compositional model by Hoberock et al. (1982) predicted the unseen data best, with the lowest MSE, MAE, MAPE and RMSE and a high R2; the ANN model performed closest to Hoberock et al.’s model, as its accuracy indicators show. The model by Kutasov (1988) trails the ANN model in performance. In addition, as Fig. 5 makes clearer, the models by Politte (1985) and Sorelle et al. (1982) have similar predictive capabilities, since their predictions overlap. The results in Fig. 5 indicate that the ANN model has learned the nonlinear relationship between the input variables and the downhole mud density well.

Comparison of developed ANN model with an existing equation of state for liquid density prediction

There exists an equation of state for estimating liquid density as a function of temperature and pressure. Furbish (1997) puts forward the equation of state for liquid density as shown in Eq. 5:

where \(\rho_{\text{o}}\) is the initial density of the liquid, and \(\alpha\) and \(\beta\) are the local isobaric coefficient of thermal expansion and the local isothermal compressibility, respectively. T, To, P and Po are the final and standard temperatures and pressures of the liquid, respectively. This equation of state does not require high computational overhead, but it may not yield accurate predictions, because the local isobaric coefficient of thermal expansion and the local isothermal compressibility are not constants but functions of temperature and pressure. In this work, the values of the isobaric coefficient (α) and the isothermal compressibility (β) were taken from Zamora et al. (2000), who used 0.0002546 °F⁻¹ and 2.823 × 10⁻⁶ psi⁻¹, respectively, for oil-based mud. To assess the predictive capability of the equation of state for liquid density by Furbish (1997), it was applied to the dataset of Peters et al. (1990); its performance metrics are shown in the sixth row of Table 11. The EOS performs comparably to the ANN model, although the ANN model is slightly better. The performance metrics for the ANN model and the EOS, respectively, are R2 (0.9997, 0.9993), MSE (0.0159, 0.027), MAE (0.1, 0.139) and MAPE (0.7, 0.96).
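
The sketch that follows evaluates an equation of state of this kind with the Zamora et al. (2000) coefficients as defaults. It assumes the linearized form ρ = ρo[1 − α(T − To) + β(P − Po)], which is consistent with the variables defined above; if Eq. 5 has a different form (an exponential one, for instance), the function body must change accordingly. The reference conditions and the mud in the usage line are hypothetical.

```python
def eos_density(rho0: float, t: float, p: float, t0: float, p0: float,
                alpha: float = 0.0002546, beta: float = 2.823e-6) -> float:
    """Liquid density from an ASSUMED linearized equation of state.

    rho = rho0 * [1 - alpha * (T - T0) + beta * (P - P0)]
    alpha in 1/degF and beta in 1/psi (Zamora et al. 2000 values for OBM as defaults).
    """
    return rho0 * (1.0 - alpha * (t - t0) + beta * (p - p0))


# Hypothetical case: 12.0 lb/gal mud referenced to 70 degF and 0 psig,
# evaluated at 300 degF and 10,000 psig
print(round(eos_density(12.0, t=300.0, p=10_000.0, t0=70.0, p0=0.0), 4))
```

In this example, thermal expansion lowers the density by about 5.9% while compression raises it by about 2.8%, so the net effect is a lighter mud downhole.
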

Disparities between the developed ANN model and published ANN models

The ANN model developed in this study differs from the existing models in the following ways.

1. Reproducing the results of published research is one of the major challenges in developing models with artificial intelligence. It is rarely possible to re-implement published AI models, let alone rerun them, because the model details and simulation codes are either not presented in an understandable format or have not been made available. Beyond this, AI techniques such as the ANN used in this work are often labelled black-box models. The novelty of this work lies in opening the box: the weights and biases needed to replicate the model are presented. A close look at the ANN models of Osman and Aggour (2003), Adesina et al. (2015) and Rahmati and Tartar (2019) shows that the details needed to replicate them are not presented, which limits their application.

2. The sensitivity analysis used in this work makes the developed AI model explainable, unlike the other ANN models in the literature.

3. There are serious concerns about the ability of an AI model to generalize to situations not represented in the dataset used to train it. To the best of my knowledge, the models in the literature were not tested for their generalization ability on a new dataset, so it is difficult to ascertain how well they generalize in practice.

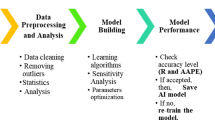

Design of a drilling process incorporating the developed ANN model for estimating downhole mud density

A synthetic drilling process, containing no confidential information, was designed to mimic the complexity of an actual field drilling process. The design replicates the life of a wellbore drilling process, including the necessary steps that occur during mud circulation. Its major parts are the acquisition of downhole data, the analysis of these data and their transmission to the ANN model for computing the downhole mud density. The design is illustrated in Fig. 6 and explained below.

The conventional parts of the design, such as the power generation, hoisting, rotary and well control systems, are well known and are not discussed here. Only the mud circulating system, where the ANN model is required, is discussed in detail. Its major components are the sensors for measuring downhole pressure and temperature and the transmission of their readings to the ANN model.

(1) The downhole sensors. The sensors for downhole temperature and pressure measurement would be placed in the logging-while-drilling (LWD) tool and should be of the differential pressure type. The basic sensing element should be designed to detect changes in wellbore pressure and temperature as the depth of the well increases. The differential pressure transducer would read these values and transmit them to the ANN model software installed on a computer, which would instantly process the readings, calculate the downhole density of the mud and transmit it to the surface panel at the driller’s console. This would save rig time and reduce the time spent manually testing the surface density of the mud in the mud tank, which may not represent the density of the mud downhole. The sensor data would, however, require some cleansing, filtering or analysis; bio-inspired algorithms would be developed for this purpose.

(2) The functionality of the developed ANN model in the design. Software based on the ANN model would be developed and installed on a computer that receives the filtered sensor data. The initial density of the mud, which is the constant input of the model, would be measured manually before drilling starts and fed into the model. The downhole temperature and pressure at any given time and depth would be transmitted to the model and the downhole density calculated. Because the sensor data would be streamed continuously, each increase or decrease in the downhole mud density would be referenced to the initial mud density, and the trend would be plotted against time and depth. If the density falls too low or rises too high, an alarm indicating low or high mud density would be triggered on the surface panel at the driller’s console. In this way, the downhole drilling fluid density, accounting for the temperature and pressure regimes in the wellbore, would be monitored, and measures to adjust the mud density could be taken when needed to assure safety and wellbore stability.
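
The monitoring logic described in item (2) can be sketched as a loop over streamed sensor samples. Everything here is hypothetical: `predict` stands in for the trained ANN (the toy linear function in the usage lines is not the paper's model), the sensor filtering step is not implemented, and the low and high alarm limits are placeholders an operator would choose.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator, Tuple

# predict(pressure_psig, temperature_degF, initial_density_ppg) -> downhole density (lb/gal)
Predictor = Callable[[float, float, float], float]


@dataclass
class DensityReading:
    time_s: float
    depth_ft: float
    density_ppg: float
    change_ppg: float  # difference from the initial (surface-measured) density
    alarm: str         # "LOW", "HIGH" or "" when within limits


def monitor(samples: Iterable[Tuple[float, float, float, float]],
            initial_density: float, predict: Predictor,
            low: float, high: float) -> Iterator[DensityReading]:
    """Turn streamed (time, depth, pressure, temperature) samples into density readings."""
    for time_s, depth_ft, pressure, temperature in samples:
        rho = predict(pressure, temperature, initial_density)
        alarm = "LOW" if rho < low else "HIGH" if rho > high else ""
        yield DensityReading(time_s, depth_ft, rho, rho - initial_density, alarm)


# Toy linear stand-in for the trained ANN (NOT the paper's model)
toy_predict = lambda p, t, rho0: rho0 + 1e-5 * p - 1e-3 * (t - 70.0)
stream = [(0.0, 1_000.0, 500.0, 80.0), (60.0, 15_000.0, 9_000.0, 400.0)]
for reading in monitor(stream, 12.0, toy_predict, low=11.8, high=12.5):
    print(reading)
```
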

Practical implication of findings

Although Hoberock et al.’s model performs well, a major drawback of this compositional model is the many man-hours needed to determine the volume fractions of oil, water, solids, chemicals, etc., required for the density computation. A complete compositional analysis of the mud is carried out with the mud retort test, which users describe as rigorous, lengthy and time-consuming, often taking more than an hour to complete (Salunda, online). With the ANN model, the man-hours spent on compositional analysis could be reduced and reallocated to other high value-added tasks. The ANN model developed in this work is therefore a valuable substitute for Hoberock et al.’s model, especially when downhole mud density values are needed in real time for critical decisions.

Conclusion

In this work, an artificial neural network model was developed to predict the downhole density of oil-based muds in wellbores. The objective was to use a nature-inspired algorithm (ANN) to develop a robust and accurate model of the downhole density of oil-based muds that is replicable and generalizes to new input data. The developed model is robust and reliable owing to its simplicity and accuracy for the application of interest. Moreover, unlike other AI models in the literature, it can be replicated, because the weights and biases required to reproduce it are provided. The prediction capability of the ANN model was compared with existing AI models and with other models for predicting OBM density. The outputs of the developed ANN model agree well with the corresponding experimental data, and the model gives more accurate estimates than existing AI models. Furthermore, the ANN model enables rapid prediction of the downhole density of oil-based muds in HTHP wells, unlike the time-consuming procedure associated with Hoberock et al.’s model.

Recommendation

While the industry generates ever-growing volumes of drilling data, and while it waits for oil prices to rise and costs to fall, it is imperative to use this period to leverage the cost-saving, value-adding capabilities of AI. This means developing high-fidelity models for other mud-related problems that affect mud density, such as barite sag in oil-based muds.

Abbreviations

- AI: Artificial intelligence
- ANN: Artificial neural network
- ANN-PSO: Artificial neural network-particle swarm optimization hybrid
- ESD: Equivalent static density
- GA-FIS: Genetic algorithm fuzzy intelligent system
- GP: Genetic programming
- HTHP: High-temperature, high-pressure
- lb/gal: Pounds per gallon
- MAE: Mean absolute error
- MAPE: Mean absolute percentage error
- MSE: Mean square error
- MWD: Measurement while drilling
- OBM: Oil-based mud
- P: Pressure (psig)
- PSO-ANN: Particle swarm optimization artificial neural network
- R²: Coefficient of determination (goodness of fit)
- RBF: Radial basis function
- RMSE: Root mean square error
- SBM: Synthetic-based mud
- SSE: Sum of squares error
- SVM: Support vector machine
- SVR: Support vector regression
- T: Temperature (°F)
- \(\rho_{OBM}\): Oil-based mud density
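Several of the abbreviations above are the error metrics used to assess the model. The following minimal sketch shows how they can be computed under the usual textbook definitions. It assumes errors are taken as predicted minus measured, MAPE is expressed in percent, and R² is the coefficient of determination 1 − SSE/SST rather than a squared correlation. The function name and sample values are hypothetical.

```python
import math


def regression_metrics(measured, predicted):
    """Return SSE, MSE, RMSE, MAE, MAPE (%) and R^2 for paired observations.

    The measured values must not all be equal (SST would be zero), and none
    may be zero (MAPE divides by them).
    """
    n = len(measured)
    if n == 0 or n != len(predicted):
        raise ValueError("need two non-empty sequences of equal length")
    errors = [p - m for m, p in zip(measured, predicted)]
    sse = sum(e * e for e in errors)                  # sum of squares error
    mse = sse / n                                     # mean square error
    rmse = math.sqrt(mse)                             # root mean square error
    mae = sum(abs(e) for e in errors) / n             # mean absolute error
    mape = 100.0 * sum(abs(e / m) for e, m in zip(errors, measured)) / n
    mean_m = sum(measured) / n
    sst = sum((m - mean_m) ** 2 for m in measured)    # total sum of squares
    r2 = 1.0 - sse / sst                              # coefficient of determination
    return {"SSE": sse, "MSE": mse, "RMSE": rmse, "MAE": mae, "MAPE": mape, "R2": r2}


# Hypothetical measured and predicted densities (lb/gal).
print(regression_metrics([10.0, 12.0, 14.0], [11.0, 12.0, 13.0]))
```

These definitions are consistent with the figures quoted in the abstract. The square root of the reported MSE of 0.000477 is about 0.022, which matches the reported RMSE. Likewise, the unseen-data SSE of 0.54 divided by its MSE of 0.0159 gives about 34, the number of unseen data points.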

References

Adesina FAS, Abiodun A, Anthony A, Olugbenga F (2015) Modelling the effect of temperature on environmentally safe oil based drilling mud using artificial neural network algorithm. Pet Coal J 57(1):60–70

Ahad MM, Mahour B, Shahbazi K (2019) Application of machine learning and fuzzy logic in drilling and estimating rock and fluid properties. In: 5th International conference on applied research in electrical, mechanical and mechatronics engineering

Ahmadi MA (2016) Toward reliable model for prediction of drilling fluid density at wellbore conditions: a LSSVM model. Neurocomput J 211:143–149. https://doi.org/10.1016/j.neucom.2016.01.106

Ahmadi MA, Shadizadeh SR, Shah K, Bahadori A (2018) An accurate model to predict drilling fluid density at wellbore conditions. Egypt J Pet 27(1):1–10. https://doi.org/10.1016/j.ejpe.2016.12.002

Aird P (2019) Deepwater pressure management. In: Deepwater drilling: well planning, design, engineering, operations, and technology application, pp 69–109. https://doi.org/10.1016/B978-0-08-102282-5.00003-X

Alexander DLJ, Tropsha A, Winkler DA (2015) Beware of R2: simple, unambiguous assessment of the prediction accuracy of QSAR and QSPR models. J Chem Inf Model 55(7):1316–1322

An J, Lee K, Choe J (2015) Well control simulation model of oil based muds for HPHT wells. In: Paper SPE 176093 presented at the SPE/IATMI Asia Pacific oil and gas conference and exhibition held in Nusa Dua, Bali, Indonesia from 20–22 October 2015. https://doi.org/10.2118/176093-MS

Babu DR (1996) Effects of P-ρ-T behavior of muds on static pressures during deep well drilling-part 2: static pressures. In: Paper SPE 27419, SPE Drilling and Completion, vol 11, no 2. https://doi.org/10.2118/27419-PA

Bahiraei M, Heshmatian S, Moayedi H (2019) Artificial intelligence in the field of nanofluids: a review on applications and potential future directions. Powder Technol 353:276–301. https://doi.org/10.1016/j.powtec.2019.05.034

Conn L, Roy S (2004) Fluid monitoring service raises bar in HTHP wells. Drill Contract 60:52–53

Demirdal B, Miska S, Takach N, Cunha JC (2007) Drilling fluids rheological and volumetric characterization under downhole conditions. In: Paper SPE 108111 presented at the 2007 SPE Latin American and Caribbean petroleum engineering conference held in Buenos Aires, Argentina, 15–18 April

Erge O, Ozbayoglu EM, Miska SZ, Yu M, Takach N, Saasen A, May R (2016) Equivalent circulating density modelling of yield power law fluids validated with CFD approach. J Pet Sci Eng 140:16–27

Fazeli H, Soleimani R, Ahmadi MA, Badrnezhad R, Mohammadi AH (2013) Experimental study and modelling of ultra-filtration of refinery effluents using a hybrid intelligent approach. J Energy Fuels 27(6):3523–3537

Furbish DJ (1997) Fluid physics in geology: an introduction to fluid motions on earth’s surface and within its crust. Oxford University Press, New York

Ghaffari A, Abdollahi H, Khoshayand MR, Soltani BI, Dadgar A, Rafiee-tehrani M (2006) Performance comparison of neural network training algorithms in modelling of bimodal drug delivery. Int J Pharm 327(1):126–138

Hemphill T, Isambourg P (2005) New model predicts oil, synthetic mud densities. Oil Gas J 103(16):56–58

Hoberock LL, Thomas DC, Nickens HV (1982) Here’s how compressibility and temperature affect bottom-hole mud pressure. Oil Gas J 80(12):159–164

Hussein AMO, Amin RAM (2010) Density measurement of vegetable and mineral based oil used in drilling fluids. In: Paper SPE 136974 presented at the 34th annual SPE international conference and exhibition held in Tinapa, Calabar, Nigeria, 31 July–7 August. https://doi.org/10.2118/136974-MS

Jorjani E, Chehreh CS, Mesroghli SH (2008) Application of artificial neural networks to predict chemical desulfurization of Tabas coal. J Fuel Technol 87(12):2727–2734

Kamari A, Gharagheizi F, Shokrollahi A, Arabloo M, Mohammadi AH (2017) Estimating the drilling fluid density in the mud technology: application in high temperature and high pressure petroleum wells. In: Mohammadi AH (ed) Heavy oil. Nova Science Publishers, Inc., Hauppauge, pp 285–295

Kårstad E, Aadnøy BS (1998) Density behaviour of drilling fluids during high pressure high temperature drilling operations. In: Paper SPE 47806 presented at SPE/IADC Asia Pacific drilling technology conference, Jakarta, Indonesia, September 7–9, pp 227–237. https://doi.org/10.2118/47806-MS

Kemp NP, Thomas DC, Atkinson G, Atkinson BL (1989) Density modeling for brines as a function of composition, temperature, and pressure. SPE Prod Eng 4:394–400

Kronberger G (2010) Symbolic regression for knowledge discovery: bloat, overfitting, and variable interaction networks. Ph.D. Dissertation. Technisch-Naturwissenschaftliche Fakultät, Johannes Kepler Universität, Austria

Kutasov IM (1988) Empirical correlation determines downhole mud density. Oil Gas J 86:61–63

Kutasov IM, Eppelbaum LV (2015) Wellbore and formation temperatures during drilling, cementing of casing and shut-in. In: Proceedings world geothermal congress 2015, Melbourne, Australia, 19–25 April 2015

Lawson D, Marion G (2008) An introduction to mathematical modelling. https://people.maths.bris.ac.uk/~madjl/course_text.pdf. Accessed 10 June 2019

McMordie Jr WC, Bland RG, Hauser JM (1982) Effect of temperature and pressure on the density of drilling fluids. In: Paper SPE 11114 presented at the 57th annual Fall Technical Conference and Exhibition of the Society of Petroleum Engineers of AIME held in New Orleans on September 26–29. https://doi.org/10.2118/11114-MS

Mekanik F, Imteaz M, Gato-Trinidad S, Elmahdi A (2013) Multiple regression and artificial neural network for long term rainfall forecasting using large scale climate modes. J Hydrol 503(2):11–21

Olden JD, Jackson DA (2002) Illuminating the “black box”: understanding variable contributions in artificial neural networks. J Ecol Model 154:135–150

Olden JD, Joy MK, Death RG (2004) An accurate comparison of methods for quantifying variable importance in artificial neural networks using simulated data. J Ecol Model 178:389–397

Osman EA, Aggour MA (2003) Determination of drilling mud density change with pressure and temperature made simple and accurate by ANN. In: Paper SPE 81422 presented at the SPE 13th middle east oil show and conference, Bahrain. https://doi.org/10.2118/81422-MS

Peng Q, Fan H, Zhou H, Liu J, Kang B, Jiang W, Gao Y, Fu S (2016) Drilling fluid density calculation model at high temperature high pressure. In: Paper OTC-26620-MS presented at the offshore technology conference Asia, 22–25 March, Kuala Lumpur, Malaysia, https://doi.org/10.4043/26620-MS

Peters EJ, Chenevert ME, Zhang C (1990) A model for predicting the density of oil-based muds at high pressures and temperatures. In: Paper SPE-18036-PA, SPE Drilling Engineering, vol 5, no 2. https://doi.org/10.2118/18036-PA

Politte MD (1985) Invert oil mud rheology as a function of temperature. In: Paper SPE 13458 presented at the SPE/IADC drilling conference, 5–8 March, New Orleans, Louisiana. https://doi.org/10.2118/13458-MS

Rahmati AS, Tatar A (2019) Application of radial basis function (RBF) neural networks to estimate oil field drilling fluid density at elevated pressures and temperatures. Oil Gas Sci Technol Rev IFP Energies Nouvelles. https://doi.org/10.2516/ogst/2019021

Salunda (online) Common problems with retort measurements. https://salunda.com/retort-measurements/. Accessed 07 May 2019

Sidle B (2015) Flexible, single skin completion concept meets well integrity, zonal isolation needs. J Petrol Technol 67(11):32

Sorelle RR, Jardiolin RA, Buckley P, Barrios JR (1982) Mathematical field model predicts downhole density changes in static drilling fluids. In: Paper SPE 11118 presented at SPE annual fall technical conference and exhibition, New Orleans, LA, September 26–29. https://doi.org/10.2118/11118-MS

Tatar A, Halali MA, Mohammadi AH (2016) On the estimation of the density of brine with an extensive range of different salts compositions and concentrations. J Thermodyn Catal. https://doi.org/10.4172/2160-7544.1000167

Tewari S, Dwivedi UD (2017) Development and testing of a NU-SVR based model for drilling mud density estimation of HPHT wells. In: Paper presented at the international conference on challenges and prospects of petroleum production and processing industries

Wang G, Pu XL, Tao HZ (2012) A support vector machine approach for the prediction of drilling fluid density at high temperature and high pressure. J Pet Sci Technol. https://doi.org/10.1080/10916466.2011.578095

Watts MJ, Worner SP (2008) Using artificial neural networks to determine the relative contribution of abiotic factors influencing the establishment of insect pest species. J Ecol Inf 3:64–74

Xu S, Li J, Wu J, Rong K, Wang G (2014) HTHP static mud density prediction model based on support vector machine. Drill Fluid Complet Fluid 31(3):28–31

Zamora M, Broussard PN, Stephens MP (2000) The top 10 mud related concerns in deepwater drilling operations. In: Paper SPE 59019 presented at the SPE international petroleum conference and exhibition in Mexico, Villahermosa, Mexico, 1–3 February

Zhou H, Niu X, Fan H, Wang G (2016) Effective calculation model of drilling fluids density and ESD for HTHP well while drilling. In: Paper IADC/SPE-180573-MS presented at the 2016 IADC/SPE Asia Pacific Drilling Technology Conference, Singapore. https://doi.org/10.2118/180573-MS

Acknowledgements

The authors thank the management of the University of Uyo for providing an enabling environment for this research.

Funding

No funding was provided for this research by any individual or corporate organization.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is distributed under the terms of the Creative Commons Attribution 4.0 International License (http://creativecommons.org/licenses/by/4.0/), which permits unrestricted use, distribution, and reproduction in any medium, provided you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons license, and indicate if changes were made.

About this article

Cite this article

Agwu, O.E., Akpabio, J.U. & Dosunmu, A. Artificial neural network model for predicting the density of oil-based muds in high-temperature, high-pressure wells. J Petrol Explor Prod Technol 10, 1081–1095 (2020). https://doi.org/10.1007/s13202-019-00802-6

DOI: https://doi.org/10.1007/s13202-019-00802-6