Abstract

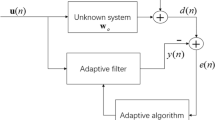

This correspondence presents adaptive polynomial filtering using the generalized variable step-size least mean \(p\)th power (GVSS-LMP) algorithm for nonlinear Volterra system identification in an \(\alpha \)-stable impulsive noise environment. Because impulsive noise lacks finite second-order statistics, we adopt the minimum error dispersion criterion as the metric for the estimation error, instead of the conventional minimum mean square error criterion. For the LMP algorithm to converge, the adaptive weights are updated with \(p\ge 1\) in the presence of impulsive noise characterized by \(1<\alpha <2\). In many practical applications, the autocorrelation matrix of the input signal has a larger eigenvalue spread for a nonlinear Volterra filter than for a linear finite impulse response filter. In such cases, a time-varying step-size is an appropriate way to mitigate the adverse effects of eigenvalue spread on the convergence of the LMP adaptive algorithm. In this paper, the GVSS updating criterion is proposed in combination with the LMP algorithm to identify slowly time-varying Volterra kernels under non-Gaussian \(\alpha \)-stable impulsive noise. Simulation results demonstrate that the proposed GVSS-LMP algorithm is more robust to impulsive noise than the conventional techniques, for both correlated and uncorrelated Gaussian input sequences, while keeping \(1<p<\alpha <2\). It also offers a flexible design for tackling the slowly time-varying nonlinear system identification problem.

References

T. Aboulnasr, K. Mayyas, A robust variable step-size LMS-type algorithm: analysis and simulations. IEEE Trans. Signal Process. 45(3), 631–639 (1997)

W.P. Ang, B. Farhang-Boroujeny, A new class of gradient adaptive step-size LMS algorithms. IEEE Trans. Signal Process. 49(4), 805–810 (2001)

A. Benveniste, Design of adaptive algorithms for the tracking of time varying systems. Int. J. Adapt. Control Signal Process. 1(1), 3–29 (1987)

J.B. Evans, P. Xue, B. Liu, Analysis and implementation of variable step size adaptive algorithms. IEEE Trans. Signal Process. 41(8), 2517–2535 (1993)

B. Farhang-Boroujeny, Adaptive Filters: Theory and Applications (Wiley, New York, 1998)

A. Feuer, E. Weinstein, Convergence analysis of LMS filters with uncorrelated Gaussian data. IEEE Trans. Acoust. Speech Signal Process. 33(1), 222–230 (1985)

S. Haykin, Adaptive Filter Theory, 4th edn. (Prentice Hall, New Jersey, 2002)

A.K. Kohli, D.K. Mehra, Tracking of time-varying channels using two-step LMS-type adaptive algorithm. IEEE Trans. Signal Process. 54(7), 2606–2615 (2006)

A.K. Kohli, A. Rai, Numeric variable forgetting factor RLS algorithm for second-order Volterra filtering. Circuits Syst. Signal Process. 32(1), 223–232 (2013)

R.H. Kwong, E.W. Johnston, A variable step size LMS algorithm. IEEE Trans. Signal Process. 40(7), 1633–1642 (1992)

V.J. Mathews, Adaptive polynomial filters. IEEE Signal Process. Mag. 8(3), 10–26 (1991)

V.J. Mathews, G.L. Sicuranza, Polynomial Signal Processing (Wiley, New York, 2000)

V.J. Mathews, Z. Xie, Stochastic gradient adaptive filters with gradient adaptive step size. IEEE Trans. Signal Process. 41(6), 2075–2087 (1993)

C.J. Masreliez, R.D. Martin, Robust Bayesian estimation for the linear model and robustifying the Kalman filter. IEEE Trans. Autom. Control. 22(3), 361–372 (1977)

P. Mertz, Model of impulsive noise for data transmission. IRE Trans. Commun. Syst. 9(2), 130–137 (1961)

R.D. Nowak, Nonlinear system identification. Circuits Syst. Signal Process. 21(1), 109–122 (2002)

T. Ogunfunmi, Adaptive Nonlinear System Identification: Volterra and Wiener Model Approaches (Springer, Berlin, 2007)

T. Ogunfunmi, T. Drullinger, Equalization of non-linear channels using a Volterra-based non-linear adaptive filter, in Proceedings of IEEE International Midwest Symposium on Circuits and Systems, Seoul, South Korea (2011), pp. 1–4

A. Papoulis, Probability Random Variables and Stochastic Processes (McGraw-Hill, New York, 1984)

G. Samorodnitsky, M.S. Taqqu, Stable Non-Gaussian Random Processes: Stochastic Models with Infinite Variances (Chapman and Hall, New York, 1994)

M. Schetzen, The Volterra and Wiener Theories of Nonlinear Systems (Wiley, New York, 1980)

M. Shao, C.L. Nikias, Signal processing with fractional lower order moments: stable processes and their applications. Proc. IEEE. 81(7), 986–1010 (1993)

J. Soo, K.K. Pang, A multi step size (MSS) frequency domain adaptive filter. IEEE Trans. Signal Process. 39(1), 115–121 (1991)

B.W. Stuck, Minimum error dispersion linear filtering of scalar symmetric stable processes. IEEE Trans. Autom. Control. 23(3), 507–509 (1978)

I.J. Umoh, T. Ogunfunmi, An adaptive nonlinear filter for system identification. EURASIP J. Adv. Signal Process. 2009, 1–7 (2009)

I.J. Umoh, T. Ogunfunmi, An affine projection-based algorithm for identification of nonlinear Hammerstein systems. Signal Process. 90(6), 2020–2030 (2010)

E. Walach, B. Widrow, The least mean fourth (LMF) adaptive algorithm and its family. IEEE Trans. Inf. Theory 30(2), 275–283 (1984)

B. Weng, K.E. Barner, IEEE Trans. Signal Process. 53, 2588 (2005)

D.T. Westwick, R.E. Kearney, Identification of Nonlinear Physiological Systems (Wiley, New York, 2003)

B. Widrow, J.M. McCool, M.G. Larimore, C.R. Johnson, Stationary and nonstationary learning characteristics of LMS adaptive filter. Proc. IEEE. 64(8), 1151–1162 (1976)

Appendix

The literature on the fixed step-size LMS (FSS-LMS) algorithm [8, 30] reflects a tradeoff between misadjustment and speed of adaptation: a small step-size produces small misadjustment, but at the cost of a longer convergence time. In a time-varying environment, the optimum step-size of the FSS-LMS algorithm strikes a balance between lag noise and gradient noise [5]. However, the optimum step-size cannot be determined a priori because the channel parameters are unknown. Therefore, in the KVSS-LMS algorithm [10], the variable step-size (VSS) is attuned using

In the KVSS-LMS algorithm, a large prediction error increases the step-size to provide fast tracking, while a small prediction error reduces the step-size to yield small misadjustment. The step-size thus rises and falls with the MSE, allowing the adaptive filter to track changes in the time-varying system while still producing a small steady-state error. It also reduces the sensitivity of the misadjustment to the level of nonstationarity. This approach is heuristically sound and has resulted in several ad hoc techniques, in which the convergence parameters are selected based on the magnitude of the estimation error, the polarity of successive samples of the estimation error, or the cross-correlation of the estimation error with the input data. However, the VSS-LMS algorithms are sensitive to noise disturbances [4, 23] in low signal-to-noise-ratio (SNR) environments, because their step-size update is obtained directly from the instantaneous error, which is contaminated by the disturbance noise.
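As a minimal sketch of this behaviour, the Python function below applies the step-size recursion commonly associated with Kwong and Johnston [10], \(\mu (n+1)=\alpha \mu (n)+\gamma e^{2}(n)\), clipped to a fixed range. The function name `kvss_lms`, the parameter names, and every default value are assumptions chosen for illustration; they are not taken from this paper.

```python
import numpy as np


def kvss_lms(x, d, num_taps, mu_init=0.01, alpha=0.97, gamma=0.01,
             mu_min=1e-3, mu_max=0.05):
    """LMS with an error-energy-driven step-size (illustrative defaults)."""
    x = np.asarray(x, dtype=float)
    d = np.asarray(d, dtype=float)
    w = np.zeros(num_taps)
    mu = mu_init
    errors = np.zeros(len(x))
    steps = np.full(len(x), mu_init)
    for n in range(num_taps - 1, len(x)):
        u = x[n - num_taps + 1:n + 1][::-1]  # regressor, newest sample first
        e = d[n] - w @ u                     # a priori estimation error
        w = w + mu * e * u                   # weight update with the current step
        # A large squared error raises the step; a small one lets it decay.
        # The clip keeps the step inside an assumed safe range.
        mu = float(np.clip(alpha * mu + gamma * e * e, mu_min, mu_max))
        errors[n] = e
        steps[n] = mu
    return w, errors, steps
```

For a white Gaussian input driving a short FIR system, the returned `steps` array would typically stay near or above `mu_init` while the error is large and settle toward `mu_min` once the error energy has decayed. Because the recursion uses the instantaneous \(e^{2}(n)\), additive noise feeds directly into the step-size, which is the sensitivity noted above.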

Further, in the AVSS-LMS algorithm [1], the VSS is controlled using

Here, the error autocorrelation is generally a good measure of the proximity to the optimum, and it rejects the effect of an uncorrelated noise sequence on the step-size update. In the early stages of adaptation, the error autocorrelation estimate is large, resulting in a large step-size. Near the optimum, the error autocorrelation is small, which leads to a small step-size. The step-size is thus adjusted effectively while remaining immune to independent noise disturbance, which allows flexible control of the misadjustment. The AVSS-LMS algorithm [1] shows a substantial convergence rate improvement over the KVSS-LMS algorithm [10] and the FSS-LMS algorithm [30] in a stationary environment, at both low and high SNR values. Under nonstationary conditions, however, the performance of the AVSS-LMS algorithm is comparable to that of the FSS-LMS and KVSS-LMS adaptive algorithms.
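The idea can be sketched in Python using the form usually attributed to Aboulnasr and Mayyas [1]: a smoothed estimate \(p(n)=\beta p(n-1)+(1-\beta )e(n)e(n-1)\) of the lag-one error autocorrelation drives the step-size through \(\mu (n+1)=\alpha \mu (n)+\gamma p^{2}(n)\). The function name `avss_lms`, the parameter names, and the default values are assumptions made for this illustration, not values from this paper.

```python
import numpy as np


def avss_lms(x, d, num_taps, mu_init=0.01, alpha=0.97, gamma=0.01,
             beta=0.99, mu_min=1e-3, mu_max=0.05):
    """LMS whose step-size follows the error autocorrelation (illustrative)."""
    x = np.asarray(x, dtype=float)
    d = np.asarray(d, dtype=float)
    w = np.zeros(num_taps)
    mu = mu_init
    p = 0.0        # running estimate of E[e(n) e(n-1)]
    e_prev = 0.0
    errors = np.zeros(len(x))
    steps = np.full(len(x), mu_init)
    for n in range(num_taps - 1, len(x)):
        u = x[n - num_taps + 1:n + 1][::-1]  # regressor, newest sample first
        e = d[n] - w @ u                     # a priori estimation error
        w = w + mu * e * u                   # weight update with the current step
        # Products of successive errors average toward zero for white noise,
        # so independent noise contributes little to p.
        p = beta * p + (1.0 - beta) * e * e_prev
        mu = float(np.clip(alpha * mu + gamma * p * p, mu_min, mu_max))
        e_prev = e
        errors[n] = e
        steps[n] = mu
    return w, errors, steps
```

The only structural change from an \(e^{2}(n)\)-driven rule is the quantity fed into the step-size recursion. Replacing the squared error by the squared autocorrelation estimate is what gives the scheme its immunity to uncorrelated measurement noise.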

In the SVSS-LMS algorithm [2], by contrast, the VSS is adjusted using the following recursive relation, governed by the control parameters \(\bar{\rho }_w\) and \(\bar{\alpha }_w\).

The above equation can be rewritten in the expanded form as

For \(0\le \bar{\alpha }_w <1\) and a large value of \(\bar{Q}\), Eq. (38) can be approximated as

This algorithm [2] outperforms Mathews' algorithm [13] when both are set to track a random-walk channel under similar conditions.
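A hedged Python sketch of a gradient-adaptive step-size rule of this kind follows. It assumes the simplified recursion generally attributed to Ang and Farhang-Boroujeny [2], in which an auxiliary vector \(\psi (n)=\bar{\alpha }_w \psi (n-1)+e(n-1)x(n-1)\) accumulates past error-weighted regressors and the step-size moves by \(\bar{\rho }_w e(n)x^{T}(n)\psi (n)\). The mapping of `rho` and `alpha_w` onto \(\bar{\rho }_w\) and \(\bar{\alpha }_w\), the function name `svss_lms`, the clipping bounds, and all default values are assumptions for illustration.

```python
import numpy as np


def svss_lms(x, d, num_taps, mu_init=0.01, rho=1e-4, alpha_w=0.9,
             mu_min=1e-3, mu_max=0.05):
    """LMS with a gradient-adaptive step-size (illustrative defaults)."""
    x = np.asarray(x, dtype=float)
    d = np.asarray(d, dtype=float)
    w = np.zeros(num_taps)
    mu = mu_init
    psi = np.zeros(num_taps)     # exponentially weighted sum of e(k) x(k)
    e_prev = 0.0
    u_prev = np.zeros(num_taps)
    errors = np.zeros(len(x))
    steps = np.full(len(x), mu_init)
    for n in range(num_taps - 1, len(x)):
        u = x[n - num_taps + 1:n + 1][::-1]  # regressor, newest sample first
        e = d[n] - w @ u                     # a priori estimation error
        psi = alpha_w * psi + e_prev * u_prev
        # The step grows when the current gradient aligns with past gradients
        # and shrinks when they oppose each other; the clip is an added safeguard.
        mu = float(np.clip(mu + rho * e * (u @ psi), mu_min, mu_max))
        w = w + mu * e * u                   # weight update with the new step
        e_prev, u_prev = e, u
        errors[n] = e
        steps[n] = mu
    return w, errors, steps
```

Under this assumed form, setting `alpha_w=0` keeps only the most recent term, so the step-size increment reduces to `rho * e(n) * e(n-1) * x(n)ᵀx(n-1)`, which is the structure of the gradient-adaptive rule of Mathews and Xie [13].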

Cite this article

Rai, A., Kohli, A.K. Adaptive Polynomial Filtering using Generalized Variable Step-Size Least Mean pth Power (LMP) Algorithm. Circuits Syst Signal Process 33, 3931–3947 (2014). https://doi.org/10.1007/s00034-014-9833-2

DOI: https://doi.org/10.1007/s00034-014-9833-2