Abstract

We suggest several goodness-of-fit (GOF) methods that are appropriate for Type-II right censored data. Our strategy is to transform the original observations from a censored sample into an approximately i.i.d. sample of normal variates and then perform a standard GOF test for normality on the transformed observations. A simulation study covering several well-known parametric distributions as null hypotheses reveals the sampling properties of the methods. We also provide a theoretical analysis of the proposed method.

References

Arcones MA, Wang Y (2006) Some new tests for normality based on U-processes. Stat Probab Lett 76:69–82

Bargal AI, Thomas DR (1983) Smooth goodness of fit tests for the Weibull distribution with singly censored data. Commun Stat Theory Methods 12:1431–1447

Baringhaus L, Danschke R, Henze N (1989) Recent and classical tests for normality. A comparative study. Commun Stat Simul Comput 18:363–379

Barr DR, Davidson T (1973) A Kolmogorov–Smirnov test for censored samples. Technometrics 15:739–757

Brain CW, Shapiro SS (1983) A regression test for exponentiality. Technometrics 25:69–76

Castro-Kuriss C (2011) On a goodness-of-fit test for censored data from a location-scale distribution with applications. Chil J Stat 2:115–136

Castro-Kuriss C, Kelmansky DM, Leiva V, Martínez EJ (2010) On a goodness-of-fit test for normality with unknown parameters and type-II censored data. J Appl Stat 37:1193–1211

Chen G (1991) Empirical processes based on regression residuals: theory and application. Ph.D. thesis, Simon Fraser University

Chen G, Balakrishnan N (1995) A general purpose approximate goodness-of-fit test. J Qual Technol 27:154–161

D’Agostino R, Massaro JM (1992) Goodness-of-fit tests. In: Balakrishnan N (ed) Handbook of the logistic distribution. Marcel Dekker Inc, New York, pp 291–371

D’Agostino R, Stephens M (1986) Goodness-of-fit techniques. Marcel Dekker Inc, New York

Dufour R, Maag UR (1978) Distribution results for modified Kolmogorov–Smirnov statistics for truncated or censored samples. Technometrics 20:29–32

Durbin J (1973) Weak convergence of the sample distribution function when parameters are estimated. Ann Stat 1:279–290

Epps TW (2005) Tests for location-scale families based on the empirical characteristic function. Metrika 62:99–114

Epps TW, Pulley LB (1983) A test for normality based on the empirical characteristic function procedures. Biometrika 70:723–726

Fischer T, Kamps U (2011) On the existence of transformations preserving the structure of order statistics in lower dimensions. J Stat Plan Inference 141:536–548

Glen AG, Foote BL (2009) An inference methodology for life tests with complete samples or type-II right censoring. IEEE Trans Reliab 58:597–603

Grané A (2012) Exact goodness-of-fit tests for censored data. Ann Inst Stat Math 64:1187–1203

Gupta AK (1952) Estimation of the mean and standard deviation of a normal population from a censored sample. Biometrika 39:266–273

Henze N (1990) An approximation to the limit distribution of the Epps–Pulley test statistic for normality. Metrika 37:7–18

Henze N, Wagner T (1997) A new approach to the BHEP tests for multivariate normality. J Multivar Anal 62:1–23

Klar B, Meintanis SG (2012) Specification tests for the response distribution in generalized linear models. Comput Stat 27:251–267

Johnson NL, Kotz S, Balakrishnan N (1994) Continuous univariate distributions, vol 1. Wiley, New York

Lin C-T, Huang Y-L, Balakrishnan N (2008) A new method for goodness-of-fit testing based on type-II censored samples. IEEE Trans Reliab 57:633–642

Loynes RM (1980) The empirical distribution function of residuals from generalised regression. Ann Stat 8:285–298

Meintanis SG (2009) Goodness-of-fit testing by transforming to normality: comparison between classical and characteristic function-based methods. J Stat Comput Simul 79:205–212

Michael JR, Schucany WR (1979) A new approach to testing goodness of fit for censored samples. Technometrics 21:435–441

Mihalko DP, Moore DS (1980) Chi-square tests of fit for type-II censored data. Ann Stat 8:625–644

O’Reilly FJ, Stephens MA (1988) Transforming censored samples for testing fit. Technometrics 30:79–86

Pettitt AN (1976) Cramér-von Mises statistics for testing normality with censored samples. Biometrika 63:475–481

Pettitt AN (1977) Tests for the exponential distribution with censored data using the Cramér-von Mises statistics. Biometrika 64:629–632

Peña EA (1995) Residuals from type II censored samples. In: Balakrishnan N (ed) Recent advances in life-testing and reliability. CRC Press, London, pp 523–543

R Core Team (2012) R: a language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria

Rao JS, Sethuraman J (1975) Weak convergence of empirical distribution functions of random variables subject to perturbations and scale factors. Ann Stat 3:299–313

Tenreiro C (2009) On the choice of the smoothing parameter for the BHEP goodness-of-fit test. Comput Stat Data Anal 53:1038–1053

Thode HC (2002) Testing for normality. Marcel Dekker Inc, New York

Tiku ML (1967) Estimating the mean and standard deviation from a censored normal sample. Biometrika 54:155–165

Tiku ML, Tan WY, Balakrishnan N (1986) Robust inference. Marcel Dekker Inc, New York

van der Vaart AW, Wellner JA (2002) Weak convergence and empirical processes. Springer, New York

Wilk MB, Gnanadesikan R, Huyett MJ (1962) Estimation of parameters of the gamma distribution using order statistics. Biometrika 49:525–545

Additional information

Simos G. Meintanis: On sabbatical leave from the University of Athens.

Appendix

In this appendix we investigate why the Chen–Balakrishnan transformation is eventually valid. First, in Sect. 7.1, we report results on the empirical process underlying goodness-of-fit testing for the normal distribution with estimated parameters. Then, in Sects. 7.2 and 7.3, we study in detail the process produced by the Chen–Balakrishnan transformation and compare it with the process of Sect. 7.1, both theoretically and by simulation. The figures referred to in this appendix can be retrieved from the technical report (TR) at arXiv:1312.3078 [stat.ME].

7.1 The empirical process under normality

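Suppose that \(Z_j, \ j=1,\ldots ,n\), are iid normal with unknown mean and variance. Then most standard goodness-of-fit tests are merely functionals of the empirical process

\[ \hat{\alpha }_n(t) = \frac{1}{\sqrt{n}} \sum _{j=1}^{n} \left[ I\left\{ U_j \le \varPhi \left( \bar{Z} + s_Z \varPhi ^{-1}(t) \right) \right\} - t \right] , \]

where \(\bar{Z}\) and \(s^{2}_{Z}\) are the sample mean and sample variance of \(Z_1,\ldots ,Z_n\), \(U_j=\varPhi (Z_j)\), and \(t \in [0,1]\). Durbin (1973) studied this process and showed that, under regularity conditions,

\[ \hat{\alpha }_n \mathop {\longrightarrow }\limits ^{\mathcal {D}} \alpha , \]

where \(\alpha \) is a centered Gaussian process with covariance function

\[ \mathrm{Cov}\left( \alpha (s), \alpha (t)\right) = \min (s,t) - st - \varphi \left( \varPhi ^{-1}(s)\right) \varphi \left( \varPhi ^{-1}(t)\right) - \frac{1}{2}\, \varPhi ^{-1}(s)\, \varphi \left( \varPhi ^{-1}(s)\right) \varPhi ^{-1}(t)\, \varphi \left( \varPhi ^{-1}(t)\right) , \]

where \(\varPhi ^{-1}\) and \(\varphi \) are the quantile and density functions of the standard normal distribution. (We note that Loynes (1980) extended the analysis from the iid setting to the case of generalized linear models.) Clearly, the process \(\hat{\alpha }_n\) coincides with the process involved in the Chen–Balakrishnan transformation only in the case of testing for normality with estimated parameters.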
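The limiting covariance can be checked numerically. The following is a minimal sketch rather than the authors' code. It assumes the covariance has the standard form for a normal model with estimated mean and variance, \(\min (s,t)-st-\varphi (\varPhi ^{-1}(s))\varphi (\varPhi ^{-1}(t))-\frac{1}{2}\varPhi ^{-1}(s)\varphi (\varPhi ^{-1}(s))\varPhi ^{-1}(t)\varphi (\varPhi ^{-1}(t))\). The function names and the simulation settings (sample size, number of replications, mean 3 and standard deviation 2 of the simulated data) are illustrative choices.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal: cdf, pdf and inv_cdf (quantile)


def durbin_cov(s, t):
    """Assumed limiting covariance of the process at s, t in (0, 1)."""
    qs, qt = STD_NORMAL.inv_cdf(s), STD_NORMAL.inv_cdf(t)
    ds, dt = STD_NORMAL.pdf(qs), STD_NORMAL.pdf(qt)
    return min(s, t) - s * t - ds * dt - 0.5 * qs * ds * qt * dt


def alpha_hat(z, t):
    """Empirical process at t, with mean and variance estimated from z."""
    n = len(z)
    z_bar = math.fsum(z) / n
    s_z = math.sqrt(math.fsum((v - z_bar) ** 2 for v in z) / (n - 1))
    # Z_j <= z_bar + s_z * Phi^{-1}(t) is the same event as
    # U_j <= Phi(z_bar + s_z * Phi^{-1}(t)) with U_j = Phi(Z_j).
    threshold = z_bar + s_z * STD_NORMAL.inv_cdf(t)
    count = sum(1 for v in z if v <= threshold)
    return (count - n * t) / math.sqrt(n)


def simulated_variance(t, n=100, reps=2000, seed=1):
    """Monte Carlo variance of alpha_hat(t) over `reps` normal samples."""
    rng = random.Random(seed)
    values = [alpha_hat([rng.gauss(3.0, 2.0) for _ in range(n)], t)
              for _ in range(reps)]
    m = math.fsum(values) / reps
    return math.fsum((v - m) ** 2 for v in values) / reps
```

At \(t=0.5\) the assumed formula reduces to \(1/4-1/(2\pi )\approx 0.0908\), well below the value \(0.25\) that applies when the parameters are known; `simulated_variance(0.5)` should land close to the former.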

7.2 The empirical process under non-normality

In this section, we consider iid random variables \(X_j\) with DF \(\mathcal{F}_{\vartheta }(x)\) (assumed to be continuous and strictly increasing) and the standardized quantile residuals \(Z_j= \frac{Y_j-\bar{Y}}{s_Y}\) with \(Y_j= \varPhi ^{-1} \left( \mathcal{F}_{\hat{\vartheta }}(X_j) \right) \) (for the term standardized quantile residual, see Klar and Meintanis (2012), Sect. 2.1). We shall study the following empirical process based on the \(Z_j\):

\[ \hat{\beta }_n(t) = \frac{1}{\sqrt{n}} \sum _{j=1}^{n} \left[ I\left\{ Z_j \le \varPhi ^{-1}(t) \right\} - t \right] = \frac{1}{\sqrt{n}} \sum _{j=1}^{n} \left[ I\left\{ U_j \le \mathcal{F}_{\vartheta }\left( \mathcal{F}_{\hat{\vartheta }}^{-1}\left( \varPhi \left( \bar{Y} + s_Y \varPhi ^{-1}(t)\right) \right) \right) \right\} - t \right] , \]

where \(\mathcal{F}_{\vartheta }^{-1}(p)\) denotes the quantile function of \(\mathcal{F}_{\vartheta }(\cdot )\), and \(U_j = \mathcal{F}_{\vartheta }(X_j)\) are iid uniformly distributed on \([0,1]\). This is the empirical process actually produced by the Chen–Balakrishnan transformation. Now define \(c_Y(t)=\varPhi (\bar{Y} + s_Y \varPhi ^{-1}(t))\) and, similarly, \(c_N(t)=\varPhi (\bar{N} + s_N \varPhi ^{-1}(t))\), where \(N_j=\varPhi ^{-1}(U_j)\) are iid standard normal random variates, and \(\bar{N}\) and \(s_N^2\) are the arithmetic mean and sample variance of \(N_1,\ldots ,N_n\).
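As an illustration of how the standardized quantile residuals \(Z_j\) might be computed, here is a minimal sketch for a complete (uncensored) sample. It assumes an exponential model \(\mathcal{F}_{\vartheta }(x)=1-e^{-\vartheta x}\) with \(\hat{\vartheta }\) taken as the maximum likelihood estimate of the rate; the model, the estimator, and the function name are assumptions made for the example and are not prescribed by the text.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()


def standardized_quantile_residuals(x):
    """Z_j = (Y_j - Ybar) / s_Y with Y_j = Phi^{-1}(F_{theta_hat}(X_j)).

    Assumes an exponential model with rate parameter theta (illustrative).
    """
    n = len(x)
    rate_hat = n / math.fsum(x)  # MLE of the exponential rate
    # F_{theta_hat}(x) = 1 - exp(-theta_hat * x), computed via expm1
    y = [STD_NORMAL.inv_cdf(-math.expm1(-rate_hat * v)) for v in x]
    y_bar = math.fsum(y) / n
    s_y = math.sqrt(math.fsum((v - y_bar) ** 2 for v in y) / (n - 1))
    return [(v - y_bar) / s_y for v in y]


if __name__ == "__main__":
    rng = random.Random(0)
    sample = [rng.expovariate(1.0) for _ in range(50)]
    residuals = standardized_quantile_residuals(sample)
    print(min(residuals), max(residuals))
```

By construction the residuals have sample mean zero and sample variance one, and the transformation is monotone, so the ordering of the original observations is preserved. Any standard test for normality can then be applied to the residuals.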

Then we can decompose the above process as (compare Chen 1991, pp. 126–128)

\[ \hat{\beta }_n(t) = \hat{\beta }_{n,1}(t) + \hat{\beta }_{n,2}(t) + \hat{\beta }_{n,3}(t), \tag{7.1} \]

where

\[ \hat{\beta }_{n,1}(t) = \frac{1}{\sqrt{n}} \sum _{j=1}^{n} \left[ I\left\{ U_j \le \mathcal{F}_{\vartheta }\left( \mathcal{F}_{\hat{\vartheta }}^{-1}\left( c_Y(t)\right) \right) \right\} - \mathcal{F}_{\vartheta }\left( \mathcal{F}_{\hat{\vartheta }}^{-1}\left( c_Y(t)\right) \right) \right] - \frac{1}{\sqrt{n}} \sum _{j=1}^{n} \left[ I\left\{ U_j \le c_N(t) \right\} - c_N(t) \right] \]

and

\[ \hat{\beta }_{n,2}(t) = \frac{1}{\sqrt{n}} \sum _{j=1}^{n} \left[ I\left\{ U_j \le c_N(t) \right\} - t \right] . \]

The first part \(\hat{\beta }_{n,1}(t)\) in decomposition (7.1) is the difference of an empirical process and a random perturbation thereof, and should be \(o_P(1)\) under appropriate regularity conditions (see Chen 1991, p. 128; Loynes 1980, Lemma 1; and Rao and Sethuraman 1975). To check this claim, we simulated a random sample of size \(n\) from a unit mean exponential distribution and computed \(\hat{\beta }_{n,1}(t), \ t \in [0,1]\), on an equidistant grid with spacing 0.005. This was repeated \(B=10000\) times. We approximated the mean function \(E[\hat{\beta }_{n,1}(t)]\) and the standard deviation \(\sqrt{Var[\hat{\beta }_{n,1}(t)]}\) by the arithmetic mean and empirical standard deviation over the \(B\) replications; Figure 4 in the TR shows the result for sample sizes \(n=10,40,160\) and \(640\). Clearly the mean function is nearly zero and decreases with increasing \(n\), while the standard deviation is small compared to the standard deviation of \(\hat{\beta }_n\) or \(\hat{\beta }_{n,2}\) (see below). The corresponding variance seems to converge to zero, but rather slowly, at a rate of approximately \(1/\sqrt{n}\).

The second part \(\hat{\beta }_{n,2}\) corresponds to the normal empirical process \(\hat{\alpha }_{n}\) in Sect. 7.1 of the appendix. Figure 5 in the TR shows the empirical mean function and standard deviation of \(\hat{\beta }_{n,2}\) for an underlying exponential distribution computed in the same way as for \(\hat{\beta }_{n,1}\) above. The mean function, which takes on much larger values than that of \(\hat{\beta }_{n,1}\), again converges to zero, whereas the variance function is nearly constant for \(n\ge 40\).

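For the third part we have

\[ \hat{\beta }_{n,3}(t) = \sqrt{n} \left[ \mathcal{F}_{\vartheta }\left( \mathcal{F}_{\hat{\vartheta }}^{-1}\left( c_Y(t)\right) \right) - c_N(t) \right] . \]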

In general, this process does not converge to zero in probability. However, the contribution of \(\hat{\beta }_{n,3}\) seems to be negligibly small in comparison to \(\hat{\beta }_{n,2}\) in many situations. Figure 6 in the TR shows the empirical mean function and standard deviation of \(\hat{\beta }_{n,3}\) for the exponential distribution, computed as above. The mean function is very small and goes to zero. The standard deviation is small compared to the standard deviation of \(\hat{\beta }_{n,2}\), and it converges, but not to zero. We stress that the crucial point for the behavior of \(\hat{\beta }_{n,3}\) is the coupling between the \(Y_j\)’s and the normal variates \(N_j\) which are both based on the original \(X_j\), the first computed by using \(\hat{\vartheta }\) while the second by using \(\vartheta \). In fact if we generate iid standard normal random variables \(\tilde{N}_j\) independent of the \(X_j\)’s and use them instead of the \(N_j\)’s, the mean function is small, but does not seem to converge to zero, and the variance is much larger, even larger than that of \(\hat{\beta }_{n,2}\).

From the above it follows that the values of the process \(\hat{\beta }_{n}\) will be eventually dominated by \(\hat{\beta }_{n,2}\), at least for large \(n\). This is documented in Figure 7 of the TR where the mean and standard deviation of all four processes are plotted for \(n=40\). Note that the standard deviations of \(\hat{\beta }_{n}\) (in red) and \(\hat{\beta }_{n,2}\) (in green) are nearly identical, and therefore, visually indistinguishable.
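The three-term decomposition can be made concrete with a small numerical sketch. The code below is an illustration under stated assumptions, not the authors' implementation: it takes the unit mean exponential model used in the simulations, estimates the rate by maximum likelihood, and assumes the components are \(\hat{\beta }_{n,2}(t)=n^{-1/2}\sum _j [I\{U_j\le c_N(t)\}-t]\), \(\hat{\beta }_{n,3}(t)=\sqrt{n}\,[a(t)-c_N(t)]\) with \(a(t)=\mathcal{F}_{\vartheta }(\mathcal{F}_{\hat{\vartheta }}^{-1}(c_Y(t)))\), and \(\hat{\beta }_{n,1}\) the difference of the two centered empirical processes evaluated at \(a(t)\) and \(c_N(t)\). All function and variable names are hypothetical.

```python
import math
import random
from statistics import NormalDist

STD_NORMAL = NormalDist()
THETA0 = 1.0  # true exponential rate (unit mean), as in the simulations


def mean_sd(values):
    """Arithmetic mean and sample standard deviation (divisor n - 1)."""
    n = len(values)
    m = math.fsum(values) / n
    s = math.sqrt(math.fsum((v - m) ** 2 for v in values) / (n - 1))
    return m, s


def decompose(x, t):
    """Return (beta_n, beta_n1, beta_n2, beta_n3) at t for exponential x."""
    n = len(x)
    root_n = math.sqrt(n)
    theta_hat = n / math.fsum(x)  # MLE of the rate (assumed estimator)
    q = STD_NORMAL.inv_cdf(t)

    u = [-math.expm1(-THETA0 * v) for v in x]      # U_j = F_theta0(X_j)
    n_scores = [STD_NORMAL.inv_cdf(p) for p in u]  # N_j = Phi^{-1}(U_j)
    y_scores = [STD_NORMAL.inv_cdf(-math.expm1(-theta_hat * v)) for v in x]

    n_bar, s_n = mean_sd(n_scores)
    y_bar, s_y = mean_sd(y_scores)
    c_n = STD_NORMAL.cdf(n_bar + s_n * q)
    c_y = STD_NORMAL.cdf(y_bar + s_y * q)
    # a(t) = F_theta0(F_theta_hat^{-1}(c_Y(t))) in closed form
    a = 1.0 - (1.0 - c_y) ** (THETA0 / theta_hat)

    count_a = sum(1 for p in u if p <= a)
    count_c = sum(1 for p in u if p <= c_n)

    beta_n = (count_a - n * t) / root_n
    beta_1 = (count_a - n * a) / root_n - (count_c - n * c_n) / root_n
    beta_2 = (count_c - n * t) / root_n
    beta_3 = root_n * (a - c_n)
    return beta_n, beta_1, beta_2, beta_3
```

Calling `decompose` on \(B\) independently generated samples and passing the collected values of each component to `mean_sd` gives pointwise Monte Carlo estimates of the mean and standard deviation at a given \(t\); repeating this over a grid of \(t\) values mimics the simulation scheme described above.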

7.3 Further analysis of the process \(\varvec{\hat{\beta }}_{n,3}\)

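To keep things simple, we assume in the following that \(\vartheta \in \varTheta \subset \mathbb {R}\). Let \(\vartheta _0\) denote the true parameter value, and define

\[ N_j(\vartheta ) = \varPhi ^{-1}\left( \mathcal{F}_{\vartheta }(X_j)\right) , \quad j=1,\ldots ,n, \]

with \(\bar{N}(\vartheta )\) and \(s_N^2(\vartheta )\) denoting the arithmetic mean and sample variance of \(N_1(\vartheta ),\ldots ,N_n(\vartheta )\).

Then, \(N_j(\vartheta _0)=N_j, \bar{N}(\vartheta _0)=\bar{N}, s_N^2(\vartheta _0)=s_N^2\), and \(N_j(\hat{\vartheta })=Y_j, \bar{N}(\hat{\vartheta })=\bar{Y}, s_N^2(\hat{\vartheta })=s_Y^2\). Putting

\[ h_t(\vartheta ) = \varPhi \left( \bar{N}(\vartheta ) + s_N(\vartheta )\, \varPhi ^{-1}(t) \right) , \]

we obtain \(h_t(\vartheta _0)=c_N(t)\) and \(h_t(\hat{\vartheta })=c_Y(t)\). Thus, we can write

\[ \hat{\beta }_{n,3}(t) = \sqrt{n} \left( g_t(\hat{\vartheta }) - g_t(\vartheta _0) \right) , \tag{7.2} \]

where

\[ g_t(\vartheta ) = \mathcal{F}_{\vartheta _0}\left( \mathcal{F}_{\vartheta }^{-1}\left( h_t(\vartheta )\right) \right) . \]

Assume now that \(\sqrt{n}(\hat{\vartheta }-\vartheta _0)=O_P(1)\). Then, by using the expansion

\[ g_t(\hat{\vartheta }) = g_t(\vartheta _0) + (\hat{\vartheta }-\vartheta _0)\, g_t^{\prime }(\vartheta _0) + \frac{1}{2} (\hat{\vartheta }-\vartheta _0)^2\, g_t^{\prime \prime }(\vartheta ^*) \]

with \(\vartheta ^*\) between \(\hat{\vartheta }\) and \(\vartheta _0\), and by omitting the quadratic term, we see that \(\hat{\beta }_{n,3}(t)\) can be approximated by

\[ \mathring{\beta }_{n,3}(t) = \sqrt{n}\, (\hat{\vartheta }-\vartheta _0)\, g_t^{\prime }(\vartheta _0). \tag{7.3} \]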

Of course, the validity of such a Taylor expansion is not enough to justify the uniform convergence \(\sup _t |\hat{\beta }_{n,3}(t) - \mathring{\beta }_{n,3}(t)|=o_P(1)\). A sufficient condition would be Fréchet differentiability of \(g_t(\cdot )\) (see, e.g. van der Vaart and Wellner (2002), p. 373). However, since we do not intend to give rigorous theory here, this issue is not discussed in any detail. Further analysis of \(g_t^{\prime }\left( \vartheta _0\right) \) leads to the following result, the proof of which is omitted.

Lemma 7.1

Let \(\bar{W}\) and \(s_W^2\) denote the arithmetic mean and sample variance of the random variables \(W_{j0}:=W_j(\vartheta _0)\), with \(W_j(\vartheta ):=d N_j(\vartheta )/d\vartheta \), while \(r\) denotes the sample correlation coefficient of \(W_{10},\ldots ,W_{n0}\) and \(N_1,\ldots ,N_n\). Then,

where \(\varphi (\cdot )\) denotes the density of the standard normal distribution.
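One ingredient of such a result can be sketched directly; this is an illustrative chain-rule computation, not a restatement of Lemma 7.1. It assumes that \(h_t(\vartheta )=\varPhi (\bar{N}(\vartheta )+s_N(\vartheta )\varPhi ^{-1}(t))\), which is consistent with \(h_t(\vartheta _0)=c_N(t)\) and \(h_t(\hat{\vartheta })=c_Y(t)\), that \(N_j(\vartheta )\) is differentiable in \(\vartheta \), and that the sample variances and the sample covariance use the same divisor \(n-1\):

\[ \begin{aligned} \frac{d}{d\vartheta }\bar{N}(\vartheta )\Big |_{\vartheta _0}&= \bar{W}, \\ \frac{d}{d\vartheta }s_N^2(\vartheta )\Big |_{\vartheta _0}&= \frac{2}{n-1}\sum _{j=1}^{n}(N_j-\bar{N})(W_{j0}-\bar{W}) = 2\, r\, s_N\, s_W, \quad \text {so}\quad \frac{d}{d\vartheta }s_N(\vartheta )\Big |_{\vartheta _0} = r\, s_W, \\ h_t^{\prime }(\vartheta _0)&= \varphi \left( \bar{N}+s_N\varPhi ^{-1}(t)\right) \left( \bar{W}+r\, s_W\, \varPhi ^{-1}(t)\right) . \end{aligned} \]

This shows why the quantities \(\bar{W}\), \(s_W\) and \(r\) are the natural summaries of the \(W_{j0}\): they are exactly what the derivative of the location and scale of the \(N_j(\vartheta )\) depends on.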

Since \(N_1,\ldots ,N_n\) are iid standard normal variates, \(\bar{N} \rightarrow 0\) and \(s_N\rightarrow 1\) almost surely. Furthermore, \(\bar{W} \rightarrow \mu _W, s_W \rightarrow \sigma _W\), and \(r \rightarrow \rho \) a.s., where \((\mu _W,\sigma _W^2)\) are the mean and variance of \(W_{10}\), while \(\rho \) denotes the correlation coefficient of \(W_{10}\) and \(N_1\). Hence, the following approximation holds for the process in (7.3).

Lemma 7.2

The process \(\mathring{\beta }_{n,3}(t)\) can be approximated by the process

where

Figure 8 in the TR shows the simulated mean and standard deviation of \(\hat{\beta }_{n,3}\) in (7.2), \(\mathring{\beta }_{n,3}\) in (7.3), and \(\tilde{\beta }_{n,3}\) in (7.4) for sample size \(n=40\) and \(n=640\), again for the exponential distribution. The mean functions take on very small values; the standard deviations are very similar in all cases.

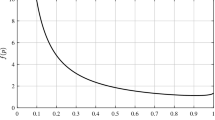

Figure 9 of the TR shows the function \(\tilde{h}_t^{\prime }(\vartheta _0)\), the part inside the brackets in \(\tilde{g}_t^{\prime }(\vartheta _0)\), and \(\tilde{g}_t^{\prime }(\vartheta _0)\) itself. The values of \(\tilde{g}_t^{\prime }(\vartheta _0)\) are close to zero on the whole interval. For this reason, \(\hat{\beta }_{n,3}\) is negligible in comparison to \(\hat{\beta }_{n,2}\) for the exponential case at hand.

We also performed Monte Carlo experiments for other gamma distributions with shape parameter not equal to one. These experiments led to qualitatively similar results; although not reported here, they are available from the authors upon request. A reasonable overall conclusion seems to be that, under different sampling scenarios, the processes \(\hat{\beta }_{n,1}\) and \(\hat{\beta }_{n,3}\) in decomposition (7.1) are asymptotically negligible, and hence the behavior of the process \(\hat{\beta }_{n}\) of the Chen–Balakrishnan transformation is dominated by the values of the process \(\hat{\beta }_{n,2}\). The latter process, however, coincides with the process \(\hat{\alpha }_n(t)\) of Sect. 7.1, which is involved in goodness-of-fit testing for normality with estimated parameters, and this fact justifies the validity of the Chen–Balakrishnan transformation.

Cite this article

Goldmann, C., Klar, B. & Meintanis, S.G. Data transformations and goodness-of-fit tests for type-II right censored samples. Metrika 78, 59–83 (2015). https://doi.org/10.1007/s00184-014-0490-z

DOI: https://doi.org/10.1007/s00184-014-0490-z