Abstract

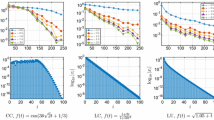

We consider the sparse polynomial approximation of a multivariate function on a tensor product domain from samples of both the function and its gradient. When only function samples are prescribed, weighted \(\ell ^1\) minimization has recently been shown to be an effective procedure for computing such approximations. We extend this work to the gradient-augmented case. Our main results show that for the same asymptotic sample complexity, gradient-augmented measurements achieve an approximation error bound in a stronger Sobolev norm, as opposed to the \(L^2\)-norm in the unaugmented case. For Chebyshev and Legendre polynomial approximations, this sample complexity estimate is algebraic in the sparsity \(s\) and at most logarithmic in the dimension \(d\), thus mitigating the curse of dimensionality to a substantial extent. We also present several experiments numerically illustrating the benefits of gradient information over an equivalent number of function samples only.
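
To make the gradient-augmented setting concrete, the following minimal sketch (not the authors' code) shows how each sample point \(y_i\) contributes \(d+1\) rows to the linear system: one row of basis values \(\Psi_\nu(y_i)\) and one row of partial derivatives \(\partial_{y_k}\Psi_\nu(y_i)\) for each \(k = 1,\dots,d\). It assumes tensor-product Legendre polynomials orthonormal with respect to the uniform probability measure on \([-1,1]^d\), uniformly random sample points, and a total-degree index set; the helper names `total_degree_set`, `legendre_1d` and `augmented_matrix` are hypothetical, and the paper's choice of index set, sampling measure, and weights may differ. The weighted \(\ell^1\) minimization step is not shown; the resulting matrix would be passed to a sparse solver.

```python
import itertools

import numpy as np
from numpy.polynomial import Legendre


def total_degree_set(d, n):
    """Hypothetical index set: all multi-indices nu in N_0^d with |nu|_1 <= n."""
    return [nu for nu in itertools.product(range(n + 1), repeat=d) if sum(nu) <= n]


def legendre_1d(k, x):
    """Degree-k Legendre polynomial, orthonormal for the uniform probability
    measure on [-1, 1], and its derivative, both evaluated at the points x."""
    p = Legendre.basis(k)
    scale = np.sqrt(2 * k + 1)
    return scale * p(x), scale * p.deriv()(x)


def augmented_matrix(points, index_set):
    """Gradient-augmented measurement matrix of shape (m * (d + 1), N).

    Rows 0..m-1 hold Psi_nu(y_i); the k-th following block of m rows
    (k = 1..d) holds the partial derivative d/dy_k Psi_nu(y_i).
    """
    m, d = points.shape
    A = np.zeros((m * (d + 1), len(index_set)))
    for j, nu in enumerate(index_set):
        # Values and derivatives of the d one-dimensional factors, shape (m, d).
        vals = np.empty((m, d))
        ders = np.empty((m, d))
        for k in range(d):
            vals[:, k], ders[:, k] = legendre_1d(nu[k], points[:, k])
        A[:m, j] = np.prod(vals, axis=1)
        for k in range(d):
            # Product rule: only the k-th factor of the tensor product is differentiated.
            factors = vals.copy()
            factors[:, k] = ders[:, k]
            A[(k + 1) * m:(k + 2) * m, j] = np.prod(factors, axis=1)
    return A


# Example: d = 3, total degree n = 4, m = 10 uniformly random sample points.
rng = np.random.default_rng(0)
index_set = total_degree_set(3, 4)
Y = rng.uniform(-1.0, 1.0, size=(10, 3))
A = augmented_matrix(Y, index_set)
print(A.shape)  # (40, 35): 10 * (3 + 1) equations, 35 unknown coefficients
```

Since the function rows and the derivative rows share the same coefficient vector, \(m\) sample points yield \(m(d+1)\) equations in the same \(N\) unknowns.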

Notes

This is not to be confused with expansions in Hermite polynomials, which we do not address in this paper. See [31] for some work in this direction.

As we discuss in Sect. 6, we use a slightly different method of proof to remove the factor \(\lambda \) in the error bound, at the expense of a slightly increased log factor.

References

Adcock, B.: Infinite-dimensional \(\ell ^1\) minimization and function approximation from pointwise data. Constr. Approx. 45(3), 345–390 (2017)

Adcock, B.: Infinite-dimensional compressed sensing and function interpolation. Found. Comput. Math. 18(3), 661–701 (2018)

Adcock, B., Bao, A., Brugiapaglia, S.: Correcting for unknown errors in sparse high-dimensional function approximation (2017). arXiv:1711.07622

Adcock, B., Brugiapaglia, S.: Robustness to unknown error in sparse regularization. IEEE Trans. Inform. Theory 64(10), 6638–6661 (2018)

Adcock, B., Brugiapaglia, S., Webster, C.G.: Compressed sensing approaches for polynomial approximation of high-dimensional functions. In: Boche, H., Caire, G., Calderbank, R., März, M., Kutyniok, G., Mathar, R. (eds.) Compressed Sensing and Its Applications, pp. 93–124. Birkhäuser, Berlin (2017)

Alekseev, A.K., Navon, I.M., Zelentsov, M.E.: The estimation of functional uncertainty using polynomial chaos and adjoint equations. Int. J. Numer. Methods Fluids 67, 328–341 (2011)

Bigot, J., Boyer, C., Weiss, P.: An analysis of block sampling strategies in compressed sensing. IEEE Trans. Inform. Theory 62(4), 2125–2139 (2016)

Boyer, C., Bigot, J., Weiss, P.: Compressed sensing with structured sparsity and structured acquisition. Appl. Comput. Harmon. Anal. 46(2), 312–350 (2019)

Candès, E.J., Wakin, M.B.: An introduction to compressive sampling. IEEE Signal Process. Mag. 25(2), 21–30 (2008)

Chkifa, A., Cohen, A., Migliorati, G., Tempone, R.: Discrete least squares polynomial approximation with random evaluations: application to parametric and stochastic elliptic PDEs. ESAIM Math. Model. Numer. Anal. 49(3), 815–837 (2015)

Chkifa, A., Cohen, A., Schwab, C.: High-dimensional adaptive sparse polynomial interpolation and applications to parametric PDEs. Found. Comput. Math. 14, 601–633 (2014)

Chkifa, A., Cohen, A., Schwab, C.: Breaking the curse of dimensionality in sparse polynomial interpolation and applications to parametric PDEs. J. Math. Pures Appl. 103, 400–428 (2015)

Chkifa, A., Dexter, N., Tran, H., Webster, C.G.: Polynomial approximation via compressed sensing of high-dimensional functions on lower sets. Math. Comput. 87(311), 1415–1450 (2018)

Chun, I.-Y., Adcock, B.: Compressed sensing and parallel acquisition. IEEE Trans. Inform. Theory 63(8), 4860–4882 (2017)

Cohen, A., Davenport, M.A., Leviatan, D.: On the stability and accuracy of least squares approximations. Found. Comput. Math. 13, 819–834 (2013)

Cohen, A., Migliorati, G.: Optimal weighted least-squares methods. SMAI J. Comput. Math. 3, 181–203 (2017)

Cohen, A., Migliorati, G.: Multivariate approximation in downward closed polynomial spaces. In: Dick, J., Kuo, F.Y., Woźniakowski, H. (eds.) Contemporary Computational Mathematics: A Celebration of the 80th Birthday of Ian Sloan, pp. 233–282. Springer, Berlin (2018)

Constantine, P.G.: Active Subspaces: Emerging Ideas for Dimension Reduction in Parameter Studies. SIAM, Philadelphia (2015)

Foucart, S., Rauhut, H.: A Mathematical Introduction to Compressive Sensing. Birkhäuser, Berlin (2013)

Gross, D.: Recovering low-rank matrices from few coefficients in any basis. IEEE Trans. Inform. Theory 57(3), 1548–1566 (2011)

Guo, L., Narayan, A., Xiu, D., Zhou, T.: A gradient enhanced \(L^1\) minimization for sparse approximation of polynomial chaos expansions. J. Comput. Phys. 367, 49–64 (2018)

Hadigol, M., Doostan, A.: Least squares polynomial chaos expansion: a review of sampling strategies. Comput. Methods Appl. Mech. Eng. 332, 382–407 (2018)

Hampton, J., Doostan, A.: Compressive sampling of polynomial chaos expansions: convergence analysis and sampling strategies. J. Comput. Phys. 280, 363–386 (2015)

Komkov, V., Choi, K.K., Haug, E.J.: Design Sensitivity Analysis of Structural Systems, vol. 177. Academic Press, Cambridge (1986)

Li, Y., Anitescu, M., Roderick, O., Hickernell, F.: Orthogonal bases for polynomial regression with derivative information in uncertainty quantification. Int. J. Uncertain. Quantif. 1(4), 297–320 (2011)

Lockwood, B., Mavriplis, D.: Gradient-based methods for uncertainty quantification in hypersonic flows. Comput. Fluids 85, 27–38 (2013)

Migliorati, G.: Multivariate Markov-type and Nikolskii-type inequalities for polynomials associated with downward closed multi-index sets. J. Approx. Theory 189, 137–159 (2015)

Migliorati, G., Nobile, F., von Schwerin, E., Tempone, R.: Approximation of quantities of interest in stochastic PDEs by the random discrete \(L^2\) projection on polynomial spaces. SIAM J. Sci. Comput. 35(3), A1440–A1460 (2013)

Migliorati, G., Nobile, F., von Schwerin, E., Tempone, R.: Analysis of the discrete \(L^2\) projection on polynomial spaces with random evaluations. Found. Comput. Math. 14, 419–456 (2014)

Peng, J., Hampton, J., Doostan, A.: A weighted \(\ell _1\)-minimization approach for sparse polynomial chaos expansions. J. Comput. Phys. 267, 92–111 (2014)

Peng, J., Hampton, J., Doostan, A.: On polynomial chaos expansion via gradient-enhanced \(\ell _1\)-minimization. J. Comput. Phys. 310, 440–458 (2016)

Rauhut, H., Ward, R.: Sparse Legendre expansions via \(\ell _1\)-minimization. J. Approx. Theory 164(5), 517–533 (2012)

Rauhut, H., Ward, R.: Interpolation via weighted \(\ell _1\) minimization. Appl. Comput. Harmon. Anal. 40(2), 321–351 (2016)

Seshadri, P., Narayan, A., Mahadevan, S.: Effectively subsampled quadratures for least squares polynomial approximations. SIAM/ASA J. Uncertain. Quantif. 5, 1003–1023 (2017)

Szegö, G.: Orthogonal Polynomials. American Mathematical Society, Providence (1975)

Tang, G.: Methods for high dimensional uncertainty quantification: regularization, sensitivity analysis, and derivative enhancement. PhD thesis, Stanford University (2013)

van den Berg, E., Friedlander, M.P.: SPGL1: A solver for large-scale sparse reconstruction (June 2007). http://www.cs.ubc.ca/~mpf/spgl1/. Accessed Jan 2016

van den Berg, E., Friedlander, M.P.: Probing the Pareto frontier for basis pursuit solutions. SIAM J. Sci. Comput. 31(2), 890–912 (2008)

Xu, Z., Zhou, T.: A gradient enhanced \(L^1\) recovery for sparse Fourier expansions (Preprint) (2017)

Yan, L., Guo, L., Xiu, D.: Stochastic collocation algorithms using \(\ell _1\)-minimization. Int. J. Uncertain. Quantif. 2(3), 279–293 (2012)

Acknowledgements

This work is supported in part by the NSERC Grant 611675 and an Alfred P. Sloan Research Fellowship. Yi Sui also acknowledges support from an NSERC PGSD scholarship.

Additional information

Communicated by Karlheinz Groechenig.

About this article

Cite this article

Adcock, B., Sui, Y. Compressive Hermite Interpolation: Sparse, High-Dimensional Approximation from Gradient-Augmented Measurements. Constr Approx 50, 167–207 (2019). https://doi.org/10.1007/s00365-019-09467-0

DOI: https://doi.org/10.1007/s00365-019-09467-0