Abstract

We study the homology of simplicial complexes built via deterministic rules from a random set of vertices. In particular, we show that, depending on the randomness that generates the vertices, the homology of these complexes can either become trivial as the number \(n\) of vertices grows, or can contain more and more complex structures. The different behaviours are consequences of different underlying distributions for the generation of vertices, and we consider three illustrative examples, when the vertices are sampled from Gaussian, exponential, and power-law distributions in \({\mathbb {R}}^d\). We also discuss consequences of our results for manifold learning with noisy data, describing the topological phenomena that arise in this scenario as “crackle”, in analogy to audio crackle in temporal signal analysis.

1 Introduction

This paper treats the homology of simplicial complexes built via deterministic rules from a random set of vertices. In particular, it shows that, depending on the randomness that generates the vertices, the homology of these complexes can either become trivial as the sample size grows, or can contain more and more complex structures.

The motivation for these results comes from applications of topological tools for pattern analysis, object identification, and especially for the analysis of data sets. Typically, one starts with a collection of points and forms some simplicial complexes associated to these, and then takes their homology. For example, the \(0\)-dimensional homology of such complexes can be interpreted as a version of clustering. The basic philosophy behind this attempt is that topology has an essentially qualitative nature and should therefore be robust with respect to small perturbations. Some recent references are [2, 3, 9, 15, 19] with two reviews, from different aspects, in [1] and [12]. Many of these papers find their raison d’être in essentially statistical problems, in which data generate the structures.

An important example occurs in the following manifold learning problem. Let \(\mathcal {M}\) be an unknown manifold embedded in a Euclidean space, and suppose that we are given a set of independent and identically distributed \((\mathrm {i.i.d.})\) random samples \(\mathcal {X}_n = \big \{X_1,\ldots ,X_n\big \}\) from the manifold. In order to recover the homology of \(\mathcal {M}\), we consider the homology of

$$\begin{aligned} U = \bigcup _{k=1}^{n} B_\varepsilon (X_k), \end{aligned}\tag{1.1}$$

where \(B_\varepsilon (X)\) is a closed ball of radius \(\varepsilon \) about the point \(X\). The belief, or hope, is that for large enough \(n\) the homology of \(U\) will be equivalent to that of \(\mathcal {M}\). A confounding issue arises when the sample points do not necessarily lie on the manifold, but rather are perturbed from it by a random amount. When this happens, it will follow from our results that the precise distribution behind the randomness plays a qualitatively important role. It is known that if the perturbations come from a bounded or strongly concentrated distribution, then they do not lead to much spurious homology, and the above line of attack, appropriately applied, works. For example, it was shown in [17] that for Gaussian noise it is possible to clean the data and recover the underlying topology of \(\mathcal {M}\) in a way that is essentially independent of the ambient dimension. Both [16, 17] contain results of the form that, given a nice enough \(\mathcal {M}\), and any \(\delta >0\), there are explicit conditions on \(n\) and \(\varepsilon \) such that the homology of \(U\) is equal to the homology of \(\mathcal {M}\) with a probability of at least \((1-\delta )\). However, for other distributions no such results exist, nor, in view of the results of this paper, are they to be expected.

Figure 1 provides an illustrative example of what happens when sampling points from an annulus and perturbing them with additional noise before reconstructing the annulus as in (1.1). In particular, it shows that if the additional noise is in some sense large then sample points can appear basically anywhere, introducing extraneous homology elements.

Fig. 1 (a) The original space \(\mathcal {M}\) (an annulus) that we wish to recover from random samples. (b) With the appropriate choice of radius, we can easily recover the homology of the original space from random samples from \(\mathcal {M}\). (c) In the presence of bounded noise, homology recovery is undamaged. (d) In the presence of unbounded noise, many extraneous homology elements appear, and significantly interfere with homology recovery.

In order to be able, eventually, to extend the work in [17] beyond Gaussian noise, and make more concrete statements about the probabilistic features of the homology this extension generates, it is necessary to first focus on the behaviour of samples generated by pure noise, with no underlying manifold. In this case, thinking of the above setup, the manifold \(\mathcal {M}\) is simply the point at the origin, and the homology that we shall be trying to recapture is trivial. Nevertheless, we shall see that differing noise models can make this task extremely delicate, regardless of sample size.

1.1 Summary of Results

To start being more concrete, let

be a set of \(n\,\mathrm {i.i.d.}\) random samples in \({\mathbb {R}}^d\), from a common density function \(f\). Recall that the abstract simplicial complex \(\check{C}(\mathcal {X},\varepsilon )\) constructed according to the following rules is called the Čech complex associated to \(\mathcal {X}\) and \(\varepsilon \):

(1) The \(0\)-simplices of \(\check{C}(\mathcal {X},\varepsilon )\) are the points in \(\mathcal {X}\),

(2) An \(n\)-simplex \(\sigma =[x_{i_0},\ldots ,x_{i_n}]\) is in \(\check{C}(\mathcal {X},\varepsilon )\) if \(\bigcap _{k=0}^{n} B_\varepsilon (x_{i_k}) \ne \emptyset \).

An important result, known as the “nerve theorem”, links Čech complexes and the neighborhood set \(U\) of (1.1), establishing that they are homotopy equivalent (cf. [7]). In particular, they have the same Betti numbers, measures of homology that we shall concentrate on in what follows.
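As a minimal sketch of the construction (not code from the paper), the zeroth Betti number of \(\check{C}(\mathcal {X},\varepsilon )\) can be computed from the 1-skeleton alone: two closed balls of radius \(\varepsilon \) intersect exactly when their centres are at distance at most \(2\varepsilon \), and \(\beta _0\) is the number of connected components. The function name `betti0_cech` and the use of NumPy are assumptions made for illustration.

```python
import numpy as np


def betti0_cech(points, eps):
    """Number of connected components of the Cech complex C(points, eps).

    Only the 1-skeleton matters for beta_0: vertices i and j are joined
    when the closed eps-balls around them intersect, i.e. when
    ||x_i - x_j|| <= 2 * eps.
    """
    pts = np.asarray(points, dtype=float)
    n = len(pts)
    parent = list(range(n))

    def find(i):
        # Union-find lookup with path halving.
        while parent[i] != i:
            parent[i] = parent[parent[i]]
            i = parent[i]
        return i

    for i in range(n):
        # Distances from point i to all later points.
        dist = np.linalg.norm(pts[i + 1:] - pts[i], axis=1)
        for offset in np.nonzero(dist <= 2 * eps)[0]:
            ri, rj = find(i), find(i + 1 + int(offset))
            if ri != rj:
                parent[ri] = rj

    return len({find(i) for i in range(n)})
```

For three collinear points at positions 0, 1 and 5, the count drops from three components to one as \(\varepsilon \) grows, which is the clustering interpretation of \(0\)-dimensional homology mentioned in the Introduction. Higher Betti numbers need the full complex and are not covered by this sketch.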

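If the sample distribution has a compact support \(S\), then it is easy to show that, for a fixed \(\varepsilon \) and large enough \(n\),

$$\begin{aligned} \check{C}(\mathcal {X},\varepsilon ) \simeq \mathrm {Tube}(S,\varepsilon ) \triangleq \big \{ x\in {\mathbb {R}}^d : \min _{y\in S}\Vert x-y\Vert \le \varepsilon \big \}, \end{aligned}$$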

where \(\simeq \) denotes homotopy equivalence and \(\Vert \cdot \Vert \) is the standard \(L^2\) norm in \({\mathbb {R}}^d\). Thus, there is not much to study in this case. However, when the support of the distribution is unbounded, interesting phenomena occur.

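To study these phenomena, we shall consider three representative examples of probability densities. These are the power-law, exponential, and standard Gaussian distributions, whose density functions are given, respectively, by

$$\begin{aligned} f_{\mathrm {p}}(x) \triangleq \frac{c_{\mathrm {p}}}{1+\Vert x\Vert ^{\alpha }}, \end{aligned}\tag{1.2}$$

$$\begin{aligned} f_{\mathrm {e}}(x) \triangleq c_{\mathrm {e}}\,\mathrm{e}^{-\Vert x\Vert }, \end{aligned}\tag{1.3}$$

$$\begin{aligned} f_{\mathrm {g}}(x) \triangleq c_{\mathrm {g}}\,\mathrm{e}^{-\Vert x\Vert ^2/2}, \end{aligned}\tag{1.4}$$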

where \(\alpha > d\) and \(c_{\mathrm {p}},c_{\mathrm {e}},c_{\mathrm {g}}\) are appropriate normalization constants that will not be of concern to us.
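For experimentation, samples from the three noise models can be drawn as in the following sketch. It assumes the densities are proportional to \(1/(1+\Vert x\Vert ^\alpha )\), \(\mathrm{e}^{-\Vert x\Vert }\) and \(\mathrm{e}^{-\Vert x\Vert ^2/2}\) respectively; the function name `sample_spherical` and its arguments are hypothetical. Each point is a uniform direction times a radius: in the exponential case the radius is Gamma\((d,1)\), and in the power-law case \(r^\alpha \) follows a beta-prime law, simulated here as a ratio of two independent Gamma variables.

```python
import numpy as np


def sample_spherical(n, d, kind, alpha=None, rng=None):
    """Draw n i.i.d. points in R^d from a spherically symmetric density.

    kind = "gaussian":    density proportional to exp(-||x||^2 / 2)
    kind = "exponential": density proportional to exp(-||x||)
    kind = "power":       density proportional to 1 / (1 + ||x||^alpha),
                          which requires alpha > d
    """
    rng = np.random.default_rng() if rng is None else rng
    if kind == "gaussian":
        return rng.standard_normal((n, d))

    # Uniform directions on the unit sphere: normalised Gaussian vectors.
    g = rng.standard_normal((n, d))
    directions = g / np.linalg.norm(g, axis=1, keepdims=True)

    if kind == "exponential":
        # Radial density is proportional to r^(d-1) exp(-r): Gamma(d, 1).
        radius = rng.gamma(shape=d, scale=1.0, size=n)
    elif kind == "power":
        if alpha is None or alpha <= d:
            raise ValueError("the power-law density needs alpha > d")
        # With w = r^alpha the density of w is proportional to
        # w^(a-1) / (1 + w), a = d / alpha: a beta-prime(a, 1 - a) law,
        # which is the ratio of independent Gamma(a) and Gamma(1 - a).
        a = d / alpha
        w = rng.gamma(a, size=n) / rng.gamma(1.0 - a, size=n)
        radius = w ** (1.0 / alpha)
    else:
        raise ValueError(f"unknown kind: {kind!r}")
    return directions * radius[:, None]
```

With the same sample size, the farthest power-law point typically lies orders of magnitude farther out than the farthest Gaussian point, which is the heavy-tail effect behind the phenomena studied below.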

For large samples from any of these distributions we shall show that there exists a “core”—a region in which the density of points is very high and so placing unit balls around them completely covers the region. Consequently, the Čech complex inside the core is contractible. The size of the core obviously grows to infinity as the sample size \(n\) goes to infinity, but its exact size will depend on the underlying distribution. For the three examples above, we study the size of the core in Sect. 2.1. Denoting a lower bound for the radius of the core by \(R_n^{\mathrm {c}}\), we will show that

Note that in all three cases we have tacitly assumed that the cores are balls, a natural consequence of the spherical symmetry of the probability densities.

Beyond the core, the topology is more varied. For fixed \(n\), there may be additional isolated components, but no longer placed densely enough to connect with one another and to form a contractible set. Indeed, we shall show that the individual components will typically have nontrivial homology. Thus, in this region, the topology of the Čech complex is highly nontrivial, and many homology elements of different orders appear. We call this phenomenon “crackling”, akin to the well-known phenomenon caused by noise interference in audio signals and commonly referred to as crackling.

As for core size, the exact crackling behaviour depends on the choice of distribution. It turns out that Gaussian samples do not lead to crackling, but the other two cases do. To describe this, with some imprecision of notation we shall write \([a,b)\) not only for an interval on the real line, but also for the annulus

$$\begin{aligned} [a,b) \triangleq \big \{ x\in {\mathbb {R}}^d : a \le \Vert x\Vert < b \big \}. \end{aligned}$$

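In Sects. 2.2 and 2.3 we shall show that the exterior of the core can be divided into disjoint spherical annuli at radii

$$\begin{aligned} R_n^{\mathrm {c}} \ll R_{d-1,n} \ll R_{d-2,n} \ll \cdots \ll R_{0,n}, \end{aligned}$$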

where by \( a_n \ll b_n\) we mean that \((b_n-a_n) \rightarrow \infty \) as \(n\rightarrow \infty \). These radii are defined differently for each of the two crackling distributions, and we will show that there are different types of crackling (i.e. of homology) dominating in different regions.

In \([R_{0,n},\infty )\) there are mostly disconnected points, and no structures with nontrivial homology. In \([R_{1,n},R_{0,n})\) connectivity is a bit higher, and a finite number of non-trivial \(1\)-cycles appear. In \([R_{2,n},R_{1,n})\) we have a finite number of non-trivial \(2\)-cycles, while the number of \(1\)-cycles grows to infinity as \(n\rightarrow \infty \). In general, in \([R_{k,n},R_{k-1,n})\), as \(n\rightarrow \infty \) we have a finite number of non-trivial \(k\)-cycles, infinitely many \(l\)-cycles for \(l<k\), and no cycles of dimension \(l>k\). In other words, the crackle starts with a pure dust at \(R_{0,n}\) and as we get closer to the core, higher dimensional homology gradually appears. See Fig. 2 in the following section for more details.

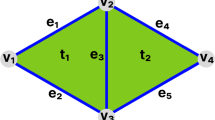

Fig. 2 The layered behaviour of crackle. Inside the core (\(B_{R_n^{\mathrm {c}}}\)) the complex consists of a single component and no cycles. The exterior of the core is divided into separate annuli. Going from right to left, we see how the Betti numbers grow. In each annulus we present the Betti number that was most recently changed.

As we already mentioned, the Gaussian distribution is fundamentally different from the other two, and does not lead to crackling. In Sect. 2.4 we show that, for the Gaussian distribution, there are hardly any points located outside the core. Thus, as \(n\rightarrow \infty \), the union of balls around the sample points becomes a giant contractible ball of radius of order \(\sqrt{2\log n}\).

It is now possible to understand a little better how the results of this paper relate to the noisy manifold learning problem discussed above. For example, if the distribution of the noise is Gaussian, our results imply that if the manifold is well behaved, and the sample size is moderate, noise outliers should not significantly interfere with homology recovery, since Gaussian noise does not introduce artificial homology elements with large samples. However, there is a delicate counterbalance here between “moderate” and “large”. Once the sample size is large, the core is also large, and the reconstructed manifold will have the topology of \(\mathcal {M}\oplus B_{O(\sqrt{2\log n})}(0)\), where \(\oplus \) is Minkowski addition. As \(n\) grows, the core will eventually envelop any compact manifold, and thus the homology of \(\mathcal {M}\) will be hidden by that of the core.

On the other hand, if the distribution of the noise is power-law or exponential, then noise outliers will typically generate extraneous homology elements that, for almost any sample size, will complicate the estimation of the original manifold. Furthermore, increasing the sample size in no way solves this problem. Note that this issue is in addition to the fact that increasing the sample size will, as in the Gaussian case, create the problem of a large core concealing the topology of \(\mathcal {M}\).

Thus, from a practical point of view, the message of this paper is that outliers cause problems in manifold estimation when noise is present, a fact well known to all practitioners who have worked in the area. What is qualitatively new here is a quantification of how this happens, and how it relates to the distribution of the noise. We do not attempt to solve the estimation problem here, but unfortunately it follows from the results of this paper that algorithms for handling outliers will probably require knowing at least the tail behaviour of the error distribution, even though in practical situations one generally does not want to treat this as prior knowledge.

1.2 On Persistence Intervals

While the above discussion has concentrated on the persistence of noise induced crackle as sample sizes grow, and the regions in \({\mathbb {R}}^d\) in which different types of homology appear, the proofs below also yield information about the more classical persistence diagrams of topological data analysis (cf. [8, 10–12]).

For example, in the two cases for which crackle persists—the power-law and exponential cases—estimates of the type appearing in Sect. 3 indicate that, with high probability, there exist extremely long bars in the bar code representation of persistent homology. Up to lower order corrections, preliminary calculations show that bar lengths for the \(k\)-th homology can be as large as \(O(n^{a_k})\) for the power-law case, and \(b_k (\log \log n)\) for the exponential case, for appropriate \(a_k\) and \(b_k\). More detailed studies of these phenomena will appear in a later publication.

1.3 Poisson Processes

Although we have described everything so far in terms of a random sample \(\mathcal {X}\) of \(n\) points taken from a density \(f\), there is another way to approach the results of this paper, and that is to replace the points of \(\mathcal {X}\) with the points of a \(d\)-dimensional Poisson process \(\mathcal {P}_n\) whose intensity function is given by \(\lambda _n = n f\). In this case the number of points is no longer fixed, but has mean \(n\). Similarly to many phenomena in random geometric graphs (see [18]), the results of this paper hold without any change if we replace \(\mathcal {X}\) by \(\mathcal {P}_n\).
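A Poisson process with intensity \(nf\) can be simulated by first drawing a Poisson-distributed number of points with mean \(n\) and then sampling that many i.i.d. points from \(f\). The sketch below illustrates this; the name `sample_poissonized` and the `sampler` callback (any function returning `m` i.i.d. draws from \(f\)) are assumptions made for illustration.

```python
import numpy as np


def sample_poissonized(n, sampler, rng=None):
    """Points of a Poisson process with intensity n * f.

    `sampler(m, rng)` must return m i.i.d. points drawn from the density f.
    The number of points is Poisson distributed with mean n.
    """
    rng = np.random.default_rng() if rng is None else rng
    count = rng.poisson(n)  # random sample size with mean n
    return sampler(count, rng)
```

For instance, `sample_poissonized(1000, lambda m, rng: rng.standard_normal((m, 2)))` returns roughly, but not exactly, 1000 standard Gaussian points in the plane.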

1.4 Disclaimers

Before starting the paper in earnest, and so as not to be accused of myopia, we note that the subject of manifold learning is obviously much broader than that described above, and algorithms for “estimating” an underlying manifold from a finite sample abound in the statistics and computer science literatures. Very few of them, however, take the algebraic point of view that we or the literature quoted above take. Furthermore, we note that other important results about the homology of Rips and Čech complexes for various distributions can be found in the papers [4–6, 13, 14]. While the methods and emphases of these papers are rather different, they demonstrate phenomena similar to the ones in this paper. The study of random geometric complexes typically concentrates on situations for which the number of points (\(n\)) goes to infinity and the radius (\(r_n\)) involved in defining the complexes goes to zero. Decreasing the radius \(r_n\) plays a similar role to increasing \(R_n\), as treated in this paper. Both actions result in making the complex sparser. For example, if \(r_n \rightarrow 0\) relatively slowly (\(r_n = \Omega ((\log n/n)^{1/d})\)), the entire complex behaves like the “core” discussed earlier. On the other hand, if \(r_n\rightarrow 0\) fast enough (\(r_n = o(n^{-1/d})\)), then the entire complex behaves like “crackle”. For more details see [13].

2 Results

In this section we shall present all our main results, along with some discussion, more technical than that of the Introduction. Recall from Sect. 1.3 that although we present all results for the point set \(\mathcal {X}\), they also hold if we replace the points of \(\mathcal {X}\) by the points of an appropriate Poisson process. All proofs are deferred to Sect. 3.

2.1 The Core of Distributions with Unbounded Support

We start by examining the core of the power-law, exponential and Gaussian distributions. These distributions are spherically symmetric and the samples are concentrated near the origin. By “core” we refer to a centered ball \(B_{R_n}\triangleq B_{R_n}(0) \subset {\mathbb {R}}^d\) containing a very large number of points from the sample \(\mathcal {X}_n\), such that

$$\begin{aligned} B_{R_n} \subset \bigcup _{X\in \mathcal {X}_n\cap B_{R_n}} B_1(X), \end{aligned}$$

i.e. the unit balls around the sample points completely cover \(B_{R_n}\). In this case the homology of \(\bigcup _{X\in \mathcal {X}_n\cap B_{R_n}} B_1(X)\), or equivalently, of \(\check{C}(\mathcal {X}_n \cap B_{R_n},1)\), is trivial. Obviously, as \(n\rightarrow \infty \), the radius \(R_n\) grows as well.

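Let \(\big \{R_n\big \}_{n=1}^\infty \) be an increasing sequence of positive numbers. Denote by \(C_n\) the event that \(B_{R_n}\) is covered, i.e.

$$\begin{aligned} C_n \triangleq \Big \{ B_{R_n} \subset \bigcup _{X\in \mathcal {X}_n\cap B_{R_n}} B_1(X) \Big \}. \end{aligned}$$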

We wish to find the largest possible value of \(R_n\) such that \(\mathbb {P}\big (C_n\big ) \rightarrow 1\). The following theorem presents lower bounds for this value.

Theorem 1

Let \(\varepsilon >0\), and define

where the three distributions are given by (1.2)–(1.4), and

If \(R_n \le R_n^{\mathrm {c}}\), then

Theorem 1 implies that the core size has a completely different order of magnitude for each of the three distributions. The heavy-tailed, power-law distribution has the largest core, while the core of the Gaussian distribution is the smallest. While Theorem 1 provides a lower bound to the size of the core, the results in Theorems 2, 3 and 4 indicate the existence of an equivalent upper bound. In fact we believe that the upper bound would differ from the lower bound in Theorem 1 only by a constant, but this will not be pursued in this paper. In the following sections we shall study the behaviour of the Čech complex outside the core.

2.2 How Power-Law Noise Crackles

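In this section we explore the crackling phenomenon in the power-law distribution with a density function given by

$$\begin{aligned} f(x) = f_{\mathrm {p}}(x) = \frac{c_{\mathrm {p}}}{1+\Vert x\Vert ^{\alpha }}, \end{aligned}$$

where \(\alpha > d\). Let \(B_{R_n}\subset {\mathbb {R}}^d\) be the centered ball with radius \(R_n\), and let

$$\begin{aligned} \check{C}_n \triangleq \check{C}\big (\mathcal {X}_n \setminus B_{R_n},1\big ) \end{aligned}$$

be the Čech complex constructed from sample points outside \(B_{R_n}\). We wish to study

$$\begin{aligned} \beta _{k,n} \triangleq \beta _k\big (\check{C}_n\big ), \end{aligned}$$

the \(k\)-th Betti number of \(\check{C}_n\).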

Note that the minimum number of points required to form a non-trivial \(k\)-dimensional cycle (\(k\ge 1\)) is \(k+2\). In this case, the \(k\)-cycle is the boundary of the \(k+1\) dimensional simplex spanned by these points. For \(k\ge 1\) and \(\mathcal {Y}\subset {\mathbb {R}}^d\), denote

i.e. \(T_k\) takes the value \(1\) if \(\check{C}(\mathcal {Y},1)\) is a minimal \(k\)-dimensional cycle, and \(0\) otherwise. This indicator function will be used to define the limits of the Betti numbers.
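For \(k=1\) the minimal cycle is an empty triangle: three points whose unit balls intersect pairwise but have no common point. The following sketch tests this condition for three points. It relies on the elementary fact that balls of a common radius around the points share a point exactly when the smallest ball enclosing the points has at most that radius. The function names are hypothetical and the code is an illustration, not the paper's definition of \(T_1\) verbatim.

```python
import numpy as np


def min_enclosing_radius(a, b, c):
    """Radius of the smallest ball containing three points of R^d."""
    a, b, c = (np.asarray(p, dtype=float) for p in (a, b, c))
    # Side lengths, sorted so that z is the longest.
    x, y, z = sorted([np.linalg.norm(a - b),
                      np.linalg.norm(b - c),
                      np.linalg.norm(c - a)])
    if x * x + y * y <= z * z:
        # Right, obtuse or degenerate triangle: longest side is a diameter.
        return z / 2.0
    # Acute triangle: circumradius via Heron's formula.
    s = (x + y + z) / 2.0
    area = np.sqrt(s * (s - x) * (s - y) * (s - z))
    return x * y * z / (4.0 * area)


def is_minimal_1_cycle(a, b, c, radius=1.0):
    """True if the Cech complex on {a, b, c} is an empty triangle."""
    pts = [np.asarray(p, dtype=float) for p in (a, b, c)]
    # All three edges are present when the balls intersect pairwise.
    edges_present = all(
        np.linalg.norm(pts[i] - pts[j]) <= 2 * radius
        for i, j in [(0, 1), (1, 2), (0, 2)]
    )
    # The 2-simplex is present when the three balls share a point.
    filled = min_enclosing_radius(a, b, c) <= radius
    return edges_present and not filled
```

An equilateral triangle with side length 1.9 has circumradius \(1.9/\sqrt{3} > 1\), so it forms a minimal \(1\)-cycle, whereas side length 1.5 gives a filled triangle and side length 2.5 gives three isolated points.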

Theorem 2

If \(\lim _{n\rightarrow \infty }n R_n^{-\alpha } = 0\), then

where

and where \(s_{d-1}\) is the surface area of the \((d-1)\)-dimensional unit sphere in \({\mathbb {R}}^d\).

Next, we define the following values, which will serve as critical radii for the crackle,

The following is a straightforward corollary of Theorem 2, and summarizes the behaviour of \({\mathbb {E}}\big \{{\beta _{k,n}}\big \}\) in the power-law case.

Corollary 1

For \(k\ge 0\) and \(\varepsilon >0\),

Theorem 2 and Corollary 1 reveal that the crackling behaviour is organized into separate “layers”, see Fig. 2. Dividing \({\mathbb {R}}^d\) into a sequence of annuli at radii

we observe a different behaviour of the Betti numbers in each annulus. We shall briefly review the behaviour in each annulus, in a decreasing order of radii values. The following description is mainly qualitative, and refers to expected values only.

- \([R_{0,n}^\varepsilon ,\infty )\): there are hardly any points (\(\beta _k\sim 0\), \(0\le k \le d-1\)).

- \([R_{0,n},R_{0,n}^\varepsilon )\): points start to appear, and \(\beta _0\sim \mu _{\mathrm {p},0}\). The points are very few and scattered, so no cycles are generated (\(\beta _k \sim 0\), \(1\le k \le d-1\)).

- \([R_{1,n}^\varepsilon ,R_{0,n})\): the number of components grows to infinity, but no cycles are formed yet (\(\beta _0 \sim \infty \), and \(\beta _k \sim 0\), \(1 \le k \le d-1\)).

- \([R_{1,n},R_{1,n}^\varepsilon )\): a finite number of \(1\)-dimensional cycles show up, among the infinite number of components (\(\beta _0 \sim \infty \), \(\beta _1\sim \mu _{\mathrm {p},1}\), and \(\beta _k \sim 0\), \(2 \le k \le d-1\)).

- \([R_{2,n}^\varepsilon ,R_{1,n})\): we have \(\beta _0\sim \infty \), \(\beta _1\sim \infty \), and \(\beta _k\sim 0\) for \(k\ge 2\).

This process goes on, until the \((d-1)\)-dimensional cycles appear:

- \([R_{d-1,n},R_{d-1,n}^\varepsilon )\): we have \(\beta _{d-1}\sim \mu _{\mathrm {p},d-1}\) and \(\beta _k\sim \infty \) for \(0\le k \le d-2\).

- \([R_n^{\mathrm {c}},R_{d-1,n})\): just before we reach the core, the complex exhibits the most intricate structure, with \(\beta _k \sim \infty \) for \(0\le k \le d-1\).

Note that there is a very fast phase transition as we move from the contractible core to the first crackle layer. At this point we do not know exactly where and how this phase transition takes place. A reasonable conjecture would be that the transition occurs at \(R_n = n^{1/\alpha }\) (since at this radius the term \(n R_n^{-\alpha }\) that appears in Theorem 2 changes its limit, affecting the limiting Betti numbers). However, this remains for future work.

2.3 How Exponential Noise Crackles

In this section we focus on the exponential density function \(f=f_{\mathrm {e}}\). The results in this section are very similar to those for the power-law distribution, and we shall describe them briefly. Differences lie in the specific values of the \(R_{k,n}\) and in the terms in the limit formulae.

Theorem 3

If \(\lim _{n\rightarrow \infty }n\mathrm{e}^{-R_n} = 0\), then

where

and where \(y_i^1\) is the first coordinate of \(y_i\in {\mathbb {R}}^d\).

Next, define

From Theorem 3 we can conclude the following.

Corollary 2

For \(k\ge 0\) and \(\varepsilon >0\),

As in the power-law case, Theorem 3 implies the same “layered” behaviour, the only difference being in the values of \(R_{k,n}\). From examining the values of \(R_n^{\mathrm {c}}\) and \(R_{k,n}\) it is reasonable to guess that the phase transition in the exponential case occurs at \(R_n = \log n\).

2.4 Gaussian Noise Does Not Crackle

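Simplicial complexes built over vertices sampled from the standard Gaussian distribution behave completely differently from those we saw in the power-law and exponential cases. Define

$$\begin{aligned} R_{0,n}^\varepsilon \triangleq \sqrt{2 \log n + \big (d-2+\varepsilon \big ) \log \log n}, \end{aligned}$$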

then

Theorem 4

If \(f=f_{\mathrm {g}}\), \(\varepsilon > 0\), and \(R_n = R_{0,n}^\varepsilon \), then for \(0\le k \le d-1\)

Note that in the Gaussian case \(\lim _{n\rightarrow \infty }\big ( R_{0,n}^\varepsilon - R_n^{\mathrm {c}}\big ) = 0\). This implies that as \(n\rightarrow \infty \) we have the core which is contractible, and outside the core there is hardly anything. In other words, the ball placed around every new point we add to the sample immediately connects to the core, and thus, the Gaussian noise does not crackle.
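To get a feeling for how slowly this gap closes, the sketch below evaluates \(R_{0,n}^\varepsilon = \sqrt{2\log n + (d-2+\varepsilon )\log \log n}\), the expression used in the proof of Theorem 4, and compares it with \(\sqrt{2\log n}\). The latter is only the order of the core radius quoted in Sect. 1.1, used here as a stand-in for \(R_n^{\mathrm {c}}\); this substitution, like the function names, is an assumption made for illustration.

```python
import math


def gaussian_outer_radius(n, d, eps):
    """R_{0,n}^eps = sqrt(2 log n + (d - 2 + eps) log log n)."""
    return math.sqrt(2 * math.log(n) + (d - 2 + eps) * math.log(math.log(n)))


def gap_to_core_order(n, d, eps):
    """Difference between R_{0,n}^eps and the core-radius order sqrt(2 log n)."""
    return gaussian_outer_radius(n, d, eps) - math.sqrt(2 * math.log(n))
```

Evaluating `gap_to_core_order` at \(n = 10^6, 10^{12}, 10^{100}\) with \(d=3\) and \(\varepsilon = 0.5\) gives a positive, decreasing sequence; the decay is of order \(\log \log n/\sqrt{\log n}\), so it is visible only for very large \(n\).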

3 Proofs

We now turn to proofs, starting with the proof of the main result of Sect. 2.1.

3.1 The Core

Proof of Theorem 1

The proof covers all three distributions, except for specific calculations near the end. Take a grid on \({\mathbb {R}}^d\) with cubes of side length \(g = \frac{1}{2\sqrt{d}}\). Let \(\mathcal{{Q}}_n\) be the collection of cubes in this grid that are contained in \(B_{R_n}\). Let \(\tilde{C}_n\) be the following event

$$\begin{aligned} \tilde{C}_n \triangleq \big \{ Q\cap \mathcal {X}_n \ne \emptyset \ \text {for all}\ Q\in \mathcal{{Q}}_n \big \}, \end{aligned}$$

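i.e. \(\tilde{C}_n\) is the event that every cube in \(\mathcal{{Q}}_n\) contains at least one point from \(\mathcal {X}_n\). Recall the definition of \(C_n\),

$$\begin{aligned} C_n \triangleq \Big \{ B_{R_n} \subset \bigcup _{X\in \mathcal {X}_n\cap B_{R_n}} B_1(X) \Big \}. \end{aligned}$$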

Then it is easy to show that \(\tilde{C}_n \subset C_n\). The complementary event \(\tilde{C}_n^c\) is the event that at least one cube is empty. Thus,
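The event \(\tilde{C}_n\) is straightforward to test on a concrete sample. The sketch below enumerates the grid cubes of side length \(g = 1/(2\sqrt{d})\) (each of diameter \(g\sqrt{d} = 1/2\)) that lie inside \(B_{R}\), and checks that every one of them contains a sample point. The function names are hypothetical, and the grid is assumed to be aligned with the origin, a detail the proof does not need to specify.

```python
import itertools
import math

import numpy as np


def cubes_inside_ball(radius, d):
    """Index tuples of grid cubes [i*g, (i+1)*g]^d contained in B_radius(0)."""
    g = 1.0 / (2.0 * math.sqrt(d))
    m = math.ceil(radius / g)
    inside = []
    for idx in itertools.product(range(-m, m), repeat=d):
        # The corner farthest from the origin decides containment.
        far = [max(abs(i), abs(i + 1)) * g for i in idx]
        if math.sqrt(sum(c * c for c in far)) <= radius:
            inside.append(idx)
    return inside, g


def every_cube_occupied(points, radius):
    """True if each grid cube inside B_radius(0) holds a sample point."""
    pts = np.asarray(points, dtype=float)
    d = pts.shape[1]
    cubes, g = cubes_inside_ball(radius, d)
    # Map every sample point to the index of the cube containing it.
    occupied = {tuple(int(v) for v in row) for row in np.floor(pts / g)}
    return all(idx in occupied for idx in cubes)
```

The number of cubes returned by `cubes_inside_ball` is below the bound \((2R_n/g)^d\) used in the proof. The enumeration is exponential in \(d\), so the sketch is meant for small dimensions only.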

where

In addition, the number of cubes that are contained in \(B_{R_n}\) is less than \(\big (2{{R_n}/{g}}\big )^d\). Therefore,

Now, choose any \(\varepsilon > 0\) and set

where

It is easy to verify that in all cases we have

Thus, from (3.1) we conclude that \(\mathbb {P}(\tilde{C}_n) \rightarrow 1\). Since \(\mathbb {P}\big (C_n\big ) \ge \mathbb {P}(\tilde{C}_n)\) we now have that for \(R_n = R_n^{\mathrm {c}}\), in each of the distributions,

which completes the proof.\(\square \)

3.2 Crackle: Notation and General Lemmas

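For \(R_n > 0\), set

$$\begin{aligned} \mathcal {X}_{n,R_n} \triangleq \big \{ X\in \mathcal {X}_n : \Vert X\Vert \ge R_n \big \}, \end{aligned}$$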

i.e. \(\mathcal {X}_{n,R_n}\) consists of the points of \(\mathcal {X}_n\) located outside the ball \(B_{R_n}\). Next, recall the definition of \(T_k\),

for \(\mathcal {Y}\subset {\mathbb {R}}^d\), and write

where \(k\ge 1\). Observe that

We will evaluate the limits of \({\mathbb {E}}\big \{{S_{k,n}}\big \},\,{\mathbb {E}}\{{\widehat{S}_{k,n}}\}\) and \({\mathbb {E}}\big \{{L_{k,n}}\big \}\) and deduce from these the limit of \({\mathbb {E}}\big \{{\beta _{k,n}}\big \}\).

In addition, set

The following two lemmas are purely technical, but will considerably simplify our computations later.

Lemma 1

Let \(f:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\) be a spherically symmetric probability density. Then,

where \(s_{d-1}\) is the surface area of the \((d-1)\)-dimensional unit sphere.

Proof

\(S_{0,n}\) is simply a sum of Bernoulli variables, therefore

Writing the integral in polar coordinates yields

where \(J(\theta ) = | {\frac{\partial x}{\partial \theta }}|\). Since \(f\) is spherically symmetric, \(f(r\theta ) = f(r)\), and therefore

The proof for \(\hat{S}_{0,n}\) is similar, using the fact that the probability that a point \(x\in {\mathbb {R}}^d\) is disconnected from the rest of the complex \(\check{C}(\mathcal {X}_n,1)\) is \((1-p(x))^{n-1}\).\(\square \)
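The polar-coordinate reduction can be checked numerically. The sketch below assumes that \(S_{0,n}\) counts the sample points outside \(B_{R_n}\), so that its expectation is \(n\,s_{d-1}\int _{R_n}^\infty r^{d-1} f(r)\,dr\), and evaluates this integral with the trapezoid rule for the standard Gaussian density in the plane, where the exact value is \(n\,\mathrm{e}^{-R_n^2/2}\). The function names, the truncation point `r_max` and the number of grid steps are illustrative choices, not part of the paper.

```python
import math

import numpy as np


def expected_count_outside(n, radius, d, radial_density,
                           r_max=50.0, steps=200_000):
    """n * s_{d-1} * integral_{radius}^{r_max} r^(d-1) f(r) dr (trapezoid rule)."""
    # Surface area of the unit sphere in R^d.
    s = 2.0 * math.pi ** (d / 2.0) / math.gamma(d / 2.0)
    r = np.linspace(radius, r_max, steps)
    y = r ** (d - 1) * radial_density(r)
    integral = float(np.sum((y[1:] + y[:-1]) * np.diff(r)) / 2.0)
    return n * s * integral


def gaussian_radial_density(r, d=2):
    """Standard Gaussian density in R^d as a function of r = ||x||."""
    return np.exp(-r ** 2 / 2.0) / (2.0 * math.pi) ** (d / 2.0)
```

Calling `expected_count_outside(1000, 2.0, 2, gaussian_radial_density)` should agree with \(1000\,\mathrm{e}^{-2}\) up to the quadrature error, and a Monte Carlo count of Gaussian points with \(\Vert x\Vert > 2\) gives the same fraction up to sampling noise.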

Lemma 2

Let \(f:{\mathbb {R}}^d\rightarrow {\mathbb {R}}\) be a spherically symmetric probability density. Then, for \(k\ge 1\),

where \(s_{d-1}\) is the surface area of the \((d-1)\)-dimensional unit sphere, and where

Proof

The proof is in the same spirit as the proof of Lemma 1, but technically more complicated. Thinking of \(S_{k,n}\) as a sum of Bernoulli variables, we have that

Let \(I_k\) denote the integral above. Then, using the change of variables

yields

Moving to polar coordinates yields

where \(J(\theta ) = | {\frac{\partial x}{\partial \theta }}|\), and \(f(x) = f(\Vert x\Vert )\) by the spherical symmetry assumption. Set

Since \(T_k\) is rotation invariant, it is easy to show that for every \(\theta \in S^{d-1}\)

Thus,

This completes the proof for \(S_{k,n}\). The proof for \(\widehat{S}_{k,n}\) is similar.\(\square \)

In what follows, we shall use the following elementary limits:

1. For every \(k > 0\),

$$\begin{aligned} \lim _{n\rightarrow \infty }n^{-k} \binom{n}{k} = \frac{1}{k!}. \end{aligned}\tag{3.5}$$

2. For every sequence \(a_n\rightarrow 0\) and \(k\ge 0\),

$$\begin{aligned} \lim _{n\rightarrow \infty }\frac{(1-a_n)^{n-k}}{\mathrm{e}^{-na_n}} = 1. \end{aligned}\tag{3.6}$$
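Both limits are easy to check numerically. The sketch below evaluates the ratios in (3.5) and (3.6) for finite \(n\); the function names are hypothetical, and the check of (3.6) uses the particular choice \(a_n = c/n\), for which \(na_n\) stays bounded. Sequences that decay more slowly are not covered by this sketch.

```python
import math


def binomial_ratio(n, k):
    """k! * n^(-k) * C(n, k), which tends to 1 by (3.5)."""
    return math.comb(n, k) * math.factorial(k) / n ** k


def exponential_ratio(n, k, a_n):
    """(1 - a_n)^(n - k) / exp(-n * a_n), the ratio appearing in (3.6)."""
    return (1.0 - a_n) ** (n - k) / math.exp(-n * a_n)
```

For example, `binomial_ratio(10**6, 3)` and `exponential_ratio(10**6, 3, 5 / 10**6)` both differ from 1 by only a few parts in a million.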

3.3 Crackle: The Power Law Distribution

In this section we prove the results in Sect. 2.2. First, we need a few lemmas.

Lemma 3

If \(f=f_{\mathrm {p}}\), and \(R_n\rightarrow \infty \), then

where \(\mu _{\mathrm {p},0}\) is defined in (2.1).

If, in addition, \(nR_n^{-\alpha }\rightarrow 0\), then

Proof

From Lemma 1 we have that

Making the change of variables \(r\rightarrow R_n \rho \) yields

Applying the dominated convergence theorem to the previous integral gives

This proves the first part of the lemma.

Next, from Lemma 1 we have that

The power term is bounded by \(1\) and therefore will not affect the conditions needed for dominated convergence. Thus, using (3.6), we only need to evaluate its limit.

and after the change of variables \(r\rightarrow R_n\rho \) we have

If \(nR_n^{-\alpha }\rightarrow 0\), then, by dominated convergence, we have

Thus,

and therefore we have

This completes the proof of the second part of the lemma.\(\square \)

Lemma 4

If \(f=f_{\mathrm {p}}\), and \(R_n\rightarrow \infty \) then

where \(\mu _{\mathrm {p},k}\) is defined in (2.2). If, in addition,\(n R_n^{-\alpha } \rightarrow 0\), then

Proof

The proof is in the spirit of the proof of Lemma 3, but technically more complicated. From Lemma 2 we have that

where

Making the change of variables \(r \rightarrow R_n\rho \) yields

Thus, using (3.5),

It is easy to show that the integrand is bounded by an integrable term, so the dominated convergence theorem applies, yielding

This proves the first part of the lemma.

Next, the terms \(G_k(r)\) and \(\hat{G}_k(r)\) in Lemma 2 differ only by the term \((1-p(r\mathrm {e}_1, r\mathrm {e}_1+\mathbf {y}))^{n-k-2}\), so dominated convergence still applies. Now,

and substituting \(r\rightarrow R_n\rho \) yields

If \(nR_n^{-\alpha }\rightarrow 0\), then, by dominated convergence, we have

Thus,

and therefore, using (3.6),

This completes the proof of the second part of the lemma.\(\square \)

Lemma 5

If \(f=f_{\mathrm {p}}\), and \(R_n\rightarrow \infty \) then

for some \(\hat{\mu }_{\mathrm {p},k}> 0\).

Proof

The proof is very similar to the proof of Lemma 4. We need only replace \(T_k\) with an indicator function that tests whether a sub-complex generated by \(k+3\) points is connected. The exact value of \(\hat{\mu }_{\mathrm {p},k}\) will not be needed anywhere.\(\square \)

We can now prove Theorem 2.

Proof of Theorem 2

To prove the limit for \(\beta _{0,n}\) simply combine Lemma 3 with the inequality (3.2). To prove the limit for \(\beta _{k,n}\), \(k\ge 1 \), combine Lemmas 4 and 5 with the inequality (3.3).\(\square \)

3.4 Crackle: The Exponential Distribution

In this section we wish to prove Theorem 3. We start with the following lemmas.

Lemma 6

If \(f=f_{\mathrm {e}}\), and \(R_n\rightarrow \infty \) then

where \(\mu _{\mathrm {e},0}\) is defined in (2.3).

If, in addition, \(n\mathrm{e}^{-R_n}\rightarrow 0\) then

Proof

From Lemma 1 we have that

Using the change of variables \(r\rightarrow \rho + R_n\) yields

Applying dominated convergence to the last integral yields

This proves the first part of the lemma.

Next, from Lemma 1 we have that

The power term will not affect the dominated convergence conditions. Thus, we only need to evaluate its limit.

and after the change of variables \(r\rightarrow \rho +R_n\) we have

If \(n\mathrm{e}^{-R_n}\rightarrow 0\), then

Thus,

and therefore, using (3.6), we have

This completes the proof of the second part of the lemma.\(\square \)

Lemma 7

If \(f=f_{\mathrm {e}}\), and \(R_n\rightarrow \infty \) then

where \(\mu _{\mathrm {e},k}\) is defined in (2.4).

If, in addition, \(n\mathrm{e}^{-R_n}\rightarrow 0\) then

Proof

From Lemma 2 we have that

where

Making the change of variables \(r\rightarrow \rho + R_n\) yields

The last integral can be easily shown to satisfy the conditions of the dominated convergence theorem. In addition, it is easy to show that

where \(y_i^1\) is the first coordinate of \(y_i \in {\mathbb {R}}^d\), and also that

Altogether, we have that

proving the first part of the lemma.

Next, as in the proof of Lemma 4, we need to evaluate the term \(p(r\mathrm {e}_1, r\mathrm {e}_1+\mathbf {y})\).

The change of variables \(r\rightarrow \rho +R_n\) yields

If \(n\mathrm{e}^{-R_n}\rightarrow 0\), then

Thus,

and therefore,

This completes the proof.\(\square \)

Lemma 8

If \(f=f_{\mathrm {e}}\), and \(R_n\rightarrow \infty \) then

where \(\hat{\mu }_{\mathrm {e},k}> 0\).

Proof

As in the proof of Lemma 5, we mimic the proof of Lemma 7, replacing \(T_k\) with an indicator function that tests whether a sub-complex generated by \(k+3\) points is connected.\(\square \)

Proof of Theorem 3

The proof follows the same steps as the proof of Theorem 2.\(\square \)

3.5 Crackle: The Gaussian Distribution

In this section we prove Theorem 4.

Proof of Theorem 4

From Lemma 1 we have that

Making the change of variables \(r \rightarrow (\rho ^2 + R_n^2)^{1/2}\), which implies \(dr = \frac{\rho }{(\rho ^2+R_n^2)^{1/2}}\,d\rho \), we have

The integrand is bounded, and applying dominated convergence we have

Taking \(R_n = R_{0,n}^\varepsilon \triangleq \sqrt{2 \log n + \big ({d-2}+\varepsilon \big ) \log \log n}\), we have

and so

which implies that

Finally, for every \(0 \le k \le d-1\),

Therefore,

completing the proof.\(\square \)

References

1. Adler, R.J., Bobrowski, O., Borman, M.S., Subag, E., Weinberger, S.: Persistent homology for random fields and complexes. In: Borrowing Strength: Theory Powering Applications—A Festschrift for Lawrence D. Brown, pp. 124–143. Institute of Mathematical Statistics, Beachwood (2010)

2. Aronshtam, L., Linial, N., Łuczak, T., Meshulam, R.: Collapsibility and vanishing of top homology in random simplicial complexes. Discrete Comput. Geom. 49(2), 317–334 (2013)

3. Babson, E., Hoffman, C., Kahle, M.: The fundamental group of random 2-complexes. J. Am. Math. Soc. 24(1), 1–28 (2011)

4. Bobrowski, O.: Algebraic Topology of Random Fields and Complexes. Ph.D. thesis, Faculty of Electrical Engineering, Technion-Israel Institute of Technology (2012). http://www.graduate.technion.ac.il/Theses/Abstracts.asp?Id=26908

5. Bobrowski, O., Adler, R.J.: Distance functions, critical points, and topology for some random complexes (2011). arXiv:1107.4775

6. Bobrowski, O., Mukherjee, S.: The topology of probability distributions on manifolds. Probab. Theory Relat. Fields, 1–36 (2014). doi: 10.1007/s00440-014-0556-x

7. Borsuk, K.: On the imbedding of systems of compacta in simplicial complexes. Fundam. Math. 35(1), 217–234 (1948)

8. Carlsson, G.: Topology and data. Bull. Am. Math. Soc. 46(2), 255–308 (2009)

9. Cohen, D.C., Farber, M., Kappeler, T.: The homotopical dimension of random 2-complexes (2010). arXiv:1005.3383

10. Edelsbrunner, H., Harer, J.: Persistent homology—a survey. Contemp. Math. 453, 257–282 (2008)

11. Edelsbrunner, H., Harer, J.L.: Computational Topology: An Introduction. American Mathematical Society, Providence, RI (2010)

12. Ghrist, R.: Barcodes: the persistent topology of data. Bull. Am. Math. Soc. 45(1), 61–75 (2008)

13. Kahle, M.: Random geometric complexes. Discrete Comput. Geom. 45(3), 553–573 (2011)

14. Kahle, M., Meckes, E.: Limit theorems for Betti numbers of random simplicial complexes. Homol. Homotopy Appl. 15(1), 343–374 (2013)

15. Meshulam, R., Wallach, N.: Homological connectivity of random k-dimensional complexes. Random Struct. Algorithms 34(3), 408–417 (2009)

16. Niyogi, P., Smale, S., Weinberger, S.: Finding the homology of submanifolds with high confidence from random samples. Discrete Comput. Geom. 39(1–3), 419–441 (2008)

17. Niyogi, P., Smale, S., Weinberger, S.: A topological view of unsupervised learning from noisy data. SIAM J. Comput. 40(3), 646–663 (2011)

18. Penrose, M.: Random Geometric Graphs. Oxford Studies in Probability, vol. 5. Oxford University Press, Oxford (2003)

19. Pippenger, N., Schleich, K.: Topological characteristics of random triangulated surfaces. Random Struct. Algorithms 28(3), 247–288 (2006)

Acknowledgments

Adler and Bobrowski were supported in part by AFOSR FA8655-11-1-3039. Weinberger was supported in part by AFOSR FA9550-11-1-0216. The authors would like to thank Yuliy Baryshnikov, Matthew Strom Borman, Matthew Kahle, and Katherine Turner for a number of interesting and useful conversations, and Peter Landweber for useful comments on the first revision of this paper.

Cite this article

Adler, R.J., Bobrowski, O. & Weinberger, S. Crackle: The Homology of Noise. Discrete Comput Geom 52, 680–704 (2014). https://doi.org/10.1007/s00454-014-9621-6

DOI: https://doi.org/10.1007/s00454-014-9621-6