Abstract

This study investigated the influence of five problem characteristics on students’ achievement-related classroom behaviors and academic achievement. Data from 5,949 polytechnic students enrolled in 170 PBL courses were analyzed by means of path analysis. The five problem characteristics were: (1) problem clarity, (2) problem familiarity, (3) the extent to which the problem stimulated group discussion, (4) self-study, and (5) identification of learning goals. The results showed that problem clarity led to more group discussion, identification of learning goals, and self-study than problem familiarity did. Problem familiarity, on the other hand, had a stronger direct impact on academic achievement.

Introduction

There are three key elements that characterize problem-based learning (PBL): (1) problems, (2) tutors, and (3) students (Majoor et al. 1990). To gain a more in-depth understanding of how and why PBL works, researchers generally take one of two approaches. One approach is to focus on one specific element of PBL. For instance, various studies have been carried out to understand the role of the tutor (e.g., Schmidt et al. 1993). Another approach is to look at more than one element of PBL, such as the relationships between problems, tutors, and students (Gijselaers and Schmidt 1990). Both approaches are useful in their own right: the first provides more detailed information about one specific element of PBL, whereas the second provides insight into the interrelationships between the different elements. The present study follows the first approach and focuses on one of the key elements of PBL: the problems.

As the name implies, problem-based learning is an instructional method in which problems are the focal point of the learning process. Problems are the instructional materials presented to students to trigger their learning. They are typically descriptions of real-life situations or phenomena that students are required to explain or resolve (Barrows and Tamblyn 1980a, b; Colliver 2000; Hmelo-Silver 2004). Problems are often presented in text format, sometimes accompanied by pictures or computer simulations. In the PBL literature they are also known as “triggers”, “cases”, or “scenarios”.

Problems serve to initiate the learning process. Schmidt (1983) summarized the PBL process in a seven-step model. To address the problem, students first discuss and analyze it in their groups. This leads to the generation of several learning goals that require further exploration. The students then use the learning goals as guidelines for their self-directed learning activities, during which they gather information to address the problem. Following that, the students reconvene, present to one another, and compile the information gathered. This results in the integration of their new knowledge in the context of the problem (Barrows and Tamblyn 1980a, b; Colliver 2000; Hmelo-Silver 2004).

Considering that problems play such an important role in PBL, it is crucial that they are of high quality, that is, that they are effective in helping students learn and understand a topic thoroughly. To examine the role problems play in PBL, Gijselaers and Schmidt (1990) used a causal model to investigate the relationships between the quality of problems, tutor performance, students’ prior knowledge, the extent of their group functioning, time spent on individual study, and their interest in the subject matter. In the causal model, the quality of problems, tutor performance, and students’ prior knowledge were categorized as input variables; group functioning and self-study time as process variables; and interest and academic achievement as output variables. The results showed that, of the three input variables, the quality of problems had a direct and strong influence on students’ group functioning, which influenced the time students spent on studying and eventually their academic achievement. These results suggest that a good problem leads to improved learning in PBL (Schmidt and Moust 2000). The study was replicated by Van Berkel and Schmidt (2000), confirming the earlier findings: problems have the strongest impact on student learning in PBL.

While these studies were the first to look at various elements of PBL and how these elements are related to each other and students’ academic achievement, they did not shed light on which particular aspect of the problem was essential for student learning. For instance, does it matter whether a problem is clearly stated to aid the students in their learning, and is this more important than having a problem that uses a familiar context that students can relate to? In turn, does this stimulate students to engage in group discussion and work hard during self-study?

In the above studies, all the characteristics that define good problems, which we will henceforth refer to as problem characteristics, were aggregated into one multi-dimensional scale: the “quality of problems”. By aggregating these problem characteristics, important information may have been lost, because it is not clear how the individual problem characteristics (i.e. the individual items in the rating scale) influence each other. For instance, it is possible that clearly formulated problems have a positive effect on group discussion but a negative effect on the generation of learning goals, because the problem appears so straightforward that only a few general learning goals are identified. Knowing how the individual problem characteristics are related to each other is important because it would provide insight into the causal mechanisms that make a good problem. In addition (as in the above studies), it would be useful to include outcome measures such as student engagement and academic achievement to examine how the problem characteristics predict student learning and performance.

In the present study we conducted an analysis at the micro level, using the same rating scale as Gijselaers and Schmidt (1990), and investigated how the individual problem characteristics (i.e. the items) are interrelated and how they relate to academic achievement. By conducting a micro level analysis, we expected to gain an in-depth understanding of what makes a problem effective in terms of student learning and achievement in the PBL classroom.

In our model, we followed the same classification of input, process, and output variables as the Gijselaers and Schmidt (1990) model, but at the micro level. As input variables we used problem clarity (“The problem statements were clear to me”) and problem familiarity (“I had difficulties relating the problems to what I already know”, reverse-scored). We hypothesized that these input variables would influence the process variables: group discussion (“The problems sufficiently triggered group discussion”), identification of learning goals (“Based on the problem triggers we were able to come up with a satisfactory list of learning goals”), and self-study (“The problems stimulated me to work hard during the study periods”). Moreover, we expected that group discussion would lead to the identification of learning goals and that both would influence how hard students work during self-study.

The variables used so far in the model are self-reports measured by means of Gijselaers and Schmidt’s rating scale items. Since these items are self-reports and not observational measures, we did not expect that these items would be strong direct predictors of students’ academic achievement. For instance, if students report that problems in a certain module stimulated them to work hard, it does not necessarily mean that they actually worked hard. We propose that in order to make adequate predictions of students’ academic achievement, the self-reported problem ratings need to translate themselves into actual engagement of the students, which should be observable behaviors in the classroom. For instance, if a problem is rated as being effective because it stimulates students to work hard, one would expect to observe students being involved in discussions, exerting effort on working on the problem, doing self-study, and persisting over a long period of time. Data reflecting these behaviors should be observational rather than self-reported since there is a possibility that students are not consciously aware of their learning behaviors in the classroom. To measure these behaviors, we included an observational measure obtained through teacher observations of students’ achievement-related classroom behaviors (Rotgans and Schmidt 2011).

In sum, we tested a micro level causal model in which we hypothesized that problem input variables would be related to problem process variables, and they in turn to outcome variables. However, to make adequate predictions of students’ academic achievement, based on the problem characteristics, we included an observational measure of students’ achievement-related classroom behaviors acting as a mediator between their self-reports and academic achievement. Data were collected from 5,949 polytechnic students in Singapore who were enrolled in 170 different PBL courses. Data were analyzed by means of path analysis.

Method

Participants

The sample consisted of 5,949 respondents (51% female and 49% male) with an average age of 19 years (SD = 1.79). The data were collected during the first and second semesters of the academic year 2009/2010, so the sample covered all 170 courses at the polytechnic.

Educational context

In this polytechnic, PBL is the instructional method for all modules and programs. In this approach, five students work together in a team under the guidance of a tutor, and each class comprises four to five teams. Unique to this polytechnic’s approach to PBL is that students work on one problem during the course of each day (Alwis and O’Grady 2002). A typical day starts with the presentation of a problem. Students discuss in their teams what they know, what they do not know, and what they need to find out. In doing so, students activate their prior knowledge, come up with tentative explanations for the problem, and formulate their own learning goals (Hmelo-Silver 2004; Schmidt 1983, 1993). A period of self-study follows, in which students individually and collaboratively find information to address the learning goals. At the end of the day, the teams come together to present, elaborate upon, and synthesize their findings.

Materials

Problem characteristics, the problem rating scale

A rating scale from Gijselaers and Schmidt (1990) was adapted to measure the quality of problems. The adapted instrument consisted of five items, all of which we used in our path model. We treated the following items as input variables: (1) The problem statements were clear to me; and (2) I had difficulties relating the problems to what I already know (reverse-scored). As process variables we used the following items: (3) The problems sufficiently triggered group discussion; (4) Based on the problem triggers we were able to come up with a satisfactory list of “things we need to find out”; and (5) The problems stimulated me to work hard during the study periods. Minor modifications to the wording of the items were made to better fit the educational context of the institution. For instance, at the institution, students know problems as “problem triggers”, and learning goals are generally referred to as “what we need to find out”. Other items that were irrelevant to the educational context of this study were removed. All items were scored on a 5-point Likert scale: 1 (not true at all), 2 (not true for me), 3 (neutral), 4 (true for me), and 5 (very true for me). Since the scale was administered towards the end of the semester, students were instructed to think about the problems they had encountered so far in their course and to respond based on their overall experience of the course.
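Item (2) is negatively worded, so it is reverse-scored before analysis such that higher values indicate greater problem familiarity. The text does not state how the reversal was done; the sketch below assumes the usual convention for a 1–5 scale, mapping a response x to 6 − x. The function name `reverse_score` and the sample responses are hypothetical.

```python
# Hypothetical helper (not from the study): reverse-score a 5-point Likert item.
LIKERT_MIN, LIKERT_MAX = 1, 5


def reverse_score(response: int) -> int:
    """Map 1<->5, 2<->4 and keep 3, so higher values mean greater familiarity."""
    if not LIKERT_MIN <= response <= LIKERT_MAX:
        raise ValueError(f"response must be in [{LIKERT_MIN}, {LIKERT_MAX}], got {response}")
    return LIKERT_MIN + LIKERT_MAX - response


# Item (2): "I had difficulties relating the problems to what I already know"
raw_item2 = [1, 2, 4, 5, 3]  # hypothetical responses
familiarity = [reverse_score(r) for r in raw_item2]
print(familiarity)  # [5, 4, 2, 1, 3]
```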

The construct validity of the slightly revised problem rating scale was established by means of confirmatory factor analysis (Byrne 2001). The hypothesised model assumed that all five items of each measure were manifestations of one underlying factor. The data fitted this model well: averaged across all five measurement occasions, the Chi-square/df ratio was 1.51 (p = .22), RMSEA = .01, and CFI = 1.00. All factor loadings were statistically significant. The reliability of the measure was determined by calculating Hancock’s coefficient H (Hancock and Mueller 2001). Coefficient H is a construct reliability measure for latent variable systems and a relevant alternative to the conventional Cronbach’s alpha. Hancock and Mueller (2001) recommended a cut-off value of .70 for coefficient H. The average coefficient H for the problem rating scales was .79. Overall, the results demonstrate that the psychometric characteristics of the scale are adequate.
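Coefficient H can be computed from the standardized factor loadings λᵢ of the items as H = 1 / (1 + 1 / Σ λᵢ²/(1 − λᵢ²)) (Hancock and Mueller 2001). The minimal Python sketch below implements that formula; the loadings in the example are hypothetical, since the individual loadings are not reported here.

```python
def coefficient_h(loadings):
    """Hancock and Mueller's coefficient H from standardized factor loadings.

    H = 1 / (1 + 1 / sum(l**2 / (1 - l**2))); each loading must satisfy |l| < 1.
    """
    total = 0.0
    for loading in loadings:
        if not -1.0 < loading < 1.0:
            raise ValueError(f"standardized loading must lie in (-1, 1), got {loading}")
        total += loading**2 / (1.0 - loading**2)
    if total == 0.0:
        raise ValueError("at least one non-zero loading is required")
    return 1.0 / (1.0 + 1.0 / total)


# Hypothetical loadings for a five-item scale (not the study's estimates).
h = coefficient_h([0.70, 0.70, 0.70, 0.70, 0.70])
print(round(h, 3))  # 0.828
```

A property worth noting: H is never smaller than the squared loading of the best single item, because it reflects the reliability of an optimally weighted composite rather than the unweighted sum score that Cronbach’s alpha describes.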

Achievement-related classroom behaviors

This measure was based on tutor observations of students’ achievement-related behaviors (Rotgans et al. 2008; Rotgans and Schmidt 2011). Tutors rated students’ participation, teamwork, presentation skills, and self-directed learning, and assigned each student a daily grade based on these observations. The grade was expressed on a 5-point performance scale: 0 (fail), 1 (conditional pass), 2 (acceptable), 3 (good), and 4 (excellent). The reliability and validity of this measure were established by Chai and Schmidt (2007). Their findings, based on 1,059 student observations by 230 tutors, yielded generalisability coefficients ranging from .55 to .94 (average = .83). Overall, these values indicate that the measure is highly reliable.

Academic achievement measure

Written achievement tests of 30–60 min duration were used as the academic achievement measure. Three test scores per course were aggregated and used in the analysis. Most of the tests combined open-ended and multiple-choice questions. Scores were expressed on a scale from 0 to 4 in increments of .5: 0 (full fail), .5 (fail), 1.0 (conditional pass I), 1.5 (conditional pass II), 2.0 (acceptable), 2.5 (satisfactory), 3.0 (good), 3.5 (very good), and 4.0 (excellent).

Procedure

The problem rating scale was administered online to the entire population at the end of the first and second semesters of the academic year 2009/2010. Responding to the rating scale was compulsory. Towards the end of each semester, students’ achievement-related classroom behavior scores were extracted, and the average score for each course (i.e. 15 observations per course) was determined. The same was done for the scores on the written achievement tests.

Analysis

The relationships between the variables were analyzed using path analysis. The Chi-square statistic with its degrees of freedom and p-value, and the root mean square error of approximation (RMSEA), were used as indices of absolute fit between the model and the data. The Chi-square statistic tests the closeness of fit between the observed and the model-implied (predicted) covariance matrices; a small Chi-square value relative to the degrees of freedom indicates a good fit (Byrne 2001), and a Chi-square/df ratio of less than 3 is considered indicative of a good fit. RMSEA is sensitive to model misspecification, is minimally influenced by sample size, and is not overly affected by estimation method (Fan et al. 1999). The lower the RMSEA value, the better the fit; a commonly reported cut-off value is .06 (Hu and Bentler 1999). In addition to these absolute fit indices, the comparative fit index (CFI) was calculated. The CFI ranges from zero to one, and a value greater than .95 is considered indicative of a good model fit (Byrne 2001).
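The fit indices named above can be computed from the model and baseline Chi-square values. The Python sketch below uses the standard point-estimate formulas RMSEA = √(max(χ² − df, 0) / (df (N − 1))) and CFI = 1 − max(χ²_M − df_M, 0) / max(χ²_B − df_B, χ²_M − df_M, 0), where B is the independence (baseline) model. It is an illustration, not the software the authors used; some programs use N rather than N − 1 in the RMSEA denominator. The df value in the example is a hypothetical placeholder.

```python
import math


def rmsea(chi2: float, df: int, n: int) -> float:
    """Point estimate of the root mean square error of approximation (N - 1 convention)."""
    if df <= 0 or n <= 1:
        raise ValueError("df must be positive and n must exceed 1")
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


def cfi(chi2_model: float, df_model: int, chi2_base: float, df_base: int) -> float:
    """Comparative fit index relative to the independence (baseline) model."""
    d_model = max(chi2_model - df_model, 0.0)
    d_base = max(chi2_base - df_base, d_model)  # d_model >= 0, so d_base >= 0
    return 1.0 if d_base == 0.0 else 1.0 - d_model / d_base


def meets_cutoffs(chi2, df, n, chi2_base, df_base):
    """Check the cut-offs named in the text: chi2/df < 3, RMSEA <= .06, CFI > .95."""
    return {
        "chi2/df < 3": chi2 / df < 3,
        "RMSEA <= .06": rmsea(chi2, df, n) <= 0.06,
        "CFI > .95": cfi(chi2, df, chi2_base, df_base) > 0.95,
    }


# Reported path model: chi2/df = 5.69 with N = 5,949. The df is not reported;
# df = 3 is a hypothetical placeholder (RMSEA depends only on the ratio here).
df = 3
print(round(rmsea(5.69 * df, df, 5949), 3))  # 0.028
```

Because RMSEA equals √((χ²/df − 1)/(N − 1)) whenever χ² > df, the reported path-model ratio of 5.69 with N = 5,949 gives an RMSEA of about .028, consistent with the reported .03; the CFA ratio of 1.51 gives about .009, consistent with the reported .01 if the full sample was used.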

Results

Table 1 depicts the descriptive statistics as well as the correlation matrix for the measured variables. The intercorrelations were low to moderate.

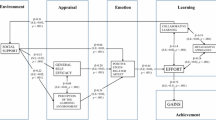

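The tested path model produced the following fit statistics: Chi-square/df = 5.69, p < .01, RMSEA = .03, CFI = 1.00. Although the Chi-square/df ratio exceeds the cut-off of 3, the Chi-square statistic is known to be inflated in very large samples such as this one; the RMSEA and CFI values are indicative of a good model fit. All path coefficients (i.e. standardized regression weights) were statistically significant at the 1% level. See Fig. 1 for an overview of the model.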

The outcome of the path model suggests that the input variable “problem clarity” had relatively strong effects on the process variables and weaker effects on the outcome variables. Having a clear problem statement seems to be important: a clearly formulated problem statement has a relatively strong effect on “group discussion” (standardized β = .38) and on the “identification of learning goals” (standardized β = .24). To a lesser extent, problem clarity was also directly related to variables further down the model: it directly influenced how hard students worked during self-study (standardized β = .18) and students’ “achievement-related classroom behaviors” (standardized β = .09). The second input variable, problem familiarity, was weakly related to the process variable “self-study” (standardized β = .04) and directly related to the output variables “achievement-related classroom behaviors” (standardized β = .15) and “academic achievement” (standardized β = .12).

The two process variables were relatively strongly related to each other, with group discussion leading to the identification and generation of learning goals (standardized β = .45). Both process variables were in turn relatively strong predictors of the first output variable, “self-study” (standardized β = .29 and .20, respectively), which in turn was a relatively good predictor of the observed “achievement-related classroom behaviors” (standardized β = .21). Finally, the observed achievement-related classroom behaviors were a relatively strong predictor of students’ performance on the written achievement tests (standardized β = .45). Overall, the model explained 23% of the variance in students’ academic achievement.
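In a recursive path model, the total standardized effect of one variable on another is the sum, over every directed path connecting them, of the products of the path coefficients along that path. The Python sketch below applies this rule to the coefficients reported above, under the assumption (not confirmed by the text, since Fig. 1 is not reproduced here) that these are the only paths in the model. The variable names are hypothetical labels.

```python
from collections import defaultdict

# Standardized path coefficients as reported in the Results text.
# ASSUMPTION: these are the only paths in the model (Fig. 1 is not reproduced here).
PATHS = {
    ("clarity", "group_discussion"): 0.38,
    ("clarity", "learning_goals"): 0.24,
    ("clarity", "self_study"): 0.18,
    ("clarity", "classroom_behaviors"): 0.09,
    ("familiarity", "self_study"): 0.04,
    ("familiarity", "classroom_behaviors"): 0.15,
    ("familiarity", "achievement"): 0.12,
    ("group_discussion", "learning_goals"): 0.45,
    ("group_discussion", "self_study"): 0.29,
    ("learning_goals", "self_study"): 0.20,
    ("self_study", "classroom_behaviors"): 0.21,
    ("classroom_behaviors", "achievement"): 0.45,
}


def build_graph(paths):
    """Turn {(source, target): beta} into an adjacency list {source: [(target, beta), ...]}."""
    graph = defaultdict(list)
    for (source, target), beta in paths.items():
        graph[source].append((target, beta))
    return graph


def total_effect(graph, source, target):
    """Sum over all directed paths source -> target of the product of path coefficients.

    Only valid for recursive (acyclic) models; a cycle would recurse forever.
    """
    if source == target:
        return 1.0
    return sum(beta * total_effect(graph, nxt, target) for nxt, beta in graph.get(source, []))


graph = build_graph(PATHS)
for predictor in ("clarity", "familiarity"):
    direct = PATHS.get((predictor, "achievement"), 0.0)
    total = total_effect(graph, predictor, "achievement")
    print(f"{predictor}: direct = {direct:.3f}, indirect = {total - direct:.3f}, total = {total:.3f}")
```

Under that assumption, the total effect on academic achievement is about .08 for problem clarity, all of it indirect, and about .19 for problem familiarity, of which .12 is direct. This illustrates the pattern described above: clarity acts mainly through group discussion, learning goals, and self-study, whereas familiarity has the stronger, more direct link to achievement.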

Discussion

The objective of the study was to conduct a micro level analysis to examine the interrelations between five problem characteristics and students’ achievement-related classroom behaviors as well as academic achievement in a PBL setting. To this end, 5,949 polytechnic level students, enrolled in PBL curricula, were asked to rate five problem characteristics for 170 courses. The results of the path analysis suggest that particularly the input variable problem clarity had a relatively large effect on the other problem characteristics, such as the process variables: group discussion and identification of learning goals. Subsequently, both group discussion and the identification of learning goals led to high engagement during self-study. This in turn was relatively strongly related to students’ achievement-related classroom behaviors and academic achievement.

Surprisingly, the second input variable, problem familiarity, which refers to students’ prior knowledge about the problems, had no influence on the process variables group discussion and identification of learning goals, and only a weak effect on self-study. Problem familiarity had relatively stronger direct effects on the output variables achievement-related classroom behaviors and academic achievement.

A key finding of our study is that, in PBL, clearly stated problems seem more important for student learning than familiar problems. As an explanation, we propose that when a problem is clearly stated, students can easily comprehend it and have a clear idea of what it is about. Since every student in the group is then more likely to understand the problem, it is relatively easy for students, even those without any prior knowledge about the problem, to engage in group discussion, which means engaging in theory construction, formulating hypotheses, and eventually identifying learning goals. This, in turn, is likely to drive students to work harder at resolving the given problem. The results of several studies support this explanation. For instance, Sockalingam and Schmidt (2010) showed that problems that were clear to students made it easier for them to identify the appropriate learning goals, which in turn motivated them to work on the problems. Van den Hurk et al. (2001a, b) showed that adding keywords to the problem statement increased its clarity, which provided essential guidelines for students’ individual study. Congruent with this, Van den Hurk et al. (2001a, b) also showed that the quality of the learning issues generated from a problem depended on three problem characteristics: useful keywords, conciseness, and clarity. In short, the findings suggest that when a problem is clear to students, they are better able to specify clear learning issues and demarcate what they need to focus on. This in turn leads to more elaborate self-study and higher academic achievement.

Although vaguely stated problems may also require students to elaborate and work hard, the difference (compared with clearly stated problems) is that students are likely to engage in more unstructured and irrelevant discussions about the problem. This could be worsened if students in a team hold differing opinions and interpretations of the problem. Even though tangential discussions may sometimes be useful, discussions need to be relevant to the problem for learning to be effective; otherwise, there would be no need for a specific problem in the first place. Given a fixed period of study time, students are likely to accomplish less with a vaguely stated problem than with a clearly stated one. When given a clearly stated problem, students are likely to generate learning issues that are congruent with the learning objectives intended by the faculty. When presented with a vaguely stated problem, however, students may discuss the subject broadly and deviate from these intended learning objectives. Similarly, students may also work hard on a vaguely stated problem, but this effort may be fruitless if they focus on irrelevant and unintended learning issues. In sum, compared with a clearly stated problem, a vague problem is likely to lead to ineffective group discussion and self-study, resulting in lower academic achievement.

Problem familiarity seemed to have no impact on group discussion or the identification of learning goals, and only a small effect on self-study. This is in line with the findings of Gijselaers and Schmidt (1990) that the congruence of students’ prior knowledge with the problems did not contribute to group functioning or time spent on self-study, but did result in higher academic achievement. This suggests that students working on a familiar problem may engage more in self-study than in collaborative work, which results in better academic achievement. Students who have more prior knowledge about the problem may also be better able to identify and find suitable learning resources. Being more familiar with the problem context may thus help students find matching information and be more engaged during self-study, which leads to better academic achievement. A related observation was made by De Grave et al. (2001), who showed that when all students had read the same materials from the same book, fewer viewpoints were discussed in the group.

Another key finding from this study is that the self-reported level of engagement during self-study was a relatively good predictor of students’ actual achievement-related behaviors in the classroom, as observed and recorded by the tutors. These observed classroom behaviors were, in turn, a relatively strong predictor of academic achievement; the full model explained about 23% of the variance. We also tested an alternative model in which we omitted the variable achievement-related classroom behaviors. This alternative model showed a significant reduction in the explained variance in academic achievement: without achievement-related classroom behaviors, only 8% of the variance could be explained. This outcome demonstrates that students’ self-reported engagement during self-study is not sufficient on its own to predict academic achievement adequately; it becomes an adequate predictor only when the self-reported engagement is reflected in observable behavior (i.e. the students did indeed work hard on the problem). This outcome is in line with earlier studies conducted in the same educational context (cf. Rotgans et al. 2008; Rotgans and Schmidt 2011), which demonstrated that the predictive power of self-report measures on academic achievement could be significantly improved by including achievement-related classroom behaviors as a mediator variable.

In conclusion, the present study is among the first to shed light on the causal interactions of specific problem characteristics at the micro level. The results revealed that a clearly formulated problem is the most important aspect of high-quality problems in PBL. That is, a clearly formulated problem results in all the learning behaviors expected in PBL: group discussion, identification of learning goals, and eventually a deeper understanding of the topic. The one-day, one-problem approach at the polytechnic does not differ structurally from conventional PBL: students still start their learning process from a problem trigger, engage in collaborative group discussion, formulate hypotheses about the problem, engage in independent self-study, and elaborate on their findings. We therefore expect that the results of the present study can largely be generalized to conventional PBL.

The educational implication of this finding is that problem designers need to ensure that problems are clearly stated. According to Mayer (1999), fruitful techniques for doing so are (1) using unambiguous headings for the problem to provide context, and (2) providing a summary of information or including additional questions and statements, which can help students identify the important learning issues. In addition, Sockalingam and Schmidt (2010) suggested using keywords, analogies, examples, metaphors, stories, and informative pictures in the problem statement to increase the clarity of problems in PBL. Whether these techniques actually improve problem clarity requires further research. Experimental studies in particular seem appropriate for systematically determining which manipulation has the largest effect on problem clarity in PBL.

A shortcoming of our study is that we included only a small number of problem characteristics in the model. It would be more informative to include more input variables in the model to see whether, besides problem clarity and problem familiarity, other input variables influence the process and output variables in PBL. For instance, Sockalingam and Schmidt (2010) proposed a total of 11 problem characteristics that seem to have a direct effect on student learning in PBL. Of these, they identified problem difficulty, problem format, and problem relevance, in addition to problem clarity and problem familiarity, as input variables that determine the effectiveness of problems. It is therefore recommended that future studies include these variables to gain a more detailed picture of what makes a good problem in PBL.

References

Alwis, W. A. M., & O’Grady, G. (2002). One day-one problem at Republic Polytechnic. Paper presented at the 4th Asia-Pacific Conference on PBL, Thailand.

Barrows, H. S., & Tamblyn, R. M. (1980a). Problem-based learning: An approach to medical education. New York: Springer.

Barrows, H. S., & Tamblyn, R. M. (1980b). Problem-based learning. New York, NY: Springer Publishing.

Byrne, B. M. (2001). Structural equation modeling with Amos: Basic concepts, applications and programming. Mahwah, NJ: Lawrence Erlbaum Associates.

Chai, J., & Schmidt, H. G. (2007). Generalizability and unicity of global ratings by teachers. Singapore: International Problem Based Learning Symposium.

Colliver, J. A. (2000). Effectiveness of problem-based learning curricula: Research and theory. Academic Medicine, 75(3), 259–266.

De Grave, W. S., Dolmans, D. H. J. M., & Van der Vleuten, C. P. M. (2001). Student perceptions about the occurrence of critical incidents in the tutorial group. Medical Teacher, 23, 421–427.

Fan, X., Thompson, B., & Wang, L. (1999). Effects of sample size, estimation methods, and model specification on structural equation modeling fit indexes. Structural Equation Modeling, 6(1), 56–83.

Gijselaers, W. H., & Schmidt, H. G. (1990). Development and evaluation of a causal model of problem-based learning. In Z. H. Nooman, H. G. Schmidt, & E. S. Ezzat (Eds.), Innovation in medical education: An evaluation of its present status. New York: Springer Publishing Co.

Hancock, G. R., & Mueller, R. O. (2001). Rethinking construct reliability within latent systems. In R. Cudeck, S. du Toit, & D. Sörbom (Eds.), Structural equation modeling: Present and future. A festschrift in honor of Karl Jöreskog (pp. 195–216). Lincolnwood, IL: Scientific Software International.

Hmelo-Silver, C. E. (2004). Problem-based learning: What and how do students learn? Educational Psychology Review, 16(3), 235–266.

Hu, L., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling, 6(1), 1–55.

Majoor, G. D., Schmidt, H. G., Snellen-Balendong, H. A. M., Moust, J. H. C., & Stalenhoef-Halling, B. (1990). Construction of problems for problem-based learning. In Z. Nooman, H. G. Schmidt, & E. S. Ezzat (Eds.), Innovation in medical education. New York: Springer.

Mayer, R. E. (1999). Designing instruction for constructivist learning. In C. M. Reigeluth (Ed.), Instructional-design theories and models (pp. 141–160). Mahwah, NJ: Lawrence Erlbaum Associates.

Rotgans, J. I., Alwis, W. A. M., & Schmidt, H. G. (2008). Measuring self-regulation, motivation, and academic achievement. Paper presented at the annual meeting of the American Educational Research Association, New York.

Rotgans, J. I., & Schmidt, H. G. (2011). Situational interest and academic achievement in the active-learning classroom. Learning and Instruction, 21(1), 58–67.

Schmidt, H. G. (1983). Problem-based learning: Rationale and description. Medical Education, 17(1), 11–16.

Schmidt, H. G. (1993). Foundations of problem-based learning: Some explanatory notes. Medical Education, 27(5), 422–432.

Schmidt, H. G., & Moust, J. H. C. (2000). Factors affecting small-group tutorial learning: A review of research. In D. H. Evensen & C. E. Hmelo (Eds.), Problem-based learning: A research perspective on learning interactions (pp. 19–52). Mahwah, NJ: Lawrence Erlbaum.

Schmidt, H. G., Van der Arend, A., Moust, J. H. C., Kokx, I., & Boon, L. (1993). Influence of tutors’ subject-matter expertise on student effort and achievement in problem-based learning. Academic Medicine, 68(10), 784–791.

Sockalingam, N., & Schmidt, H. G. (2010). Characteristics of problems in problem-based learning: The students’ perspective. Interdisciplinary Journal of Problem-based Learning. In Press.

Van Berkel, H. J. M., & Schmidt, H. G. (2000). Motivation to commit oneself as a determinant of achievement in problem-based learning. Higher Education, 40, 231–242.

Van Den Hurk, M., Dolmans, D. H. J. M., Wolfhagen, I. H. A. P., & Van Der Vleuten, C. P. M. (2001a). Quality of student-generated learning issues in a problem-based curriculum. Medical Teacher, 23(6), 567–571.

Van Den Hurk, M., Dolmans, D. H. J. M., Wolfhagen, I. H. A. P., & Van Der Vleuten, C. P. M. (2001b). Testing a causal model for learning in a problem-based curriculum. Advances in Health Sciences Education, 6, 141–149.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

About this article

Cite this article

Sockalingam, N., Rotgans, J.I. & Schmidt, H.G. The relationships between problem characteristics, achievement-related behaviors, and academic achievement in problem-based learning. Adv in Health Sci Educ 16, 481–490 (2011). https://doi.org/10.1007/s10459-010-9270-3

DOI: https://doi.org/10.1007/s10459-010-9270-3