Abstract

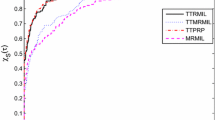

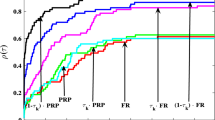

Conjugate gradient methods, which usually generate descent search directions, are useful for large-scale optimization. Narushima et al. (SIAM J. Optim. 21:212–230, 2011) proposed a three-term conjugate gradient method that satisfies a sufficient descent condition. We extend this method to a two-parameter family of three-term conjugate gradient methods, in which the parameters can be used to control the magnitude of the directional derivative. We show that these methods converge globally and work well for suitable choices of the parameters. Numerical results are also presented.
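The sufficient descent property mentioned in the abstract can be illustrated with a generic three-term direction. The sketch below assumes the form d_k = -g_k + beta_k (d_{k-1} - (g_k^T d_{k-1} / g_k^T p_k) p_k) for an auxiliary vector p_k, patterned after three-term methods such as that of Narushima et al. (2011); treat the exact formula as an assumption. It is not the two-parameter family of this paper, whose formulas are not reproduced here. Because the third term cancels the contribution of beta_k d_{k-1} to g_k^T d_k, the identity g_k^T d_k = -||g_k||^2 holds for any beta_k, independently of the line search. The Hestenes-Stiefel choice of beta_k, the choice p_k = y_{k-1} = g_k - g_{k-1}, and the helper names `dot`, `hs_beta`, and `three_term_direction` are hypothetical, chosen for illustration.

```python
import math


def dot(u, v):
    """Euclidean inner product of two equal-length vectors."""
    return sum(a * b for a, b in zip(u, v))


def hs_beta(g, g_prev, d_prev):
    """Hestenes-Stiefel parameter beta = g^T y / d_prev^T y, with y = g - g_prev.

    Returns 0.0 (a steepest-descent restart) if the denominator vanishes.
    """
    y = [a - b for a, b in zip(g, g_prev)]
    denom = dot(d_prev, y)
    return dot(g, y) / denom if denom != 0.0 else 0.0


def three_term_direction(g, d_prev, beta, p):
    """Assumed three-term form: d = -g + beta * (d_prev - (g^T d_prev / g^T p) * p).

    The correction along p removes the beta * d_prev contribution to g^T d,
    so g^T d = -||g||^2 for ANY beta. If g^T p == 0, fall back to d = -g.
    """
    gp = dot(g, p)
    if gp == 0.0:
        return [-gi for gi in g]
    scale = dot(g, d_prev) / gp
    return [-gi + beta * (di - scale * pi) for gi, di, pi in zip(g, d_prev, p)]


if __name__ == "__main__":
    g_prev = [2.0, -1.0, 0.5]     # previous gradient (illustrative values)
    d_prev = [-2.0, 1.0, -0.5]    # previous direction (steepest descent here)
    g = [0.4, -0.8, 1.5]          # current gradient
    y = [a - b for a, b in zip(g, g_prev)]

    beta = hs_beta(g, g_prev, d_prev)
    d = three_term_direction(g, d_prev, beta, p=y)
    print("beta     =", beta)
    print("g^T d    =", dot(g, d))
    print("-||g||^2 =", -dot(g, g))
    # The identity does not depend on beta: try several arbitrary values.
    for b in (-3.0, 0.0, 7.0):
        print(b, math.isclose(dot(g, three_term_direction(g, d_prev, b, y)), -dot(g, g)))
```

Running the script should print g^T d equal to -||g||^2 (here -3.05) up to floating-point rounding. In a complete method this direction would be paired with a line search; the choice of beta_k then affects efficiency, but not the descent guarantee.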

References

Al-Baali, M.: Descent property and global convergence of the Fletcher-Reeves method with inexact line search. IMA J. Numer. Anal. 5, 121–124 (1985)

Barzilai, J., Borwein, J.M.: Two-point stepsize gradient methods. IMA J. Numer. Anal. 8, 141–148 (1988)

Bongartz, I., Conn, A.R., Gould, N.I.M., Toint, P.L.: CUTE: constrained and unconstrained testing environments. ACM Trans. Math. Softw. 21, 123–160 (1995)

Cheng, W.: A two-term PRP-based descent method. Numer. Funct. Anal. Optim. 28, 1217–1230 (2007)

Dai, Y.-H., Kou, C.-X.: A nonlinear conjugate gradient algorithm with an optimal property and an improved Wolfe line search. SIAM J. Optim. 23, 296–320 (2013)

Dai, Y.-H., Liao, L.Z.: New conjugacy conditions and related nonlinear conjugate gradient methods. Appl. Math. Optim. 43, 87–101 (2001)

Dai, Y.-H., Yuan, Y.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10, 177–182 (1999)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Program. 91, 201–213 (2002)

Fletcher, R.: Practical Methods of Optimization, 2nd edn. Wiley, New York (1987)

Fletcher, R.: On the Barzilai-Borwein method. In: Optimization and Control with Applications. Applied Optimization, vol. 96, pp. 235–256. Springer, New York (2005)

Fletcher, R., Reeves, C.M.: Function minimization by conjugate gradients. Comput. J. 7, 149–154 (1964)

Gilbert, J.C., Nocedal, J.: Global convergence properties of conjugate gradient methods for optimization. SIAM J. Optim. 2, 21–42 (1992)

Gould, N.I.M., Orban, D., Toint, P.L.: CUTEr and SifDec: a constrained and unconstrained testing environment, revisited. ACM Trans. Math. Softw. 29, 373–394 (2003)

Hager, W.W.: http://people.clas.ufl.edu/hager/. Accessed 10 Mar 2014

Hager, W.W., Zhang, H.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16, 170–192 (2005)

Hager, W.W., Zhang, H.: A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2, 35–58 (2006)

Hager, W.W., Zhang, H.: CG_DESCENT Version 1.4 User’s Guide. University of Florida (2005). http://people.clas.ufl.edu/hager/. Accessed 10 Mar 2014

Hager, W.W., Zhang, H.: Algorithm 851: CG_DESCENT, a conjugate gradient method with guaranteed descent. ACM Trans. Math. Softw. 32, 113–137 (2006)

Hager, W.W., Zhang, H.: The limited memory conjugate gradient method. SIAM J. Optim. 23, 2150–2168 (2013)

Hestenes, M.R., Stiefel, E.: Methods of conjugate gradients for solving linear systems. J. Res. Natl. Bur. Stand. 49, 409–436 (1952)

Liu, Y., Storey, C.: Efficient generalized conjugate gradient algorithms, Part 1: Theory. J. Optim. Theory Appl. 69, 129–137 (1991)

Narushima, Y., Yabe, H., Ford, J.A.: A three-term conjugate gradient method with sufficient descent property for unconstrained optimization. SIAM J. Optim. 21, 212–230 (2011)

Nocedal, J., Wright, S.J.: Numerical Optimization, 2nd edn. Springer Series in Operations Research. Springer, New York (2006)

Sorenson, H.W.: Comparison of some conjugate direction procedures for function minimization. J. Frankl. Inst. 288, 421–441 (1969)

Sugiki, K., Narushima, Y., Yabe, H.: Globally convergent three-term conjugate gradient methods that use secant conditions and generate descent search directions for unconstrained optimization. J. Optim. Theory Appl. 153, 733–757 (2012)

Yu, G., Guan, L., Li, G.: Global convergence of modified Polak-Ribière-Polyak conjugate gradient methods with sufficient descent property. J. Ind. Manag. Optim. 4, 565–579 (2008)

Zhang, L.: A new Liu-Storey type nonlinear conjugate gradient method for unconstrained optimization problems. J. Comput. Appl. Math. 225, 146–157 (2009)

Zhang, L., Zhou, W., Li, D.H.: Global convergence of a modified Fletcher-Reeves conjugate gradient method with Armijo-type line search. Numer. Math. 104, 561–572 (2006)

Zhang, L., Zhou, W., Li, D.H.: A descent modified Polak-Ribière-Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 26, 629–640 (2006)

Zhang, L., Zhou, W., Li, D.H.: Some descent three-term conjugate gradient methods and their global convergence. Optim. Methods Softw. 22, 697–711 (2007)

Zoutendijk, G.: Nonlinear programming, computational methods. In: Abadie, J. (ed.) Integer and Nonlinear Programming, pp. 37–86. North-Holland, Amsterdam (1970)

Acknowledgments

The authors would like to thank Prof. William W. Hager, the Editor-in-Chief of the journal, and the anonymous reviewers for valuable comments on a draft of this paper. We would also like to thank Prof. Yu-Hong Dai for providing his program code of conjugate gradient methods. The second and third authors are supported in part by the Grant-in-Aid for Scientific Research (C) 25330030 of the Japan Society for the Promotion of Science.

About this article

Cite this article

Al-Baali, M., Narushima, Y. & Yabe, H. A family of three-term conjugate gradient methods with sufficient descent property for unconstrained optimization. Comput Optim Appl 60, 89–110 (2015). https://doi.org/10.1007/s10589-014-9662-z

DOI: https://doi.org/10.1007/s10589-014-9662-z