Abstract

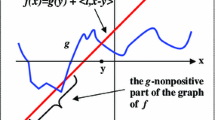

We investigate projected scaled gradient (PSG) methods for convex minimization problems. These methods perform a descent step along a diagonally scaled gradient direction, followed by a feasibility-regaining step via orthogonal projection onto the constraint set. This generalized algorithmic structure encompasses, as special cases, the gradient projection method, the projected Newton method, projected Landweber-type methods and generalized expectation-maximization (EM)-type methods. We prove the convergence of PSG methods in the presence of bounded perturbations. This resilience to bounded perturbations matters because it allows the recently developed superiorization methodology to be applied to PSG methods, in particular to the EM algorithm.
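The PSG iteration described in the abstract can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the authors' implementation. The names `psg`, `project_nonneg`, `grad_quad`, `fixed_scale`, `bumps` and `grad_kl` are hypothetical. The constraint set is assumed to be the nonnegative orthant, whose orthogonal projection is componentwise `max(x, 0)`. Bounded perturbations are assumed to enter in the superiorization style, by replacing x^k with y^k = x^k + β_k v^k, where the β_k are summable and the v^k are bounded. The last part checks the EM special case: with f(x) = KL(b, Ax), D(x) = x / (A^T 1) and unit step size, one PSG step reproduces the classical multiplicative ML-EM update.

```python
"""Projected scaled gradient (PSG) sketch with optional bounded perturbations.

All function names are hypothetical; plain lists keep it dependency-free.
"""
from typing import Callable, List, Optional, Tuple

Vector = List[float]
Perturb = Callable[[int, Vector], Tuple[float, Vector]]


def psg(grad: Callable[[Vector], Vector],
        project: Callable[[Vector], Vector],
        scale: Callable[[Vector], Vector],
        x0: Vector, step: float, n_iter: int,
        perturb: Optional[Perturb] = None) -> Vector:
    """Iterate x <- P(y - step * D(y) grad f(y)) with y = x + beta_k * v_k.

    `scale(y)` returns the diagonal of D(y); `perturb(k, x)` returns
    (beta_k, v_k). With perturb=None, y = x (the unperturbed PSG method).
    """
    x = list(x0)
    for k in range(n_iter):
        if perturb is None:
            y = x
        else:
            beta, v = perturb(k, x)
            y = [xi + beta * vi for xi, vi in zip(x, v)]
        g, d = grad(y), scale(y)
        # Scaled descent step, then projection back onto the constraint set.
        x = project([yi - step * di * gi for yi, di, gi in zip(y, d, g)])
    return x


def project_nonneg(x: Vector) -> Vector:
    """Orthogonal projection onto the nonnegative orthant."""
    return [max(xi, 0.0) for xi in x]


# Toy problem: minimize 0.5 * ||x - c||^2 subject to x >= 0 (solution max(c, 0)).
c = [1.0, -2.0, 3.0]


def grad_quad(x: Vector) -> Vector:
    return [xi - ci for xi, ci in zip(x, c)]


def fixed_scale(x: Vector) -> Vector:
    return [1.0, 0.5, 1.5]  # constant positive diagonal scaling


def bumps(k: int, x: Vector) -> Tuple[float, Vector]:
    return 0.5 ** k, [1.0, 1.0, -1.0]  # summable beta_k, bounded v_k


plain = psg(grad_quad, project_nonneg, fixed_scale, [0.0] * 3, 0.5, 200)
perturbed = psg(grad_quad, project_nonneg, fixed_scale, [0.0] * 3, 0.5, 200, bumps)
print([round(v, 6) for v in plain])      # → [1.0, 0.0, 3.0]
print([round(v, 6) for v in perturbed])  # → [1.0, 0.0, 3.0]

# ML-EM as one PSG step: f(x) = KL(b, Ax), D(x) = x / (A^T 1), step 1.
A = [[1.0, 2.0], [3.0, 1.0], [1.0, 1.0]]
b = [4.0, 5.0, 2.0]


def matvec(x: Vector) -> Vector:
    return [sum(a * xj for a, xj in zip(row, x)) for row in A]


def rmatvec(y: Vector) -> Vector:
    return [sum(A[i][j] * y[i] for i in range(len(A))) for j in range(len(A[0]))]


col_sums = rmatvec([1.0] * len(A))  # A^T 1


def grad_kl(x: Vector) -> Vector:
    # Gradient of sum_i [(Ax)_i - b_i log (Ax)_i]: A^T 1 - A^T (b / Ax).
    back = rmatvec([bi / ai for bi, ai in zip(b, matvec(x))])
    return [s - t for s, t in zip(col_sums, back)]


def em_scale(x: Vector) -> Vector:
    return [xj / sj for xj, sj in zip(x, col_sums)]


x_start = [1.0, 1.0]
psg_em = psg(grad_kl, project_nonneg, em_scale, x_start, 1.0, 1)
back = rmatvec([bi / ai for bi, ai in zip(b, matvec(x_start))])
em_direct = [xj * tj / sj for xj, tj, sj in zip(x_start, back, col_sums)]
print(all(abs(p - q) < 1e-12 for p, q in zip(psg_em, em_direct)))  # → True
```

On the toy problem, both the plain and the perturbed runs reach max(c, 0) = [1, 0, 3]. Replacing the constant diagonal with a state-dependent one such as `em_scale` is what turns the same loop into an EM-type method.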

Notes

We use the term “algorithm” for the iterative processes discussed here, even for those that do not include any termination criterion. This does not create any ambiguity because whether we consider an infinite iterative process or an algorithm with a termination rule is always clear from the context.

Acknowledgments

We greatly appreciate the constructive comments of two anonymous reviewers and the Coordinating Editor, which helped us improve the paper. This work was supported in part by the National Basic Research Program of China (973 Program) (2011CB809105), the National Science Foundation of China (61421062) and the United States-Israel Binational Science Foundation (BSF) Grant No. 2013003.

About this article

Cite this article

Jin, W., Censor, Y. & Jiang, M. Bounded perturbation resilience of projected scaled gradient methods. Comput Optim Appl 63, 365–392 (2016). https://doi.org/10.1007/s10589-015-9777-x
