Abstract

In this paper we address game theory problems arising in the context of network security. In traditional game theory problems, given a defender and an attacker, one searches for mixed strategies which minimize a linear payoff functional. In the problems addressed in this paper an additional quadratic term is added to the minimization problem. Such term represents switching costs, i.e., the costs for the defender of switching from a given strategy to another one at successive rounds of a Nash game. The resulting problems are nonconvex QP ones with linear constraints and turn out to be very challenging. We will show that the most recent approaches for the minimization of nonconvex QP functions over polytopes, including commercial solvers such as CPLEX and GUROBI, are unable to solve to optimality even test instances with \(n=50\) variables. For this reason, we propose to extend with them the current benchmark set of test instances for QP problems. We also present a spatial branch-and-bound approach for the solution of these problems, where a predominant role is played by an optimality-based domain reduction, with multiple solutions of LP problems at each node of the branch-and-bound tree. Of course, domain reductions are standard tools in spatial branch-and-bound approaches. However, our contribution lies in the observation that, from the computational point of view, a rather aggressive application of these tools appears to be the best way to tackle the proposed instances. Indeed, according to our experiments, while they make the computational cost per node high, this is largely compensated by the rather slow growth of the number of nodes in the branch-and-bound tree, so that the proposed approach strongly outperforms the existing solvers for QP problems.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

Consider a finite two-person zero-sum game \(\varGamma\), composed from a player set \(N=\{1,2\}\), each member thereof having a finite strategy space \(S_1,S_2\) associated with it, and a utility function \(u_i:S_1\times S_2\rightarrow {\mathbb {R}}\) for all \(i\in N\). We assume a zero-sum Nash game, making \(u_2:=-u_1\) hereafter, and letting the players choose their actions simultaneously and stochastically independent of one another (contrary to a Stackelberg game, where one player would follow the other, which we do not consider here). The game is then the triple \(\varGamma =(N,\mathcal S=\{S_1,S_2\},H=\{u_1,-u_1\})\), and is most compactly represented by giving only the payoff function \(u_1\) in matrix form (since the strategy spaces are finite) as

An equilibrium in \(\varGamma\) is a simultaneous optimum for both players w.r.t. \(u_1\). Assuming a maximizing first player, an equilibrium is a pair \((x^*,z^*)\) satisfying the saddle-point condition

It is well known that many practical games do not have such an equilibrium point; as one of the simplest instances, consider the classical rock-scissors-paper game, represented by the payoff matrix

This game has no equilibria in pure strategies: any fixed choice of rock, scissors or paper would imply a constant loss for the first player (and likewise for the second player). This means that player 1 is forced to randomize its actions in every round of the game, and this concept leads to the idea of mixed extensions of a game, which basically changes the above optimization problem into one over the convex hulls \(\varDelta (S_1), \varDelta (S_2)\) of the action spaces, rather than the finite sets \(S_1,S_2\). An element of \(\varDelta (S_i)\) is then a probability distribution over the elements of the support \(S_i\), and prescribes to pick a move at random whenever the game is played.

The game rewards its players after each round, and upon every new round, both players are free to choose another element from their action space at random. Implicitly, this choice is without costs, but what if not? Many real life instances of games do incur a cost for changing one’s action from \(a_1\in S_1\) in the first to some distinct \(a_2\in S_1\) in the next round. Matrix games cannot express such costs in their payoff functions, and more complex game models such as sequential or stochastic games come with much more complicated models and equilibrium concepts. The goal of this work is to retain the simplicity of matrix games but endow them with the ability to include switching costs with the minimal natural (modeling) effort.

The area of system security [2, 30] offers rich examples of such instances, such as (among many):

-

Changing passwords [25]: if the currently chosen password is \(p_1\) and we are obliged to pick a fresh password (say, different from the last couple of passwords that we had in the past), the use of the new password \(p_2\ne p_1\) induces quite some efforts, as we have to memorize the password, while choosing it as hard as possible to guess. The “cost” tied to the change is thus not monetary, but the cognitive efforts to create and memorize a new password. This effort can make people reluctant to change their passwords (or write them down, or use a very similar password for the new one).

-

Changing computer/server configurations: this usually means taking a computer (e.g., a server) offline for a limited time, thus cutting down productivity perhaps, and hence causing costs. If security is drawn from randomly changing configurations (and passwords, resp. password changing rules are only one special case here), then this change incurs costs by temporal outages of IT infrastructure for the duration of the configuration change, and the efforts (person-hours) spent on applying this change. This is why server updates or patches are usually done over nights or weekends, when the loads are naturally low. If the optimization would, however, prescribe a rather frequent change of configurations at random intervals, this can quickly become a practical inhibitor, unless the switching costs are accounted for by optimization.

-

Patrolling and surveillance [4, 24]: consider a security guard on duty to repeatedly check a few locations, say A, B, ..., E, which are connected at distances as depicted in Fig. 1. This is a chasing-evading game with the guard acting as player 1 against an intruder being player 2, and with the payoff function u being an indicator of whether the guard caught the intruder at location \(i\in \{\)A,...,E\(\}\), or whether the two missed each other. This is yet another instance of a game with all equilibria in mixed strategies, but with the unpleasant side-effect for the guard that gets the prescription to randomly spot check distant locations to “play” the equilibrium \(\mathbf{x^*}\), the guard would have to move perhaps long distances between the locations. For example, if it is at A in round 1 and the next sample from the random distribution \(\mathbf{x^*}\in \varDelta (\{\)A,...,E\(\})\) tells to check point E next, the shortest path would be of length \(1+3+2=6\) over C. Starting from A, however, it would be shorter and hence more convenient for the guard to check location B first along the way, but this would mean deviating from the equilibrium! A normal game theoretic equilibrium calculation does not consider this kind of investment to change the current strategy. This may not even count as bounded rationality, but simply as acting “economic” from the guard’s perspective. But acting economically here is then not governed by a utility maximizing principle, but rather by a cost minimization effort.

Generalizing the patrolling game example, the issue applies to all sorts of moving target defense: for example, changing the configuration of a computer system so as to make it difficult for an attacker to break in, often comes with high efforts and even risks for the defending player 1 (the system administrator), since it typically means taking off machines from the network, reconfiguring them to close certain vulnerabilities, and then putting them back to work hoping that everything restarts and runs smoothly again. A normal game theoretic model accounts only for the benefits of that action, but not for the cost of taking the action.

Including the cost to switch from one action to the next is more complicated than just assigning a cost function \(c:S_1\rightarrow {\mathbb {R}}\) and subtracting this from the utilities to redefine them as \(u_1'(i,j)=u_1(i,j)-c(i)\), since the cost to play \(a_i\) will generally depend on the previous action \(a_j\) played in the previous round.

We can model this sort of payoff by another function \(s:S_1\times S_1\rightarrow {\mathbb {R}}\) that we call the switching cost. The value of s(i, j) is then precisely the cost incurred to change the current action \(i\in S_1\) into the action \(j\in S_1\) in the next round of the game. Intuitively, this adds another payoff dimension to the game, where a player, w.l.o.g. being player 1 in the following, plays “against itself”, since the losses are implied by its own behavior. While the expected payoffs in a matrix game \(\mathbf{A}\) under mixed strategies \(\mathbf{x}\in \varDelta (S_1),\mathbf{z}\in \varDelta (S_2)\) are expressible by the bilinear functional \(\mathbf{x}^T\mathbf{A}{} \mathbf{z}\), the same logic leads to the hypothesis that the switching cost should on average be given by the quadratic functional \(\mathbf{x}^T\mathbf{S}\mathbf{x}\), where the switching cost matrix is given, like the payoff matrix above, as

This intuition is indeed right [26], but for a rigorous problem statement, we will briefly recap the derivation given independently later by [32] to formally state the problem.

1.1 Paper Outline

The paper is structured as follows. In Sect. 2 we give a formal description of the problem as a nonconvex QP one with linear constraints, and we report a complexity result, proved in Appendix 1 In Sect. 3 we present a (spatial) branch-and-bound approach for the problem, putting a particular emphasis on the bound-tightening procedure, which turns out to be the most effective tool to attack it. In Sect. 4 we present a real-life instance. In Sect. 5 we present some computational experiments. We first describe the set of test instances. Next, we discuss the performance of existing solvers over these instances. Finally, we present and comment the computational results attained by the proposed approach. In Sect. 6 we draw some conclusions and discuss possible future developments.

1.2 Statement of contribution

The main contributions of this work are:

-

addressing an application of game theory arising in the context of network security, where switching costs come into play, and showing that the resulting problem can be reformulated as a challenging nonconvex QP problem with linear constraints;

-

introducing a large set of test instances, which turn out to be very challenging for existing QP solvers and, for this reason, could be employed to extend the current benchmark set of QP problems (see [11]);

-

proposing a branch-and-bound approach for the solution of the addressed QP problems, based on standard tools, but with the empirical observation that a very aggressive use of bound-tightening techniques, with a high computational cost per node of the branch-and-bound tree, is the key for an efficient solution of these problems.

2 Formal description of the problem

Let the game come as a matrix \(\mathbf{A}\in {\mathbb {R}}^{n\times m}\), where n and m are the number of strategies for player 1 and 2, respectively, with equilibrium \((\mathbf{x}^*,\mathbf{z}^*)\), and let it be repeated over the time \(t\in {\mathbb {N}}\). At each time t, let \(X_t\sim \mathbf{x}^*\) be the random action sampled from the equilibrium distribution over the action space (with \(\mathbf{x}^*\) being the optimal distribution). In a security setting and zero-sum game, neither player has an interest of being predictable by its opponent, so we assume stochastic independence of the action choices by both players between any two repetitions of the game. Then, we have \(\Pr (X_{t-1}=i,X_t=j)=\Pr (X_{t-1}=i)\cdot \Pr (X_{t}=j)\), so that any future system state remains equally predictable whether or not the current state of the system is known. Hence, the switching cost can be written as

With this, player 1’s payoff functional becomes vector-valued now as

and the game is multi-objective for the first player. As we are interested mostly in the best behavior for player 1 and the analysis would be symmetric from player 2’s perspective, we shall not explore the view of the second player hereafter.

Remark 1

The game could be equally well multi-objective for the second player too, and in fact a practical instance of such a situation may also come from security: it could be in an adversary’s interest to “keep the defender busy”, thus causing much friction by making the defender move fast from one place to the other. This is yet just another instance of a denial-of-service attack, to which such a game model would apply.

For the sake of computing a multi-objective equilibrium, more precisely a Pareto-Nash equilibrium, the algorithm in [27] based on the method laid out in [16] proceeds by scalarizing (1) by choice of some \(\alpha \in (0,1)\), to arrive at the real-valued goal function

for the first player to optimize. Now, the usual way from here to an optimization problem for player 1 involving a rational opponent applies as for standard matrix games [26]: let \(\mathbf{e}_i\in {\mathbb {R}}^m\) be i-th unit vector, then \(\arg \max _{\mathbf{z}\in \varDelta (S_2)}(\mathbf{x}^T\mathbf{A}{} \mathbf{z})=\arg \max _{i}(\mathbf{x}^T\mathbf{A}{} \mathbf{e}_i)\). After introducing the additional variable v, the resulting problem becomes

which is almost the familiar optimization problem to be solved for a Nash equilibrium in a finite matrix game. It differs from the well known linear program only in the quadratic term, and, in fact, the equilibrium problem for matrix games is recovered by substituting \(\alpha =1\) in (2). Note that the matrix \(\mathbf{S}\) in the quadratic term will (in most cases) have a zero diagonal, nonnegative off-diagonal entries, be indefinite and not symmetric in general (patrolling game example given above already exhibits a variety of counterexamples leading to nonsymmetric distance matrices \(\mathbf{S}\) if the graph is directed). Of course, symmetry of \(\mathbf{S}\) can be easily recovered, so in what follows we will assume that \(\mathbf{S}\) is symmetric. The two extreme values \(\alpha =0\) and \(\alpha =1\) give rise to simple problems. Indeed, as already commented, for \(\alpha =1\) the problem is an LP one, while for \(\alpha =0\) is a Standard QP (StQP) problem, which is in general NP-hard (e.g., in view of the reformulation of the max clique problem as an StQP problem, see [18]), but is trivial in the case of zero diagonal and nonnegative off-diagonal entries (each vertex of the unit simplex is a globally optimal solution). For what concerns the intermediate values \(\alpha \in (0,1)\) we can prove the following result, stating the complexity of problem (2) .

Theorem 1

Problem (2)is NP-hard.

Proof

See Appendix 1. \(\square\)

Remark 2

The dependence of next actions on past ones extends to other scenarios too: for example, if the game is about coordination in wireless settings (e.g., collaborative drones), the players, e.g., drones, share a common communication channel. Every exchange of information occupies that channel for a limited period of time, thus constraining what the other players can do at the moment. Such effects can be described by stochastic games, but depending on how far the effect reaches in the future, backward inductive solution methods may become computationally infeasible [14]; likewise, extending the strategy space to plan ahead a fixed number of k steps (to account for one strategy determining the next k repetitions of the game) may exponentially enlarge the strategy space (by a factor of \(2^{O(k)}\), making the game infeasible to analyze if k is large). Games with switching cost offer a neat bypass to that trouble: if an action is such that it occupies lots of resources for a player, thus preventing it from taking further moves in the next round of the game, we can express this as a switching cost. Assume, for instance, that an action in a game \(\varGamma\) is such that the player is blocked for the next k rounds, then the switching cost is k-times the expected utility \({\overline{u}}\) (with the expectation taken over the equilibrium distribution played by the participants) that these next k rounds would give. Virtually, the situation is thus like if the player would have paid the total average gain over the next rounds where it is forced to remain idle (thus gaining nothing):

Expression (3) will in practice be only an approximate identity, since we assumed that the game, viewed as a stochastic process, has already converged to stationarity (so that the equilibrium outcome \({\overline{u}}\) is actually rewarded). The speed of convergence, indeed, can itself be of interest to be controlled in security applications using moving target defenses [32]. The crucial point of modeling a longer lasting effect of the current action like described above, however, lies in the avoidance of complexity: expression (3) has no issues with large k, while more direct methods of modeling a game over k rounds, or including a dependency on the last k moves, is relatively more involved (indeed, normal stochastic games consider a first-order Markov chain, where the next state of the game depends on the last state; the setting just described would correspond to an order k chain, whose conversion into a first order chain is also possible, but complicates matters significantly).

3 A branch and bound approach

After incorporating parameter \(\alpha\) into the definitions of matrix \(\mathbf{S}\) and vectors \(\mathbf{A}_j\), \(j=1,\ldots ,m\), and after introducing the vector of variables \(\mathbf{y}\), problem (2) can be rewritten as the following problem with bilinear objective function and linear constraints:

where \(\mathbf{S}_i\) denotes the i-th row of matrix \(\mathbf{S}\) and \(\varDelta _n\) denotes the n-dimensional unit simplex. In what follows we will denote by P the feasible region of this problem, and by \(P_{\mathbf{x},\mathbf{y}}\) its projection over the space of \(\mathbf{x}\) and \(\mathbf{y}\) variables.

Each node of the branch-and-bound tree is associated to a box \(B=[{\varvec{\ell }}_{\mathbf{x}}, \mathbf{u}_{\mathbf{x}}]\times [{\varvec{\ell }}_{\mathbf{y}},\mathbf{u}_{\mathbf{y}}]\), where \({\varvec{\ell }}_{\mathbf{x}}, \mathbf{u}_{\mathbf{x}}\) and \({\varvec{\ell }}_{\mathbf{y}}, \mathbf{u}_{\mathbf{y}}\) denote lower and upper bound vectors for variables \(\mathbf{x}\) and \(\mathbf{y}\), respectively. An initial box \(B_0\), containing \(P_{\mathbf{x},\mathbf{y}}\) is easily computed. It is enough to set \({\varvec{\ell }}_{\mathbf{x}}=\mathbf{0}\), \(\mathbf{u}_{\mathbf{x}}=\mathbf{e}\) (the vector whose entries are all equal to one), and

Note that, although not strictly necessary, we can also bound variable v to belong to an interval. Indeed, we can impose \(v\ge 0\) (due to nonnegativity of the entries of vectors \(\mathbf{A}_j\), \(j=1,\ldots ,m\)), and

which certainly holds at optimal solutions of problem (4). In what follows we describe in detail each component of the branch-and-bound approach, whose pseudo-code is then sketched in Algorithm 1.

3.1 Lower bounds

Given box \(B=[{\varvec{\ell }}_{\mathbf{x}}, \mathbf{u}_{\mathbf{x}}]\times [{\varvec{\ell }}_{\mathbf{y}},\mathbf{u}_{\mathbf{y}}]\), then the well known McCormick underestimating function (see [17])

can be employed to limit from below the bilinear term \(x_i y_i\) over the rectangle \([\ell _{x_i}, u_{x_i}]\times [\ell _{y_i}, u_{y_i}]\). In fact, it turns out that the McCormick underestimating function is the convex envelope of the bilinear term over the given rectangle. Then, after introducing the additional variables \(f_i\), we have that the optimal value of the following LP problem is a lower bound for problem (4) over the box B:

The optimal solution of the LP problem will be denoted by \((\mathbf{x}^\star (B), \mathbf{y}^\star (B),\mathbf{f}^\star (B), v^\star (B))\).

3.2 Upper bound

The global upper bound (GUB in what follows) can be initialized with \(+\infty\) or, alternatively, if a local search procedure is available, one may run a few local searches from randomly generated starting points, and take the lowest local minimum value as initial GUB value, although, according to our experiments, there is not a significant variation in the computing times if such local searches are performed. During the execution of the branch-and-bound algorithm, each time we compute the lower bound (5) over a box B, its optimal solution is a feasible solution for problem (4) and, thus, we might update the upper bound as follows:

3.3 Branching

The branching strategy we employed is a rather standard one. Given node B, we first compute the quantities:

measuring the error of McCormick underestimator for each bilinear term \(x_i y_i\) at the optimal solution of the relaxed problem (5). Then, we select \(r\in \arg \max _{i=1,\ldots ,n} g_i\), i.e., the index corresponding to the bilinear term where we have the largest error at the optimal solution of the relaxation. Next, we might define the following branching operations for box B:

- Branching on x and y::

-

Define four children nodes by adding constraints \(\{x_r\le x_r^\star (B),\ y_r\le y_r^\star (B)\}\), \(\{x_r\le x_r^\star (B),\ y_r\ge y_r^\star (B)\}\), \(\{x_r\ge x_r^\star (B),\ y_r\le y_r^\star (B)\}\), \(\{x_r\ge x_r^\star (B),\ y_r\ge y_r^\star (B)\}\), respectively (quaternary branching);

- Branching on x::

-

Define two children nodes by adding constraints \(x_r\le x_r^\star (B)\) and \(x_r\ge x_r^\star (B)\), respectively (binary branching);

- Branching on y::

-

Define two children nodes by adding constraints \(y_r\le y_r^\star (B)\) and \(y_r\ge y_r^\star (B)\), respectively (binary branching).

Note that all choices above, with the new McCormick relaxation given by the new limits on the variables, reduce to zero the error for bilinear term \(x_r y_r\) at the optimal solution of problem (5). It is worthwhile to remark that the computed lower bound tends to become exact even when branching is always performed with respect to variables of the same type (say, always variables \(x_i\), \(i=1,\ldots ,n\)). Indeed, it is enough to have that \(\Vert \mathbf{u}_{\mathbf{x}}-{\varvec{\ell }}_{\mathbf{x}}\Vert \rightarrow 0\) or, alternatively, that \(\Vert \mathbf{u}_{\mathbf{y}}-{\varvec{\ell }}_{\mathbf{y}}\Vert \rightarrow 0\) in order to let the underestimating function values converge to the original objective function values. This is a consequence of the fact that the McCormick underestimation function tends to the value of the corresponding bilinear term even when only one of the two intervals on which it is defined shrinks to a single point. In the computational experiments we tried all three possibilities discussed above and it turns out that the best choice is the binary branching obtained by always branching on y variables.

3.4 Bound-tightening technique

A reduction of the boxes merely based on the above branching strategy would lead to a quite inefficient algorithm. It turns out that performance can be strongly enhanced by an Optimality-Based Bound-Tightening (OBBT in what follows) procedure (see, e.g., [8, 31]). An OBBT procedure receives in input a box B and returns a tightened box in output, removing feasible points which do not allow to improve the current best feasible solution. More formally, let \({{\mathcal {B}}}\) be the set of n-dimensional boxes. Then:

In our approach, we propose to employ an OBBT procedure, which is expensive but, as we will see, also able to considerably reduce the number of branch-and-bound nodes. The lower and upper limits \(\ell _{x_i}, \ell _{y_i}, u_{y_i}, u_{x_i}\), \(i=1,\ldots ,n\) are refined through the solution of LP problems having the feasible set defined by constraints (5b)-(5g) and the additional constraint

stating that we are only interested at feasible solutions where the underestimating function, i.e., the left-hand side of the constraint, corresponding to the objective function (5a), is not larger than the current upper bound GUB. Thus, each call of this OBBT procedure requires the solution of 4n LP problems with the following objective functions:

Note that all these problems are bounded in view of the fact that \(\mathbf{x}\) is constrained to belong to the unit simplex. In fact, what we observed through our computational experiments is that it is not necessary to solve all 4n LPs but it is enough to concentrate the effort on the most ’critical’ variables. More precisely, in order to reduce the number of LPs without compromising the performance, we employed the following strategies (see also [12] for strategies to reduce the effort). Taking into account the quantities \(g_i\) computed in (6), we notice that the larger the \(g_i\) value, the higher is the need for a more accurate underestimation of the corresponding bilinear term. Then, we solved the following LP problems.

-

\(\lceil 0.2 n \rceil\) LP problems with objective function \(\min \ y_i\), for all i corresponding to the \(\lceil 0.2 n \rceil\) largest \(g_i\) values;

-

a fixed number \(\lceil 0.1 n \rceil\) of LP problems with objective function \(\max \ y_i\), for all i corresponding to the \(\lceil 0.1 n \rceil\) largest \(g_i\) values;

-

again \(\lceil 0.1 n \rceil\) LP problems with objective function \(\max \ x_i\), for all i corresponding to the \(\lceil 0.1 n \rceil\) largest \(g_i\) values;

-

no LP problem with objective function \(\min \ x_i\).

These choices have been driven by some experimental observations. In particular, we noticed that the lower limit for \(y_i\) is the most critical for the bound computation or, stated in another way, constraint

is often the active one. For this reason a larger budget of LP problems is allowed to improve this lower limit with respect to the upper limits. Instead, we never try to improve the lower limit \(\ell _{x_i}\) because it is experimentally observed that this limit can seldom be improved.

This way, the overall number of LPs to be solved at each call of the OBBT procedure is reduced to approximately 0.4n. Note that rather than solving all LP problems with the same feasible set, we could solve each of them with a different feasible region by incorporating all previously computed new limits in the definition of the feasible region for the next limit to be computed. That leads to sharper bounds, however we excluded this opportunity since we observed that LP solvers strongly benefit from the opportunity of solving problems over the same feasible region.

The underestimating function depends on the lower and upper limits \(\ell _{x_i}, \ell _{y_i}, u_{y_i}, u_{x_i}\). Thus, once we have updated all such limits, we can call procedure OBBT again in order to further reduce the limits. These can be iteratively reduced until some stopping condition is fulfilled. Such iterative procedure has been proposed and theoretically investigated, e.g., in [8]. That obviously increases the computational cost per node, since the overall number of LPs to be solved at each node is now approximately 0.4n times the number of calls to the procedure OBBT, which depends on the stopping condition. But, again, we observed that the additional computational cost is compensated by a further reduction of the overall number of nodes in the branch-and-bound tree.

It is important to stress at this point that OBBT procedures in general and the one proposed here in particular, are not new in the literature. The main contribution of this work lies in the observation that a very aggressive application of the proposed OBBT, while increasing considerably the computational cost per node, is the real key for an efficient solution of the addressed problem. Indeed, we will see through the experiments, that our approach is able to significantly outperform commercial QP solvers like CPLEX and GUROBI, and a solver like BARON, which is strongly based on tightening techniques.

3.5 Pseudo-code of the branch-and-bound approach

In this section we collect all the previously described tools and present the pseudo-code of the proposed branch-and-bound approach. In Line 1 an initial box \(B_0\) is introduced and the collection of branch-and-bound nodes \({{\mathcal {C}}}\) still to be explored is initialized with it. In Line 2 a lower bound over \(B_0\) is computed, while in Line 3 the current best observed feasible point \(\mathbf{z}^\star\) and the current GUB value are initialized. Take into account that such values can also be initialized after running a few local searches from randomly generated starting points. Lines 4–21 contain the main loop of the algorithm. Until the set of nodes still to be explored is not empty, the following operations are performed. In Line 5 one node in \({{\mathcal {C}}}\) with the lowest lower bound is selected. In Line 6 the index k of the branching variable is selected as the one with the largest gap \(g_i\) as defined in (6). In Line 7 the branching operation is performed. In Line 8 the selected node \({\bar{B}}\) is removed from \({{\mathcal {C}}}\), while in Lines 9–19 the following operations are performed for each of its child nodes. In the loop at Lines 10–17, first procedure OBBT is applied and then the lower bound over the tightened region is computed, until a stopping condition is satisfied. In particular, in our experiments we iterate until the difference between the (non-decreasing) lower bounds at two consecutive iterations fall below a given threshold \(\epsilon\) (\(\epsilon =10^{-3}\) in our experiments). In Lines 13–16 both \(\mathbf{z}^\star\) and GUB are possibly updated through the optimal solution of the relaxed problem. In Line 18 we add the child node to \({{\mathcal {C}}}\). Finally, in Line 20 we remove from \({{\mathcal {C}}}\) all nodes with a lower bound not lower than \((1-\varepsilon )GUB\), where \(\varepsilon\) is a given tolerance value. In all the experiments, we fixed a relative tolerance \(\varepsilon =10^{-3}\), which is considered adequate for practical applications.

Note that we do not discuss convergence of the proposed branch-and-bound approach, since it easily follows by rather standard and general arguments which can be found in [15].

In Algorithm 1 we highlighted with a frame box, both the stopping condition in Line 10 and the call to the OBBT procedure at Line 11, since the performance of the proposed algorithm mainly depends on how these two lines are implemented.

In what follows, in order to stress the importance of bound-tightening, we will refer to the proposed approach as Branch-and-Tight (B&T), which belongs to the class of Branch-and-Cut approaches, since tightening the bound of a variable is a special case of cutting plane.

4 Example: Crime prevention by patrolling (of the Police)

In this section we present an example of real-life instance where player 1 is the police, patrolling around in a certain geographic area. In this area, we consider the roads as defining a directed graph that the police traverses seeking to prevent crimes. They do so by randomly checking locations in the area, reachable over the roads, and traveling from one location to the other induces a certain travel time. For the crime distribution, we assume that “more public space means more witnesses, so less likelihood of a crime committed”. Applying this intuition to a roadmap, we take the probability of crimes to occur at a location as inverse proportional to the number of ways leading to this point. That is, the “more remote” a place is, the more likely is a crime to happen there, and places that are reachable over many ways are more likely to be crowded, so crimes are less likely there (of course, not all sorts of crimes, such as pickpockets would certainly prefer crowded places, but mugging is preferably done where a victim cannot easily escape). Note, however, the probability can also be defined in different ways, e.g., by taking into account police records. Our showcase region is the Hampi village in India, shown as a screenshot map in Figure ??. The respective geographic information ships with the package dodgr (distances on directed graphs) [19] for the R environment, so that we can conveniently use geographic information and digitalized roadmaps to define the playground for the game model (see [20] for how this is done step-by-step). In our case, the dodgr package directly lets us compute travel times or distances, representing the costs incurred to realize a random spot checking, which is precisely the matrix \(\mathbf{S}\) above. The actual payoff structure in the game depends on the locations and their likelihoods to become crime scenes. The road network for the Hampi village, has 1901 vertices and 3891 edges. At this point a subset of vertices of the road network is selected. The resulting vertices in the graph are then defined as pure strategies in the game, i.e., places for the police to be checked, with inter-vertex travel times taken as the switching cost in the matrix \(\mathbf{S}\). For practical purposes, it is reasonable to take a subset with cardinality between 50 and 100. Indeed, this size also appears reasonable in light of the fact that police patrols can certainly not check arbitrarily many places in reasonable time and efficiency, thus problem instances between 50 and 100 places appear as the most that is physically feasible.

Note that games of a shape like that of our police patrolling example exist in manifold versions in the literature, such as regarding the patrolling of coast guards [10], border patrol [21], pipeline protection [3], airport surveillance [22], and game theoretic models against environmental crime (so-called green security games) [5]; see [28] for a more extensive overview of related game models. Common to all these is their goal of optimizing patrolling (and to an extent also surveillance), but none of this past work accounts for the efforts of realizing the patrolling in practice, in light of the efforts practically invested (for the moving), but not theoretically counted in the optimization model.

5 Numerical results

In this section we will first describe the test instance generator and, then, we will present and discuss the results of extensive computational experiments with such instances.

5.1 Test instances description

The game is about spot checking a set of n places to guard them against an adversary. The places are spatially scattered, with a directed weighted graph describing the connections (direct reachability) of place v from place u by an edge \(v\rightarrow u\) with a random length.

The payoffs in the game are given by an \(n\times n\) matrix \(\mathbf{A}\) (so \(m=n\) in the above description), and are interpreted as the loss that the defending player 1 suffers when checking place i while the attacker is at place j. Thus, the defender can:

-

either miss the attacker (\(i\ne j\)) in which case there will be a Weibull-distributed random loss with shape parameter 5 and scale parameter 10.63 (so that the variance is 5); (this distribution is a common choice to describe economic losses, among other extreme value distributions);

-

or hit the attacker at \(i=j\), in which case there is zero loss.

The defender is thus minimizing, and the attacker is maximizing. The problem above is that of the defender. The Nash equilibrium then gives the optimal random choice of spot checks to minimize the average loss. To avoid trivialities, the payoff matrices are constructed not to admit pure strategy equilibria, so that the optimum (without switching cost) is necessarily a mixed strategy.

As for the switching cost, if the defender is currently at position i and next – according to the optimal random choice – needs to check the (non-adjacent) place j, then the cost for the switch from i to j is the shortest path in the aforementioned graph (note that, since the graph is directed, the matrix \(\mathbf{S}\) is generally nonsymmetric).

For the random instances, the matrix \(\mathbf{S}\) is thus obtained from a (conventional) all-shortest path algorithm applied to the graph. Note that the graph is an Erdös-Renyi type graph with n nodes and \(p=0.3\) chance of any two nodes having a connection. We also made tests with sparser (\(p=0.2\)) and denser (\(p=0.4\)) graphs, which result in \(\mathbf{S}\) matrices with larger entries in the former case and smaller in the latter case (the entries are given by shortest paths which tend to be smaller in denser graphs). The computational results with these different p values (not reported here) show that the instances with \(p=0.2\) are slightly more challenging than those with \(p=0.3\), while those with \(p=0.4\) are slightly simpler. However, the differences are not very significant. Also note that all entries of matrix \(\mathbf{S}\) are positive except for the diagonal ones, so that the matrix is dense. We underline this fact since, according to the experimental results reported, e.g., in [7, 33], the density of the Hessian matrix is a relevant factor to assess the difficulty of nonconvex QP problems.

Remark 3

The Erdös-Renyi model is here a suitable description of patrolling situations in areas where moving from any point to any other point is possible without significant physical obstacles in between. Examples are water areas (e.g., coasts) or natural habitats (woods, ...), in which guards are patrolling. It goes without saying that implementing the physical circumstances into the patrolling problem amounts to either a particular fixed graph topology or class of graphs (e.g., trees as models for harbor areas, or general scale-free networks describing communication relations). Such constrained topologies, will generally induce likewise constrained and hence different (smaller) strategy spaces, but leave the problem structure as such unchanged, except for the values involved.

The weights in the graph were chosen exponentially distributed with rate parameter \(\lambda =0.2\), and the Weibull distribution for the losses has a shape parameter 5 and scale parameter \(\sim 10.63\), so that both distributions have the same variance of 5.

Remark 4

The choice of the Weibull distribution is because of its heavy tails, useful to model extreme events (in actuarial science, where it appears as a special case of the generalized extreme value distribution). If the graph is an attack graph, we can think of possibly large losses that accumulate as the adversary traverses an attack path therein (but not necessarily stochastically independent, which the Weibull-distribution sort of captures due to its memory property). Besides, both the exponential and the Weibull distribution only take non-negative values, and thus lend themselves to a meaningful assignment of weights as “distances” in a graph.

The graph sizes considered are \(n=50,75,100\) and for each graph size we consider ten random instances. We restricted the attention to \(\alpha\) values in \(\{0.3,0.4,\ldots ,0.9\}\) since problems with \(\alpha\) values smaller than 0.3 and larger than 0.9 turned out to be simple ones. The overall number of instances is, thus, 210 (70 for each size \(n=50,75,100\)).

Note that all the data of the test instances are available at the web site http://www.iasi.cnr.it/~liuzzi/StQP.

5.2 Description of the experiments

The problem discussed in this paper belongs to the class of nonconvex QP problems with linear constraints, which is a quite active research area. Even well-known commercial solvers, like CPLEX and GUROBI, have recently included the opportunity of solving these problems. In [33] different solution approaches have been compared over different nonconvex QP problems, namely: Standard Quadratic Programming problems (StQP), where the feasible region is the unit simplex; BoxQP, where the feasible region is a box; and general QPs, where the feasible region is a general polytope (in [7] an extensive comparison has also been performed more focused on BoxQPs). The approaches tested in [33] have been the nonconvex QP solver of CPLEX, quadprogBB (see [9]), BARON (see [29]), and quadprogIP, introduced in [33]. According to the computational results reported in that work, solvers quadprogIP and quadprogBB have quite good performance on some subclasses. More precisely, quadprogIP works well on the StQP problem (see also [13] for another approach working well on this subclass), while quadprogBB performs quite well on BoxQPs, especially when the Hessian matrix of the objective function is dense. However, they do not perform very well on QP problems with general linear constraints. Some experiments we performed show that their performance is not good also on the QP subclass discussed in this paper. For this reason we do not include their results in our comparison. Thus, in the comparison we included: the nonconvex QP solver of CPLEX (v. 12.10), the best performing over QPs with general linear constraints according to what is reported in [33]; the nonconvex QP solver of GUROBI (v. 9.0.0), which has been recently introduced and is not tested in that paper; BARON (v. 2019.12.7), since bound-tightening, which, as we will see, is the most relevant operation in the proposed approach, also plays a central role in that solver.

We performed four different sets of experiments:

-

Experiments to compare our approach B&T with the above mentioned existing solvers over the subclass of QP problems discussed in this paper (only at dimension \(n=50\), which, as we will see, is already challenging for all the competitors);

-

Experiments with B&T by varying the intensity of bound-tightening (no bound-tightening, light bound-tightening, strong bound-tightening), in order to put in evidence that (strong) bound-tightening is the key operation in our approach;

-

Experiments with B&T at dimensions \(n=50,75,100\), in order to see how it scales as the dimension increases;

-

Experiments with the Crime Prevention instance described in Sect. 4.

All the experiments have been carried out on an Intel® Xeon® gold 6136 CPU at 3GHz with 48 cores and 256GB main memory. The algorithm has been coded using the Julia [6] language (version 1.3.1). Doing the implementation we parallelized as much as possible the bound-tightening procedure discussed in Sect. 3.4, where many LPs with the same feasible region need to be solved. The code is available for download at the URL http://www.iasi.cnr.it/~liuzzi/StQP.

5.2.1 Comparison with the existing literature

As a first experiment, we compare our method with the commercial solvers BARON, CPLEX and Gurobi. We run all these methods over ten instances at dimension \(n=50\) with \(\alpha \in \{0.3,0.4,\ldots ,0.9\}\) (thus, overall, 70 instances). We set a time limit of 600 seconds. A relative tolerance \(\varepsilon =10^{-3}\) is required for all solvers since, as already previously commented, it is considered adequate for this application. In Table 1, we report the average performance. For each method we report the number of nodes (column nn), the percentage gap after the time limit and in brackets the computational time needed to reach it (column GAP % (s)), and finally the percentage of success, i.e. the percentage of instances solved to optimality within the time limit (column Succ %). In our opinion, this table reports the most important finding of this paper. It can be seen that all the commercial solvers fail on most of the instances (apart from 7 out of 10 instances with \(\alpha =0.9\) and, for what concerns CPLEX, an instance with \(\alpha =0.8\)), whereas B&T solves all the instances with an average time of less than 30 seconds (the complete results are reported in Appendix 1). These results show that, although commercial solvers are fully developed, there is still room for improvements. In particular, it seems that performing bound-tightening in a very intensive way can strongly enhance the performance of a solution approach. In fact, as previously recalled, BARON already incorporates bound-tightening techniques but, as we will see in the following set of experiments, the intensity with which bound-tightening is applied also makes a considerable difference. Before that, however, for the sake of completeness, we report in Table 2, the results of B&T over the \(n=50\) test instances when a lower tolerance value is employed, namely \(\varepsilon =10^{-5}\), in order to evaluate the impact of this parameter on the performance of B&T. It is interesting to notice that the lower value has a clear impact on the instances with small \(\alpha\) value (say \(\alpha \le 0.5\)), even causing a failure with \(\alpha =0.4\), while the impact is much milder for larger \(\alpha\) values. In this table we also include column time/LP, where we report the average time for the solution of each LP. Such value is almost equal to the overall CPU time divided by the number of LPs solved. This is due to the fact that the computing times of B&T are almost entirely due to the OBBT procedure and, in particular, to the LPs needed to apply it. An analogous consideration applies to all the experiments we reported in this paper.

5.2.2 Importance of bound-tightening

As already stressed many times, the quite good performance of B&T is due to the bound-tightening procedure. It is now time to show it with numbers. To this end, besides the already proposed setting for our approach, we ran it under two different settings:

-

No bound-tightening at each node we do not apply the procedure OBBT, but we simply compute the lower bound by solving problem (5);

-

Light Bound-Tightening] at each node we only solve the following LPs once (and, consequently, we compute the solution of problem (5) only once)

-

\(\lceil 0.1 n \rceil\) LP problems with objective function \(\min \ y_i\), for all i corresponding to the \(\lceil 0.1 n \rceil\) largest \(g_i\) values;

-

a fixed number \(\lceil 0.05 n \rceil\) of LP problems with objective function \(\max \ y_i\), for all i corresponding to the \(\lceil 0.05 n \rceil\) largest \(g_i\) values;

-

again \(\lceil 0.05 n \rceil\) LP problems with objective function \(\max \ x_i\), for all i corresponding to the \(\lceil 0.05 n \rceil\) largest \(g_i\) values;

-

no LP problem with objective function \(\min \ x_i\).

-

Of course, this strongly reduces the effort per node. With no bound-tightening a single LP is solved per node, while with light bound-tightening \(\lceil 0.2 n \rceil +1\) LPs are solved at each node. In fact, light bound-tightening already requires a considerable computational effort per node (and, as we will see, it is already enough to perform better than existing solvers). However, the originally proposed strong bound-tightening procedure, where the OBBT procedure is iteratively applied and at each iteration \(\lceil 0.4 n \rceil\) LPs are solved, delivers better results. In Table 3, we report the average performance on the instances with \(n=50\) in terms of number of nodes, number of LPs solved, CPU time in seconds and percentage gap of the three levels of bound-tightening. It is evident from the table that the OBBT procedure is what really makes the difference: most of the instances are not solved without bound-tightening, whereas the number of nodes and the CPU time needed to solve the instances decrease as we increase the level of bound-tightening. In Appendix 1 we also report the full table with all the results.

5.2.3 Computational results over the proposed test instances as n increases

In this subsection we show the behavior of B&T as the dimension n increases. We have solved ten instances for three different sizes \(n=50,~75,~100\) and the usual values of \(\alpha =\{0.3,0.4,\ldots ,0.9\}\) (thus, overall 210 instances). Note that, for all the different values of n, lower and larger values of \(\alpha\) with respect to those we tested give rise to much simpler instances (recall that the problem becomes polynomially solvable for the extreme values \(\alpha =0\) and \(\alpha =1\)). We set a time limit of 10800s for all instances. For \(n=50\) and \(n=75\) we solve all the instances to optimality (in fact, the largest time to solve an instance with \(n=50\) is about 2 minutes, whereas for \(n=75\) the largest time is below 1 hour, but most of the problems are solved within 10 minutes). In Figures 2a–c we report the box plot of number of nodes, number of LPs and CPU time needed for the different values of \(\alpha\) when \(n=50\). The figure shows that the hardest instances are the ones corresponding to the central values of \(\alpha\) and this will turn out to hold true also at larger dimensions. We also observe that the overall number of nodes is extremely limited, thus confirming that, while computationally expensive, the bound-tightening procedure allows to considerably reduce the size of the branch-and-bound tree (again, this fact is observed also at larger dimensions).

In Fig. 3, we report the different box plots for all the instances at \(n=75\). It is worthwhile to remark that we solve most of them within ten minutes and exploring less than 300 nodes.

Finally, in Fig. 4 we report the performance of B&T on problems of dimension \(n=100\). In this case there are seven instances we are not able to solve within the time limit. These occur for values \(\alpha \in \{0.6,0.7,0.8\}\), thus confirming that the central values of this parameter give rise to the most challenging instances. With respect to \(n=50\) and \(n=75\), we have the additional box plot displayed in Fig. 4d reporting the final percentage gap when the time limit is reached. Note that it is never larger than 1.2% and most of the times it is lower than 0.5%, thus showing that, even when the algorithm does not terminate, the quality of the returned solution is guaranteed to be high.

5.2.4 Numerical results for the Crime Prevention application

In this subsection we solve an instance of the Crime Prevention application, described in Sect. 4, where 50 check spots have been identified (i.e., \(n=50\) according to our notation). In Table 4, we report the results for different values of \(\alpha\) obtained by B&T and by the commercial solvers CPLEX, Gurobi and BARON. We report for each method the CPU time (we set a time limit of 600 seconds) followed in brackets by the gap at the end of the time, the number of nodes, and for our method also the number of LPs solved, since it is our major computational burden. Although this instance turns out to be less challenging than the random ones previously considered, and also the other solvers are able to return the solution within the time limit for most (but not all) \(\alpha\) values, B&T is the only method able to solve the instance for all the values of \(\alpha\) and in all cases within 3 seconds. Therefore, also for this real world application, our method outperforms the commercial solvers, confirming the effectiveness of the approach. We also run B&T with a larger number of check spots (namely, 75 e 100) and the usual different \(\alpha\) values. The method is able to solve them all within 11 s, thus confirming once again the efficiency of B&T.

6 Conclusions and future work

In this paper we addressed some game theory problems arising in the context of network security. In these problems there is an additional quadratic term, representing switching costs, i.e.. the costs for the defender of switching from a given strategy to another one at successive rounds of the game. The resulting problems can be reformulated as nonconvex QP with linear constraints. Test instances of these problems turned out to be very challenging for existing solvers, and we propose to extend with them the current benchmark set of test instances for QP problems. We presented a spatial branch-and-bound approach to tackle these problems and we have shown that a rather aggressive application of an OBBT procedure is the key for their efficient solution. The procedure is expensive, since it requires multiple solutions of LP problems at each node of the branch-and-bound tree. But we empirically observed that the high computational costs per node of the branch-and-bound tree are largely compensated by the low number of nodes to be explored. We recall that the code of B&T and all the data of the test instances are available at the web site http://www.iasi.cnr.it/~liuzzi/StQP. As a topic for future research, we would like to further investigate the use of OBBT procedures in the solution of QP problems, and we would like to identify other cases, besides those addressed in this paper, where their intensive application may considerably enhance the performance of branch-and-bound approaches. We have actually already performed some experiments and obtained promising results over test instances of nonconvex QPs with general linear constraints. We plan to present the results in a future work. Moreover, it would be interesting to evaluate whether the intensive application of OBBT procedures is also able to enhance the performance of commercial nonconvex QP solvers.

References

OpenStreetMap (2021). https://www.openstreetmap.org/#map=15/15.3368/76.4601

Alpcan, T., Başar, T.: Network security: A decision and game-theoretic approach. Cambridge University Press (2010)

Alpern, S., Lidbetter, T., Morton, A., Papadaki, K.: Patrolling a Pipeline. In: Zhu, Q., Alpcan, T., Panaousis, E., Tambe, M., Casey, W. (eds.) Decision and Game Theory for Security. Lecture Notes in Computer Science, pp. 129–138. Springer International Publishing, Cham (2016)

Alpern, S., Morton, A., Papadaki, K.: Patrolling games. Operations Research 59(5), 1246–1257 (2011)

Basak, A., Fang, F., Nguyen, T.H., Kiekintveld, C.: Combining Graph Contraction and Strategy Generation for Green Security Games. In: Zhu, Q., Alpcan, T., Panaousis, E., Tambe, M., Casey, W. (eds.) Decision and Game Theory for Security. Lecture Notes in Computer Science, pp. 251–271. Springer International Publishing, Cham (2016)

Bezanson, J., Edelman, A., Karpinski, S., Shah, V.B.: Julia: A fresh approach to numerical computing. SIAM review 59(1), 65–98 (2017). https://doi.org/10.1137/141000671

Bonami, P., Günlük, O., Linderoth, J.: Globally solving nonconvex quadratic programming problems with box constraints via integer programming methods. Mathematical Programming Computation 10, 333–382 (2018)

Caprara, A., Locatelli, M.: Global optimization problems and domain reduction strategies. Mathematical Programming 125(1), 123–137 (2010)

Chen, J., Burer, S.: Globally solving nonconvex quadratic programming problems via completely positive programming. Mathematical Programming Computation 4(1), 33–52 (2012)

Fang, F., Jiang, A.X., Tambe, M.: Optimal patrol strategy for protecting moving targets with multiple mobile resources. In: Proceedings of the 2013 international conference on Autonomous agents and multi-agent systems, AAMAS ’13, pp. 957–964. International Foundation for Autonomous Agents and Multiagent Systems, Richland, SC (2013)

Furini, F., Traversi, E., Belotti, P., Frangioni, A., Gleixner, A., Gould, N., Liberti, L., Lodi, A., Misener, R., Mittelmann, H., et al.: Qplib: a library of quadratic programming instances. Mathematical Programming Computation 11(2), 237–265 (2019)

Gleixner, A., Berthold, T., Müller, B., Weltge, S.: Three enhancements for optimization-based bound tightening. Journal of Global Optimization 67, 731–757 (2017)

Gondzio, J., Yildirim, E.A.: Global solutions of nonconvex standard quadratic programs via mixed integer linear programming reformulations. Journal of Global Optimization to appear (2021)

Hansen, K.A., Koucky, M., Lauritzen, N., Miltersen, P.B., Tsigaridas, E.P.: Exact algorithms for solving stochastic games. In: Proceedings of the forty-third annual ACM symposium on Theory of computing, pp. 205–214 (2011)

Horst, R., Tuy, H.: Global optimization: Deterministic approaches (2nd edition). Springer Science & Business Media (2013)

Lozovanu, D., Solomon, D., Zelikovsky, A.: Multiobjective games and determining pareto-nash equilibria. Buletinul Academiei de Ştiinţe a Republicii Moldova. Matematica 3, 115–122 (2005)

McCormick, G.P.: Computability of global solutions to factorable nonconvex programs: Part i-convex underestimating problems. Mathematical Programming 10(1), 147–175 (1976)

Motzkin, T., Straus, E.: Maxima for graphs and a new proof of a theorem of Turán. Canadian Journal of Mathematics 17(4), 533–540 (1965)

Padgham, M.: dodgr: An r package for network flow aggregation. Transport Findings (2019). 10.32866/6945

Padgham, M.: GitHub - ATFutures/dodgr: Distances on Directed Graphs in R (2021). https://github.com/ATFutures/dodgr

Papadaki, K., Alpern, S., Lidbetter, T., Morton, A.: Patrolling a Border. Operations Research 64(6), 1256–1269 (2016). https://doi.org/10.1287/opre.2016.1511

Pita, J., Tambe, M., Kiekintveld, C., Cullen, S., Steigerwald, E.: GUARDS - Innovative Application of Game Theory for National Airport Security. IJCAI (2011). https://doi.org/10.5591/978-1-57735-516-8/IJCAI11-451

P.M., P., S.A., V.: Quadratic programming with one negative eigenvalue is NP-hard. Journal of Global Optimization 1(1), 15–22 (1991)

Rass, S., Alshawish, A., Abid, M.A., Schauer, S., Zhu, Q., De Meer, H.: Physical intrusion games-optimizing surveillance by simulation and game theory. IEEE Access 5, 8394–8407 (2017)

Rass, S., König, S.: Password security as a game of entropies. Entropy 20(5), 312 (2018)

Rass, S., König, S., Schauer, S.: On the cost of game playing: How to control the expenses in mixed strategies. In: International Conference on Decision and Game Theory for Security, pp. 494–505. Springer (2017)

Rass, S., Rainer, B.: Numerical computation of multi-goal security strategies. In: International Conference on Decision and Game Theory for Security, pp. 118–133. Springer (2014)

Rass, S., Schauer, S., König, S., Zhu, Q.: Cyber-Security in Critical Infrastructures: A Game-Theoretic Approach. SpringerNature (2020)

Sahinidis, N.V.: Baron: A general purpose global optimization software package. Journal of Global Optimization 8(2), 201–205 (1996)

Tambe, M.: Security and game theory: algorithms, deployed systems, lessons learned. Cambridge University Press (2011)

Tawarmalani, M., Sahinidis, N.V.: Global optimization of mixed-integer nonlinear programs: A theoretical and computational study. Mathematical Programming 99(3), 563–591 (2004)

Wachter, J., Rass, S., König, S.: Security from the adversary’s inertia-controlling convergence speed when playing mixed strategy equilibria. Games 9(3), 59 (2018)

Xia, W., Vera, J.C., Zuluaga, L.F.: Globally solving nonconvex quadratic programs via linear integer programming techniques. INFORMS Journal on Computing 32(1), 40–56 (2020)

Funding

Open access funding provided by Università degli Studi di Parma within the CRUI-CARE Agreement.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Appendices

A Proof of Theorem 1

In this section we consider the complexity of problem (2). Such problem is a nonconvex QP with linear constraints. NP-hardness of QP problems has been established for different special cases like, e.g., the already mentioned StQP problems (see [18]) and the Box QP problems (see, e.g., [23]). However, due to its special structure, none of the known complexity results can be applied to establish the NP-hardness of problem (2). Thus, in what follows we formally prove that its corresponding decision problem is NP-complete. Let

where \(S_{ii}=0\) for each \(i=1,\ldots ,n\) and \(S_{ij}\ge 0\) for each \(i\ne j\), while \(\mathbf{A}_k\ge \mathbf{0}\), \(k=1,\ldots ,m\). Moreover, let

be the n-dimensional unit simplex. After incorporating \(\alpha\) and \((1-\alpha )\) respectively into \(\mathbf{S}\) and \(\mathbf{A}_k\), \(k=1,\ldots ,m\), problem (2) is equivalent to \(\min _{\mathbf{x}\in \varDelta _n} f(\mathbf{x})\). Then, we would like to establish the complexity of the following decision problem:

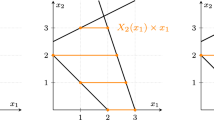

We prove the result by providing a polynomial transformation of the max clique decision problem: Given a graph \(G=(V,E)\) and a positive integer \(k\le |V|\), does there exist a clique C in G with cardinality at least k? We define the following instance of the decision problem (9). Let

Moreover, let \(m=n\) and for each \(k=1,\ldots ,n\) let \(\mathbf{A}_k=\mathbf{e}_k\), where \(\mathbf{e}_k\) is the vector with all components equal to 0, except the k-th one, which is equal to 1. Stated in another way, the piece-wise linear part is \(\max _{k=1,\ldots ,n} x_k\). Finally, let \(\xi =\frac{1}{k}\). We claim that the minimum value of f over \(\varDelta _n\) is not larger than \(\xi =\frac{1}{k}\) if and only if G contains a clique with cardinality k. The if part is very simple. Indeed, let us consider the feasible solution \(x_i=\frac{1}{k}\) if \(i\in C\), where C is a clique of cardinality k, and let \(x_i=0\) otherwise. Then, the value of f at this point is equal to \(\frac{1}{k}\). Indeed , the value of the quadratic part is 0, while the value of the piece-wise linear part is \(\frac{1}{k}\). The proof of the only if part is a bit more complicated. We would like to prove that, in case no clique with cardinality at least k exists, then the minimum value of f over the unit simplex is larger than \(\frac{1}{k}\). Let us denote by \(\mathbf{x}^*\) the minimum of f over the unit simplex. Let

and let C be the maximum clique over the sub-graph induced by K, whose cardinality is at most \(k-1\). We first remark that if, for some \(i\in K\setminus C\), it holds that

then the quadratic part contains the term

which concludes the proof. Therefore, for \(i\in K\setminus C\) we assume that either \(x_i^*<\frac{1}{n^2}\) or

Now, let

If \(\exists \ k_1,k_2\in K_1\) and \((k_1,k_2)\not \in E\), then \(n^4 x_{k_1}^*x_{k_2}^*\ge 1\), which concludes the proof. Then, we assume that for each \(k_1, k_2\in K_1\), \((k_1,k_2)\in E\), i.e., \(K_1\) itself is a clique. Now let us consider the following subset of C

It must hold that \(|C_1|\ge |K_1|\). Indeed, if \(|C_1|<|K_1|\), then \((C\setminus C_1) \cup K_1\) is also a clique with cardinality larger than C, which is not possible in view of the fact that C has maximum cardinality. Then, in view of (10) we have that

and, moreover, by definition of \(K_1\), we also have

Since \(|C_1|\ge |K_1|\), we have that

is such that \(|T|\le |K_1| + |C\setminus C_1|\le |C_1|+|C\setminus C_1|=|C|\le k-1\). Thus, taking into account that

we must have that

and, consequently, taking into account that \(|T|\le k-1\), for at least one index \(j\in T\) it must hold that

so that the piece-wise linear part of f is larger than \(\frac{1}{k}\), which concludes the proof.

B Detailed numerical results

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Liuzzi, G., Locatelli, M., Piccialli, V. et al. Computing mixed strategies equilibria in presence of switching costs by the solution of nonconvex QP problems. Comput Optim Appl 79, 561–599 (2021). https://doi.org/10.1007/s10589-021-00282-7

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10589-021-00282-7