Abstract

We present an improved model and theory for time-causal and time-recursive spatio-temporal receptive fields, obtained by a combination of Gaussian receptive fields over the spatial domain and first-order integrators or equivalently truncated exponential filters coupled in cascade over the temporal domain. Compared to previous spatio-temporal scale-space formulations in terms of non-enhancement of local extrema or scale invariance, these receptive fields are based on different scale-space axiomatics over time by ensuring non-creation of new local extrema or zero-crossings with increasing temporal scale. Specifically, extensions are presented about (i) parameterizing the intermediate temporal scale levels, (ii) analysing the resulting temporal dynamics, (iii) transferring the theory to a discrete implementation in terms of recursive filters over time, (iv) computing scale-normalized spatio-temporal derivative expressions for spatio-temporal feature detection and (v) computational modelling of receptive fields in the lateral geniculate nucleus (LGN) and the primary visual cortex (V1) in biological vision. We show that by distributing the intermediate temporal scale levels according to a logarithmic distribution, we obtain a new family of temporal scale-space kernels with better temporal characteristics compared to a more traditional approach of using a uniform distribution of the intermediate temporal scale levels. Specifically, the new family of time-causal kernels has much faster temporal response properties (shorter temporal delays) compared to the kernels obtained from a uniform distribution. When increasing the number of temporal scale levels, the temporal scale-space kernels in the new family do also converge very rapidly to a limit kernel possessing true self-similar scale-invariant properties over temporal scales. 
Thereby, the new representation allows for true scale invariance over variations in the temporal scale, although the underlying temporal scale-space representation is based on a discretized temporal scale parameter. We show how scale-normalized temporal derivatives can be defined for these time-causal scale-space kernels and how the composed theory can be used for computing basic types of scale-normalized spatio-temporal derivative expressions in a computationally efficient manner.


1 Introduction

Spatio-temporal receptive fields constitute an essential concept for describing neural functions in biological vision [11, 12, 31–33] and for expressing computer vision methods on video data [1, 35, 43, 88, 99].

For offline processing of pre-recorded video, non-causal Gaussian or Gabor-based spatio-temporal receptive fields may in some cases be sufficient. When operating on video data in a real-time setting or when modelling biological vision computationally, one does however need to take into explicit account the fact that the future cannot be accessed and that the underlying spatio-temporal receptive fields must therefore be time-causal, i.e. the image operations should only require access to image data from the present moment and what has occurred in the past. For computational efficiency and for keeping down memory requirements, it is also desirable that the computations should be time-recursive, so that it is sufficient to keep a limited memory of the past that can be recursively updated over time.

The subject of this article is to present an improved temporal scale-space model for spatio-temporal receptive fields based on time-causal temporal scale-space kernels in terms of first-order integrators or equivalently truncated exponential filters coupled in cascade, which can be transferred to a discrete implementation in terms of recursive filters over discretized time. This temporal scale-space model will then be combined with a Gaussian scale-space concept over continuous image space or a genuinely discrete scale-space concept over discrete image space, resulting in both continuous and discrete spatio-temporal scale-space concepts for modelling time-causal and time-recursive spatio-temporal receptive fields over both continuous and discrete spatio-temporal domains. The model builds on previous work by Fleet and Langley [20], Lindeberg and Fagerström [66], Lindeberg [56–59] and is here complemented by (i) a better design for the degrees of freedom in the choice of time constants for the intermediate temporal scale levels from the original signal to any higher temporal scale level in a cascade structure of temporal scale-space representations over multiple temporal scales, (ii) an analysis of the resulting temporal response dynamics, (iii) details for discrete implementation in a spatio-temporal visual front-end, (iv) details for computing spatio-temporal image features in terms of scale-normalized spatio-temporal differential expressions at different spatio-temporal scales and (v) computational modelling of receptive fields in the lateral geniculate nucleus (LGN) and the primary visual cortex (V1) in biological vision.

In previous use of the temporal scale-space model by Lindeberg and Fagerström [66], a uniform distribution of the intermediate scale levels has mostly been chosen when coupling first-order integrators or equivalently truncated exponential kernels in cascade. By instead using a logarithmic distribution of the intermediate scale levels, we will here show that a new family of temporal scale-space kernels can be obtained with much better properties in terms of (i) faster temporal response dynamics and (ii) fast convergence towards a limit kernel that possesses true scale-invariant properties (self-similarity) under variations in the temporal scale in the input data. Thereby, the new family of kernels enables (i) significantly shorter temporal delays (as always arise for truly time-causal operations), (ii) much better computational approximation to true temporal scale invariance and (iii) computationally much more efficient numerical implementation. Conceptually, our approach is also related to the time-causal scale-time model by Koenderink [39], which is here complemented by a truly time-recursive formulation of time-causal receptive fields more suitable for real-time operations over a compact temporal buffer of what has occurred in the past, including a theoretically well-founded and computationally efficient method for discrete implementation.

Specifically, the rapid convergence of the new family of temporal scale-space kernels to a limit kernel when the number of intermediate temporal scale levels tends to infinity is theoretically very attractive, since it provides a way to define truly scale-invariant operations over temporal variations at different temporal scales, and to measure the deviation from true scale invariance when approximating the limit kernel by a finite number of temporal scale levels. Thereby, the proposed model allows for truly self-similar temporal operations over temporal scales while using a discretized temporal scale parameter, which is a theoretically new type of construction for temporal scale spaces.

Based on a previously established analogy between scale-normalized derivatives for spatial derivative expressions and the interpretation of scale normalization of the corresponding Gaussian derivative kernels to constant \(L_p\)-norms over scale [53], we will show how scale-invariant temporal derivative operators can be defined for the proposed new families of temporal scale-space kernels. Then, we will apply the resulting theory for computing basic spatio-temporal derivative expressions of different types and describe classes of such spatio-temporal derivative expressions that are invariant or covariant to basic types of natural image transformations, including independent rescaling of the spatial and temporal coordinates, illumination variations and variabilities in exposure control mechanisms.

In these ways, the proposed theory will present previously missing components for applying scale-space theory to spatio-temporal input data (video) based on truly time-causal and time-recursive image operations.

A conceptual difference between the time-causal temporal scale-space model that is developed in this paper and Koenderink’s fully continuous scale-time model [39] or the fully continuous time-causal semigroup derived by Fagerström [16] and Lindeberg [56] is that the presented time-causal scale-space model will be semi-discrete, with a continuous time axis and discretized temporal scale parameter. This semi-discrete theory can then be further discretized over time (and for spatio-temporal image data also over space) into a fully discrete theory for digital implementation. The reason why the temporal scale parameter has to be discrete in this theory is that according to theoretical results about variation diminishing linear transformations by Schoenberg [81–87] and Karlin [36] that we will build upon, there is no continuous parameter semigroup structure or continuous parameter cascade structure that guarantees non-creation of new structures with increasing temporal scale in terms of non-creation of new local extrema or new zero-crossings over a continuum of increasing temporal scales.

When discretizing the temporal scale parameter into a discrete set of temporal scale levels, we do however show that there exists such a discrete parameter semigroup structure in the case of a uniform distribution of the temporal scale levels and a discrete parameter cascade structure in the case of a logarithmic distribution of the temporal scale levels, which both guarantee non-creation of new local extrema or zero-crossings with increasing temporal scale. In addition, the presented semi-discrete theory allows for an efficient time-recursive formulation for real-time implementation based on a compact temporal buffer, which Koenderink’s scale-time model [39] does not, and much better temporal dynamics than the time-causal semigroup previously derived by Fagerström [16] and Lindeberg [56].

Specifically, we argue that if the goal is to construct a vision system that analyses continuous video streams in real time, as is the main scope of this work, a restriction of the theory to a discrete set of temporal scale levels with the temporal scale levels determined in advance before the image data are sampled over time is less of a practical constraint, since the vision system anyway has to be based on a finite number of sensors and hardware/wetware for sampling and processing the continuous stream of image data.

1.1 Structure of this Article

To give a contextual overview of this work, Sect. 2 starts by presenting a previously established computational model for spatio-temporal receptive fields in terms of spatial and temporal scale-space kernels, based on which we will replace the temporal smoothing step.

Section 3 starts by reviewing previous theoretical results for temporal scale-space models based on the assumption of non-creation of new local extrema with increasing scale, showing that the canonical temporal operators in such a model are first-order integrators or equivalently truncated exponential kernels coupled in cascade. Relative to previous applications of this idea based on a uniform distribution of the intermediate temporal scale levels, we present a conceptual extension of this idea based on a logarithmic distribution of the intermediate temporal scale levels, and show that this leads to a new family of kernels that have faster temporal response properties and correspond to more skewed distributions with the degree of skewness determined by a distribution parameter c.

Section 4 analyses the temporal characteristics of these kernels and shows that they lead to faster temporal characteristics in terms of shorter temporal delays, including how the choice of distribution parameter c affects these characteristics. In Sect. 5, we present a more detailed analysis of these kernels, with emphasis on the limit case when the number of intermediate scale levels K tends to infinity, and making constructions that lead to true self-similarity and scale invariance over a discrete set of temporal scaling factors.

Section 6 shows how these spatial and temporal kernels can be transferred to a discrete implementation while preserving scale-space properties also in the discrete implementation and allowing for efficient computations of spatio-temporal derivative approximations. Section 7 develops a model for defining scale-normalized derivatives for the proposed temporal scale-space kernels, which also leads to a way of measuring how far from the scale-invariant time-causal limit kernel a particular temporal scale-space kernel is when using a finite number K of temporal scale levels.

In Sect. 8, we combine these components for computing spatio-temporal features defined from different types of spatio-temporal differential invariants, including an analysis of their invariance or covariance properties under natural image transformations, with specific emphasis on independent scalings of the spatial and temporal dimensions, illumination variations and variations in exposure control mechanisms. Finally, Sect. 9 concludes with a summary and discussion, including a description about relations and differences to other temporal scale-space models.

To simplify the presentation, we have put some of the theoretical analysis in the appendix. Appendix 1 presents a frequency analysis of the proposed time-causal scale-space kernels, including a detailed characterization of the limit case when the number of temporal scale levels K tends to infinity and explicit expressions for their moment (cumulant) descriptors up to order four. Appendix 2 presents a comparison with the temporal kernels in Koenderink’s scale-time model, including a minor modification of Koenderink’s model to make the temporal kernels normalized to unit \(L_1\)-norm and a mapping between the parameters in his model (a temporal offset \(\delta \) and a dimensionless amount of smoothing \(\sigma \) relative to a logarithmic time scale) and the parameters in our model (the temporal variance \(\tau \), a distribution parameter c and the number of temporal scale levels K) including graphs of similarities vs. differences between these models. Appendix 3 shows that for the temporal scale-space representation given by convolution with the scale-invariant time-causal limit kernel, the corresponding scale-normalized derivatives become fully scale covariant/invariant for temporal scaling transformations that correspond to exact mappings between the discrete temporal scale levels.

This paper is a much further developed version of a conference paper [62] presented at the SSVM 2015, with substantial additions concerning

- the theory that implies that the temporal scale levels have to be discrete (Sects. 3.1–3.2),
- more detailed modelling of biological receptive fields (Sect. 3.6),
- the construction of a truly self-similar and scale-invariant time-causal limit kernel (Sect. 5),
- theory for implementation in terms of discrete time-causal scale-space kernels (Sect. 6.1),
- details concerning more rotationally symmetric implementation over the spatial domain (Sect. 6.3),
- definition of scale-normalized temporal derivatives for the resulting time-causal scale-space (Sect. 7),
- a framework for spatio-temporal feature detection based on time-causal and time-recursive spatio-temporal scale space, including scale normalization as well as covariance and invariance properties under natural image transformations and experimental results (Sect. 8),
- a frequency analysis of the time-causal and time-recursive scale-space kernels (Appendix 1),
- a comparison between the presented semi-discrete model and Koenderink’s fully continuous model, including comparisons between the temporal kernels in the two models and a mapping between the parameters in our model and Koenderink’s model (Appendix 2) and
- a theoretical analysis of the evolution properties over scales of temporal derivatives obtained from the time-causal limit kernel, including the scaling properties of the scale normalization factors under \(L_p\)-normalization and a proof that the resulting scale-normalized derivatives become scale invariant/covariant (Appendix 3).

In relation to the SSVM 2015 paper, this paper therefore first shows how the presented framework applies to spatio-temporal feature detection and computational modelling of biological vision, which could not be fully described there, and then presents important theoretical extensions in terms of theoretical properties (scale invariance) and theoretical analysis, as well as other technical details that could not be included in the conference paper because of space limitations.

2 Spatio-Temporal Receptive Fields

The theoretical structure that we start from is a general result from axiomatic derivations of a spatio-temporal scale-space based on the assumptions of non-enhancement of local extrema and the existence of a continuous temporal scale parameter, which states that the spatio-temporal receptive fields should be based on spatio-temporal smoothing kernels of the form (see overviews in Lindeberg [56, 57]):

\[ T(x_1, x_2, t;\; s, \tau ;\; v, {\varSigma }) = g(x_1 - v_1 t, x_2 - v_2 t;\; s, {\varSigma }) \, h(t;\; \tau ) \tag{1} \]

where

- \(x = (x_1, x_2)^T\) denotes the image coordinates,
- t denotes time,
- s denotes the spatial scale,
- \(\tau \) denotes the temporal scale,
- \(v = (v_1, v_2)^T\) denotes a local image velocity,
- \({\varSigma }\) denotes a spatial covariance matrix determining the spatial shape of an affine Gaussian kernel \(g(x;\; s, {\varSigma }) = \frac{1}{2 \pi s \sqrt{\det {\varSigma }}} \mathrm{e}^{-x^T {\varSigma }^{-1} x/2s}\),
- \(g(x_1 - v_1 t, x_2 - v_2 t;\; s, {\varSigma })\) denotes a spatial affine Gaussian kernel that moves with image velocity \(v = (v_1, v_2)\) in space-time and
- \(h(t;\; \tau )\) is a temporal smoothing kernel over time.

A biological motivation for this form of separability between the smoothing operations over space and time can also be obtained from the facts that (i) most receptive fields in the retina and the LGN are to a first approximation space-time separable and (ii) the receptive fields of simple cells in V1 can be either space-time separable or inseparable, where the simple cells with inseparable receptive fields exhibit receptive field subregions that are tilted in the space-time domain and the tilt is an excellent predictor of the preferred direction and speed of motion [11, 12].

For simplicity, we shall here restrict the above family of affine Gaussian kernels over the spatial domain to rotationally symmetric Gaussians of different size s, by setting the covariance matrix \({\varSigma }\) to a unit matrix. We shall also mainly restrict ourselves to space-time separable receptive fields by setting the image velocity v to zero.

A conceptual difference that we shall pursue is to relax the requirement of a semigroup structure over a continuous temporal scale parameter in the above axiomatic derivations to a weaker Markov property over a discrete temporal scale parameter. We shall also replace the previous axiom about non-creation of new image structures with increasing scale in terms of non-enhancement of local extrema (which requires a continuous scale parameter) by the requirement that the temporal smoothing process, when seen as an operation along a one-dimensional temporal axis only, must not increase the number of local extrema or zero-crossings in the signal. Then, another family of time-causal scale-space kernels becomes permissible and uniquely determined, in terms of first-order integrators or truncated exponential filters coupled in cascade.

The main topics of this paper are to handle the remaining degrees of freedom resulting from this construction about (i) choosing and parameterizing the distribution of temporal scale levels, (ii) analysing the resulting temporal dynamics, (iii) describing how this model can be transferred to a discrete implementation over discretized time, space or both while retaining discrete scale-space properties, (iv) using the resulting theory for computing scale-normalized spatio-temporal derivative expressions for purposes in computer vision and (v) computational modelling of biological vision.

3 Time-Causal Temporal Scale-Space

When constructing a system for real-time processing of sensor data, a fundamental constraint on the temporal smoothing kernels is that they have to be time-causal. The ad hoc solution of using a truncated symmetric filter of finite temporal extent in combination with a temporal delay is not appropriate in a time-critical context. Because of computational and memory efficiency, the computations should furthermore be based on a compact temporal buffer that contains sufficient information for representing the sensor information at multiple temporal scales and computing features therefrom. Corresponding requirements are necessary in computational modelling of biological perception.

3.1 Time-Causal Scale-Space Kernels for Pure Temporal Domain

To model the temporal component of the smoothing operation in Eq. (1), let us initially consider a signal f(t) defined over a one-dimensional continuous temporal axis \(t \in {\mathbb R}\). To define a one-parameter family of temporal scale-space representations from this signal, we consider a one-parameter family of smoothing kernels \(h(t;\, \tau )\) where \(\tau \ge 0\) is the temporal scale parameter

\[ L(\cdot ;\; \tau ) = h(\cdot ;\; \tau ) * f(\cdot ) \tag{2} \]

and \(L(t;\; 0) = f(t)\). To formalize the requirement that this transformation must not introduce new structures from a finer to a coarser temporal scale, let us, following Lindeberg [45], require that between any pair of temporal scale levels \(\tau _2 > \tau _1 \ge 0\) the number of local extrema at scale \(\tau _2\) must not exceed the number of local extrema at scale \(\tau _1\). Let us additionally require the family of temporal smoothing kernels \(h(u;\ \tau )\) to obey the following cascade relation

\[ h(\cdot ;\; \tau _2) = (\Delta h)(\cdot ;\; \tau _1 \mapsto \tau _2) * h(\cdot ;\; \tau _1) \tag{3} \]

between any pair of temporal scales \((\tau _1, \tau _2)\) with \(\tau _2 > \tau _1\) for some family of transformation kernels \((\Delta h)(t;\; \tau _1 \mapsto \tau _2)\). Note that in contrast to most other axiomatic scale-space definitions, we do, however, not impose a strict semigroup property on the kernels. The motivation for this is to make it possible to take larger scale steps at coarser temporal scales, which will give higher flexibility and enable the construction of more efficient temporal scale-space representations.

Following Lindeberg [45], let us further define a scale-space kernel as a kernel that guarantees that the number of local extrema in the convolved signal can never exceed the number of local extrema in the input signal. Equivalently, this condition can be expressed in terms of the number of zero-crossings in the signal. Following Lindeberg and Fagerström [66], let us additionally define a temporal scale-space kernel as a kernel that both satisfies the temporal causality requirement \(h(t;\; \tau ) = 0\) if \(t< 0\) and guarantees that the number of local extrema does not increase under convolution. If both the raw transformation kernels \(h(u;\ \tau )\) and the cascade kernels \((\Delta h)(t;\; \tau _1 \mapsto \tau _2)\) are scale-space kernels, we do hence guarantee that the number of local extrema in \(L(t;\; \tau _2)\) can never exceed the number of local extrema in \(L(t;\; \tau _1)\). If the kernels \(h(u;\ \tau )\) and additionally the cascade kernels \((\Delta h)(t;\; \tau _1 \mapsto \tau _2)\) are temporal scale-space kernels, these kernels do hence constitute natural kernels for defining a temporal scale-space representation.
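
As an illustration of the extremum-counting criterion, the following minimal Python sketch counts local extrema before and after time-causal smoothing. It assumes a sampled signal and uses a discrete first-order recursive filter as a stand-in for convolution with a truncated exponential kernel; the function names, the test signal and the time constant are hypothetical choices made only for this example.

```python
import numpy as np

def count_local_extrema(signal):
    """Count local extrema as sign changes of the first difference (zeros ignored)."""
    diffs = np.diff(signal)
    signs = np.sign(diffs[diffs != 0])
    return int(np.sum(signs[1:] != signs[:-1]))

def first_order_recursive_filter(signal, mu):
    """Time-causal smoothing with a single first-order recursive filter.

    Assumption of this sketch: unit time step and the update
    out[n] = out[n-1] + (in[n] - out[n-1]) / (1 + mu).
    """
    out = np.empty(len(signal), dtype=float)
    state = float(signal[0])  # treat the signal as constant before the first sample
    for n, value in enumerate(signal):
        state += (value - state) / (1.0 + mu)
        out[n] = state
    return out

rng = np.random.default_rng(0)
noisy = np.sin(np.linspace(0.0, 6.0 * np.pi, 600)) + 0.3 * rng.standard_normal(600)
smoothed = first_order_recursive_filter(noisy, mu=4.0)

extrema_before = count_local_extrema(noisy)
extrema_after = count_local_extrema(smoothed)
print(extrema_before, extrema_after)
```

The filter only reads the current sample and its own previous output, so it is time-causal, and the printed counts give a quick empirical check that smoothing does not add local extrema for this input.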

3.2 Classification of Scale-Space Kernels for Continuous Signals

Interestingly, the classes of scale-space kernels and temporal scale-space kernels can be completely classified based on classical results by Schoenberg and Karlin regarding the theory of variation diminishing linear transformations. Schoenberg studied this topic in a series of papers over about 20 years [81–87], and Karlin [36] then wrote an excellent monograph on the topic of total positivity.

Variation diminishing transformations. Summarizing main results from this theory in a form relevant to the construction of the scale-space concept for one-dimensional continuous signals [48, Sect. 3.5.1], let \(S^-(f)\) denote the number of sign changes in a function f

\[ S^-(f) = \sup V^-(f(t_1), f(t_2), \dots , f(t_J)), \tag{4} \]

where the supremum is extended over all sets \(t_1 < t_2 < \dots < t_J\) (\(t_j \in {\mathbb R}\)), J is arbitrary but finite, and \(V^-(v)\) denotes the number of sign changes in a vector v. Then, the transformation

\[ f_\mathrm{out}(\eta ) = \int _{\xi = -\infty }^{\infty } f_\mathrm{in}(\eta - \xi ) \, dG(\xi ), \tag{5} \]

where G is a distribution function (essentially the primitive function of a convolution kernel), is said to be variation diminishing if

\[ S^-(f_\mathrm{out}) \le S^-(f_\mathrm{in}) \tag{6} \]

holds for all continuous and bounded \(f_\mathrm{in}\). Specifically, the transformation (5) is variation diminishing if and only if G has a bilateral Laplace-Stieltjes transform of the form [85]

\[ \int _{\xi = -\infty }^{\infty } \mathrm{e}^{-s \xi } \, dG(\xi ) = C \, \mathrm{e}^{\gamma s^2 + \delta s} \prod _{i=1}^{\infty } \frac{\mathrm{e}^{a_i s}}{1 + a_i s} \tag{7} \]

for \(-c < \text{ Re }(s) < c\) and some \(c > 0\), where \(C \ne 0\), \(\gamma \ge 0\), \(\delta \) and \(a_i\) are real, and \(\sum _{i=1}^{\infty } a_i^2\) is convergent.

Classes of Continuous Scale-Space Kernels. Interpreted in the temporal domain, this result implies that for continuous signals, there are four primitive types of linear and shift-invariant smoothing transformations: convolution with the Gaussian kernel,

\[ h(\xi ) = \mathrm{e}^{-\gamma \xi ^2} \quad (\gamma > 0), \tag{8} \]

convolution with the truncated exponential functions,

\[ h(\xi ) = \begin{cases} \mathrm{e}^{-|\lambda | \xi } & \xi \ge 0 \\ 0 & \xi < 0 \end{cases} \qquad h(\xi ) = \begin{cases} \mathrm{e}^{|\lambda | \xi } & \xi \le 0 \\ 0 & \xi > 0 \end{cases} \tag{9} \]

as well as trivial translation and rescaling. Moreover, it means that a shift-invariant linear transformation is variation diminishing if and only if it can be decomposed into these primitive operations.

3.3 Temporal Scale-Space Kernels Over Continuous Temporal Domain

In the above expressions, the first class of scale-space kernels (8) corresponds to using a non-causal Gaussian scale-space concept over time, which may constitute a straightforward model for analysing pre-recorded temporal data in an offline setting where temporal causality is not critical and can be disregarded by the possibility of accessing the virtual future in relation to any pre-recorded time moment.

Adding temporal causality as a necessary requirement, and with additional normalization of the kernels to unit \(L_1\)-norm to leave a constant signal unchanged, it follows that the following family of truncated exponential kernels

\[ h_\mathrm{exp}(t;\; \mu _k) = \begin{cases} \frac{1}{\mu _k} \mathrm{e}^{-t/\mu _k} & t \ge 0 \\ 0 & t < 0 \end{cases} \tag{10} \]

constitutes the only class of time-causal scale-space kernels over a continuous temporal domain in the sense of guaranteeing both temporal causality and non-creation of new local extrema (or equivalently zero-crossings) with increasing scale [45, 66]. The Laplace transform of such a kernel is given by

\[ H_\mathrm{exp}(q;\; \mu _k) = \int _{t = -\infty }^{\infty } h_\mathrm{exp}(t;\; \mu _k) \, \mathrm{e}^{-qt} \, dt = \frac{1}{1 + \mu _k q} \tag{11} \]

and coupling K such kernels in cascade leads to a composed kernel

\[ h_\mathrm{composed}(\cdot ;\; \mu ) = *_{k=1}^{K} h_\mathrm{exp}(\cdot ;\; \mu _k) \tag{12} \]

having a Laplace transform of the form

\[ H_\mathrm{composed}(q;\; \mu ) = \prod _{k=1}^{K} \frac{1}{1 + \mu _k q}. \tag{13} \]

The composed kernel has temporal mean and variance

\[ m_K = \sum _{k=1}^{K} \mu _k, \qquad \tau _K = \sum _{k=1}^{K} \mu _k^2. \tag{14} \]

In terms of physical models, repeated convolution with such kernels corresponds to coupling a series of first-order integrators with time constants \(\mu _k\) in cascade

\[ \partial _t L(t;\; \tau _k) = \frac{1}{\mu _k} \left( L(t;\; \tau _{k-1}) - L(t;\; \tau _k) \right) \tag{15} \]

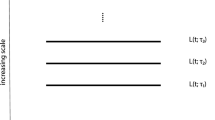

with \(L(t;\; 0) = f(t)\). In the sense of guaranteeing non-creation of new local extrema or zero-crossings over time, these kernels have a desirable and well-founded smoothing property that can be used for defining multi-scale observations over time. A constraint on this type of temporal scale-space representation, however, is that the scale levels are required to be discrete and that the scale-space representation does hence not admit a continuous scale parameter. Computationally, however, the scale-space representation based on truncated exponential kernels can be highly efficient and admits direct implementation in terms of hardware (or wetware) that emulates first-order integration over time, and where the temporal scale levels together also serve as a sufficient time-recursive memory of the past (see Fig. 1).
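
The time-recursive use of the scale levels as a memory of the past can be sketched in discrete time as follows. The update rule below is a backward-difference discretization of a first-order integrator with unit time step, which is an assumption of this example; the function name `integrator_cascade` and the time constants are hypothetical. The only state carried from one time step to the next is one value per temporal scale level.

```python
import numpy as np

def integrator_cascade(signal, time_constants):
    """Run a signal through first-order recursive filters coupled in cascade.

    Returns an array of shape (K, len(signal)); row k holds the signal after
    the first k + 1 smoothing stages. Assumes zero input before the first sample.
    """
    mus = np.asarray(time_constants, dtype=float)
    levels = np.zeros(len(mus))              # compact buffer: one value per scale level
    outputs = np.empty((len(mus), len(signal)))
    for n, sample in enumerate(signal):
        previous = float(sample)             # the first stage reads the raw signal
        for k, mu in enumerate(mus):
            levels[k] += (previous - levels[k]) / (1.0 + mu)
            previous = levels[k]             # output of stage k feeds stage k + 1
        outputs[:, n] = levels
    return outputs

mus = [0.5, 1.0, 2.0]
impulse = np.zeros(400)
impulse[0] = 1.0
response = integrator_cascade(impulse, mus)[-1]   # impulse response at the coarsest level

t = np.arange(len(response))
total_mass = response.sum()
mean_delay = (t * response).sum() / total_mass
print(total_mass, mean_delay, sum(mus))
```

For this discretization each stage has unit gain for constant signals and a temporal mean equal to its time constant, so the mean delay of the cascade should agree with the sum of the time constants up to truncation of the impulse response.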

3.4 Distributions of the Temporal Scale Levels

When implementing this temporal scale-space concept, a set of intermediate scale levels \(\tau _k\) has to be distributed between some minimum and maximum scale levels \(\tau _\mathrm{min} = \tau _1\) and \(\tau _\mathrm{max} = \tau _K\). Next, we will present three ways of discretizing the temporal scale parameter over K temporal scale levels.

Uniform Distribution of the Temporal Scales. If one chooses a uniform distribution of the intermediate temporal scales

\[ \tau _k = \frac{k}{K} \, \tau _\mathrm{max} \quad (1 \le k \le K), \tag{16} \]

then the time constants of all the individual smoothing steps are given by

\[ \mu _k = \sqrt{\frac{\tau _\mathrm{max}}{K}}. \tag{17} \]

Logarithmic Distribution of the Temporal Scales with Free Minimum Scale. It is more natural to distribute the temporal scale levels according to a geometric series, corresponding to a uniform distribution in units of effective temporal scale \(\tau _{\mathrm {eff}} = \log \tau \) [47]. If we have a free choice of what minimum temporal scale level \(\tau _\mathrm{min}\) to use, a natural way of parameterizing these temporal scale levels is by using a distribution parameter \(c > 1\)

\[ \tau _k = c^{2(k-K)} \tau _\mathrm{max} \quad (1 \le k \le K), \tag{18} \]

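which by Eq. (14) implies that the time constants of the individual first-order integrators should be given by

\[ \mu _1 = c^{1-K} \sqrt{\tau _\mathrm{max}}, \tag{19} \]

\[ \mu _k = \sqrt{\tau _k - \tau _{k-1}} = c^{k-K-1} \sqrt{c^2 - 1} \, \sqrt{\tau _\mathrm{max}} \quad (2 \le k \le K). \tag{20} \]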

Logarithmic Distribution of the Temporal Scales with Given Minimum Scale. If the temporal signal is on the other hand given at some minimum temporal scale \(\tau _\mathrm{min}\), we can instead determine \(c = \left( \frac{\tau _\mathrm{max}}{\tau _\mathrm{min}} \right) ^{\frac{1}{2(K-1)}}\) in (18) such that \(\tau _1 = \tau _\mathrm{min}\) and add \(K - 1\) temporal scales with \(\mu _k\) according to (20).
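
The three ways of distributing the temporal scale levels can be sketched in Python as follows. This is a minimal illustration under our reading of the text: the scale levels form a geometric series that ends at \(\tau _\mathrm{max}\), and each time constant is the square root of the variance added by its smoothing step. All function names and parameter values are hypothetical.

```python
import numpy as np

def uniform_time_constants(tau_max, K):
    """Equal time constants whose squares sum to tau_max."""
    return np.full(K, np.sqrt(tau_max / K))

def logarithmic_scale_levels(tau_max, K, c):
    """Geometric series of scale levels tau_k = c**(2 (k - K)) * tau_max, k = 1..K."""
    k = np.arange(1, K + 1)
    return c ** (2.0 * (k - K)) * tau_max

def time_constants_from_levels(taus):
    """mu_k = sqrt(tau_k - tau_{k-1}) with tau_0 = 0, since variances add in cascade."""
    return np.sqrt(np.diff(taus, prepend=0.0))

def distribution_parameter(tau_min, tau_max, K):
    """Value of c for which the finest level tau_1 equals a given tau_min."""
    return (tau_max / tau_min) ** (1.0 / (2.0 * (K - 1)))

tau_max, K, c = 1.0, 7, 2.0
mu_uniform = uniform_time_constants(tau_max, K)
taus_log = logarithmic_scale_levels(tau_max, K, c)
mu_log = time_constants_from_levels(taus_log)

print("uniform:     ", np.round(mu_uniform, 4))
print("logarithmic: ", np.round(mu_log, 4))
print("recovered c: ", distribution_parameter(taus_log[0], tau_max, K))
```

Both sets of time constants give the same composed variance, while the sum of the time constants, i.e. the temporal mean of the composed kernel, is smaller for the logarithmic distribution.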

Logarithmic Memory of the Past. When using a logarithmic distribution of the temporal scale levels according to either of the last two methods, the different levels in the temporal scale-space representation at increasing temporal scales will serve as a logarithmic memory of the past, with qualitative similarity to the mapping of the past onto a logarithmic time axis in the scale-time model by Koenderink [39]. Such a logarithmic memory of the past can also be extended to later stages in the visual hierarchy.

3.5 Temporal Receptive Fields

Figure 2 shows graphs of such temporal scale-space kernels that correspond to the same value of the composed variance, using either a uniform distribution or a logarithmic distribution of the intermediate scale levels.

Fig. 1 Electric wiring diagram consisting of a set of resistors and capacitors that emulate a series of first-order integrators coupled in cascade, if we regard the time-varying voltage \(f_\mathrm{in}\) as representing the time-varying input signal and the resulting output voltage \(f_\mathrm{out}\) as representing the time-varying output signal at a coarser temporal scale. Such first-order temporal integration can be used as a straightforward computational model for temporal processing in biological neurons (see also Koch [37, Chapts. 11–12] regarding physical modelling of the information transfer in dendrites of neurons)

In general, these kernels are all highly asymmetric for small values of K, whereas the kernels based on a uniform distribution of the intermediate temporal scale levels become gradually more symmetric around the temporal maximum as K increases. The degree of continuity at the origin and the smoothness of transition phenomena increase with K such that coupling of \(K \ge 2\) kernels in cascade implies a \(C^{K-2}\)-continuity of the temporal scale-space kernel. To guarantee at least \(C^1\)-continuity of the temporal derivative computation kernel at the origin, the order n of differentiation of a temporal scale-space kernel should therefore not exceed \(K - 2\). Specifically, the kernels based on a logarithmic distribution of the intermediate scale levels (i) have a higher degree of temporal asymmetry which increases with the distribution parameter c and (ii) allow for faster temporal dynamics compared to the kernels based on a uniform distribution.

In the case of a logarithmic distribution of the intermediate temporal scale levels, the choice of the distribution parameter c leads to a trade-off issue in that smaller values of c allow for a denser sampling of the temporal scale levels, whereas larger values of c lead to faster temporal dynamics and a more skewed shape of the temporal receptive fields with larger deviations from the shape of Gaussian derivatives of the same order (Fig. 2).
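
The trade-off governed by the distribution parameter c can be made concrete with a small numerical sketch. It relies on the standard fact that a cascade of truncated exponential kernels with time constants \(\mu _k\) has cumulants \(\kappa _n = (n-1)! \sum _k \mu _k^n\), and it uses the temporal mean as a simple proxy for the temporal delay, which is an assumption of this example. The function names are hypothetical; the values \(K = 7\) and \(\tau = 1\) follow the kernels shown in Fig. 2.

```python
import numpy as np

def log_time_constants(tau_max, K, c):
    """Time constants for scale levels tau_k = c**(2 (k - K)) * tau_max, k = 1..K."""
    k = np.arange(1, K + 1)
    taus = c ** (2.0 * (k - K)) * tau_max
    return np.sqrt(np.diff(taus, prepend=0.0))

def delay_and_skewness(mus):
    """Temporal mean and skewness of the composed kernel from its cumulants."""
    mean_delay = mus.sum()                    # kappa_1 = sum of mu_k
    variance = (mus ** 2).sum()               # kappa_2 = sum of mu_k**2
    third_cumulant = 2.0 * (mus ** 3).sum()   # kappa_3 = 2 * sum of mu_k**3
    return mean_delay, third_cumulant / variance ** 1.5

K, tau_max = 7, 1.0
results = {"uniform": delay_and_skewness(np.full(K, np.sqrt(tau_max / K)))}
for c in (np.sqrt(2.0), 2.0 ** 0.75, 2.0):
    results[f"c={c:.3f}"] = delay_and_skewness(log_time_constants(tau_max, K, c))

for name, (delay, skew) in results.items():
    print(f"{name:>9}: mean delay {delay:.3f}, skewness {skew:.3f}")
```

For a fixed composed variance, the mean delay shrinks and the skewness grows as c increases, which is the numerical counterpart of the faster but more asymmetric kernels described above.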

3.6 Computational Modelling of Biological Receptive Fields

Receptive Fields in the LGN Regarding visual receptive fields in the lateral geniculate nucleus (LGN), DeAngelis et al. [11, 12] report that most neurons (i) have approximately circular centre-surround organisation in the spatial domain and that (ii) most of the receptive fields are separable in space-time. There are two main classes of temporal responses for such cells: (i) a “non-lagged cell” is defined as a cell for which the first temporal lobe is the largest one (Fig. 3, left), whereas (ii) a “lagged cell” is defined as a cell for which the second lobe dominates (Fig. 3, right).

Equivalent kernels with temporal variance \(\tau = 1\) corresponding to the composition of \(K = 7\) truncated exponential kernels in cascade and their first- and second-order derivatives. Top row Equal time constants \(\mu \). Second row Logarithmic distribution of the scale levels for \(c = \sqrt{2}\). Third row Logarithmic distribution for \(c = 2^{3/4}\). Bottom row Logarithmic distribution for \(c = 2\)

Such temporal response properties are typical for first- and second-order temporal derivatives of a time-causal temporal scale-space representation. The spatial response, on the other hand, shows a high similarity to a Laplacian of a Gaussian, leading to an idealized receptive field model of the form [57, Eq. (108)]

Figure 3 shows results of modelling separable receptive fields in the LGN in this way, using a cascade of first-order integrators/truncated exponential kernels of the form (12) for modelling the temporal smoothing function \(h(t;\; \tau )\).

Computational modelling of space-time separable receptive field profiles in the lateral geniculate nucleus (LGN) as reported by DeAngelis et al. [12] using idealized spatio-temporal receptive fields of the form \(T(x, t;\; s, \tau ) = \partial _{x^{\alpha }} \partial _{t^{\beta }} g(x;\; s) \, h(t;\; \tau )\) according to Eq. (1) and with the temporal smoothing function \(h(t;\; \tau )\) modelled as a cascade of first-order integrators/truncated exponential kernels of the form (12). Left a “non-lagged cell” modelled using first-order temporal derivatives. Right a “lagged cell” modelled using second-order temporal derivatives. Parameter values: a \(h_{xxt}\): \(\sigma _x = 0.5^{\circ }\), \(\sigma _t = 40\) ms. b \(h_{xxtt}\): \(\sigma _x = 0.6^{\circ }\), \(\sigma _t = 60\) ms (Horizontal dimension: space x. Vertical dimension: time t)

Receptive Fields in V1 Concerning the neurons in the primary visual cortex (V1), DeAngelis et al. [11, 12] describe that their receptive fields are generally different from the receptive fields in the LGN in the sense that they are (i) oriented in the spatial domain and (ii) sensitive to specific stimulus velocities. Cells (iii) for which there are precisely localized “on” and “off” subregions with (iv) spatial summation within each subregion, (v) spatial antagonism between on- and off-subregions and (vi) whose visual responses to stationary or moving spots can be predicted from the spatial subregions are referred to as simple cells as discovered by Hubel and Wiesel [31–33]. In Lindeberg [57], an idealized model of such receptive fields was proposed of the form

where

-

\(\partial _{\varphi } = \cos \varphi \, \partial _{x_1} + \sin \varphi \, \partial _{x_2}\) and \(\partial _{\bot \varphi } = \sin \varphi \, \partial _{x_1} - \cos \varphi \, \partial _{x_2}\) denote spatial directional derivative operators in two orthogonal directions \(\varphi \) and \(\bot \varphi \),

-

\(m_1 \ge 0\) and \(m_2 \ge 0\) denote the orders of differentiation in the two orthogonal directions in the spatial domain with the overall spatial order of differentiation \(m = m_1 + m_2\),

-

\(v_1 \, \partial _{x_1} + v_2 \, \partial _{x_2} + \partial _t\) denotes a velocity-adapted temporal derivative operator

and the meanings of the other symbols are the same as explained in connection with Eq. (1).

Computational modelling of simple cells in the primary visual cortex (V1) as reported by DeAngelis et al. [12] using idealized spatio-temporal receptive fields of the form \(T(x, t;\; s, \tau , v) = \partial _{x^{\alpha }} \partial _{t^{\beta }} g(x - v t;\; s) \, h(t;\; \tau )\) according to Eq. (1) and with the temporal smoothing function \(h(t;\; \tau )\) modelled as a cascade of first-order integrators/truncated exponential kernels of the form (12). Left column Separable receptive fields corresponding to mixed derivatives of first- or second-order derivatives over space with first-order derivatives over time. Right column Inseparable velocity-adapted receptive fields corresponding to second- or third-order derivatives over space. Parameter values: a \(h_{xt}\): \(\sigma _x = 0.6^{\circ }\), \(\sigma _t = 60\) ms. b \(h_{xxt}\): \(\sigma _x = 0.6^{\circ }\), \(\sigma _t = 80\) ms. c \(h_{xx}\): \(\sigma _x = 0.7^{\circ }\), \(\sigma _t = 50\) ms, \(v = 0.007^{\circ }\)/ms. d \(h_{xxx}\): \(\sigma _x = 0.5^{\circ }\), \(\sigma _t = 80\) ms, \(v = 0.004^{\circ }\)/ms. (Horizontal axis: Space x in degrees of visual angle. Vertical axis: Time t in ms)

Figure 4 shows the result of modelling the spatio-temporal receptive fields of simple cells in V1 in this way, using the general idealized model of spatio-temporal receptive fields in Eq. (1) in combination with a temporal smoothing kernel obtained by coupling a set of first-order integrators or truncated exponential kernels in cascade. As can be seen from the figures, the proposed idealized receptive field models reproduce the qualitative shape of the neurophysiologically recorded biological receptive fields well.

These results complement the general theoretical model for visual receptive fields in Lindeberg [57] by (i) temporal kernels that have better temporal dynamics than the time-causal semigroup derived in Lindeberg [56], in that they decrease faster with time (exponentially instead of polynomially), and (ii) explicit modelling results and a theory (developed in more detail in the following sections) for choosing and parameterizing the intermediate discrete temporal scale levels in the time-causal model.

With regard to a possible biological implementation of this theory, the evolution properties of the presented scale-space models over scale and time are governed by diffusion and difference equations [see Eqs. (23–24) in the next section], which can be implemented by operations over neighbourhoods in combination with first-order integration over time. Hence, the computations can naturally be implemented in terms of connections between different cells. Diffusion equations are also used in mean field theory for approximating the computations that are performed by populations of neurons, see e.g. Omurtag et al. [76], Mattia and Guidice [73], Faugeras et al. [18].

By combination of the theoretical properties of these kernels regarding scale-space properties between receptive field responses at different spatial and temporal scales as well as their covariance properties under natural image transformations (described in more detail in the next section), the proposed theory can be seen as a both theoretically well-founded and biologically plausible model for time-causal and time-recursive spatio-temporal receptive fields.

3.7 Theoretical Properties of Time-Causal Spatio-Temporal Scale-Space

Under evolution of time and with increasing spatial scale, the corresponding time-causal spatio-temporal scale-space representation generated by convolution with kernels of the form (1) with specifically the temporal smoothing kernel \(h(t;\; \tau )\) defined as a set of truncated exponential kernels/first-order integrators in cascade (12) obeys the following system of differential/difference equations

with the difference operator \(\delta _{\tau }\) over temporal scale

Theoretically, the resulting spatio-temporal scale-space representation obeys similar scale-space properties over the spatial domain as the two other spatio-temporal scale-space models derived in Lindeberg [56–58] regarding (i) linearity over the spatial domain, (ii) shift invariance over space, (iii) semigroup and cascade properties over spatial scales, (iv) self-similarity and scale covariance over spatial scales so that for any uniform scaling transformation \((x', t')^T = (S x, t)^T\) the spatio-temporal scale-space representations are related by \(L'(x', t';\; s', \tau _k;\; {\varSigma }, v') = L(x, t;\; s, \tau _k;\; {\varSigma }, v)\) with \(s' = S^2 s\) and \(v' = S v\) and (v) non-enhancement of local extrema with increasing spatial scale.

If the family of receptive fields in Eq. (1) is defined over the full group of positive definite spatial covariance matrices \({\varSigma }\) in the spatial affine Gaussian scale-space [48, 56, 69], then the receptive field family also obeys (vi) closedness and covariance under time-independent affine transformations of the spatial image domain, \((x', t')^T = (A x, t)^T\) implying \(L'(x', t';\; s, \tau _k;\; {\varSigma }', v') = L(x, t;\; s, \tau _k;\; {\varSigma }, v)\) with \({\varSigma }' = A{\varSigma }A^T\) and \(v' = Av\), and as resulting from, e.g., local linearizations of the perspective mapping (with locality defined as over the support region of the receptive field). When using rotationally symmetric Gaussian kernels for smoothing, the corresponding spatio-temporal scale-space representation does instead obey (vii) rotational invariance.

Over the temporal domain, convolution with these kernels obeys (viii) linearity over the temporal domain, (ix) shift invariance over the temporal domain, (x) temporal causality, (xi) cascade property over temporal scales, (xii) non-creation of local extrema for any purely temporal signal. If using a uniform distribution of the intermediate temporal scale levels, the spatio-temporal scale-space representation obeys a (xiii) semigroup property over discrete temporal scales. Due to the finite number of discrete temporal scale levels, the corresponding spatio-temporal scale-space representation cannot, however, for general values of the time constants \(\mu _k\) obey full self-similarity and scale covariance over temporal scales. Using a logarithmic distribution of the temporal scale levels and an additional limit case construction to infinity, we will however show in Sect. 5 that it is possible to achieve (xiv) self-similarity (41) and scale covariance (49) over the discrete set of temporal scaling transformations \((x', t')^T = (x, c^j t)^T\) that precisely corresponds to mappings between any pair of discretized temporal scale levels as implied by the logarithmically distributed temporal scale parameter with distribution parameter c.

Over the composed spatio-temporal domain, these kernels obey (xv) positivity and (xvi) unit normalization in \(L_1\)-norm. The spatio-temporal scale-space representation also obeys (xvii) closedness and covariance under local Galilean transformations in space-time, in the sense that for any Galilean transformation \((x', t')^T = (x - ut, t)^T\) with two video sequences related by \(f'(x', t') = f(x, t)\), their corresponding spatio-temporal scale-space representations will be equal for corresponding parameter values \(L'(x', t';\; s, \tau _k;\; {\varSigma }, v') = L(x, t;\; s, \tau _k;\; {\varSigma }, v)\) with \(v' = v-u\).

If additionally the velocity value v and/or the spatial covariance matrix \({\varSigma }\) can be adapted to the local image structures in terms of Galilean and/or affine invariant fixed point properties [48, 56, 64, 69], then the spatio-temporal receptive field responses can additionally be made (xviii) Galilean invariant and/or (xix) affine invariant.

4 Temporal Dynamics of the Time-Causal Kernels

For the time-causal filters obtained by coupling truncated exponential kernels in cascade, there will be an inevitable temporal delay depending on the time constants \(\mu _k\) of the individual filters. A straightforward way of estimating this delay is by using the additive property of mean values under convolution \(m_K = \sum _{k=1}^K \mu _k\) according to (14). In the special case when all the time constants are equal \(\mu _k = \sqrt{\tau /K}\), this measure is given by

$$\begin{aligned} m_\mathrm{uni} = \sqrt{K \tau } \end{aligned}$$(26)

showing that the temporal delay increases if the temporal smoothing operation is divided into a larger number of smaller individual smoothing steps.

In the special case when the intermediate temporal scale levels are instead distributed logarithmically according to (18), with the individual time constants given by (19) and (20), this measure for the temporal delay is given by

with the limit value

when the number of filters tends to infinity.

By comparing Eqs. (26) and (27), we can specifically note that with increasing number of intermediate temporal scale levels, a logarithmic distribution of the intermediate scales implies shorter temporal delays than a uniform distribution of the intermediate scales.

Table 1 shows numerical values of these measures for different values of K and c. As can be seen, the logarithmic distribution of the intermediate scales allows for significantly faster temporal dynamics than a uniform distribution.
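The comparison between the two delay measures can be reproduced with a few lines of code. The sketch below is an illustration under stated assumptions rather than the computation behind Table 1: it assumes logarithmic scale levels \(\tau _k = c^{2(k-K)} \tau \) with \(\tau _0 = 0\) and time constants \(\mu _k = \sqrt{\tau _k - \tau _{k-1}}\), for which the sum of the time constants tends to \(\sqrt{(c+1)/(c-1)} \, \sqrt{\tau }\) by a geometric series. The function names are hypothetical.

```python
import math

def mean_delay_uniform(tau, K):
    # K equal time constants sqrt(tau / K) sum to sqrt(K * tau).
    return K * math.sqrt(tau / K)

def mean_delay_logarithmic(tau, K, c):
    # Assumed levels tau_k = c**(2 * (k - K)) * tau, with tau_0 = 0.
    levels = [0.0] + [c ** (2 * (k - K)) * tau for k in range(1, K + 1)]
    return sum(math.sqrt(levels[k] - levels[k - 1]) for k in range(1, K + 1))

def mean_delay_logarithmic_limit(tau, c):
    # Limit of the sum above when K tends to infinity (geometric series).
    return math.sqrt((c + 1.0) / (c - 1.0)) * math.sqrt(tau)

for K in (2, 4, 8, 16):
    print(K, mean_delay_uniform(1.0, K), mean_delay_logarithmic(1.0, K, 2.0))
print("limit", mean_delay_logarithmic_limit(1.0, 2.0))
```

The uniform measure grows without bound as \(\sqrt{K}\), whereas the logarithmic measure stays below its finite limit value for every K.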

Additional Temporal Characteristics Because of the asymmetric tails of the time-causal temporal smoothing kernels, temporal delay estimation by the mean value may however lead to substantial overestimates compared to, e.g., the position of the local maximum. To provide more precise characteristics, let us first consider the case of a uniform distribution of the intermediate temporal scales, for which a compact closed-form expression is available for the composed kernel and corresponding to the probability density function of the Gamma distribution

The temporal derivatives of these kernels relate to Laguerre functions (Laguerre polynomials \(p_n^{\alpha }(t)\) multiplied by a truncated exponential kernel) according to Rodrigues' formula:

Let us differentiate the temporal smoothing kernel

and solve for the position of the local maximum

Table 2 shows numerical values for the position of the local maximum for both types of time-causal kernels. As can be seen from the data, the temporal response properties are significantly faster for a logarithmic distribution of the intermediate scale levels compared to a uniform distribution and the difference increases rapidly with K. These temporal delay estimates are also significantly shorter than the temporal mean values, in particular for the logarithmic distribution.
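For the uniform distribution, the position of the maximum can be checked numerically. The sketch below assumes that the composed kernel has the Gamma-density form \(t^{K-1} \mathrm{e}^{-t/\mu } / (\mu ^K \, \Gamma (K))\) with \(\mu = \sqrt{\tau /K}\); setting its derivative to zero then gives \(t_{\max } = (K-1) \, \mu \). The function names and the grid resolution are illustrative choices, and the logarithmic case, for which no such compact expression is used here, is not covered.

```python
import math

def gamma_kernel(t, mu, K):
    """Composed kernel of K equal truncated exponentials (Gamma density)."""
    if t <= 0.0:
        return 0.0
    return t ** (K - 1) * math.exp(-t / mu) / (mu ** K * math.gamma(K))

def t_max_uniform(tau, K):
    """Closed-form position of the maximum: (K - 1) * mu, mu = sqrt(tau / K)."""
    return (K - 1) * math.sqrt(tau / K)

tau, K = 1.0, 7
mu = math.sqrt(tau / K)
grid = [i * 1e-4 for i in range(1, 60001)]           # t in (0, 6]
t_numeric = max(grid, key=lambda t: gamma_kernel(t, mu, K))
print(t_numeric, t_max_uniform(tau, K), math.sqrt(K * tau))
```

The last printed value is the temporal mean \(\sqrt{K \tau }\), which exceeds the position of the maximum, consistent with the observation that the mean overestimates the delay.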

If we consider a temporal event that occurs as a step function over time (e.g. a new object appearing in the field of view) and if the time of this event is estimated from the local maximum over time in the first-order temporal derivative response, then the temporal variation in the response over time will be given by the shape of the temporal smoothing kernel. The local maximum over time will occur at a time delay equal to the time at which the temporal kernel has its maximum over time. Thus, the position of the maximum over time of the temporal smoothing kernel is highly relevant for quantifying the temporal response dynamics.

5 The Scale-Invariant Time-Causal Limit Kernel

In this section, we will show that in the case of a logarithmic distribution of the intermediate temporal scale levels, it is possible to extend the previous temporal scale-space concept into a limit case that permits covariance under temporal scaling transformations, corresponding to closedness of the temporal scale-space representation under a compression or stretching of the temporal scale axis by any integer power of the distribution parameter c.

Concerning the need for temporal scale invariance of a temporal scale-space representation, one could argue that it differs from the need for spatial scale invariance in a spatial scale-space representation. Spatial scaling transformations always occur because of perspective scaling effects caused by variations in the distances between objects in the world and the observer and therefore always need to be handled by a vision system, whereas the temporal scale remains unaffected by the perspective mapping from the scene to the image.

Temporal scaling transformations are, however, nevertheless important because of physical phenomena or spatio-temporal events occurring faster or slower. This is analogous to another source of scale variability over the spatial domain, caused by objects in the world having different physical size. To handle such scale variabilities over the temporal domain, it is therefore desirable to develop temporal scale-space concepts that allow for temporal scale invariance.

Fourier Transform of Temporal Scale-Space Kernel When using a logarithmic distribution of the intermediate scale levels (18), the time constants of the individual first-order integrators are given by (19) and (20). Thus, the explicit expression for the Fourier transform obtained by setting \(q = i \omega \) in (11) is of the form

Characterization in Terms of Temporal Moments Although the explicit expression for the composed time-causal kernel may be somewhat cumbersome to handle for any finite value of K, we show in Appendix 1(a) how compact closed-form moment or cumulant descriptors of these time-causal scale-space kernels can be derived from a Taylor expansion of the Fourier transform. Specifically, the limit values of the first-order moment \(M_1\) and the higher order central moments up to order four when the number of temporal scale levels K tends to infinity are given by

and give a coarse characterization of the limit behaviour of these kernels essentially corresponding to the terms in a Taylor expansion of the Fourier transform up to order four. Following a similar methodology, explicit expressions for higher order moment descriptors can also be derived in an analogous fashion, from the Taylor coefficients of higher order, if needed for special purposes.

In Fig. 9 in Appendix 1(a), we show graphs of the corresponding skewness and kurtosis measures as functions of the distribution parameter c, showing that both these measures increase with the distribution parameter c. In Fig. 12 in Appendix 2, we provide a comparison between the behaviour of this limit kernel and the temporal kernel in Koenderink’s scale-time model, showing that although the temporal kernels in these two models to a first approximation share qualitatively similar properties in terms of their overall shape (see Fig. 11 in Appendix 2), they differ significantly in terms of their skewness and kurtosis measures.

The Limit Kernel By letting the number of temporal scale levels K tend to infinity, we can define a limit kernel \({\varPsi }(t;\; \tau , c)\) via the limit of the Fourier transform (33) according to (and with the indices relabelled to better fit the limit case):

By treating this limit kernel as an object by itself, which will be well defined because of the rapid convergence by the summation of variances according to a geometric series, interesting relations can be expressed between the temporal scale-space representations

obtained by convolution with this limit kernel.

Self-Similar Recurrence Relation for the Limit Kernel over Temporal Scales Using the limit kernel, an infinite number of discrete temporal scale levels are implicitly defined given the specific choice of one temporal scale \(\tau = \tau _0\):

Directly from the definition of the limit kernel, we obtain the following recurrence relation between adjacent scales:

and in terms of the Fourier transform:

Behaviour Under Temporal Rescaling Transformations From the Fourier transform of the limit kernel (38), we can observe that for any temporal scaling factor S, it holds that

Thus, the limit kernel transforms as follows under a scaling transformation of the temporal domain:

If we for a given choice of distribution parameter c rescale the input signal f by a scaling factor \(S = 1/c\) such that \(t' = t/c\), it then follows that the scale-space representation of \(f'\) at temporal scale \(\tau ' = \tau /c^2\)

will be equal to the temporal scale-space representation of the original signal f at scale \(\tau \)

Hence, under a rescaling of the original signal by a scaling factor c, a rescaled copy of the temporal scale-space representation of the original signal can be found at the next lower discrete temporal scale relative to the temporal scale-space representation of the original signal.

Applied recursively, this result implies that the temporal scale-space representation obtained by convolution with the limit kernel obeys a closedness property over all temporal scaling transformations \(t' = c^j t\) with temporal rescaling factors \(S = c^{j}\) (\(j \in {\mathbb Z}\)) that are integer powers of the distribution parameter c,

allowing for perfect scale invariance over the restricted subset of scaling factors that precisely matches the specific set of discrete temporal scale levels that is defined by a specific choice of the distribution parameter c. Based on this desirable and highly useful property, it is natural to refer to the limit kernel as the scale-invariant time-causal limit kernel.

Applied to the spatio-temporal scale-space representation defined by convolution with a velocity-adapted affine Gaussian kernel \(g(x-vt;\; s, {\varSigma })\) over space and the limit kernel \({\varPsi }(t;\; \tau , c)\) over time

the corresponding spatio-temporal scale-space representation will then under a scaling transformation of time \((x', t')^T = (x, c^j t)^T\) obey the closedness property

with \(\tau ' = c^{2j} \tau \) and \(v' = v/c^j\).

Self-Similarity and Scale Invariance of the Limit Kernel Combining the recurrence relations of the limit kernel with its transformation property under scaling transformations, it follows that the limit kernel can be regarded as truly self-similar over scale in the sense that (i) the scale-space representation at a coarser temporal scale (here \(\tau \)) can be recursively computed from the scale-space representation at a finer temporal scale (here \(\tau /c^2\)) according to (41), (ii) the representation at the coarser temporal scale is derived from the input in a functionally similar way as the representation at the finer temporal scale and (iii) the limit kernel and its Fourier transform are transformed in a self-similar way (44) and (43) under scaling transformations.
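The recurrence and scaling properties can be verified numerically in the Fourier domain. The following sketch assumes that the Fourier transform of the limit kernel is the infinite product \(\prod _{k=1}^{\infty } 1/(1 + i \, c^{-k} \sqrt{c^2-1} \, \sqrt{\tau } \, \omega )\), which is the form implied by first-order integrators with a logarithmic distribution of scale levels and total variance \(\tau \); the product is truncated after a fixed number of factors, and all names are hypothetical.

```python
import math

def limit_kernel_ft(omega, tau, c, n_terms=200):
    """Truncated product approximating the Fourier transform of the limit kernel."""
    value = complex(1.0, 0.0)
    for k in range(1, n_terms + 1):
        mu_k = c ** (-k) * math.sqrt(c * c - 1.0) * math.sqrt(tau)
        value /= complex(1.0, mu_k * omega)
    return value

def first_order_integrator_ft(omega, mu):
    """Fourier transform of a truncated exponential kernel with time constant mu."""
    return 1.0 / complex(1.0, mu * omega)

tau, c, omega = 4.0, 2.0, 0.7

# Recurrence: the kernel at scale tau equals one first-order integrator
# applied to the kernel at the next finer scale tau / c**2.
lhs = limit_kernel_ft(omega, tau, c)
mu_step = math.sqrt(c * c - 1.0) / c * math.sqrt(tau)
rhs = first_order_integrator_ft(omega, mu_step) * limit_kernel_ft(omega, tau / c ** 2, c)
recurrence_error = abs(lhs - rhs)

# Scaling: stretching time by S = c**j maps scale tau to S**2 * tau.
S = c ** 3
scaling_error = abs(limit_kernel_ft(omega / S, S * S * tau, c) - lhs)
print(recurrence_error, scaling_error)
```

Both errors are at the level of floating-point rounding, since the truncated factor in the recurrence has a time constant of order \(c^{-200}\).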

In these respects, the temporal receptive fields arising from temporal derivatives of the limit kernel share structurally similar mathematical properties with continuous wavelets [10, 30, 71, 75] and fractals [5, 6, 72], with the conceptually novel extension that the scaling behaviour and self-similarity over scale are achieved over a time-causal and time-recursive temporal domain.

6 Computational Implementation

The computational model for spatio-temporal receptive fields presented here is based on spatio-temporal image data that are assumed to be continuous over time. When implementing this model on sampled video data, the continuous theory must be transferred to discrete space and discrete time.

In this section, we describe how the temporal and spatio-temporal receptive fields can be implemented in terms of corresponding discrete scale-space kernels that possess scale-space properties over discrete spatio-temporal domains.

6.1 Classification of Scale-Space Kernels for Discrete Signals

In Sect. 3.2, we described how the class of continuous scale-space kernels over a one-dimensional domain can be classified based on classical results by Schoenberg regarding the theory of variation diminishing transformations as applied to the construction of discrete scale-space theory in Lindeberg [45] [48, Sect. 3.3]. To later map the temporal smoothing operation to theoretically well-founded discrete scale-space kernels, we shall in this section describe the corresponding classification results regarding scale-space kernels over a discrete temporal domain.

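Variation Diminishing Transformations Let \(v = (v_1, v_2, \dots , v_n)\) be a vector of n real numbers and let \(V^-(v)\) denote the (minimum) number of sign changes obtained in the sequence \(v_1, v_2, \dots , v_n\) if all zero terms are deleted. Then, based on a result by Schoenberg [84], the convolution transformation

$$\begin{aligned} f_\mathrm{out}(x) = \sum _{n=-\infty }^{\infty } c_n \, f_\mathrm{in}(x - n) \end{aligned}$$(50)

is variation diminishing, i.e.,

$$\begin{aligned} V^-(f_\mathrm{out}) \le V^-(f_\mathrm{in}) \end{aligned}$$(51)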

holds for all \(f_\mathrm{in}\) if and only if the generating function of the sequence of filter coefficients \(\varphi (z) = \sum _{n=-\infty }^{\infty } c_n z^n\) is of the form

where \(c > 0\), \(k \in {\mathbb Z}\), \(q_{-1}, q_1, \alpha _i, \beta _i, \gamma _i, \delta _i \ge 0\) and \(\sum _{i=1}^{\infty }(\alpha _i + \beta _i + \gamma _i + \delta _i) < \infty \). Interpreted over the temporal domain, this means that besides trivial rescaling and translation, there are three basic classes of discrete smoothing transformations:

-

two-point weighted average or generalized binomial smoothing

$$\begin{aligned} \begin{aligned} f_\mathrm{out}(x)&= f_\mathrm{in}(x) + \alpha _i \, f_\mathrm{in}(x - 1) \quad (\alpha _i \ge 0),\\ f_\mathrm{out}(x)&= f_\mathrm{in}(x) + \delta _i \, f_\mathrm{in}(x + 1) \quad (\delta _i \ge 0), \end{aligned} \end{aligned}$$(53)

-

moving average or first-order recursive filtering

$$\begin{aligned} \begin{aligned} f_\mathrm{out}(x)&= f_\mathrm{in}(x) + \beta _i \, f_\mathrm{out}(x - 1) \quad (0 \le \beta _i < 1), \\ f_\mathrm{out}(x)&= f_\mathrm{in}(x) + \gamma _i \, f_\mathrm{out}(x + 1) \quad (0 \le \gamma _i < 1), \end{aligned} \end{aligned}$$(54)

-

infinitesimal smoothing or diffusion as arising from the continuous semigroups made possible by the factor \(\mathrm{e}^{(q_{-1}z^{-1} + q_1z)}\).

To transfer the continuous first-order integrators derived in Sect. 3.3 to a discrete implementation, we shall in this treatment focus on the first-order recursive filters, which by additional normalization constitute both the discrete correspondence and a numerical approximation of time-causal and time-recursive first-order temporal integration (15).
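The variation diminishing property of the two time-causal primitives in (53) and (54) can be illustrated on a short test signal. The sketch below counts sign changes as in the definition of \(V^-\) and applies the causal two-point average and the causal first-order recursive filter with zero initial state; the function names and the test signal are made up for illustration.

```python
def sign_changes(v):
    """V^-(v): number of sign changes after deleting zero entries."""
    signs = [1 if x > 0 else -1 for x in v if x != 0]
    return sum(1 for a, b in zip(signs, signs[1:]) if a != b)

def two_point_average(f_in, alpha):
    """f_out(x) = f_in(x) + alpha * f_in(x - 1), with zero initial state."""
    padded = [0.0] + list(f_in)
    return [padded[i + 1] + alpha * padded[i] for i in range(len(f_in))]

def first_order_recursive(f_in, beta):
    """f_out(x) = f_in(x) + beta * f_out(x - 1), with zero initial state."""
    f_out, prev = [], 0.0
    for value in f_in:
        prev = value + beta * prev
        f_out.append(prev)
    return f_out

signal = [1.0, -2.0, 3.0, -1.0, 0.5, -0.5, 2.0, -3.0, 1.0, 0.0, -1.0]
print(sign_changes(signal),
      sign_changes(two_point_average(signal, 0.8)),
      sign_changes(first_order_recursive(signal, 0.6)))
```

Neither filter can produce more sign changes in the output than there are in the input, which is the discrete counterpart of non-creation of zero-crossings.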

6.2 Discrete Temporal Scale-Space Kernels Based on Recursive Filters

Given video data that have been sampled by some temporal frame rate r, the temporal scale \(\sigma _t\) in the continuous model in units of seconds is first transformed to a variance \(\tau \) relative to a unit time sampling

$$\begin{aligned} \tau = r^2 \, \sigma _t^2 \end{aligned}$$(55)

where r may typically be either 25 fps or 50 fps. Then, a discrete set of intermediate temporal scale levels \(\tau _k\) is defined by (18) or (16) with the difference between successive scale levels according to \(\Delta \tau _k = \tau _k - \tau _{k-1}\) (with \(\tau _0 = 0\)).

For implementing the temporal smoothing operation between two such adjacent scale levels (with the lower level in each pair of adjacent scales referred to as \(f_\mathrm{in}\) and the upper level as \(f_\mathrm{out}\)), we make use of a first-order recursive filter normalized to the form

and having a generating function of the form

which is a time-causal kernel and satisfies discrete scale-space properties of guaranteeing that the number of local extrema or zero-crossings in the signal will not increase with increasing scale [45, 66]. These recursive filters are the discrete analogue of the continuous first-order integrators (15). Each primitive recursive filter (56) has temporal mean value \(m_k = \mu _k\) and temporal variance \(\Delta \tau _k = \mu _k^2 + \mu _k\), and we compute \(\mu _k\) from \(\Delta \tau _k\) according to

$$\begin{aligned} \mu _k = \frac{\sqrt{1 + 4 \Delta \tau _k} - 1}{2} \end{aligned}$$(58)

By the additive property of variances under convolution, the discrete variances of the discrete temporal scale-space kernels will perfectly match those of the continuous model, whereas the mean values and the temporal delays may differ somewhat. If the temporal scale \(\tau _k\) is large relative to the temporal sampling density, the discrete model should be a good approximation in this respect.

By the time-recursive formulation of this temporal scale-space concept, the computations can be performed based on a compact temporal buffer over time, which contains the temporal scale-space representations at temporal scales \(\tau _k\) and with no need for storing any additional temporal buffer of what has occurred in the past to perform the corresponding temporal operations.
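The time-recursive computation can be sketched as follows. This is a minimal illustration, not the Matlab implementation referred to in the text. It assumes that the normalized first-order recursive filter has the update \(f_\mathrm{out}(t) = f_\mathrm{out}(t-1) + (f_\mathrm{in}(t) - f_\mathrm{out}(t-1))/(1 + \mu _k)\), which has unit sum, mean \(\mu _k\) and variance \(\mu _k^2 + \mu _k\), and that the scale levels follow \(\tau _k = c^{2(k-K)} \tau \) with \(\tau = (r \, \sigma _t)^2\). The class name and the parameter values are hypothetical.

```python
import math

def time_constant_from_variance(delta_tau):
    """Solve delta_tau = mu**2 + mu for the non-negative root mu."""
    return (math.sqrt(1.0 + 4.0 * delta_tau) - 1.0) / 2.0

class RecursiveTemporalCascade:
    """Time-recursive cascade; the only state is one value per scale level."""

    def __init__(self, scale_levels):
        previous = [0.0] + scale_levels[:-1]
        increments = [b - a for a, b in zip(previous, scale_levels)]
        self.mu = [time_constant_from_variance(d) for d in increments]
        self.state = [0.0] * len(scale_levels)

    def update(self, sample):
        """Feed one new sample; return the representations at all scale levels."""
        f_in = sample
        for k, mu_k in enumerate(self.mu):
            # Normalized first-order recursive step towards the current input.
            self.state[k] += (f_in - self.state[k]) / (1.0 + mu_k)
            f_in = self.state[k]
        return list(self.state)

frame_rate, sigma_t, K, c = 50.0, 0.1, 4, 2.0
tau = (frame_rate * sigma_t) ** 2            # variance in units of frames squared
levels = [c ** (2 * (k - K)) * tau for k in range(1, K + 1)]
cascade = RecursiveTemporalCascade(levels)
impulse_response = [cascade.update(1.0 if n == 0 else 0.0)[-1] for n in range(2000)]
```

Feeding a unit impulse gives the composed discrete kernel at the coarsest scale level; its variance matches \(\tau \) while its mean equals the sum of the discrete time constants, in agreement with the additive properties described above.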

Concerning the actual implementation of these operations computationally on signal processing hardware or software with built-in support for higher order recursive filtering, one can specifically note the following: If one is only interested in the receptive field response at a single temporal scale, then one can combine a set of \(K'\) first-order recursive filters (56) into a higher order recursive filter by multiplying their generating functions (57)

thus performing \(K'\) recursive filtering steps by a single call to the signal processing hardware or software. If using such an approach, it should be noted, however, that depending on the internal implementation of this functionality in the signal processing hardware/software, the composed call (59) may not be as numerically well-conditioned as the individual smoothing steps (56) which are guaranteed to dampen any local perturbations. In our Matlab implementation, for offline processing of this receptive field model, we have therefore limited the number of compositions to \(K' = 4\).
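The composition of several first-order steps into one higher order recursive filter can be sketched in plain Python. The example assumes that each first-order step obeys \((1 + \mu _k) \, f_\mathrm{out}(t) - \mu _k \, f_\mathrm{out}(t-1) = f_\mathrm{in}(t)\), so that the composed filter is an all-pole filter whose denominator is the product of the polynomials \((1 + \mu _k) - \mu _k z^{-1}\) in the delay operator. The helper names and the test signal are hypothetical; a library routine for recursive filtering could be substituted for `recursive_filter`.

```python
def poly_multiply(p, q):
    """Multiply two polynomials given as coefficient lists (lowest order first)."""
    out = [0.0] * (len(p) + len(q) - 1)
    for i, a in enumerate(p):
        for j, b in enumerate(q):
            out[i + j] += a * b
    return out

def composed_denominator(mus):
    """Product of the first-order denominators (1 + mu) - mu * z^{-1}."""
    den = [1.0]
    for mu in mus:
        den = poly_multiply(den, [1.0 + mu, -mu])
    return den

def recursive_filter(signal, den):
    """All-pole filter: sum_j den[j] * f_out(t - j) = f_in(t), zero initial state."""
    out = []
    for t, x in enumerate(signal):
        acc = x - sum(den[j] * out[t - j] for j in range(1, len(den)) if t - j >= 0)
        out.append(acc / den[0])
    return out

def cascade_filter(signal, mus):
    """Apply the first-order steps one after another."""
    for mu in mus:
        signal = recursive_filter(signal, [1.0 + mu, -mu])
    return signal

mus = [0.5, 1.0, 2.0, 4.0]
signal = [1.0, 0.0, -2.0, 3.0, 0.5, 0.0, 0.0, 1.0, -1.0, 2.0] + [0.0] * 40
stepwise = cascade_filter(signal, mus)
composed = recursive_filter(signal, composed_denominator(mus))
max_difference = max(abs(a - b) for a, b in zip(stepwise, composed))
```

For a small number of composed steps the two results agree to rounding error; the difference can grow for longer compositions, which is the conditioning concern raised above.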

6.3 Discrete Implementation of Spatial Gaussian Smoothing

To implement the spatial Gaussian operation on discrete sampled data, we first transform a spatial scale parameter \(\sigma _x\) in units of, e.g., degrees of visual angle to a spatial variance s relative to a unit sampling density according to

where p is the number of pixels per spatial unit, e.g., in terms of degrees of visual angle at the image centre. Then, we convolve the image data with the separable two-dimensional discrete analogue of the Gaussian kernel [45]

where \(I_n\) denotes the modified Bessel functions of integer order and which corresponds to the solution of the semi-discrete diffusion equation

where \(\nabla _5^2\) denotes the five-point discrete Laplacian operator defined by \((\nabla _5^2 f)(n_1, n_2) = f(n_1-1, n_2) + f(n_1+1, n_2) +f(n_1, n_2-1) + f(n_1, n_2+1)- 4 f(n_1, n_2)\). These kernels constitute the natural way to define a scale-space concept for discrete signals corresponding to the Gaussian scale-space over a symmetric domain.
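A one-dimensional version of the discrete analogue of the Gaussian kernel can be sketched with the standard library alone. The example assumes the kernel form \(T(n;\; s) = \mathrm{e}^{-s} I_n(s)\), evaluates the modified Bessel functions by their power series (adequate for moderate values of s only) and truncates the kernel once the neglected mass falls below a tolerance \(\varepsilon \). The function names are hypothetical; the two-dimensional separable kernel would be obtained by applying the same kernel along each dimension.

```python
import math

def modified_bessel_i(n, s, terms=60):
    """Power series for the modified Bessel function I_n(s), integer n >= 0."""
    term = (s / 2.0) ** n / math.factorial(n)
    total = term
    for m in range(terms - 1):
        # Ratio between consecutive series terms.
        term *= (s / 2.0) ** 2 / ((m + 1) * (m + n + 1))
        total += term
    return total

def discrete_gaussian(s, eps=1e-8):
    """Kernel T(n; s) = exp(-s) * I_n(s), truncated so the lost mass is below eps."""
    half = [math.exp(-s) * modified_bessel_i(0, s)]
    while half[0] + 2.0 * sum(half[1:]) < 1.0 - eps:
        half.append(math.exp(-s) * modified_bessel_i(len(half), s))
    return half[:0:-1] + half   # symmetric kernel for n = -N, ..., N

kernel = discrete_gaussian(4.0)
N = len(kernel) // 2
variance = sum((i - N) ** 2 * w for i, w in enumerate(kernel))
print(len(kernel), sum(kernel), variance)
```

The kernel sums to one up to the truncation tolerance and its variance is close to the scale parameter s, as expected for the solution of the semi-discrete diffusion equation.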

This operation can be implemented either by explicit spatial convolution with spatially truncated kernels

for small \(\varepsilon \) of the order \(10^{-8}\) to \(10^{-6}\) with mirroring at the image boundaries (adiabatic boundary conditions corresponding to no heat transfer across the image boundaries) or using the closed-form expression of the Fourier transform

Alternatively, to approximate rotational symmetry to a higher degree of accuracy, one can define the 2-D spatial discrete scale-space from the solution of [48, Sect. 4.3]

where \((\nabla _{\times }^2 f)(n_1, n_2) = \tfrac{1}{2} (f(n_1+1, n_2+1) + f(n_1+1, n_2-1) +f(n_1-1, n_2+1) + f(n_1-1, n_2-1)- 4 f(n_1, n_2))\) and specifically the choice \(\gamma = 1/3\) gives the best approximation of rotational symmetry. In practice, this operation can be implemented by first applying one step of diagonal separable discrete smoothing at scale \(s_{\times } = s/6\), followed by a Cartesian separable discrete smoothing at scale \(s_5 = 2s/3\), or by using a closed-form expression for the Fourier transform derived from the difference operators

6.4 Discrete Implementation of Spatio-Temporal Receptive Fields

For separable spatio-temporal receptive fields, we implement the spatio-temporal smoothing operation by separable combination of the spatial and temporal scale-space concepts in Sects. 6.2 and 6.3. From this representation, spatio-temporal derivative approximations are then computed from difference operators

expressed over the appropriate dimensions and with higher order derivative approximations constructed as combinations of these primitives, e.g. \(\delta _{xy} = \delta _x \, \delta _y\), \(\delta _{xxx} = \delta _x \, \delta _{xx}\), \(\delta _{xxt} = \delta _{xx} \, \delta _t\), etc. From the general theory in Lindeberg [46, 48], it follows that the scale-space properties for the original zero-order signal will be transferred to such derivative approximations, including a true cascade smoothing property for the spatio-temporal discrete derivative approximations

and preservation of certain algebraic properties of Gaussian derivatives (see [63] for additional statements).

For non-separable spatio-temporal receptive fields corresponding to a non-zero image velocity \(v = (v_1, v_2)^T\), we implement the spatio-temporal smoothing operation by first warping the video data \((x_1', x_2')^T = (x_1 - v_1 t, x_2 - v_2 t)^T\) using spline interpolation. Then, we apply separable spatio-temporal smoothing in the transformed domain and unwarp the result back to the original domain. Over a continuous domain, such an operation is equivalent to convolution with corresponding velocity-adapted spatio-temporal receptive fields, while being significantly faster in a discrete implementation than explicit convolution with non-separable receptive fields over three dimensions.
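The warp, smooth and unwarp procedure can be sketched as follows. This is a minimal illustration, assuming a video array indexed as `video[t, x2, x1]`, a velocity given in pixels per frame, and cubic spline interpolation via `scipy.ndimage.shift`; the function names and the `smooth_separable` callback are hypothetical and stand in for whichever separable spatio-temporal smoothing is used.

```python
import numpy as np
from scipy.ndimage import shift


def warp_video(video, v, inverse=False):
    """Resample frame t so that x' = x - v t (or undo this when inverse=True)."""
    v1, v2 = v
    sign = 1.0 if inverse else -1.0
    out = np.empty(video.shape, dtype=float)
    for t, frame in enumerate(video):
        # scipy's shift moves image content by +offset, so -v*t maps x to x - v*t.
        offset = (sign * v2 * t, sign * v1 * t)  # (rows = x2, columns = x1)
        out[t] = shift(frame.astype(float), offset, order=3, mode="nearest")
    return out


def velocity_adapted_smoothing(video, v, smooth_separable):
    """Warp, apply a separable smoothing callback, then unwarp."""
    warped = warp_video(video, v)
    smoothed = smooth_separable(warped)
    return warp_video(smoothed, v, inverse=True)


def moving_blob(frames=8, size=64, start=(20.0, 20.0), v=(2.0, 1.0), sigma=2.0):
    """Synthetic test video: a Gaussian blob translating with velocity v."""
    x2, x1 = np.mgrid[0:size, 0:size].astype(float)
    return np.stack([
        np.exp(-((x1 - start[0] - v[0] * t) ** 2
                 + (x2 - start[1] - v[1] * t) ** 2) / (2 * sigma**2))
        for t in range(frames)
    ])


video = moving_blob()
warped = warp_video(video, v=(2.0, 1.0))  # the blob is stationary after warping
```

After warping with the matching velocity, the moving pattern becomes stationary, so separable smoothing over space and time in the warped domain acts along the motion trajectory.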

7 Scale Normalization for Spatio-Temporal Derivatives

When computing spatio-temporal derivatives at different scales, some mechanism is needed for normalizing the derivatives with respect to the spatial and temporal scales, to make derivatives at different spatial and temporal scales comparable and to enable spatial and temporal scale selection.

7.1 Scale Normalization of Spatial Derivatives

For the Gaussian scale-space concept defined over a purely spatial domain, it can be shown that the canonical way of defining scale-normalized derivatives at different spatial scales s is according to [53]

\[ \partial _{\xi } = s^{\gamma _s/2} \, \partial _x, \quad \partial _{\eta } = s^{\gamma _s/2} \, \partial _y \]

where \(\gamma _s\) is a free parameter. Specifically, it can be shown [53, Sect. 9.1] that this notion of \(\gamma \)-normalized derivatives corresponds to normalizing the m:th order Gaussian derivatives \(g_{\xi ^m} = g_{\xi _1^{m_1} \xi _2^{m_2}}\) in N-dimensional image space to constant \(L_p\)-norms over scale

\[ \Vert g_{\xi ^m}(\cdot ;\; s) \Vert _p = \left( \int _{x \in {\mathbb R}^N} |g_{\xi ^m}(x;\; s)|^p \, dx \right)^{1/p} = G_{m,\gamma _s} \]

with

\[ p = \frac{1}{1 + \frac{|m|}{N} (1 - \gamma _s)} \tag{73} \]

where the perfectly scale-invariant case \(\gamma _s = 1\) corresponds to \(L_1\)-normalization for all orders \(|m| = m_1 + \dots + m_N\). In this paper, we will throughout use this approach for normalizing spatial differentiation operators with respect to the spatial scale parameter s.

7.2 Scale Normalization of Temporal Derivatives

If using a non-causal Gaussian temporal scale-space concept, scale-normalized temporal derivatives can be defined in a way analogous to scale-normalized spatial derivatives, as described in the previous section.

For the time-causal temporal scale-space concept based on first-order temporal integrators coupled in cascade, we can also define a corresponding notion of scale-normalized temporal derivatives

\[ \partial _{\zeta ^n} = \tau ^{n \gamma _{\tau }/2} \, \partial _{t^n} \tag{74} \]

which will be referred to as variance-based normalization reflecting the fact that the parameter \(\tau \) corresponds to the variance of the composed temporal smoothing kernel. Alternatively, we can determine a temporal scale normalization factor \(\alpha _{n,\gamma _{\tau }}(\tau )\)

\[ \partial _{\zeta ^n} = \alpha _{n,\gamma _{\tau }}(\tau ) \, \partial _{t^n} \tag{75} \]

such that the \(L_p\)-norm [with p determined as function of \(\gamma \) according to (73)] of the corresponding composed scale-normalized temporal derivative computation kernel \(\alpha _{n,\gamma _{\tau }}(\tau ) \, h_{t^n}\) equals the \(L_p\)-norm of some other reference kernel, where we here initially take the \(L_p\)-norm of the corresponding Gaussian derivative kernels

\[ \Vert \alpha _{n,\gamma _{\tau }}(\tau ) \, h_{t^n}(\cdot ;\; \tau ) \Vert _p = \alpha _{n,\gamma _{\tau }}(\tau ) \, \Vert h_{t^n}(\cdot ;\; \tau ) \Vert _p = \Vert g_{\xi ^n}(\cdot ;\; \tau ) \Vert _p = G_{n,\gamma _{\tau }}. \tag{76} \]

This latter approach will be referred to as \(L_p\)-normalization (see Footnote 3).

For the discrete temporal scale-space concept over discrete time, scale normalization factors for discrete \(l_p\)-normalization are defined in an analogous way with the only difference that the continuous \(L_p\)-norm is replaced by a discrete \(l_p\)-norm.

In the specific case when the temporal scale-space representation is defined by convolution with the scale-invariant time-causal limit kernel according to (39) and (38), it is shown in Appendix 3 that the corresponding scale-normalized derivatives become truly scale covariant under temporal scaling transformations \(t' = c^j t\) with scaling factors \(S = c^j\) that are integer powers of the distribution parameter c

between matching temporal scale levels \(\tau ' = c^{2j} \tau \). Specifically, for \(\gamma = 1\) corresponding to \(p = 1\), the scale-normalized temporal derivatives become fully scale invariant

7.3 Computation of Temporal Scale Normalization Factors

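For computing the temporal scale normalization factors

\[ \alpha _{n,\gamma _{\tau }}(\tau ) = \frac{\Vert g_{\xi ^n}(\cdot ;\; \tau ) \Vert _p}{\Vert h_{t^n}(\cdot ;\; \tau ) \Vert _p} = \frac{G_{n,\gamma _{\tau }}}{\Vert h_{t^n}(\cdot ;\; \tau ) \Vert _p} \]

in (75) for \(L_p\)-normalization according to (76), we compute the \(L_p\)-norms of the scale-normalized Gaussian derivatives, from closed-form expressions if \(\gamma = 1\) (corresponding to \(p = 1\))

\[ G_{1,1} = \sqrt{\frac{2}{\pi }} \approx 0.798, \quad G_{2,1} = \sqrt{\frac{8}{\pi e}} \approx 0.968, \]

\[ G_{3,1} = \sqrt{\frac{2}{\pi }} \left( 1 + \frac{4}{e^{3/2}} \right) \approx 1.510, \quad G_{4,1} = \frac{4 \sqrt{3}}{e^{3/2 + \sqrt{3/2}} \sqrt{\pi }} \left( \sqrt{3 - \sqrt{6}} \, e^{\sqrt{6}} + \sqrt{3 + \sqrt{6}} \right) \approx 2.801, \]

or for values of \(\gamma \ne 1\) by numerical integration. For computing the discrete \(l_p\)-norm of discrete temporal derivative approximations, we first (i) filter a discrete delta function by the corresponding cascade of first-order integrators to obtain the temporal smoothing kernel and then (ii) apply discrete derivative approximation operators to this kernel to obtain the corresponding equivalent temporal derivative kernel, (iii) from which the discrete \(l_p\)-norm is computed by straightforward summation.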
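Steps (i) to (iii) can be sketched as follows for the case \(\gamma = 1\), \(p = 1\). This is a minimal illustration under stated assumptions: the intermediate scale levels are taken as \(\tau _k = c^{2(k-K)} \tau \) (logarithmic distribution), each first-order recursive filter has a time constant \(\mu _k\) chosen so that its variance \(\mu _k^2 + \mu _k\) equals \(\tau _k - \tau _{k-1}\), and temporal derivatives are approximated by backward differences. The function names and the kernel length are hypothetical choices for this example.

```python
import numpy as np
from scipy.signal import lfilter

# L1-norms of the scale-normalized Gaussian derivatives for gamma = 1.
G = {1: np.sqrt(2.0 / np.pi), 2: np.sqrt(8.0 / (np.pi * np.e))}


def time_constants(tau, K, c):
    """Assumed logarithmic distribution: tau_k = c**(2 (k - K)) * tau."""
    tau_k = tau * c ** (2.0 * (np.arange(1, K + 1) - K))
    dtau = np.diff(tau_k, prepend=0.0)
    # Solve mu**2 + mu = dtau for the time constant of each recursive filter.
    return (np.sqrt(1.0 + 4.0 * dtau) - 1.0) / 2.0


def temporal_kernel(tau, K, c, length=4096):
    """(i) Filter a discrete delta function through the cascade."""
    h = np.zeros(length)
    h[0] = 1.0
    for mu in time_constants(tau, K, c):
        # y[t] = y[t-1] + (x[t] - y[t-1]) / (1 + mu)
        h = lfilter([1.0 / (1.0 + mu)], [1.0, -mu / (1.0 + mu)], h)
    return h


def normalization_factors(tau, K, c, n):
    """Return (variance-based factor, l_1-based factor) for order n in {1, 2}."""
    h = temporal_kernel(tau, K, c)
    # (ii) n-th order backward difference, treating h as zero before t = 0.
    dh = np.diff(h, n=n, prepend=np.zeros(n))
    # (iii) discrete l_1-norm by straightforward summation.
    l1 = np.abs(dh).sum()
    return tau ** (n / 2.0), G[n] / l1


variance_based, alpha = normalization_factors(tau=16.0, K=7, c=np.sqrt(2.0), n=1)
```

Comparing `variance_based` and `alpha` for different values of n, c and \(\tau \) gives a direct view of how much the two normalization methods differ in a given discrete setting.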

To illustrate how the choice of temporal scale normalization method may affect the results in a discrete implementation, Tables 3 and 4 show examples of temporal scale normalization factors computed in these ways by either (i) variance-based normalization \(\tau ^{n/2}\) according to (74) or (ii) \(L_p\)-normalization \(\alpha _{n,\gamma _{\tau }}(\tau )\) according to (75–76) for different orders of temporal differentiation n, different distribution parameters c and at different temporal scales \(\tau \), relative to a unit temporal sampling rate. The value \(c = \sqrt{2}\) corresponds to a natural minimum value of the distribution parameter from the constraint \(\mu _2 \ge \mu _1\), the value \(c = 2\) to a doubling scale sampling strategy as used in regular spatial pyramids and \(c = 2^{3/4}\) to a natural intermediate value between these two. Results for additional values of K are shown in [63].

Notably, the numerical values of the resulting scale normalization factors may differ substantially depending on the type of scale normalization method and the underlying number of first-order recursive filters that are coupled in cascade. Therefore, the choice of temporal scale normalization method warrants specific attention in applications where the relations between numerical values of temporal derivatives at different temporal scales may have critical influence.

Specifically, we can note that the temporal scale normalization factors based on \(L_p\)-normalization differ more from the scale normalization factors from variance-based normalization (i) in the case of a logarithmic distribution of the intermediate temporal scale levels compared to a uniform distribution, (ii) when the distribution parameter c increases within the family of temporal receptive fields based on a logarithmic distribution of the intermediate scale levels or (iii) when a very low number of recursive filters are coupled in cascade. In all three cases, the resulting temporal smoothing kernels become more asymmetric and hence differ more from the symmetric Gaussian model.

On the other hand, with increasing values of K, the numerical values of the scale normalization factors converge much faster to their limit values when using a logarithmic distribution of the intermediate scale levels compared to using a uniform distribution. Depending on the value of the distribution parameter c, the scale normalization factors do reasonably well approach their limit values after \(K = 4\) to \(K = 8\) scale levels, whereas much larger values of K would be needed if using a uniform distribution. The convergence rate is faster for larger values of c.

7.4 Measuring the Deviation from the Scale-Invariant Time-Causal Limit Kernel

To quantify how good an approximation a time-causal kernel with a finite number of K scale levels is to the limit case when the number of scale levels K tends to infinity, let us measure the relative deviation of the scale normalization factors from the limit kernel according to

\[ \varepsilon _n(\tau ) = \frac{\left| \alpha _n(\tau ) - \alpha _n(\tau )|_{K \rightarrow \infty } \right|}{\alpha _n(\tau )|_{K \rightarrow \infty }}. \]

Table 5 shows numerical estimates of this relative deviation measure for different values of K from \(K = 2\) to \(K = 32\) for the time-causal kernels obtained from a uniform vs. a logarithmic distribution of the scale values. From the table, we can first note that the convergence rate with increasing values of K is significantly faster when using a logarithmic vs. a uniform distribution of the intermediate scale levels.

Not even \(K = 32\) scale levels are sufficient to drive the relative deviation measure below \(1~\%\) for a uniform distribution, whereas the corresponding deviation measures are down to machine precision when using \(K = 32\) levels for a logarithmic distribution. When using \(K = 4\) scale levels, the relative deviation measure is down to \(10^{-2}\) to \(10^{-4}\) for a logarithmic distribution. If using \(K = 8\) scale levels, the relative deviation measure is down to \(10^{-4}\) to \(10^{-8}\) depending on the value of the distribution parameter c and the order n of differentiation.
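The following minimal sketch indicates how such a relative deviation measure could be estimated numerically for a logarithmic distribution and \(l_1\)-normalization. It makes several assumptions that are not taken from the text above: the limit kernel is approximated by a cascade with `K_ref = 32` levels, the scale levels follow \(\tau _k = c^{2(k-K)} \tau \), each recursive filter has variance \(\mu _k^2 + \mu _k = \tau _k - \tau _{k-1}\), and derivatives are approximated by backward differences. Since the normalization factor is a reference constant divided by the \(l_1\)-norm of the derivative kernel, the reference constant cancels in the ratio.

```python
import numpy as np
from scipy.signal import lfilter


def l1_norm_of_derivative_kernel(tau, K, c, n, length=4096):
    """l_1-norm of the n-th order backward difference of the cascade kernel."""
    tau_k = tau * c ** (2.0 * (np.arange(1, K + 1) - K))
    dtau = np.diff(tau_k, prepend=0.0)
    # Numerically stable root of mu**2 + mu = dtau (also for very small dtau).
    mu = 2.0 * dtau / (1.0 + np.sqrt(1.0 + 4.0 * dtau))
    h = np.zeros(length)
    h[0] = 1.0
    for m in mu:
        h = lfilter([1.0 / (1.0 + m)], [1.0, -m / (1.0 + m)], h)
    return np.abs(np.diff(h, n=n, prepend=np.zeros(n))).sum()


def relative_deviation(tau, K, c, n, K_ref=32):
    """|alpha_K - alpha_ref| / alpha_ref with alpha proportional to 1 / l1-norm."""
    ref = l1_norm_of_derivative_kernel(tau, K_ref, c, n)
    val = l1_norm_of_derivative_kernel(tau, K, c, n)
    return abs(ref / val - 1.0)


deviations = {K: relative_deviation(16.0, K, np.sqrt(2.0), n=1) for K in (2, 4, 8)}
```

The numerical values obtained this way depend on the temporal scale, the sampling rate and the choice of `K_ref`, so they should be read as indicative of the convergence trend rather than as a reproduction of Table 5.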

From these results, we can conclude that one should not use too low a number of recursive filters coupled in cascade when computing temporal derivatives. Our recommendation is to use a logarithmic distribution with a minimum of four recursive filters for derivatives up to order two at finer scales and a larger number of recursive filters at coarser scales. When performing computations at a single temporal scale, we often use \(K = 7\) or \(K = 8\) as default.

8 Spatio-Temporal Feature Detection

In the following, we shall apply the above theoretical framework for separable time-causal spatio-temporal receptive fields for computing different types of spatio-temporal features, defined from spatio-temporal derivatives of different spatial and temporal orders, which may additionally be combined into composed (linear or non-linear) differential expressions.

8.1 Partial Derivatives

A most basic approach is to first define a spatio-temporal scale-space representation \(L :{\mathbb R}^2 \times {\mathbb R}\times {\mathbb R}_+ \times {\mathbb R}_+\) from any video data \(f :{\mathbb R}^2 \times {\mathbb R}\) and then define partial derivatives of any spatial and temporal orders \(m = (m_1, m_2)\) and n at any spatial and temporal scales s and \(\tau \) according to

\[ L_{x_1^{m_1} x_2^{m_2} t^n} = \partial _{x_1^{m_1} x_2^{m_2} t^n} \left( T(\cdot , \cdot , \cdot ;\; s, \tau ) * f(\cdot , \cdot , \cdot ) \right) \]

leading to a spatio-temporal N-jet representation of any order

\[ \{ L_x, L_y, L_t, L_{xx}, L_{xy}, L_{yy}, L_{xt}, L_{yt}, L_{tt}, \dots \}. \]

Figure 5 shows such kernels up to order two in the case of a 1+1-D space-time.

8.2 Directional Derivatives

By combining spatial directional derivative operators over any pair of orthogonal directions \(\partial _{\varphi } = \cos \varphi \, \partial _x + \sin \varphi \, \partial _y\) and \(\partial _{\bot \varphi } = \sin \varphi \, \partial _x - \cos \varphi \, \partial _y\) and velocity-adapted temporal derivatives \(\partial _{t_v} = \partial _t + v_x \, \partial _x + v_y \, \partial _y\) over any motion direction \(v = (v_x, v_y, 1)\), a filter bank of spatio-temporal derivative responses can be created

\[ L_{\varphi ^{m_1} \bot \varphi ^{m_2} t_v^n} = \partial _{\varphi }^{m_1} \, \partial _{\bot \varphi }^{m_2} \, \partial _{t_v}^n L \]

for different sampling strategies over image orientations \(\varphi \) and \(\bot \varphi \) in image space and over motion directions v in space-time (see Fig. 6 for illustrations of such kernels up to order two in the case of a 1+1-D space-time).

Fig. 5 Space-time separable kernels \(T_{x^{m}t^{n}}(x, t;\; s, \tau ) = \partial _{x^m t^n} (g(x;\; s) \, h(t;\; \tau ))\) up to order two obtained as the composition of Gaussian kernels over the spatial domain x and a cascade of truncated exponential kernels over the temporal domain t with a logarithmic distribution of the intermediate temporal scale levels (\(s = 1\), \(\tau = 1\), \(K = 7\), \(c = \sqrt{2}\)) (Horizontal axis: space x. Vertical axis: time t)

Fig. 6 Velocity-adapted spatio-temporal kernels \(T_{x^{m}t^{n}}(x, t;\; s, \tau , v) = \partial _{x^m t^n} (g(x - vt;\; s) \, h(t;\; \tau ))\) up to order two obtained as the composition of Gaussian kernels over the spatial domain x and a cascade of truncated exponential kernels over the temporal domain t with a logarithmic distribution of the intermediate temporal scale levels (\(s = 1\), \(\tau = 1\), \(K = 7\), \(c = \sqrt{2}\), \(v = 0.5\)) (Horizontal axis: space x. Vertical axis: time t)

Note that as long as the spatio-temporal smoothing operations are performed based on rotationally symmetric Gaussians over the spatial domain and using space-time separable kernels over space-time, the responses to these directional derivative operators can be directly related to corresponding partial derivative operators by mere linear combinations. If extending the rotationally symmetric Gaussian scale-space concept to an anisotropic affine Gaussian scale-space and/or if we make use of non-separable velocity-adapted receptive fields over space-time in a spatio-temporal scale space, to enable true affine and/or Galilean invariances, such linear relationships will, however, no longer hold in a similar form.
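For the purely spatial part, the linear relationship between directional and partial derivatives can be sketched as follows. This is a minimal illustration, assuming a sampled Gaussian from `scipy.ndimage.gaussian_filter` as a stand-in for the discrete analogue of the Gaussian kernel and central differences from `np.gradient` as derivative approximations; the function names are hypothetical.

```python
import numpy as np
from scipy.ndimage import gaussian_filter


def partial_derivatives(f, s):
    """First- and second-order partial derivatives at spatial scale s (variance)."""
    L = gaussian_filter(np.asarray(f, dtype=float), sigma=np.sqrt(s), mode="mirror")
    Ly, Lx = np.gradient(L)      # axis 0 is y (rows), axis 1 is x (columns)
    Lxy, Lxx = np.gradient(Lx)   # derivatives of L_x along y and along x
    Lyy = np.gradient(Ly, axis=0)
    return {"x": Lx, "y": Ly, "xx": Lxx, "xy": Lxy, "yy": Lyy}


def directional_derivatives(d, phi):
    """Combine partial derivatives into derivatives along phi and its orthogonal."""
    c, s = np.cos(phi), np.sin(phi)
    return {
        # d_phi = cos(phi) d_x + sin(phi) d_y, d_perp = sin(phi) d_x - cos(phi) d_y
        "phi": c * d["x"] + s * d["y"],
        "perp": s * d["x"] - c * d["y"],
        "phiphi": c * c * d["xx"] + 2 * c * s * d["xy"] + s * s * d["yy"],
        "perpperp": s * s * d["xx"] - 2 * c * s * d["xy"] + c * c * d["yy"],
        "phiperp": c * s * (d["xx"] - d["yy"]) + (s * s - c * c) * d["xy"],
    }


y, x = np.mgrid[0:64, 0:64].astype(float)
image = x**2 + 3.0 * x * y
responses = directional_derivatives(partial_derivatives(image, s=4.0), phi=np.pi / 6)
```

Because the directional responses are linear combinations of a fixed set of partial derivatives, a whole bank of orientations can be obtained from one pass of smoothing and differencing.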

For the image orientations \(\varphi \) and \(\bot \varphi \), it is for purely spatial derivative operations, in the case of rotationally symmetric smoothing over the spatial domain, in principle sufficient to sample the image orientation according to a uniform distribution on the semi-circle using at least \(|m|+1\) directional derivative filters for derivatives of order |m|.

For temporal directional derivative operators to make full sense in a geometrically meaningful manner (covariance under Galilean transformations of space-time), they should however also be combined with Galilean velocity adaptation of the spatio-temporal smoothing operation in a corresponding direction v according to (1) [42, 44, 51, 56]. Regarding the distribution of such motion directions \(v = (v_x, v_y)\), it is natural to distribute the magnitudes \(|v| = \sqrt{v_x^2 + v_y^2}\) according to a self-similar distribution