Abstract

We discuss models and data of crowd disasters, crime, terrorism, war and disease spreading to show that conventional recipes, such as deterrence strategies, are often not effective and sufficient to contain them. Many common approaches do not provide a good picture of the actual system behavior, because they neglect feedback loops, instabilities and cascade effects. The complex and often counter-intuitive behavior of social systems and their macro-level collective dynamics can be better understood by means of complexity science. We highlight that a suitable system design and management can help to stop undesirable cascade effects and to enable favorable kinds of self-organization in the system. In such a way, complexity science can help to save human lives.

Similar content being viewed by others

1 Introduction

Over the past decades complexity science has evolved from purely theoretical contributions towards applications with real-life relevance. In this paper, we will pay particular attention to the problem of collective dynamics in human populations [1–3]. Topics addressed range from crowd disasters [4] to crime [5, 6], terrorism [7–9], wars [10–12], and the epidemic spreading of diseases [13, 14]. These fields increasingly include quantitative, realistic, and even predictive models, bringing together statistical data analysis, modeling efforts, analytical approaches, and laboratory experiments. These advances make the research described in this paper increasingly relevant for real-life situations, creating the opportunity to employ scientific knowledge to save human lives.

The diverse problems we consider exhibit systemic instabilities in which control of the macro-level, the collective dynamics, is lost even though there seems to be a reasonable level of control at the micro-level of individual system components [15]. In traffic flows, for example, researchers have found that “phantom” traffic jams can occur in the absence of accidents or bottlenecks [16, 17]. Despite the drivers’ best efforts to prevent traffic jams, they are unavoidable if the vehicle density crosses a certain threshold [18, 19]. At high densities, small variations in speed are amplified from one driver to the next, causing a cascade effect. This ends in a situation undesirable for all—one in which every driver is stopped. What, then, can be done to prevent these systemic instabilities? We suggest here that a better scientific understanding, based on methods from complexity science, can help. For example, despite the complexity of traffic patterns [20], they can be described by analytic and predictive models [18]. This has enabled traffic assistance systems based on a distributed control approach and bottom-up self-organization, which can be used for effective congestion avoidance [21, 22]. Surprisingly, well-designed self-regulation in this case outperforms classical top down control.

The focus of this paper will be to give an overview of the new scientific understanding of crowd disasters, crime, terrorism, wars, and epidemics gained through a complex systems perspective. We will explain why “linear thinking” and classical control approaches fail to overcome or mitigate such problems efficiently due to the non-linear, non-equilibrium and therefore often counter-intuitive nature of these problems. Furthermore, we outline how complexity science can provide better solutions to some long-standing problems than conventional approaches. In particular, we will illustrate how this can contribute to addressing a number of serious issues still plaguing society despite better-than-ever science, measurement opportunities, information systems, and technology.

2 Crowd Disasters and How to Avoid Them

Tragic crowd disasters repeatedly occur despite strict codes and guidelines for the organization of mass events. In fact, the number of crowd disasters and the overall number of fatalities are on the rise, probably as a result of the increasing frequency and size of mass events (see Fig. 1). The classical approach to the avoidance of crowd disasters is reflected in the concept of “crowd control”, which assumes that it is necessary to “make people behave”. The concept bears some resemblance to how police sometimes handle violent demonstrations and riots. For example, tear gas and police cordons are used with the intention to gain control over crowds. However, there is evidence that such measures, which are usually intended to improve the safety of the crowd, may also unintentionally deteriorate a situation. For example, the use of tear gas may have played a significant role in the crowd disasters in Lima, Peru (1998), Durban, South Africa (2000), Lisbon, Portugal (2000), Harare, Zimbabwe (2000), and Chicago, Illinois (2003) [23].

Our approach in the following is to clarify the cause of crowd disasters and then to discuss simple rules to improve crowd safety, oriented at giving more control to the individuals (“empowerment”). This strategy can be best described as creating a system design (“institutional setting”) enabling all individuals and stakeholders to make a beneficial contribution to the proper functioning of the system—here mass events.

2.1 Crowd Turbulence

Crowd disasters are examples of situations in which people are killed by other people, even though typically nobody wants to harm anybody. To explain the reasons for crowd disasters, concepts such as “pushing”, “mass panic”, “stampede”, “crowd crushes” or “trampling” have often been used. However, such views tend to blame the visitors of mass events, thereby preventing the proper understanding of crowd disasters and their avoidance in the future. In fact, social order will generally not break down during crowd disasters [24] and it has been found that extreme events may lead to an emergent collective identity that will result in increased solidarity with strangers [25] rather than the opposite.

-

1.

The term “pushing” suggests that people are relentlessly pushing towards their destination, disregarding the situation of others. However, when the density in the crowd is very high, even inadvertent body movements will cause physical interactions with others. The forces transmitted by such involuntary body interactions may add up from one body to the next, thereby causing a situation where people are unintentionally pushed around in the crowd. This situation is hard to distinguish from intentional pushing.

-

2.

Fatalities during a crowd disaster are often perceived as the result of a “crowd crush”, i.e. a situation in which the pressure on human bodies becomes so high that it causes deadly injuries. Such a situation may arise in the case of a “stampede”, i.e. people desperately rushing into one direction but then facing a bottleneck, such that the available space per person is increasingly reduced, until the density in the crowd becomes life-threatening. For example, the crowd disaster in Minsk, Belarus (1999), was caused by people fleeing into a subway station from heavy rain.

-

3.

Another explanation of crowd disasters assumes a “state of (psychological) panic”, which makes individuals behave in an irrational and relentless way, such that people are killed. An example of this kind may be the crowd disaster in Baghdad, Iraq (2005), which was triggered by rumours regarding an imminent suicide bomber. The assumption of mass panic interprets fatalities in crowd disasters similar to manslaughter by a rioting mob—an interpretation, which may warrant the use of tear gas or police cordons to control an “outraged crowd”.

In sharp contrast to this, it turns out that many crowd disasters do not result from a “stampede” or “mass panic” [26]. Prior to 2007, crowd-flow theory generally assumed a state of equilibrium in the crowd, where each level of crowd density (1/m\(^2\)) could be mapped to a corresponding value of the crowd flow (1/m/s). This assumption turned out to be approximately correct for low-to-medium levels of crowd density. However, it was later found [27] that at very high density the crowd is driven far from equilibrium.

In many cases, fatalities result from a phenomenon called “crowd turbulence”, which occurs when the density in the crowd is so high that bodies automatically touch each other. As a result, physical forces are transmitted from one body to another—a process that is unavoidable under such conditions. These forces may add up and create force chains that cause sudden subsequent pushes from various directions, which can usually not be anticipated. Eventually, the individuals are pushed so much that one or some of them may stumble and fall to the ground. This creates a “hole” in the crowd, which breaks the balance of forces for the surrounding people: they are pushed from behind, but not anymore from the front, such that they are either forced to step on fallen persons (“trampling”) or they will also fall. Due to this domino effect, such “holes” eventually grow bigger and bigger (a situation that may be coined “black hole effect”). This explains the phenomenon of piles of people, which are often identified as the causes of crowd disasters and interpreted as instances of trampling. Under such conditions, it is extremely difficult for individuals to get back on their feet and people on the ground eventually die of suffocation.

The occurrence of “crowd turbulence” can be understood and reproduced in computer simulations by means of force-based models. Generally speaking, the behavior of a pedestrian results from two distinct kinds of mechanisms:

-

1.

The cognitive processes [28] used by a pedestrian during interactions with other individuals, which apply when people undertake avoidance manoeuvres, choose an avoidance side, plan a way to their destination, or coordinate their movements with others.

-

2.

The physical pressures resulting from body contacts with neighboring individuals, which apply mostly in situations of overcrowding and are responsible for the phenomenon of crowd turbulence.

While the first category of interactions can be successfully described by means of a variety of methods (e.g. social forces, cognitive heuristics, cellular automata), the second type of interactions necessarily calls for force-based models, as it describes the result of unintentional movements due to physical pressures exerted by densely packed bodies.

Therefore, crowd turbulence can be described by means of a contact force \(\vec {f}_{ij}\) exerted by a pedestrian \(j\) on another pedestrian \(i\) defined as:

Herein, \(\vec {n}_{ij}\) is the normalized vector pointing from pedestrian \(j\) to \(i\), and \(d_{ij}\) is the distance between the pedestrians’ centres of mass [29]. The parameter \(k\) indicates the strength of between-body repulsion force. The function \(g(x)\) is defined as

For simplicity, the projection of the pedestrian’s body on the horizontal plane is represented by a circle of radius \(r_i = m_i/320\), where \(m_i\) represents the mass of pedestrian \(i\). The physical interaction with a wall \(W\) is represented analogously by another contact force:

Here \(d_{iW}\) is the distance to the wall \(W\) and \(\vec {n}_{iW}\) is the direction perpendicular to it. Therefore, both contact forces \(\vec {f}_{ij}\) and \(\vec {f}_{iW}\) are nonzero only when the pedestrian \(i\) is in physical contact with a wall or another individual. Under normal walking conditions (that is, when no body contacts occur), the movement of pedestrians is determined by strategic and cognitive processes that can be described by means of several distinct methods such as social forces [30] or cognitive heuristics [28]. Nevertheless, the method that is used to describe the free movements of pedestrians has little effect on the dynamics that emerge at extreme densities, since pedestrians movements are mostly unintentional in situations of overcrowding. Describing such situations we may thus use a generic component \(\vec {f}_{i}^{0}\) describing how the pedestrian \(i\) would move under normal walking conditions. The resulting acceleration equation in this case then reads

where \(\vec {v}_{i}\) denotes the speed of pedestrian \(i\), where the component \(\vec {f}_{i}^{0}\) is negligible under extremely crowded conditions. The acceleration equation can be solved together with the usual equation of motion

where \(\vec {x}_{i}\) denotes the location of pedestrian \(i\) at time \(t\).

Computer simulations of the above model in crowded situations, where physical interactions dominate over intentional movements, give rise to global breakdowns of coordination, where strongly fluctuating and uncontrollable patterns of motion occur. Crowd turbulence is particularly likely around bottlenecks, where local increases of the pedestrian density enhance the propagation of physical pressures from one individual to another. In particular, the unbalanced pressure distribution results in sudden stress releases and earthquake-like mass displacements of many pedestrians in all possible directions, which is well approximated by a power law with an exponent of \(1.95\) (see Fig. 2). This result is in good agreement with empirical observations of crowd turbulence, which exhibit a power law distribution with exponent \(2.01\) [27].

Result of computer simulations of the above model indicating a power law distribution of displacements at extreme density. The displacement of a pedestrian \(i\) is the change of location of that individual between two subsequent stops where the speed of \(i\) is less than 0.05 m/s. Simulations were conducted at a density of 6 pedestrians per m\(^2\), corresponding to a unidirectional flow of 360 pedestrians in a corridor of length 10 m and width 6 m with a bottleneck of width 4 m. Model parameters were set to \(k=5\times 10^3\) and the body masses \(m_i\) were uniformly distributed in the interval [60, 100 kg]

2.2 Simple Rules for Safety

Preparing for mass events is a major challenge, as there is a host of things that can go wrong over the course of an event (for a recent example see Ref. [26]). Therefore, the overall strategy is to develop and implement a suitable system design, which can be well operated under normal, but also challenging conditions. This includes institutional settings (e.g. spatial boundary conditions) and the way interactions between different stakeholders are organized. All elements of the plan, should be exercised before, including contingency plans. In case of the reorganization of the annual pilgrimage in Saudi-Arabia (“Hajj”), for example, many elements were combined to increase the level of safety. This included [31]:

-

1.

Advance information,

-

2.

Good signage,

-

3.

Reliable communication,

-

4.

A control tower jointly used by all responsible authorities,

-

5.

A new Jamarat Bridge design (with separate ramps for pilgrims coming from and going to different areas),

-

6.

A unidirectional flow organization,

-

7.

A combined routing concept and scheduling program,

-

8.

A real-time flow monitoring,

-

9.

A re-routing in situations of high capacity utilization of certain routes,

-

10.

Contingency plans for all kinds of situations (including bad weather conditions).

It is important to consider that the organization of a mass event must also always take into account the natural (physical, physiological, psychological, social and economic) needs of humans, such as sufficient space, water, food, toilet facilities, perceived progress towards the goal, feeling of safety, information, communication, etc. Neglecting such factors can promote crowd disasters, in particular, if several shortcomings come together.

While sophisticated procedures combining expert consulting, computer simulations and video monitoring remain the safest way to avoid accidents, most event organizers are not in a position to undertake them all. In fact, such complex procedures are often expensive and turn out to be cost-effective only for large events, or if mass events are regularly held at the same place (e.g. the Muslim pilgrimage in Mecca). Hence, organizers of small- and intermediate-size events are often constrained to rely on their intuition and past experience to assess the safety of the events they are in charge of. However, the dynamics of crowd behavior is complex and often counter-intuitive. A systemic failure is usually not the result of one single, well-identifiable factor. Instead, it is the interaction between many factors that cause a situation to get out of control, while each factor alone does not necessarily constitute the source of disaster per se [26].

The following paragraphs offer some simple rules that can help one to improve the safety of mass events. These rules should not be considered as replacement of existing regulations, but can nevertheless complement more sophisticated procedures, and are easy to apply for all types of crowding, ranging from large mass events to daily commuting at train stations and airports.

2.2.1 Design of the Environment

When mass events are planned, one simple rule to keep in mind is that counter-flows, merging flows, and crossing flows generate frictional effects and coordination problems among neighboring people, which create local peaks of density that increase the likelihood of congestion. Even though this is a well-established fact in academic circles, it remains a frequent source of crowd disasters [26]. Likewise, the topology of the environment plays a crucial role to ensure a smooth flow of people. Avoiding bottlenecks is probably the most important recommendation. The problems implied by bottlenecks have been demonstrated with many computer simulations, regardless of the underlying model [28, 29, 32]. Bottlenecks exacerbate the physical pressure among neighboring individuals, and create the initial perturbations that might trigger crowd turbulence. Furthermore, bottlenecks produce long queues and excessive waiting times. Therefore, while people naturally keep reasonable distances between each other, they tend to become impatient and reduce inter-individual distances when their perceived progress towards the goal is too slow. This causes a vicious circle: The increasing local density reduces the flow through the bottleneck, and the reduced flow further increases the local density. Importantly, bottlenecks do not only result from the static environment, but can also be created dynamically by other external factors. Ambulances, police cars, people sitting on the ground, lost belongings, or temporary fences often create bottleneck situations that were not initially foreseen. Another less intuitive side-effect is that the temporary widening of pathways can be dangerous as well, because people typically make use of the additional space to overtake those who walk in front of them. This creates bottleneck situations when the pathway narrows again to its normal width. Finally, recent computer simulations have revealed that sharp turns increase the physical pressure around the inner edge of the bend [28]. Even though turnings are not necessarily dangerous, they constitute potential zones of danger in case of unexpected overcrowding.

2.2.2 Early Detection of Problems

When crowd turbulence begins, the situation typically quickly gets out of control. Detecting this danger early enough is crucial, but remains a challenge. Clear signs of emergency, such as people falling or calling for help usually happen at a stage that is already critical and does not enable a timely response. The most accurate indicator of danger is the local density level. Recent studies have revealed the existence of local density thresholds above which the danger to the crowd is significantly increased [28]: Stop-and-go waves tend to emerge beyond 2–4 people/m\(^2\), while crowd turbulence is likely to occur above 4–7 people/m\(^2\), depending on the average body diameter. The problem is that local densities are hard to measure without a proper monitoring system, which is not available at many mass events. Nevertheless, crowd managers can make use of simple signs as a proxy for local density. For example, one may observe the frequency and strength of body contacts among neighboring people. Typically, when the frequency of involuntary body contacts increases—often perceived as pushy behavior—this indicates that pressure in the crowd builds up and pressure relief strategies are needed. Crowd managers should also be alerted by the emergence of stop-and-go-waves, a self-organized phenomenon characterized by alternating moving and stopping phases, which is visible to the naked eye [27, 28]. As this stop-and-go pattern is related with a reduced flow of people, the density may quickly increase due to the vicious circle effect described in the previous section, so that measures for pressure relief must be taken. Finally, behaviors such as people climbing walls, overcoming fences, or disrespecting conventional routes—often interpreted as relentless and aggressive behaviors—may indicate escape attempts. They should be considered as a serious warning signal of a possible, upcoming crowd disaster.

2.2.3 Information Flows and Communication

Another major element in minimizing the risk of accidents is to set up an efficient communication system. A reliable flow of information between the visitors of an event, the organizers, security staff, police and ambulance is crucial for coordination. In fact, visitors often become aware of developing congestion too late because of the short-range visibility in crowded areas. This may impair orientation, which further increases the problems in the critical zone. In such situations, calm but clear loud speaker announcements informing visitors and well visible, variable message signs can let people know why they have to wait and what they should do. A robust communication system often constitutes the last means to gain control before a situation gets out of hand. It is also important that crowd managers have a reliable overview of the situation. Existing technologies offer useful monitoring systems that allow for real-time measurements of density levels and predictions of future crowd movements (also see Sect. 2.3 describing the use of smartphone applications for inferring crowd density). While such sophisticated monitoring systems are among the most efficient ways to keep track of the situation, most events are not yet using them. The minimum necessary requirement, however, is video monitoring covering the relevant zones of the event, from which the organizers can precisely evaluate the crowd movements and detect signs of upcoming congestion.

2.3 Apps for Saving Lives

Pre-routing strategies are an important element of crowd management as well. For this, a well-functioning information and communication system is crucial. While classically, the situation in the crowd is observed by means of surveillance cameras, helicopters or drones, and local police forces, there is an array of crowd sensors available today, based on video, WiFi, GPS, and mobile tracking. Smartphones, in particular, offer a new way of collecting information about crowded areas and of providing advice to attendees of mass events.

Advances in microelectronics have resulted in modern mobile phones that offer significant processing power, sensing capabilities, and communication features. In combination with a large market penetration they provide an excellent instrument for crowd monitoring. In particular, data can be acquired by deploying a mobile application that probes relevant modalities, e.g. the GPS or acceleration sensors. Adopting a participatory sensing scheme, macroscopic crowd parameters (e.g. density), mesoscopic parameters (e.g., collective behavior recognition, group detection, lane and queue detection, onset of panic prediction) and microscopic parameters of single individuals (e.g., modes of locomotion, velocity and direction, gestures) can be inferred.

In safety critical and crowded environments such as major sports or religious events, instrumenting the environment with static sensors based on video or infrared cameras is currently the most reliable way to obtain a near 100 % sample of the crowd at a sub-second and sub-meter resolution required to accurately measure crowd pressure. However, smartphone apps can be used to infer a good approximation of crowd behavior at large scales, and can be used to pro-actively intervene before crowds reach critically high density levels.

From a safety perspective, employing apps for large-scale events has at least three major advantages:

-

1.

Broadband-communication allows to capture, transmit and centrally process data in near real-time and to extract and visualize relevant crowd parameters in a command and control center (Fig. 3).

-

2.

Offering bi-directional communication, safety personnel can send notifications, warnings, or even guide the user in case of an emergency situation. Incorporating the user’s localization, geo-located messages increase the relevance for each user, helping to follow rules such as those outlined in Sect. 2.2 [33].

-

3.

Data, once collected, can be used to “replay” the event. The post hoc analysis is a critical step in the organization of an event and can reveal critical factors that should be addressed for future events. Although today a great deal of data is available for such analyses, event organisers and crowd managers are sometimes forced to manually scan through video material, reports from security services in the field, or feedback from individual visitors. Combining such heterogeneous sources is a tedious and error-prone task, thus making it difficult to reach a reliable assessment and situational awareness. Localizing users via smartphones potentially offers a more accurate assessment and allows to capture crowd dynamics by aggregation.

The use of smart phones for crowd monitoring is a very recent development. Different modalities such as bluetooth sensing [34, 35] or app-based GPS localization [36] have been used to capture collective dynamics such as flocking, crowd turbulence or mobility patterns during large-scale events. In particular, individual location data can be aggregated using non-parametric probability density functions [36, 37] to estimate safety-relevant characteristics such as crowd movement velocity, density, turbulence or crowd pressure.

Here, we demonstrate the potential of location-data obtained from mobile phones during the Züri Fäscht festival 2013 in Switzerland (see Fig. 4). The Züri Fäscht is a three-days event comprising an extensive program with concerts, dance parties, and shows. It is hosted in the city center of Zürich and is the biggest festival in Switzerland. Up to 2 million visitors have been estimated to attend the festival over the course of three days. In 2013, 56,000 visitors downloaded the festival app, from which 28,000 gave informed consent to anonymously contributed their location data. Figure 5 shows the number of users simultaneously contributing their location data over the course of the event, and the amount of data samples collected. While collecting only a subsample from the entire crowd, previous work [36] showed a correlation coefficient greater than \(0.8\) between the estimated density and actual density determined from video recordings.

The time-dependent density of visitors derived from the app data is shown in Fig. 6. The velocity of festival visitors is represented in Fig. 7 and the movement direction in Fig. 8. Together the three figures reflect the collective crowd dynamics. While Fig. 6 shows spectator densities for multiple events at different times, Figs. 7 and 8 show the mobility of the crowd before, during, and after the fireworks show at lake Zürich. Mobility patterns can be clearly identified. For instance, a strong northbound flow of the crowd can be observed before the event. Interestingly, lanes appear with a right-handed pedestrian traffic (highlighted box), which improves mobility. To support such kind of self-regulation, street signs (e.g., walking direction arrows) have been employed at other places, for instance at obstacles dividing the pathway.

Several reality mining projects have demonstrated that it is not only possible to sense collective behaviors with mobile devices, but also to offer real-time feedback to the users via a return channel of the app. This allows crowd control staff to actively moderate the changes in the collective behavior. For example, the safety personnel may advise visitors to avoid crowded areas and suggest alternative routes. Such measures thus help improve the safety of crowds considerably.

3 Crime, Terrorism, and War

The quantitative study of crime and of violent conflict—both within and between nations—has a long tradition in the social sciences. With the growing availability of detailed empirical data in recent years, these topics have increasingly attracted the attention of the complexity science community. The strive to statistically characterize such data is not new—Richardson’s seminal work on the size of inter-state wars, for example, dates back to 1948 [38]. Recently, however, large datasets on both crime and conflict, often providing detailed information on each incident, its location and timing, have increasingly become available.Footnote 1 Such data has allowed the kind of statistical, system-level analysis we provide here to shed new light on a number of mechanisms underlying global crime and conflict patterns. The combined focus on crime and conflict in this section is intentional as both may be seen as breakdowns of social order, albeit of very different scale and arguably with very different origins.

3.1 Fighting Crime Cycles: The Cyclic Re-emergence of Crime and How to Prevent it

Crowd disasters are rare, but may kill hundreds of people at a time. In contrast to this, crimes are committed many times each day but usually involve only a few people in every incident. However, the impact of crime adds up and is considerable. In the US, for example, over 12,700 people were murdered in 2012 [39]. Economically, cybercrime, for example, results in hundreds of billions of dollars of damage world-wide every year.

To contain criminal activities, societies resort to various mechanisms punishing behavior that deviates from generally accepted norms in intolerable and destructive ways. The percentage of the population imprisoned in a country is an indication for the severity of state punishment (see Fig. 9a). Remarkably, the US has the highest incarceration rate in the world [40].Footnote 2 Nevertheless, its crime rates remain very high by international comparison and are much higher than in many other industrialized countries [42].Footnote 3 In particular, in connection with the “war on drugs”, apparently more than 45 million arrests were made, yet it was recently declared a failure. In fact, US Attorney General Eric Holder concluded: “Too many Americans go to too many prisons for far too long, and for no truly good law enforcement reason.” [44].

a Empirical data on the imprisoned population world-wide (the full list of countries if available upon request). b number of homicides and c violent crimes in the US per 100,000 population. In addition to the average for the US (cyan), panels (b) and (c) show averages of US States with very high (black), high (red), moderate (green) and low (blue) crime rates. The four categories are obtained through grouping US States by their average crime rate over the period 1965–2010 and then assigning the 25 % most affected States to the first category, the 25 % next most affected States to the second category, etc. Examples of US States with very high crime rates are Washington D.C. and New York. States with low crime rates are, for instance, North Dakota and Utah (Color figure online)

But why do crime deterrence measures fail? According to standard rational choice theories of crime, they should not. The economic model of crime assumes that criminals are utility maximizers who optimize their payoffs \(\pi \) under restrictions and risk [45]. There are gains \(g>0\) (either material or physical or both) to be made with little effort, but there is also the threat of being sanctioned with a punishment fine \(f>0\). If \(s\) is the probability for an individual to commit a crime, and \(p\) is the probability for the criminal act to be detected, the payoff is

It is clear from Eq. (6) that, at a given detection rate \(p\), higher punishment (larger \(f\)) should reduce crime. However, this simple argument clearly neglects strategic interaction and numerous socio-economic factors, all of which play a crucial role in the evolution of criminal activity.

In reality, increasing levels of deterrence do not necessarily reduce the level of crime. Instead, one often finds crime cycles. The homicide rate in the United States, for example, began to rise steadily in the 1960s and oscillated at around 8 to 10 homicides per 100,000 for 20 years. It only started to significantly decline in the early 1990s (see Fig. 9b). In fact, such fluctuations in the frequency of crime are visible across a wide and diverse spectrum of criminal offenses, suggesting that cyclic recurrence is a fundamental characteristic of crime (see Fig. 9c). This observation is notable, in particular, as different criminal offenses—homicide and theft, for example—are thought to arise from different kinds of motivations: homicides are usually assumed to be related to manifestations of aggression, whereas theft is most often economically motivated [46].

Criminological research has identified a number of factors that influence the recurrence of crime, its trends and variation across regions and countries. Beside structural factors, such as unemployment [47, 48] and economic deprivation [49], a number of studies have highlighted the influence of demography [50], youth culture [51, 52], social institutions [53] and urban development [54]. Others have pointed to the influence of political legitimacy [49], law enforcement strategies [55, 56], and the criminal justice system [50]. Some recent studies in criminology, in fact, argue that trends in the levels of crime may be best understood as arising from a complex interplay of such factors [54, 57]. Very recent empirical work has further shown that social networks of criminals have a distinct impact on the occurrence of crime [58, 59], thus highlighting the complex interdependence of crime and its social context.

Despite these advances the situation for policy makers to date remains rather opaque, since fully satisfactory explanations for the recurrence of crime are still lacking. Few studies fully recognize the complex interaction of crime and its sanctioning [5, 6, 60, 61]. Analyzing this relationship, however, is of critical importance when trying to explain why, contrary to expectations [45], increased punishment fines do not necessarily reduce crime rates [62]. To understand its social context, crime should be considered as a social dilemma situation: it would be favorable for all if nobody committed a crime, but there are individual incentives to do so. As a consequence crime may spread, thereby creating “tragedies of the commons” [63]. Large-scale corruption and tax evasion in some troubled countries or mafia and drug wars are examples of this.

If nobody is watching, criminal behavior (such as stealing) may seem rational to the individual, since it promises a considerable reward for little effort. To suppress crime it seems plausible to alter the decision situation formalized in Eq. (7) such that the probability \(p\) of catching a criminal times the imposed fine \(f\) eliminates the reward \(g\) of the crime, i.e.

According to this, whether it is favorable or not to commit a crime critically depends both on the fine \(f\) and on the probability of catching a criminal \(p\). Note that in particular the non-trivial interdependence of crime and the resources invested into detecting and punishing it should strongly affect the resulting spatio-temporal dynamics.

In the simple, evolutionary game-theoretical model we discuss next,Footnote 4 the probability of detecting criminal activities is explicitly related to the inspection effort invested by the authorities. The game is played between “criminals” (\(s_x = C\)), punishing “inspectors” (\(s_x = P\)), and “ordinary individuals” (\(s_x =O\)) neither committing crimes nor participating in inspection activities. Each player tends to imitate the strategy of the best performing neighbor.Footnote 5 The game is staged on a \(L\times L\) square lattice with periodic boundary conditions, where individuals play the game with their \(k=4\) nearest neighbors. Criminals, when facing ordinary individuals, make the gain \(g\ge 0\). When facing inspectors, however, criminals obtain the payoff \(g-f\), where \(f\ge 0\) is the punishment fine. If faced with each other, none of two interacting criminals obtains a benefit. Ordinary people receive no payoffs when encountering inspectors or other ordinary individuals. Only when faced with criminals, they suffer the consequences of crime in form of a negative payoff \(-g \le 0\). Inspectors, on the other hand, always bear the cost of inspection, \(c \ge 0\), but when catching a criminal, an inspector receives a reward \(r\ge 0\), i.e. the related payoff is \(r-c\). The introduction of dimensionless parameters is possible in the form of “(relative) inspection costs” \(\alpha =c/f\), the “(relative) temptation” \(\beta =g/f\), and the “(relative) inspection incentive” \(\gamma =r/f\), which leads to the following payoffs:

Herein, \(N_O\), \(N_P\), and \(N_C\) are the numbers of ordinary individuals, inspectors and criminals among the \(k=4\) nearest neighbors. Monte Carlo simulations are performed as described in the Methods section of Ref. [6].

The collective spatio-temporal dynamics of this simple spatial inspection game is complex and counter-intuitive, capturing the essence of the crime-fighting problem well. One can observe various phase transitions between different kinds of collective outcomes, as demonstrated in Fig. 10. The phase transitions are either continuous (solid lines in Fig. 10) or discontinuous (dashed lines in Fig. 10), and this differs markedly from what would be expected according to the rational choice Eq. (7) or the well-mixed model [64], namely due to the significant effects of inspection and spatial interactions. Four different situations can be distinguished:

-

1.

Dominance of criminals for high temptation \(\beta \) and high inspection costs \(\alpha \),

-

2.

Coexistence of criminals and punishing inspectors (\(P+C\) phase) for large values of temptation \(\beta \) and moderate inspection costs \(\alpha \),

-

3.

Dominance of police for moderate inspection costs \(\alpha \) and low values of temptation \(\beta \), but only if the inspection incentives \(\gamma \) are moderate [see panel (A)], and

-

4.

Cyclical dominance for small inspection costs \(\alpha \) and small temptation \(\beta \), where criminals outperform ordinary individuals, while these outperform punishing inspectors, and those win against the criminals (\(C+O+P\) phase) (supplementary videos of different routes towards the emergence of the cyclic phase are available at youtube.com/watch?v=pH9l-2h6PRo and youtube.com/watch?v=gVnCN3a9ki8).

Figure 11 provides a more detailed quantitative analysis, displaying in panel (A) the sudden first-order phase transitions from the \(C+O+P\) phase to the pure \(P\) phase, and from this pure \(P\) phase to the pure \(C\) phase, as inspection costs \(\alpha \) increase. In panel (B), we also observe a sudden, discontinuous phase transition, emerging as neighboring individuals are rewired. This introduces a small-world effect. We observe that conflicts escalate in amplitude until an absorbing phase is reached. It follows that the exact impact of each specific parameter variation depends strongly on the location within the phase diagram, i.e. the exact parameter combination. General statements such as “increasing the fine reduces criminal activity” tend to be wrong, which contradicts the widely established point of view. This may explain why empirical evidence is not in agreement with common theoretical expectations, and it also means we need to reconsider the traditional perspective on crime.

Phase diagrams, demonstrating the spontaneous emergence and stability of the recurrent nature of crime and other possible outcomes of the competition of criminals (\(C\)), ordinary individuals (\(O\)) and punishing inspectors (\(P\)). The diagrams show the strategies remaining on the square lattice after sufficiently long relaxation times as a function of the (relative) inspection costs \(\alpha \) and the (relative) temptation \(\beta \), a for inspection incentive \(\gamma =0.5\) and b for \(\gamma =1.0\). The overlayed color intensity represents the crime rate, i.e. the average density of criminals in the system. (Figure adapted from Ref. [6])

A representative cross-section of the phase diagram presented in Fig. 10a (a), and the robustness of crime cycles against the variation of network topology (b). These results invalidate straightforward gain-loss principles (“linear thinking”) as proper description of the relationship between crime, inspection, and punishment rates. a For \(\beta =0.2\) and \(\gamma =0.5\), increasing \(\alpha \) initially leaves the stationary densities of strategies almost unaffected, while subsequently two discontinuous first-order phase transition occur. b The social interaction networks were constructed by rewiring links of a square lattice of size \(400 \times 400\) with probability \(\lambda \). As \(\lambda \) increases, due to the increasing interconnectedness of the players, the amplitude of oscillations become comparable to the system size. A supplementary video is available at http://youtube.com/watch?v=oGNOmLognOY. (Figure adapted from Ref. [6])

The model reviewed here, therefore, demonstrates that that “linear thinking” is inadequate as a means to devise successful crime prevention policies. The level of complexity governing criminal activities in competition with sanctioning efforts appears to be much greater than often assumed so far. Our results also reveal that crime is likely to be recurrent when there is a gain associated with criminal activity. While our model is, of course, stylized, it can nonetheless help to shed light on the counterintuitive impact of punishment on the occurrence and, in particular, recurrence of crime.

Our model also highlights that crime may not primarily be the result of activities of individual criminals. It should be rather viewed as result of social interactions of people with different behaviors and the collective dynamics resulting from imitative interactions [58, 59]. In other words, the emergence of crime may not be well understood by just assuming a “criminal nature” of particular individuals—this picture probably applies just to a small fraction of people committing crimes. In fact, criminal behavior in many cases is opportunity-driven or arises as a result of social interactions that get out of hand. This suggests that changing social context and conditions may make a significant contribution to the reduction of crime, which in turn has relevant implications for policies and law.

It is commonly assumed that we have come a long way in deterring crime. Many different types of crime are punished all over the world based on widely accepted moral norms, and there exist institutions, organizations, and individuals who do their utmost to enforce these norms. However, specific deterrence strategies may sometimes have no, or even unintended, adverse effects. Recent research finds, for example, that even though video surveillance usually allows for a faster identification of criminal delinquents, it rarely leads to a significant decrease in criminal activities in the surveyed area [65]. In fact, it does not always lead to an increased individual perception of safety [66], even though this is a key arguments for its widespread use.

Other recent findings suggest that certain police strategies might even be outright counter-productive. Based on the assumption that removing the leader of a criminal organization will disrupt its social network, police often attempt to identify and arrest him or her. A recent study analyzing cannabis producer networks in the Netherlands, however, shows that this strategy may be fundamentally flawed: all network disruption strategies analyzed in the study did not disrupt the network at all or, worse, increased the efficiency of the network through efficient network recovery [67].

These examples clearly highlight that an insufficient understanding of the complex dynamical interactions underlying criminal activities may cause strong adverse effects of well-intended deterrence strategies. A new way of thinking, maybe even a new kind of science for deterring crime is thus sorely needed—in particular one that takes into account not just the obvious and similarly linear relations between various factors, but one that also looks particularly at the interdependence and interactions of each individual and its social environment. One then finds that this gives rise to strongly counter-intuitive results that can only be understood as the outcome of emergent, collective dynamics. This is why complexity science can make important and substantial contributions to the understanding and containment of crime.

3.2 Dynamics of Terrorism and Insurgent Conflict

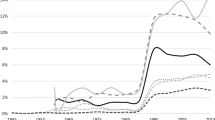

The past decade has seen a resurgence of international terrorism and several violent civil conflicts. The insurgencies that followed the US-lead interventions in Afghanistan and Iraq have caused civilian casualties in the order of hundreds of thousands while the US-declared “war on terror” has not significantly curbed international terrorist activity (Fig. 12). In fact, in the political arena, critics argue that international interventions may themselves serve as triggers for terrorist attacks while others suggest the exact opposite, i.e. that only the decisive interventions and aggressive measures against terrorist organizations have stopped a much more severe escalation of international terrorism.

International terrorism disaggregated by region. a number of events and b fatalities per year. The data were obtained from the Global Terrorism Database (GTD) (http://www.start.umd.edu/gtd/) and represent incidents clearly identified as terrorist attacks that lead to at least one fatality

There are no studies to date that can convincingly support either of the above views and establish a clear causal relationship between international terrorist activities and the “war on terror”. At the level of individual conflicts, however, there are a number of studies that have helped to clarify the link between interventions and levels of violence [68–71]. These studies in particular emphasize reactive or “tit-for-tat” dynamics as a fundamental endogenous mechanism responsible for both escalation and de-escalation of conflict. In the context of Iraq, one can find such reactive dynamics for both insurgent and coalition forces [69, 70]. Other research has identified similar dynamics in the Israeli-Palestinian conflict where both the Israeli and Palestinian side were found to significantly react to violent attacks of the opposing side [72, 73]. Establishing the (causal) effect of actions by one conflict party on the reactions of the other faction(s) thus appears central to a systemic understanding of endogenous conflict processes.

There is complementary statistical evidence pointing to relatively simple relationships underlying aggregate conflict patterns. Empirical data of insurgent attacks, for example, exhibits heavy tailed severity distributions and bursty temporal dynamics [7, 8]. The complementary cumulative distribution function (CCDF) of event severities is usually found to follow a power law (see Fig. 13):

where \(x_0\) is the lower bound for the power law behavior with exponent \(\alpha \). Note that such statistical regularities can, for example, be used to estimate the future probability of large terrorist events [74]. Bursty temporal dynamics may be characterized by considering the distribution of inter-event times, i.e the length of the time intervals between subsequent events. In a structureless dataset, that is in a dataset where the timing of events is statistically independent, the CCDF of inter-event times simply follows an exponential distribution. The deviation of timing signatures from an exponential shape—for example log-normal or stretch exponential signatures—thus points to significant correlations between the timing of subsequent events.Footnote 6 Researchers have offered competing explanations for these system-level statistical regularities. The power laws in the severity of attacks, for example, have been linked to competition of insurgent groups and security forces for control of the state [7], but also to a positive feedback loop between the size and experience of terrorist organizations or insurgent groups and the frequency with which they commit attacks [75].

Empirical severity and timing signatures of conflict events during the war in Iraq (2004–2009). The complementary cumulative distribution functions (CCDFs) of the event severity in Iraq (a) and Baghdad (b) follow robust power laws (\(\alpha =2.48\pm 0.05\), \(x_0=2\), \(p<0.001\) and \(\alpha =2.57\pm 0.05\), \(x_0=2\), \(p<0.001\) respectively). Panels c–e show the CCDF of inter event times in a 6 months time window for different periods of the conflict in Baghdad. In 2006 ad 2007, the timing of events correlates significantly in time, i.e. the signature of inter-event times (d) significantly deviates from the exponential timing signature that is characteristic for uncorrelated event timing. In contrast, prior to 2006 (c) and after 2007 (e) event timing is indistinguishable from that of a random process with exponentially distributed inter-event times. (f) Shows the number of events per day in Baghdad for the whole period considered. The black bar indicates where an Anderson–Darling test rejects the hypothesis of exponential distribution of inter-event times at a 5 % significance level for moving windows of 6 months, i.e. where the event timing signature is non-trivial. Note that timing analysis was performed for the greater Baghdad area to avoid spurious timing signatures of unrelated events (see also the “Discussion” in the text). The data were obtained from the Guardian website [76]; fit lines shown were estimated using maximum likelihood estimation [77]

It is important to note that the choice of spatial and temporal units of analysis in such aggregate, system-level analysis may strongly affect results. Choosing empirically motivated and sufficiently small spatial units of analysis avoids problems arising from considering time series of potentially unrelated or only very weakly related incidents. In the context of Iraq, for example, the violence dynamics in the Kurdish dominated North are generally quite different from those in Baghdad or the Sunni triangle. The same applies to the selection of suitable time periods, in which the same conflict mechanisms produce aggregate patterns. In fact, the non-parametric analysis of event timing signatures rests on the assumption that the conflict dynamics in the period analyzed are (approximately) stationary [8]. Timing analyses are therefore usually performed for shorter time intervals—for example 6 months—where the overall intensity of conflict does not significantly change (see Fig. 13).

While such statistical signatures point to similarities in conflict patterns across cases and help identify general conflict mechanisms, detailed case studies on the micro-dynamics of violence suggest that mechanisms at the group- or individual-level may be very conflict- and context-specific [78]. A number of micro-level mechanisms that all contribute to the aggregate violence patterns are likely to be present in many conflicts (see, for example Ref. [79]). The effect of (local) contact between warring factions on levels of violence, for instance, has been of central interest in conflict studies. Examining violence in Jerusalem between 2001 and 2009, a recent study addresses this question using an innovative combination of formal computational modeling and rich, spatially disaggregated data on violent incidents and contextual variables [80]. Past research has given empirical support for two competing perspectives: The first assumes that intermixed group settlement patterns reduce violence, as more frequent interactions enable rivals to overcome their prejudices towards each other and thus become more tolerant. The other argues that group segregation more effectively reduces violence, given less frequent contact and fewer possibilities for violent encounters (see Ref. [80] for a detailed overview of the literature supporting the two perspectives).

The study on Jerusalem now demonstrates that, in fact, both perspectives can be reconciled, if one acknowledges that the social or cultural distance \(\tau \) between groups effectively mitigates the effect of spatial proximity. Spatial proximity, in other words is necessary but not sufficient to trigger local outbreaks of violence between members of different groups—only in a situation where tensions between groups are high a chance encounter on the street would likely trigger a violent outbreak. Formally, the study assumes that the probability of any chance encounter to result in violence depends on an individual’s violence threshold \(\Gamma \), which changes as a function of personal exposure to violence (decreases) and the threat of police intervention (increases). Whether this disposition to engage in violence translates into a violent incident then is conditional on the social or cultural distance \(\tau \), such that the higher \(\tau \), the more likely it is that violence ensues:

Here \(\lambda \) sets the scale of the probability of violence to erupt, the smaller \(\lambda \) the more abrupt the transition from no violence to violence as a function of \(\tau -\Gamma \).

One can quantitatively show that this local, individual-based mechanism can indeed explain a substantial part of the aggregate violence dynamics in Jerusalem [80]. Moreover, considering counter-factual scenarios the model allows to analyze how different levels of segregation corresponding to proposed “futures” for Jerusalem would affect violence levels in the city. Conceptually, the study highlights the complex inter-dependence of micro-level conflict processes. In fact, not only the effect of (local) contact is conditional on socio-cultural distance: conflict (or its absence) in turn also affects the socio-cultural distances between conflict parties.

One key difficulty in research on the micro-dynamics of conflict is focusing on the “correct” theoretically and empirically plausible mechanisms that drive violence. In the study on Jerusalem, for example, one specific mechanism was shown to account for a significant part of the spatial patterns of violence in the city. This and similar studies are powerful in the sense that they explicitly test a particular causal micro-macro-link. Similarly, one may reverse this process and try to infer micro-processes based on the macro-patterns. Recently, such causal inference designs have been extended to be applicable to micro-level conflict event data. A novel inferential technique called Matched Wake Analysis (MWA) [81] estimates the causal effect of different types of conflict events on the subsequent conflict trajectory. Using sliding spatial and temporal windows around intervention events guarantees that the choice of unit of analysis does not systematically bias inferences, a problem known as the modifiable areal unit problem (MAUP) [82, 83]. For robust causal inference the method uses statistical matching [84] on previous conflict trends and geographic covariates. In order to the estimate average treatment effects for one type of event (treatment) relative to another one (control) the method uses a Difference-in-Differences regression design. Formally, it estimates how the number of dependent events after interventions, \(\mathrm {n}_{\mathrm {post}}\), changes compared to the number of dependent events prior, \(\mathrm {n}_{\mathrm {pre}}\), as a function of interventionsFootnote 7:

In this expression \(\beta _{2}\) is the estimated average treatment effect of the treated, our quantity of interest (see Ref. [81] for further details on the method).

Such inferential methodology is especially useful when testing specific causal hypotheses, in particular those that may otherwise be difficult to detect against the background of large-scale violence unrelated to the hypothesized mechanism. We have previously emphasized the importance of reactive dynamics for an understanding of endogenous conflict processes. One such example is the effects of counterinsurgency measures on the level of insurgent violence. The direction of these effects is generally disputed, both theoretically and empirically. One line of reasoning suggests that hurting civilians indiscriminately will generally cause reactive support for the military adversary [70, 85]. Another line of thinking suggests that the deterrent effect of such measures leads to less support for the adversary [68, 86].

We use the MWA technique here to directly test this effect empirically by focusing on the initial insurgency (beginning of 2004 to early 2006) in Iraq. Specifically, we focus on the greater Baghdad area—the focal area of violence—and consider three frequent types of events. Raids refer to surprise attacks on homes and compounds, in which the military suspects insurgents or arms caches. The relative heavy-handedness and the high probability of disturbing or harming innocent bystanders sets these operations apart from more selective detentions. In turn, to attack incumbent forces insurgents frequently rely on Improvised Explosive Devices (IEDs) and civilian support in manufacturing, transporting, and planting them. If civilians were really more inclined to support the strategic adversary after having been targeted in raids, one would expect more IED attacks to take place after and in the spatial vicinity of raids compared to detentions or arrests under otherwise comparable conditions. The opposite should be true if deterrence (by raids) was the mechanism at work. As visible in Fig. 14, raids lead to higher levels of subsequent IED attacks compared to detentions: our estimates suggest that 2–3 raids, lead to one additional IED attack in the direct spatial vicinity within up to 10–12 days. This result thus lends empirical support to the notion that civilians support the adversary in reaction to indiscriminate violence. Based on such improved methodology for studying micro-level processes in conflicts, it is possible to gain a better and systematic understanding of the driving forces behind violence.

Reactive violence dynamics in Iraq. a Map of the greater Baghdad area for the period 01/2004–03/2006 showing the sample of conflict events used in our analysis. b Estimated change in the number of attacks with Improvised Explosive Devices (IEDs) following raids in comparison to those following detentions. After matching on spatial covariates and trends in IED attacks preceding the interventions, the causal effect of raids versus detentions was estimated with a Difference-in-Differences regression design [81]. Note the noticeable positive effect (lighter colors) in the interpretable (non-shaded) areas of the plot at distances up to about 3 km and 10–12 days: on average for every 2–3 raids one more IED attack is observed compared to less heavy-handed interventions. Data used for this analysis are significant action (SIGACT) military data (see for example Ref. [70]) (Color figure online)

The research discussed in this section highlights that the contributions of complexity science and empirical, case-oriented conflict research to the study of conflict are in many ways complimentary. A systemic perspective can serve to identify abstract general relationships that help shed light on aggregate empirical patterns—much like statistical physics, which uses the self-averaging properties of large \(N\) systems to study system level properties that emerge from complex microscopic interactions [75]. The in-depth and theory-driven analysis of selected cases in the literature on micro-dynamics of conflict on the other hands, tries to systematically identify these detailed micro-level mechanisms. While there has recently been a noticeable shift towards the study of micro-dynamics of conflict with significant conceptual and technical progress [78], it is important to note that historically much of the social science literature on conflict has mainly analyzed aggregate country- or system-level data.

The fundamental challenge to date remains bridging the divide between these two perspectives. In theory, statistical analysis at the level of aggregate distributions can offer unique systematic insights for the whole ensemble with detailed micro-level inference revealing the multitude of mechanisms underlying the ensemble properties. Yet in practice, the two research strands often exist rather disconnected and insights from one do not enter into the analysis or models of the other. While there may in principle be a systematic limit to the generalizability of insights from the macro to the micro level and vice versa, the current situation is clearly a consequence of lack of engagement with the respective “other” literature.

We are convinced that in light of the increasing availability of detailed conflict event datasets and the emergence of Big Data on conflicts, complexity science can make a very substantial contribution to conflict research. Its impact and relevance will be more substantial the more we tap into the unique insights on both the micro- and macro-dynamics of conflict the social science literature already has to offer.

3.3 Interstate Wars and How to Predict them

If conflicts are difficult to stop, then it should be of utmost importance to prevent their occurrence in the first place. However, any hope of preventing them rests on the ability to forecast their onset with a certain level of accuracy.

Unfortunately, forecasting conflicts has remained largely elusive to scholars of international relations. Historical studies of single wars lack out-of-sample predictive power [87–89]. More systematic approaches focusing on the conditions that tend to lead to war (e.g., arms races [90], long-standing territorial rivalries [91], or large and rapid shifts in power [92, 93]) rely on indicators that are coarse in time (typically yearly), and therefore tend to miss the crucial steps of the escalation of tensions and the timing of the conflict outbreak [11, 94–96]. Efforts at finer-grained codification of geopolitical tensions are labor-intensive and costly [97, 98] and limited in time [99, 100]. More systematic coding mechanisms using computer algorithms [101, 102] also have a limited time span, as do prediction markets [103, 104]. In addition, event-based data tend to miss the subtleties of international interactions: on the one hand, the absence of an event may be as important as its occurrence. Moreover, seemingly large events need not be cause for alarm whereas small events may greatly matter. The main obstacle to testing our ability to forecast conflicts, in other words, has been the lack of measures of tensions that are both fine-grained in time and cover a large time-span [105–107].

To fill this gap, geopolitical tensions were estimated by analyzing a large dataset of historical newspaper articles [12]. Google News Archive—the world’s largest newspaper database with over 60 million pagesFootnote 8—was used to search the text of every article for mentions of a given country together with a set of keywords typically associated with tensions (e.g., crisis, conflict, antagonism, clash, contention, discord, fight, attack, combat).Footnote 9 A mention of a country together with any of the pre-specified keywords in a given week resulted in an increased estimated level of tensions for that country in that week. This procedure was repeated for every week from January 1st 1902 to December 31st 2011, and for every country included in the Correlates of War dataset [108].

The resulting dataset is a fine-grained and direct proxy for the evolution of tensions in each country, with more than one hundred years of weekly time series for 167 countries. Moreover, by relying on journalists’ perceptions of international tensions, some of the pitfalls associated with event-data can be avoided, since contemporaries will process events in view of their respective context and likely consequences, instead of simply classifying them in preexisting categories.

Reports about tensions in the news were found to be significantly higher prior to wars than otherwise (Fig. 15), which implies that news reports convey valuable information about the likely onset of a conflict in the future [12]. In fact, reports about geopolitical tensions typically increase well ahead of the onset of conflict (Fig. 16), therefore potentially giving decision-makers ample early warnings to devise and implement a response to help prevent the outbreak.

Estimated probability distribution of the (logged) number of conflict-related news for all countries and all weeks since 1902. The curve “War within 1 year” refers to the distribution of conflict-related news for those countries involved in an interstate war within the next 12 months (i.e., a conflict with at least 1,000 battle deaths involving two or more states)

Observed number of conflict-related news items prior and after the onset of interstate wars. Each vertical line is a boxplot of the bootstrapped median number of conflict-related news prior to all interstate wars since 1902. The number of news tends to rise well ahead of the onset of conflict, and to remain relatively high thereafter, reflecting growing concerns prior to a conflict and lingering ones after its onset [12]

Even when controlling for a multitude of explanatory variables that have been identified as relevant in the literature (e.g., regime type, relative power, military expenditure), the sheer number of conflict-related news provides earlier warning signals for the onset of conflict than existing models. This hypothesis was tested more formally by using only information available at the time (out-of-sample forecasting). Using such data, the onset of a war within the next few months could be predicted with up to 85 % confidence. Keyword-based predictions significantly improved upon existing methods. These predictions also worked well before the onset of war—more than one year prior to interstate wars—giving policy-makers significant additional warning time [12].

Here we report on an additional finding suggesting the importance of uncertainty prior to conflict as an early warning signal for war. The outbreak of conflict is rarely unavoidable. While countries often prepare for potential conflicts well in advance, the escalation of tensions typically reflects a failed bargaining process, and news articles mirror this escalation by reporting on these developments. Yet, escalation is rarely linear, and its outcome remains uncertain until the very end. A negotiated solution can always be reached before hostilities start. As a result, proximity to the conflict will probably increase not only observers’ (journalists) worries about the outcome of the process, but may also generate diverging opinions and scenarios about the likely outcome of the process. As a result, we should observe not only an increase over time in the number of conflict-related news, but also an increase in the variance associated with these news.

Indeed, we find that the probability of conflict significantly increases as a function of both the weekly change in the number of conflict-related news items (\(\Delta \)news) and their standard deviation within a window of one year (Fig. 17). This results goes beyond [12] and suggests not only that the total number of conflict-related news and its change over time can be used as early warning signals for war, but also that time-variability in the number of news could significantly improve upon existing forecasting efforts. This finding is also in line with [109], since increased levels of variability can be used as early warning signals for critical transitions in a large number of physical, biological and socio-economic systems characterized by complexity and non-linearity (see also [110]).

Estimated probability of the onset of interstate war within the next 3 months. \(\Delta \)news denotes the weekly change in the number of conflict-related news (i.e., \(\Delta \)news\(_{i,t}\equiv \) news\(_{i,t}/(1+\)news\(_{i,t-1}\) for country \(i\) and week \(t\)), and SD measures the moving standard deviation of the number of news (divided by the total number of news in the world to address non-stationarity problems), applying a one-year averaging moving window

4 Spreading of Diseases and How to Respond

In the previous sections, we have seen how crime and conflict can spread in space and time. We will now address another serious threat, the emergence and worldwide spread of infectious diseases. Pandemics have plagued mankind since the onset of civilization. The transition from nomadic hunter-gatherer life styles to settled cultures marked a point in history at which growing human populations, in combination with increasingly cultured life-stock, led to zoonotic infectious diseases crossing the intra-species barrier. New pathogens that could be sustained in human populations evolved, and eventually lead to infectious diseases specific to humans. It is thus no surprise that the greatest killer among viral diseases, the smallpox virus, emerged approx. 4000–7000 years ago [111]. It is estimated that smallpox alone has caused between 300 and 500 million deaths, and along with measles killed up to 90 % of the native American population in the years that followed contact with Europeans in the fifteenth century. Historic records indicate that various large scale pandemics with devastating consequences swept through Europe in the past two millennia. The Antonine Plague (smallpox or measles) killed up to 5 million people in ancient Rome and has been argued to be one of the factors that lead to the destabilization of the Roman Empire [112], while the Black Death (bubonic plague) swept across the European continent in the fourteenth century and erased more than 25 % of the European population [113]. The largest known pandemic in history, the Spanish flu of 1918–1919, caused an estimated 20–50 million deaths worldwide within a year, more than the casualties of World War I over the previous 4 years. This pandemic was caused by an influenza A virus subtype H1N1, a strain similar to the one that triggered the 2009 influenza pandemic (“swine flu”). Although all of these major events are unique and different from each other in many ways, a number of factors are common to all of them. First, increasing interactions with animal populations in farming increases the likelihood that novel pathogens are introduced to human populations. Second, larger and denser human populations increase the opportunity for pathogens to evolve the ability to be sustained in these populations, adapt to the new human host, and trigger local outbreaks. Third, increasing mobility, for instance driven by trade and commerce, promotes the spatial distribution of emergent pathogens, and generates the conditions for full-blown pandemics to follow a local outbreak.

In recent decades, the threat of pandemics has been substantially reduced by a more advanced medical system, the development of sophisticated antibiotics, antiviral drugs, and vaccination campaigns. The greatest success along these lines is the worldwide eradication of smallpox in 1979 by a decade-long global vaccination campaign. Today, worldwide health surveillance systems for timely outbreak identification are in place, containing emergent infectious disease, and combating endemic diseases such as polio, tuberculosis, and malaria. However, although advances along these lines are promising news, modern civilization also contributes to the emergence of new pandemics. First, intensive animal farming or industrial livestock production yields a higher rate of intra-species barrier crossing and thus the emergence of potentially virulent human infectious diseases. Second, worldwide population increases quickly, having recently crossed the 7 billion mark. More than 50 % of the Earth’s population live in dense metropolitan mega cities, thus providing ideal conditions for an emergent pathogen to be sustained by human-to-human contacts. Third, the modern world is extremely connected by intense long range traffic. For example, more than three billion passengers travel among four thousand globally distributed airports every year (Fig. 18).

Pandemics of the past and present. Global disease proliferation has been prevalent throughout human history. Before worldwide eradication in 1979, smallpox had claimed the largest death toll since its emergence approx. 5,000 years ago followed by measles which is believed to have killed a substantial fraction of native Americans in the times after Columbus. Both, the Justinian Plague and the Black Death were caused by bacterial diseases and each one killed more than half the European population in a few years. The twentieth century Spanish flu claimed more victims that world war I and HIV/AIDS is the most recent globally prevalent non-curable infectious disease

Containing global pandemics is certainly a major concern to ensure stable socio-economic conditions in the world and anything that can potentially reduce the socio-economic impact of these events is helpful. In the last decade mathematically parsimonious SIR (susceptible-infected-recovered) models [114] have been extended successfully by including social interaction networks, spatial effects, as well as public and private transport, and by more and more fine-grained epidemiological models [115–120]. One of the most relevant and surprising lessons of such models is that, in order to minimize the number of casualties and costs of fighting diseases in industrialized countries, it might be economically plausible for them to share vaccine doses with developing countries, even for free [121]. In this way, the number of infections can be more effectively contained, particularly in the critical, early stage of disease spreading.

In the following, we will focus on two aspects. Firstly, a better understanding and prediction of the spatio-temporal spreading of diseases on a global scale, which permits the development of more effective response strategies. Second, we will address the issue of information strategies to encourage voluntary vaccination of citizens, which might be more effective than attempting to enforce compulsory vaccination through law. In this context, it is useful to remember that each percentage point reduction in the number of people falling ill potentially benefits the lives of tens of thousands of people.

4.1 Modelling Disease Dynamics

The development of mathematical models in the context of infectious disease dynamics has a long history. In 1766 Daniel Bernoulli published a paper on the effectiveness of inoculation against smallpox infections [122]. His seminal work not only contained the first application of the theory of differential equations, but was also published before it was known that infectious diseases were caused by bacteria or viruses and were transmissible. The prevalent scientific opinion in Bernoulli’s time was that infectious diseases were caused by an invisible poisonous vapor, known as the miasma. Evidence existed, however, that inoculation of healthy individuals with degraded smallpox material incurred immunity to smallpox in some cases. Bernoulli’s theoretical work shed light on the effectiveness of this procedure, a topic vividly discussed by the scientific elite of Europe at that time. In the beginning of the twentieth century Kermack and McKendrick laid the foundation of mathematical epidemiology in a series of publications [123, 124] and introduced the SIR (susceptible—infected—recovered) model which is still used today in most state-of-the-art computational models for the dynamics of infectious diseases (see also Refs. [125, 126]).

These types of models were designed to describe the time course of epidemics in single populations in which every individual is assumed to interact with every other individual at the same rate. The spatial spread of epidemics was first modeled by parsimonious reaction diffusion type Equations [127] in which the local nonlinear dynamics are combined with ordinary diffusion in space. The combination of local, initially exponential growth typical of disease dynamics, with diffusion in space, generically yields regular wave fronts and constant spreading speeds. This type of approach was successfully applied in the context the spread of the Black Death in Europe in the fourteenth century [128]. Despite their high level of abstraction, these models provide a solid intuition and understanding of spreading processes. Their mathematical simplicity allows one to compute how key properties (e.g. spreading speed, arrival times, and pattern geometry) depend on system parameters [129].