Abstract

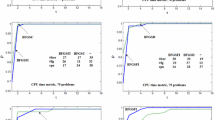

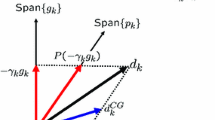

The introduction of quasi-Newton and nonlinear conjugate gradient methods revolutionized the field of nonlinear optimization. The self-scaling memoryless Broyden–Fletcher–Goldfarb–Shanno (SSML-BFGS) method of Perry (Discussion Paper 269, 1977) and Shanno (SIAM J Numer Anal, 15, 1247–1257, 1978) clarified the relationship between the two classes of methods. Building on the SSML-BFGS method, Hager and Zhang (SIAM J Optim, 16, 170–192, 2005) and Dai and Kou (SIAM J Optim, 23, 296–320, 2013) proposed new conjugate gradient algorithms, called CG_DESCENT and CGOPT, respectively. Somewhat surprisingly, these two conjugate gradient methods perform more efficiently than the SSML-BFGS method itself. In this paper, we propose modifications of the SSML-BFGS method that guarantee the sufficient descent condition. We analyze the convergence of the modified method for convex and nonconvex functions separately. Numerical experiments on test problems from the Constrained and Unconstrained Test Environment collection show that the modified SSML-BFGS method improves on both CGOPT and the original SSML-BFGS method.
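For readers unfamiliar with the baseline, the following is a minimal sketch of the classical (unmodified) SSML-BFGS search direction, not the modified method proposed in the paper. It assumes the standard construction in which the inverse Hessian approximation H is the BFGS update of the scaled identity (1/tau) I using the latest step s and gradient difference y, and it computes d = -H g without forming H. The function name `ssml_bfgs_direction`, the helper `dot`, and the default scaling tau = (y^T y)/(s^T y) are illustrative assumptions, not taken from the abstract.

```python
def dot(u, v):
    """Euclidean inner product of two equal-length sequences."""
    return sum(a * b for a, b in zip(u, v))


def ssml_bfgs_direction(g, s, y, tau=None):
    """Return d = -H g, where H is the BFGS update of (1/tau) * I with (s, y).

    g: current gradient, s: last step, y: last gradient difference.
    tau: positive scaling parameter; the default below is one common
    choice and is an assumption of this sketch.
    """
    sy = dot(s, y)
    if sy <= 0.0:
        # The BFGS update keeps H positive definite only if s^T y > 0.
        raise ValueError("curvature condition s^T y > 0 is required")
    yy = dot(y, y)
    if tau is None:
        tau = yy / sy
    sg = dot(s, g)
    yg = dot(y, g)
    # Coefficients of s and y in the expansion of -H g.
    coeff_s = yg / (tau * sy) - (1.0 + yy / (tau * sy)) * sg / sy
    coeff_y = sg / (tau * sy)
    return [-gi / tau + coeff_s * si + coeff_y * yi
            for gi, si, yi in zip(g, s, y)]


# Small illustrative vectors (made up for this sketch).
g = [1.0, -2.0, 0.5]
s = [0.3, 0.1, -0.2]
y = [0.5, 0.2, -0.1]
d = ssml_bfgs_direction(g, s, y)
print("g^T d =", dot(g, d))  # negative whenever s^T y > 0 and g != 0
```

Because H is positive definite when s^T y > 0, this direction is always a descent direction, but g^T d is not necessarily bounded by a fixed negative multiple of the squared gradient norm. That stronger bound is the sufficient descent condition the modifications in the paper aim to guarantee.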

References

Perry, J.M.: A class of conjugate gradient algorithms with a two-step variable-metric memory. Discussion Paper 269, Center for Mathematical Studies in Economics and Management Sciences, Northwestern University, Evanston, Illinois (1977)

Shanno, D.F.: On the convergence of a new conjugate gradient algorithm. SIAM J. Numer. Anal. 15, 1247–1257 (1978)

Hager, W.W., Zhang, H.: A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16, 170–192 (2005)

Dai, Y.H., Kou, C.X.: A nonlinear conjugate gradient algorithm with an optimal property and an improved Wolfe line search. SIAM J. Optim. 23, 296–320 (2013)

Beale, E.M.L.: A derivation of conjugate gradients. In: Lootsma, F.A. (ed.) Numerical Methods for Nonlinear Optimization, pp. 39–43. Academic Press, London (1972)

Powell, M.J.D.: Restart procedures for the conjugate gradient method. Math. Progr. 12, 241–254 (1977)

Wei, Z., Li, G., Qi, L.: New quasi-Newton methods for unconstrained optimization problems. Appl. Math. Comput. 175, 1156–1188 (2006)

Zhang, J.Z., Deng, N.Y., Chen, L.H.: New quasi-Newton equation and related methods for unconstrained optimization. J. Optim. Theory Appl. 102, 147–167 (1999)

Zhang, J.Z., Xu, C.X.: Properties and numerical performance of quasi-Newton methods with modified quasi-Newton equations. J. Comput. Appl. Math. 137, 269–278 (2001)

Oren, S.S.: Self scaling variable metric (SSVM) algorithms, part II: implementation and experiments. Manag. Sci. 20(5), 863–874 (1974)

Oren, S.S., Luenberger, D.G.: Self scaling variable metric (SSVM) algorithms, part I: criteria and sufficient conditions for scaling a class of algorithms. Manag. Sci. 20(5), 845–862 (1974)

Zoutendijk, G.: Nonlinear programming, computational methods. In: Abadie, J. (ed.) Integer and Nonlinear Programming, pp. 37–86. North-Holland, Amsterdam (1970)

Powell, M.J.D.: Nonconvex minimization calculations and the conjugate gradient method. In: Griffiths, D.F. (ed.) Lecture Notes in Mathematics, pp. 122–141. Springer, Berlin (1984)

Gilbert, J.C., Nocedal, J.: Global convergence properties of conjugate gradient methods for optimization. SIAM J. Optim. 2(1), 21–42 (1992)

Dai, Y.H., Liao, L.Z.: New conjugacy conditions and related nonlinear conjugate gradient methods. Appl. Math. Optim. 43, 87–101 (2001)

Gould, N.I.M., Orban, D., Toint, Ph.L.: CUTEr (and SifDec), a constrained and unconstrained testing environment, revisited. Technical Report TR/PA/01/04, CERFACS, Toulouse, France (2001)

Dolan, E.D., Moré, J.J.: Benchmarking optimization software with performance profiles. Math. Progr. 91, 201–213 (2002)

Dai, Y.H.: Nonlinear conjugate gradient methods. Published Online, Wiley Encyclopedia of Operations Research and Management Science (2011)

Dai, Y.H., Yuan, Y.: A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10(1), 177–182 (1999)

Dai, Y.H., Yuan, Y.: An efficient hybrid conjugate gradient method for unconstrained optimization. Ann. Oper. Res. 103, 33–47 (2001)

Acknowledgments

This work was partly supported by the Chinese NSF Grants (Nos. 11331012 and 81173633), the China National Funds for Distinguished Young Scientists (No. 11125107), and the CAS Program for Cross & Cooperative Team of the Science & Technology Innovation. The authors are very grateful to the two anonymous referees for their valuable suggestions and comments on an early version of this paper.

Additional information

Communicated by N. Yamashita.

About this article

Cite this article

Kou, C.X., Dai, Y.H. A Modified Self-Scaling Memoryless Broyden–Fletcher–Goldfarb–Shanno Method for Unconstrained Optimization. J Optim Theory Appl 165, 209–224 (2015). https://doi.org/10.1007/s10957-014-0528-4

DOI: https://doi.org/10.1007/s10957-014-0528-4

Keywords

- Unconstrained optimization

- Self-scaling

- Conjugate gradient method

- Quasi-Newton method

- Global convergence

- Improved Wolfe line search