Abstract

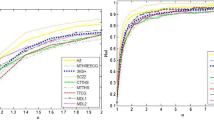

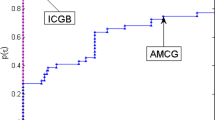

In this paper, a self-adjusting conjugate gradient method is proposed for solving unconstrained optimization problems; it generates a sufficient descent direction at each iteration. Unlike existing methods, the proposed method adjusts the conjugacy condition dynamically, which can be regarded as inheriting and extending the properties of the standard Hestenes–Stiefel method. Under mild conditions, we show that the proposed method converges globally even if the objective function is nonconvex. Numerical results illustrate that the method solves the test problems efficiently and is therefore promising.
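For orientation only, the sketch below shows a textbook Hestenes–Stiefel iteration, not the self-adjusting method proposed in the paper. It uses the standard update \(d_{k+1} = -g_{k+1} + \beta_k d_k\) with \(\beta_k^{HS} = g_{k+1}^T y_k / (d_k^T y_k)\) and \(y_k = g_{k+1} - g_k\), and it checks the sufficient descent condition \(g_k^T d_k \le -c\|g_k\|^2\). The backtracking Armijo line search, the steepest-descent restart, the constants, the quadratic test function, and the name `hs_cg` are all assumptions made for illustration.

```python
import numpy as np


def hs_cg(f, grad, x0, c=1e-4, tol=1e-6, max_iter=5000):
    """Textbook Hestenes-Stiefel CG with a restart safeguard (illustrative only)."""
    x = np.asarray(x0, dtype=float)
    g = grad(x)
    d = -g  # first direction: steepest descent
    n_iter = 0
    while n_iter < max_iter and np.linalg.norm(g) > tol:
        # Backtracking Armijo line search (assumed; the paper's line search may differ).
        t, fx, slope = 1.0, f(x), g @ d
        while f(x + t * d) > fx + 1e-4 * t * slope and t > 1e-16:
            t *= 0.5
        x_new = x + t * d
        g_new = grad(x_new)
        y = g_new - g
        # Standard Hestenes-Stiefel parameter, guarded against a tiny denominator.
        denom = d @ y
        if abs(denom) > 1e-12 * np.linalg.norm(d) * np.linalg.norm(y):
            beta = (g_new @ y) / denom
        else:
            beta = 0.0
        d_new = -g_new + beta * d
        # Sufficient descent test g^T d <= -c ||g||^2; restart with -g if it fails.
        if g_new @ d_new > -c * (g_new @ g_new):
            d_new = -g_new
        x, g, d = x_new, g_new, d_new
        n_iter += 1
    return x, n_iter


# Hypothetical test problem: a small convex quadratic with minimizer at the origin.
A = np.diag([1.0, 10.0])
x_star, n_iter = hs_cg(lambda v: 0.5 * v @ A @ v, lambda v: A @ v, [1.0, 1.0])
print(x_star, n_iter)
```

The restart is one simple way to enforce sufficient descent; the abstract states that the proposed method obtains this property from the search direction itself at every iteration.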

Acknowledgments

We are grateful to the anonymous referees and the editor for their useful comments, which have made the paper clearer and more comprehensive than the earlier version. We would like to thank Professor N. Andrei for his THREECG code and Professors W.W. Hager and H. Zhang for their CG-DESCENT code, both of which were used for numerical comparison. This work is supported by the Natural Science Foundation of China (11361001), Doctor Foundation of Beifang University of Nationalities (2014XBZ09), and Fundamental Research Funds for the Central Universities (K50513100007).

Additional information

Communicated by Florian A. Potra.

About this article

Cite this article

Dong, X., Liu, H. & He, Y. A Self-Adjusting Conjugate Gradient Method with Sufficient Descent Condition and Conjugacy Condition. J Optim Theory Appl 165, 225–241 (2015). https://doi.org/10.1007/s10957-014-0601-z

DOI: https://doi.org/10.1007/s10957-014-0601-z

Keywords

- Self-adjusting conjugate gradient method

- Sufficient descent condition

- Conjugacy condition

- Global convergence

- Numerical comparison