Abstract

In this position paper, we discuss how different branches of research on clustering and pattern mining, while rather different at first glance, in fact have a lot in common and can learn a lot from each other’s solutions and approaches. We give brief introductions to the fundamental problems of different sub-fields of clustering, especially focusing on subspace clustering, ensemble clustering, alternative (as a variant of constraint) clustering, and multiview clustering (as a variant of alternative clustering). Second, we relate a representative of these areas, subspace clustering, to pattern mining. We show that, while these areas use different vocabularies and intuitions, they share common roots and they are exposed to essentially the same fundamental problems; in particular, we detail how certain problems currently faced by the one field, have been solved by the other field, and vice versa.

The purpose of our survey is to take first steps towards bridging the linguistic gap between different (sub-) communities and to make researchers from different fields aware of the existence of similar problems (and, partly, of similar solutions or of solutions that could be transferred) in the literature on the other research topic.

Similar content being viewed by others

1 Introduction

In an old Indian tale, a king has the blind men of his empire examining an elephant. Then he asks about their findings. Their descriptions of what an elephant is, naturally differ widely. The one who felt the ear of the elephant describes it as a hand fan. The others insist that an elephant is like a pot, a ploughshare, a tree trunk, a pillar, a wall, or a brush, depending on whether they felt the elephant’s head, tusk, trunk, foot, belly, or the tip of the tail, respectively.

In general for unsupervised data mining, and in particular in its emerging research areas of discovering, summarizing and using multiple clusterings, by growing understanding and communication between researchers we see an increase in awareness that each of us can only discover part of the truth a dataset holds. Hence, the better we understand each other’s approaches to ultimately the same problem, exploratory analysis of data, the more we can learn from each other; be it from the problems faced, or the (partial) solutions that have been discovered.

In this position paper, surveying different approaches to examine presumably the same elephant, we therefore set out to take first steps towards bridging the linguistic gap between different communities. Our preeminent goal is to make researchers from the different fields aware of the existence of problems in other fields that are very similar to the problems they are facing within their own problem setting, and in particular to raise awareness of the (partial) solutions and theoretical advances made that might be applicable, or in the least learned from and build upon.

Earlier versions of this work appeared at the MultiClust workshops 2010 and 2011 (Kriegel and Zimek 2010; Vreeken and Zimek 2011). Here, we combine and expound these viewpoints, in order to show the bigger picture on how different subfields investigate essentially the same problem. Besides generally extending our discussion, we give more details, include more examples, and have expanded our survey for identifying key references on the considered fields. Furthermore, we follow up discussions presented by Färber et al. (2010) and Kriegel et al. (2011c).

We first, in Sect. 2, set out to discuss similar traits and problems as well as opposing views in specific areas of clustering. As such, we discuss specializations to subspace clustering and ensemble clustering, and we touch on the related aspects of alternative (constraint) clustering and multiview clustering (in the variant aiming at the identification of different clustering solutions). We will also discuss that the fundamental problem, multiple truths, is present in other areas as well but did not find a solution there either. In this wider horizon we touch, e.g., on classification, where a specialization allows for multiple labels or hierarchical classes.

Next, in Sect. 3, we focus on subspace clustering as a representative and detail the many commonalities between clustering and pattern mining. We will show that there is an interesting ‘relationship story’ (Vreeken and Zimek 2011) between these seemingly different fields, beyond their unsupervised exploratory nature. To this end we will discuss the common roots, similar techniques, and in particular the problems these areas share. We point out problems faced by one area, that in fact have been considered and (partly) solved by the other—albeit with different vocabulary and intuitions.

Finally, in Sect. 4, we round up, summarize the points taken, and suggest further directions to explore.

The research and discussion described in this paper builds upon and extends work published at MultiClust’10 and at MultiClust’11 by Kriegel and Zimek (2010), Vreeken and Zimek (2011).

2 Clustering approaches examining different parts of the elephant

In the following, we first give an overview of the problems and basic solutions of subspace clustering (Sect. 2.1). Afterwards we discuss ensemble clustering approaches (Sect. 2.2) and touch on alternative clustering (Sect. 2.3) and multiview clustering (Sect. 2.4). We summarize similarities and differences between these sub-fields (Sect. 2.5) and we collect questions in these areas where answers from other areas may be helpful in improving the field. Finally (Sect. 2.6), we will also see, however, that the fundamental problem for all these areas, the existence of multiple truths, is acknowledged in other research areas or clustering subtopics as well yet did not find satisfying treatment so far. Let us thus emphasize that we here do neither aim at solving all the open questions, nor aim at unifying different research areas, but instead aim to inspire discussion between different research areas, to put the figure of the elephant together from the different impressions.

2.1 Subspace clustering

Subspace clustering refers to the task of identifying clusters of similar vectors where the similarity is defined w.r.t. a subspace of the data space. The subspace is not necessarily the same (and actually is usually different) for different clusters within one clustering solution. The key-issue in subspace clustering lies in defining similarity over only certain attributes, using possibly different attributes for different (potential) clusters. By how we choose weightings, selections, and/or combinations of attributes, we implicitly choose which subspaces in the data will satisfy our current similarity measure; and hence, we have to choose these properties carefully and appropriately per application domain.

Which subspaces are important for the similarity measure is a task typically left to be learned during the clustering process, since for different clusters within one and the same clustering solution usually different subspaces are relevant. Hence subspace clustering algorithms cannot be thought of as usual clustering algorithms where we simply use a different definition of similarity: the similarity measure and the clustering solution are derived simultaneously and hence strongly depend on each other.

Kröger and Zimek (2009), Kriegel et al. (2012) provide short, merely theoretical overviews on this topic. Kriegel et al. (2009) discussed the problem in depth, also differentiating several partial problems and surveying a number of example algorithms. Latest developments have been surveyed by Sim et al. (2012). Although there is a wide variety of task definitions for clustering in subspaces, the term ‘subspace clustering’ is also often used in a narrower sense to relate to a special category of clustering algorithms in axis-parallel subspaces where the clusters—residing in different subspaces—may overlap. That is, some of the clusters’ objects can be part of several different clusters.

Another family of clustering in axis-parallel subspaces is called ‘projected clustering’, where the dataset is partitioned in a set of disjunct clusters (and, sometimes, an additional set of noise objects). Recent experimental evaluation studies covered some selections of these specific subtypes of subspace clustering (Moise et al. 2009; Müller et al. 2009c) and these subtypes may be the most interesting ones for considering relationships with the other research areas discussed in this survey.

Clustering in axis-parallel subspaces is based on the distinction between relevant and irrelevant attributes. This distinction generally assumes that the variance of attribute values for a relevant attribute over all points of the corresponding cluster is rather small compared to the overall range of attribute values. For irrelevant attributes the variance of values within a given cluster is high or the values are indistinguishable from the rest of the data. For example, one could assume a relevant attribute for a given cluster being normally distributed with a small standard deviation whereas the values of irrelevant attributes are uniformly distributed over the complete data space.

The geometrical intuition of these assumptions relates to the points of a cluster being widely scattered in the direction of irrelevant axes while being densely clustered along relevant attributes. When selecting the relevant attributes only, the cluster would appear as a full-dimensional cluster—within this selected subspace. Whereas in the full dimensional space, also including the irrelevant attributes, the cluster points form a hyperplane parallel to the irrelevant axes as they spread out in these directions. Due to this geometrical appearance, this type of cluster is addressed as ‘axis-parallel subspace cluster’.

Since the number of axis-parallel subspaces where clusters can possibly reside scales exponentially with the dimensionality of the data, the main task of research in the field was the development of appropriate subspace search heuristics. Starting from the pioneering approaches to axis-parallel subspace clustering, there have been pursued two opposite basic techniques for searching subspaces, namely (a) top-down search (Aggarwal et al. 1999) and (b) bottom-up search (Agrawal et al. 1998).

-

(a)

The rationale of top-down approaches is to determine the subspace of a cluster starting from the full-dimensional space. This is usually done by determining a subset of attributes for a given set of points—potential cluster members—such that the points meet the given cluster criterion when projected onto the corresponding subspace.

Obviously a main dilemma is that when determining the subspace of a cluster, we need to know at least some of the cluster members. On the other hand, in order to determine cluster membership, we need to know the subspace of the cluster. To escape from this circular dependency, most of the top-down approaches rely on a rather strict assumption, which has been called the locality assumption (Kriegel et al. 2009). It is assumed that the subspace of a cluster can be derived from the local neighborhood of the cluster center or its members. In other words, it is assumed that even in the full-dimensional space, the subspace of each cluster can be learned from the local neighborhood of the cluster. Other top-down approaches that do not rely on the locality assumption use random sampling as a heuristic in order to generate a set of potential cluster members.

-

(b)

For a bottom-up search, we need to traverse the exponential search space of all possible subspaces of the data space. This is strongly related to the search space of the frequent item set mining problem in analysis of market baskets in transaction databases (Agrawal and Srikant 1994).

In our setting, each attribute represents an item and each subspace cluster is a transaction of the items representing the attributes that span the corresponding subspace. Finding item sets with a frequency of at least 1 then relates to finding all combinations of attributes that constitute a subspace containing at least one cluster.Footnote 1

Let us note that there are bottom-up algorithms that do not use an Apriori-like search, but instead apply other heuristics, such as randomization or greedy-search strategies.

Subspace clustering in a narrower sense pursues the goal to find all clusters in all subspaces of the entire feature space. This goal obviously is defined to correspond to the bottom-up technique used by these approaches, based on some anti-monotonic property of clusters allowing the application of the Apiori search strategy.Footnote 2 The pioneer approach to finding all clusters in all subspaces coining the term ‘subspace clustering’ for this specific task has been clique (Agrawal et al. 1998). Numerous variants have been proposed, e.g., by Cheng et al. (1999), Nagesh et al. (2001), Kailing et al. (2004a), Assent et al. (2007, 2008), Moise and Sander (2008), Müller et al. (2009a), Liu et al. (2009).

Like for frequent itemset mining, one can question the original problem formulation of finding ‘all clusters in all subspaces’, as by retrieving a huge and highly redundant set of clusters the result will not be very useful or insightful. Subsequent methods therefore often concentrated on possibilities of concisely restricting the resulting set of clusters by somehow assessing and reducing the redundancy of clusters, for example to keep only clusters of highest dimensionality. It also should be noted that the statistical significance of subspace clusters (as defined by Moise and Sander 2008), is not an anti-monotonic property and hence does in general not allow for Apriori-like bottom-up approaches finding only meaningful clusters.

2.2 Ensemble clustering

Ensemble methods are most well-known for boosting performance in classification tasks. In the area of supervised learning, combining several self-contained predicting algorithms to an ensemble to yield a better performance than any of the base predictors, is backed by a sound theoretical background (Hansen and Salamon 1990; Dietterich 2000, 2003; Valentini and Masulli 2002; Brown et al. 2005).

In short, a predictive algorithm can suffer from several limitations such as statistical variance, computational variance, and a strong bias. Statistical variance describes the phenomenon that different prediction models result in equally good performance on training data. Choosing arbitrarily one of the models can then result in deteriorated performance on new data. Voting among equally good classifiers can reduce this risk. Computational variance refers to the fact, that computing the truly optimal model is usually intractable and hence any classifier tries to overcome computational restrictions by some heuristic. These heuristics, in turn, can lead to local optima in the training phase. Obviously, considering multiple random starting points reduces the risk of ending up in the wrong local optimum.

A restriction of the space of hypotheses a predictive algorithm may create is referred to as bias of the algorithm. Usually, the bias allows for learning an abstraction and is, thus, a necessary condition of learning a hypothesis instead of learning by heart the examples of the training data (the latter resulting in random performance on new data). However, a strong bias may also hinder the representation of a good model of the true laws of nature (or other types of characteristics of the data set at hand) one would like to learn. A weighted sum of hypotheses may then expand the space of possible models.

To improve over several self-contained classifiers by building an ensemble of those classifiers requires the base algorithms being accurate (i.e., at least better than random) and diverse (i.e., making different errors on new instances). It is easy to understand why these two conditions are necessary and also sufficient. If several individual classifiers are not diverse, then all of them will be wrong whenever one of them is wrong, as nothing would be gained by voting over wrong predictions.

On the other hand, if the errors made by the classifiers are uncorrelated, more individual classifiers may be correct while some individual classifiers are wrong. Therefore, a majority vote by an ensemble of these classifiers is more likely to be correct. More formally, suppose an ensemble consisting of k hypotheses, and the error rate of each hypothesis is equal to a certain p<0.5 (assuming a dichotomous problem), though errors occur independently in different hypotheses. The ensemble will be wrong, if more than k/2 of the ensemble members are wrong. Thus the overall error rate \(\bar{p}\) of the ensemble is given by the area under the binomial distribution, where k≥⌈k/2⌉, that is for at least ⌈k/2⌉ hypotheses being wrong:

The overall error-rate rapidly decreases for increasingly larger ensembles.

In the unsupervised task of clustering, although a fair amount of approaches has been proposed (see Ghosh and Acharya 2011, for an overview), the theory for building ensembles is still not fully developed.Footnote 3 Improvement by application of ensemble techniques has been demonstrated empirically, though. The influence of diversity on ensemble clustering has been acknowledged and studied by Kuncheva and Hadjitodorov (2004), Hadjitodorov et al. (2006), Hadjitodorov and Kuncheva (2007).

Existing approaches have concentrated on creating diverse base clusterings and then combining them somehow to a unified single clustering. The approaches differ in (a) how to create diversity and (b) how to combine different clusterings.

-

(a)

For obtaining diversity, Strehl and Ghosh (2002) discuss (i) non-identical sets of features, (ii) non-identical sets of objects, and (iii) different clustering algorithms.

Clearly, the first of these strategies is related to subspace clustering. It has been pursued in different ensemble clustering approaches (Fern and Brodley 2003; Topchy et al. 2005; Bertoni and Valentini 2005). Usually, however, the projections used here are random projections and not different clusters are sought in different subspaces but true clusters are supposed to be more or less equally apparent in different random projections. It is probably interesting to account for the possibility of different, yet meaningful, clusters in different subspaces. For example, Fern and Brodley (2003) are aware of possibly different clusters existing in different subspaces. Nevertheless, their approach aims at a single unified clustering solution, based on the ensemble framework of Strehl and Ghosh (2002).

-

(b)

For meaningfully combining different clustering solutions, the other dominant question in research on clustering ensembles is how to derive or measure the correspondence between different clustering solutions, see e.g. Topchy et al. (2004, 2005), Long et al. (2005), Fred and Jain (2005), Caruana et al. (2006), Domeniconi and Al-Razgan (2009), Gullo et al. (2009b), Hahmann et al. (2009), Singh et al. (2010). The correspondence between different clusterings is a problem not encountered in classification ensembles where the same class always gets the same label. In clustering, the correspondence between labels assigned by different instances of a clustering learner is to discover by some mapping procedure which makes part of the variety of solutions to ensemble clustering. From the point of view of subspace clustering, this label correspondence problem is interesting as there are no suitable automatic evaluation procedures for possibly highly complex and overlapping clusters over different subspaces.

It is our impression that these two topics in research on ensemble clustering directly relate to certain questions discussed in subspace clustering:

-

(a)

A lesson research in ensemble clustering may want to learn from subspace clustering could be that diversity of clusterings could be a worthwhile goal in itself. We should differentiate here between significantly differing clusterings and just varying yet similar (i.e., correlated) clusterings (Li and Ding 2008; Azimi and Fern 2009). We believe, however, that it can be meaningful to unify the latter by some sort of consensus while it is in general not meaningful to try to unify substantially different clusterings.

-

(b)

As we have seen above (Sect. 2.1), redundancy is a problem in traditional subspace clustering. Possibly, subspace clustering can benefit from advanced clustering diversity measures in ensemble clustering (Strehl and Ghosh 2002; Fern and Brodley 2003; Hadjitodorov et al. 2006; Gionis et al. 2007b; Fern and Lin 2008). These measures, however, are usually based on some variant of pairwise mutual information where the overlap of clusters (i.e., the simultaneous membership of some subsets of objects in different clusters) is a problem.

Interestingly, there have been recent attempts to combine techniques from both fields, called ‘Subspace Clustering Ensembles’ (Domeniconi 2012) or ‘Projective Clustering Ensembles’ (Gullo et al. 2009a, 2010, 2011).

2.3 Alternative (or: constraint) clustering

While in classification (supervised learning) a model is learned based on complete information about the class structure of a data set, and in clustering (cast as unsupervised learning) a model is fit to a data set without using prior information, semi-supervised clustering is using some information.

For example, some objects may be labeled, and this class information is used to derive must-link constraints (objects with same labels should end up in the same cluster) or cannot-link constraints (objects with different class labels should be separated into different clusters). In a weaker setting, we could treat these as should-link and should-not-link constraints and only demand a solution to satisfy a certain percentage of these constraints. However, constraint clustering does come in many variants (e.g. Klein et al. 2002; Bade and Nürnberger 2008; Basu et al. 2008; Davidson and Ravi 2009; Lelis and Sander 2009; Zheng and Li 2011).

Interesting in our context is one specific variant of constraint clustering, which imposes a constraint of diversity, or non-redundancy, on the results. In this case, the constraints are based on a given clustering but in an opposite way to what is the common use of class labels. Objects that are clustered together in the given clustering should not be clustered together in a new clustering. The clustering process should result in the discovery of different clustering solutions. Hence this variant is known as alternative clustering. In the algorithmic variants, these different solutions can be derived sequentially or simultaneously (some variants have been discussed, e.g., by Gondek and Hofmann 2004, 2005; Bae and Bailey 2006; Davidson and Qi 2008; Jain et al. 2008; Davidson et al. 2010; Niu et al. 2010; Dang and Bailey 2010; Dang et al. 2012).

The motivation behind these approaches is that some results may already be known for a specific data set yet other results should be obtained that are new and interesting (cf. the classical definition of knowledge discovery in data as ‘the non-trivial process of identifying valid, novel, potentially useful, and ultimately understandable patterns in data’ by Fayyad et al. (1996)). Reproducing known or redundant patterns by clustering algorithms does not qualify as identifying novel patterns. A problem could be that the already known facts are dominant in a data set. The idea is, thus, to constrain some clustering algorithm by a set of patterns or links between objects that are not to identify. As Gondek and Hofmann (2005) put it: ‘users are often unable to positively describe what they are looking for, yet may be perfectly capable of expressing what is not of interest to them’.

Some of these approaches again use ensemble techniques. Here, however, we are more interested in the relationship between these approaches and the area of subspace clustering. Hence, for our context, the interesting idea in this area is to use different subspaces as one possibility to find different clustering solutions (Qi and Davidson 2009). As these approaches seek diversity usually constrained by non-redundancy, clearly subspace clustering research tackling the high redundancy level of subspace clusters can learn from these approaches.

Alternatively, allowing a certain degree of redundancy can be meaningful, as in turn can be learned from subspace clustering. In different subspaces, one subset of objects could belong to two different but meaningful clusters and hence increase the redundancy level of these clusters without rendering the report of both overlapping clusters meaningless. Indeed, considerations in this direction can actually be found in the research area of subspace clustering (Günnemann et al. 2009). Also on part of alternative clustering research it has been conceded that it may be desirable to enable the preservation of certain already known properties of known concepts while seeking different clusters (Qi and Davidson 2009).

2.4 Multiview clustering

There are methods of clustering (and other data mining tasks) that treat different subsets of attributes or different data representations separately. The intuition of this separation could be a semantic difference of these subsets such as color features versus texture features of images. Learning distances in the combined color-texture space may easily end up being a completely meaningless effort. Hence, the different representations are treated separately as they are semantically different. Examples for this idea are so called ‘multi-represented clustering’ approaches or distance-learning in multiple views (e.g. Kailing et al. 2004b; Achtert et al. 2005, 2006c; Kriegel et al. 2008; Horta and Campello 2012). Especially in the setting of ‘co-learning’, i.e., learning one concept simultaneously using different sources of information, the goal is here to find one clustering (or, more general, one similarity measure) guided by the information from different (and separated) subspaces (Kumar and Daumé 2011; Kriegel and Schubert 2012). This work has its roots in the semi-supervised concept of co-training (Blum and Mitchell 1998) and their compatibility-assumption. While the transfer to the unsupervised setting of clustering has often been named ‘multi-represented’ clustering, the name ‘multi-view’ clustering was used for such approaches as well (Bickel and Scheffer 2004; Sridharan and Kakade 2008; Chaudhuri et al. 2009). There are even approaches that connect this idea with the idea of having constraints (see Sect. 2.3) that can guide the distance-learning (Yan and Domeniconi 2006). Let us note that, for this general approach of learning one (combined) result based on several representations, strong connections to ensemble clustering (Sect. 2.2) could be pointed out. Essentially, the theory of ensemble learning can explain why ‘co-learning’ approaches in general work very well.

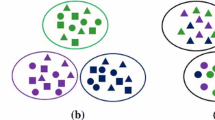

However, in the context of our study on the blind men and the elephant, we are much more interested in the treatment of different aspects of truth and in deriving different results that are potentially equally valid. This has also been an important motivation for those multiview clustering approaches that are technically somewhat similar to multi-represented clustering but do not aim at deriving a combined solution from the different representations but to find actually different solutions, where the difference could be explained in light of the underlying different representations. This principle is illustrated in Fig. 1: different feature subsets are related to different concepts. As a consequence, it makes perfectly sense to find different clustering solutions in (semantically) different subspaces. If we consider the consensus only, we lose information and only see the obvious clusters in this case.

In Fig. 2, we give some examples for this principle, taken from the ALOI data (Geusebroek et al. 2005), where each object is represented by a multitude of different images, taken from different angles, with different illumination conditions and so on. All images from the same object form most naturally a cluster. Aside from this basic ground truth, in image data it can be sensible to cluster objects with similar shape but different color or vice versa. The examples in Figs. 2(a) and 2(b) illustrate these two most straightforward cases. However, sub-clusters, super-clusters, and combinations of parts of the images from different objects can very well also be seen as forming sensible clusters (Kriegel et al. 2011c). Consider, e.g., the possibly meaningful separation of images of the same object seen from different angles as illustrated in Fig. 2(c). Front and back of the same object can be substantially different and in fact relate to meaningful concepts.

Examples for different meaningful concepts based on different aspects (subspaces, views) of objects. Concepts could group together different objects or separate on object into different clusters. Examples are taken from the ALOI data set (Geusebroek et al. 2005)

Another popular example is the clustering of faces. Clusters of faces of the same person are of course perfectly meaningful. But so are clusters of the same emotional expression on faces of different persons, or clusters of the same orientation of the faces of different persons.

The idea of multiview clustering in this notion (e.g. Cui et al. 2007; Jain et al. 2008), i.e., not aiming at a combination and at eventually learning one single concept but in allowing for different concepts in different views, is therefore to enforce clustering results to reside in different but not previously defined subsets of the attributes, i.e., in different ‘views’ of the data space. In many cases there is no semantically motivated separation of different subsets of attributes available (or, at least, not a single one) but the different representations are found and separated during the clustering process. For example, given a first clustering residing in some subspace, a second clustering result has the additional constraint of being valid in a subspace that is perpendicular to the subspace of the first clustering.

Multiview clustering can be seen as a special case of seeking alternative clusterings, with the additional constraint of the orthogonality of the subspaces (Dang et al. 2012). This is also highlighted by the example of clustering faces, where the cluster of images of the same person could be seen as trivial and uninteresting. The related similarity should therefore not mislead the search for clusters of similar emotional expression. Multiview clustering can also be seen as a special case of subspace clustering allowing maximal overlap yet seeking minimally redundant clusters by accommodating different concepts (as proposed by Günnemann et al. 2009).

Because of these close relationships, we do not go into more detail here. Let us note, though, that these approaches shed light on the observation learned in subspace clustering that highly overlapping clusters in different subspaces need not be redundant nor meaningless. That is, certain subsets of objects may meaningfully belong to several clusters simultaneously.

2.5 Summary

In this section on different variants of clustering, we shortly surveyed the research areas of subspace clustering, ensemble clustering, alternative clustering, and multiview clustering. We restricted our survey to the essential parts in order to highlight where similar problems are touched in different areas. As we summarize in Table 1, the different families of clustering algorithms differ in the definition of their goal and, accordingly, face somewhat different problems. However, it is also obvious from this synopsis, that their goals and their problems are related. We figure that these families, trying to escape their specific problems, move on towards a common understanding of the problem.

Based on this overview, we identify as possible topics for the discussion between different areas:

-

1.

How to treat diversity of clustering solutions? Should diverse clusterings always be unified? Allegedly, they should not—but under which conditions is a unification of divergent clusterings meaningful and when is it not?

-

2.

Contrariwise, can we learn also from diversity itself? If in an ensemble of several clusterings in several arbitrary random subspaces, one clustering is exceptionally different from the others, it will be outnumbered in most voting procedures and lost. Could it not be especially interesting to report? Speaking in the figure of the elephant discovery: the tip of the elephant’s tail, or its tusks, though seemingly insignificant when compared to the belly, may be well worth being described and appreciated.

-

3.

How to treat redundancy of clusters, especially in the presence of overlap between clusters? When does a cluster qualify as redundant w.r.t. another cluster, and when does it represent a different concept although many objects are part of both concepts? We have seen research on subspace clustering more and more trying to get rid of too much redundancy in clustering results while research on alternative clustering recently tends to allow some degree of redundancy. May there be a point where both research directions meet?

-

4.

How to assess similarity between clustering solutions or overlapping clusters within a single clustering? Again, the presence of overlap between clusters increases the complexity of a mapping of clusters where the label correspondence problem makes it already non-trivial.

2.6 Discussion—clustering evaluation considering multiple ground truths?

While we pointed out several questions for discussion, here we set out to discuss one central question: if we admittedly can face several valid solutions to the clustering problem, such as in different subspaces, how do we evaluate and compare these results? This problem, in our opinion, is somehow forming the basis of all dispute between these areas, and also of evaluation of clusterings in general: the possibility that more than one observation or rather clustering solution could be true and worthwhile, as the story of the blind men and the elephant is setting out to teach us. This is truly a fundamental problem not only in clustering. The presence of multiple ground truths has been touched by various data mining areas, as surveyed by Färber et al. (2010). Consequently, it is a problem for clustering evaluation in general that classification data with a single layer of class labels is not necessarily the best choice for testing a new clustering algorithm.

We conjecture that it is even an inherent flaw in design of clustering algorithms if the researcher designing the algorithm evaluates it only w.r.t. the class labels of classification data sets. It is an important difference between classifiers and clustering algorithms that most classification algorithms aim at learning borders of separation of different classes while clustering algorithms aim at grouping similar objects together. Hence the design of clustering algorithms oriented towards learning a class structure may be strongly biased in the wrong direction. The clustering algorithm may be designed to overfit on the class-structure of some data sets.

That is, in classification we are explicitly looking for structure in the data that aids us in predicting the class labels. In unsupervised, exploratory data mining on the other hand, we are after learning about the structure of the data in general—regardless of whether this helps us to predict. As such, evaluating unsupervised methods on how well their results predict class labels only makes sense when the data and class labels at hand correlate with the type of structure the method can discover.

In fact, in general it can be highly desirable if a clustering algorithm detects structures that deviate considerably from annotated classes. If it is a good and interesting result, the clustering algorithm should not be punished for deviating from the class labels. The judgement on new clustering results, however, requires difficult and time-consuming validation based on external domain-knowledge beyond the existing class-labels. Reconsider Fig. 2: all the pairs of images from the ALOI data could sensibly be part of the same cluster as well as being separated in meaningful different clusters. The MultiClust workshop series has seen some first steps in these directions, theoretically (Färber et al. 2010) as well as practically (Kriegel et al. 2011c).

While there is no satisfying solution to this problem around, let us point out with a broader survey, that this problem of evaluation when there exist multiple ‘ground truths’ has been acknowledged in other research areas as well (but did not find a solution in these other areas either):

-

As an example concerned with traditional clustering, Chakravarthy and Ghosh (1996) note the difference between clusters and classes though they do not take it into account for the evaluation. The clustering research community overall did not pay much attention, however, on this rather obvious possibility. For example, although the observation of Chakravarthy and Ghosh (1996) is quoted and used for motivating ensemble clustering in Strehl and Ghosh (2002), the evaluation in the latter study uses uncritically classification benchmark data sets—including the Pen Digits data set (Frank and Asuncion 2010) which is known to contain several meaningful clustering solutions, as elaborated by Färber et al. (2010).

-

In the research on classification, the topic of multi-label classification is highly related. Research on this topic is concerned with data where each object can be multiply labeled, i.e., belong to different classes simultaneously. An overview on this topic has been provided by Tsoumakas and Katakis (2007). In this area, the problem of different simultaneously valid ground truths is usually tackled by transforming the complex, nested or intersecting class labels to flat label sets. One possibility is to treat each occurring combination of class labels as an artificial class in its own for training purposes. Votes for this new class are eventually mapped to the corresponding original classes at classification time, i.e., if some objects could belong to either class A, class B, or both, in fact three classes are considered, namely A′, B′, and (A′,B′). (A′,B′) could actually be defined (in this simple case) as A∩B, while A′=A∖B and B′=B∖A which shows that the typical treatment of the class labels in this area is quite heuristically deviating from the actual ground truth. There are, of course, many other possibilities of creating a flat set of class labels, see, e.g., work of Schapire and Singer (2000), Godbole and Sarawagi (2004), Boutell et al. (2004) or Tsoumakas and Katakis (2007). It is, however, remarkable that none of these methods treat the multi-label data sets in their full complexity. This is only achieved when algorithms are adapted to the problem, as, e.g., by Clare and King (2001), Thabtah et al. (2004). But even if training of classifiers takes the complex nature of a data set into account, the other side of the coin, evaluation, remains traditional. For clustering, no comparable approaches exist yet.

-

As a special case of multi-label classification let us consider hierarchical classification (see e.g. Koller and Sahami 1997; McCallum et al. 1998; Chakrabarti et al. 1998; Barutcuoglu et al. 2006; Cai and Hofmann 2004; Zimek et al. 2010; Fürnkranz and Sima 2010; Silla and Freitas 2011). Here, each class is either a top-level class or a subclass of some other class or several other classes. Overlap among different classes is present only between superclass and its subclasses (i.e., comparing different classes vertically), but not between classes on the same level of the hierarchy (horizontally), i.e., if we have classes A, B, and (A,B), it holds that A⊆(A,B) and B⊆(A,B) and A∩B=∅. Evaluation of approaches for hierarchical classification is usually based on one specific level of choice, corresponding to a certain granularity of the classification task.

-

Hierarchical problems have also been studied in clustering and actually represent the majority of classical work in this area (Sneath 1957; Wishart 1969; Sibson 1973; Hartigan 1975)—see also the overview by Kriegel et al. (2011a). Recent work includes papers by Ankerst et al. (1999), Stuetzle (2003), Achtert et al. (2006b, 2007a, 2007b). There are only some early approaches to evaluate several or even all levels of the hierarchy (Fowlkes and Mallows 1983), or some comparison measures to compare a clustering with the so called cophenetic matrix (see Jain and Dubes 1988, pp. 165–172). The cluster hierarchy can be used to retrieve a flat clustering if a certain level of the hierarchy is selected, for example at a certain density level (Kriegel et al. 2011a).

-

In the areas of bi-clustering or co-clustering (Madeira and Oliveira 2004; Kriegel et al. 2009) it is also a common assumption in certain problem settings that one object can belong to different clusters simultaneously. Surprisingly, although methods in this field have been developed for four decades (initiated by Hartigan 1972), there has not been described a general method of evaluation of clustering results in this field either.

What is usually done is a (manual) inspection of clusters, reviewing them for prevalent representation of some meaningful concept. We could figure this as ‘evaluation by example’. There are attempts to formalize this as ‘enrichment’ w.r.t. some known concept.

-

This technique is automated to a certain extent in biological analysis of gene or protein data.

-

A couple of methods has been proposed in order to evaluate clusters retrieved by arbitrary clustering methods (Ashburner et al. 2000; Zeeberg et al. 2003; Al-Shahrour et al. 2004). These methods assume that a class label is assigned to each object of the data set (in the case of gene expression data to each gene/ORF), i.e., a class system is provided. In most cases, the accuracy and usefulness of a method is proven by identifying sample clusters containing ‘some’ genes/ORFs with functional relationships, e.g. according to Gene Ontology (GO).Footnote 4 For example, FatiGO (Al-Shahrour et al. 2004) tries to judge whether GO terms are over- or under-represented in a set of genes w.r.t. a reference set.

-

More theoretically, cluster validity is here measured by means of how good they match the class system where the class system exists of several directed graphs, i.e., there are hierarchical elements and elements of overlap or multiple labels. However, examples of such measures include precision/recall values, or the measures reviewed by Halkidi et al. (2001). This makes it methodically necessary to concentrate the efforts of evaluation at one set of classes at a time. In recent years, multiple class-driven approaches to validate clusters on gene expression data have been proposed (Datta and Datta 2006; Gat-Viks et al. 2003; Gibbons and Roth 2002; Lee et al. 2004; Prelić et al. 2006).

-

Previous evaluations do in fact report many found clusters to not obviously reflect known structure. This is possibly due to the fact that the used biological knowledge bases are very incomplete (Clare and King 2002). Others, however, report a clear relationship between strong expression correlation values and high similarity and short distance values w.r.t. distances in the GO-graph (Wang et al. 2004) or a relationship between sequence similarity and semantic similarity (Lord et al. 2003).

-

In the context of multiple clusterings and overlapping clusters—as are expected in the specialized clustering areas we surveyed above, like subspace clustering, ensemble clustering, alternative or multiview clustering—it becomes even more important to find methods of evaluating clusterings w.r.t. each other, w.r.t. existing knowledge, and w.r.t. their usefulness as interpreted by a human researcher.

Though the problem of overlapping ground truths, and hence the impossibility of using a flat set of class labels directly, is pre-eminent in such research areas as subspace clustering (Kriegel et al. 2009, 2012; Sim et al. 2012; Assent 2012), alternative clustering (Qi and Davidson 2009), or multiview clustering (Cui et al. 2007), it is, in our opinion, actually relevant for all non-naïve approaches that set out to learn something new and interesting about the world—where ‘naïve’ approaches would require the world to be simple and the truth to be one single flat set of propositions only. Also, instead of sticking to one evaluation measure only, visual approaches and approaches involving several measures of similarities between different partitions of the data (e.g., Achtert et al. 2012) seem more promising, although a lot of work needs to be done in the area of clustering evaluation measures (even when given a ground truth—let alone several truths). For some pointers here see, e.g., Halkidi et al. (2001), Campello (2010), Vendramin et al. (2010).

In summary, we conclude that (i) the difference between clusters and classes, and (ii) the existence of multiple truths in data (i.e., overlapping or alternative—labeled or unlabeled—natural groups of data objects) are important problems in a range of different research areas. These problems have been observed partly since decades yet they have not found appropriate treatment. Especially for clustering and the specialized areas we have surveyed, this is the core problem. The point we are making in this position paper is, however, that this is a common problem where researchers working in different areas should probably not only focus on their own version of the problem but learn from approaches taken in other areas, since we eventually want to describe the one elephant. While there probably is no satisfactory general solution for describing elephants—there is no free lunch, after all—we argue for increasing, within these highly related fields, the awareness that we are investigating highly similar problems.

3 Pattern mining—considering the same elephant?

Let us next consider how clustering relates to that other branch of exploratory data mining, namely pattern mining. Whereas in clustering research we are after identifying sets of objects that are highly similar, in pattern mining we are typically after discovering recurring structures. While at first glance these goals and the accompanying problem definitions seem quite different, in the following we will argue that they in fact have quite a lot in common and that researchers in the two fields can learn much from each other. As sketched in Sect. 2.1, ‘subspace clustering’ and pattern mining share common roots, as well as a number of technical approaches. It seems, however, important to us to note that clustering, esp. subspace clustering, and pattern mining, are currently all considering problems similar to those that have been studied (or, sometimes, even solved) by one of the others.

As such, it seems that the blind men of pattern mining and those of subspace clustering are not only examining the same elephant but also the same parts. Alas, as both fields do not share a common language, much of their work appears unrelated to each other—or when it actually does seem related, it is not easily understood from the other’s perspective. Therefore, we will here try to bridge the linguistic gap. However, let us note that we do not argue that clustering, subspace clustering, or pattern mining are one and the same; there are of course genuine differences between the fields. Here, however, we emphasize the similarities, as well as those points in research from which researchers in the different fields might learn from each other’s insights and mutually benefit from a common language.

Let us first coarsely sketch the two fields. Pattern mining, to start with, is concerned with developing theory and algorithms for identifying groups of attributes and some selection criteria on those; such that the most ‘interesting’ attribute-value combinations in the data are returned. That is, by that selection we identify objects in the data that somehow stand out. The prototypical example is supermarket basket analysis, in which by frequent itemset mining we identify items that are frequently sold together—with the infamous beer and nappies as an example of an ‘interesting’ pattern.

In contrast to models, patterns describe only part of the data. While there are many different formulations for pattern mining, we are here particularly interested in patterns that describe interesting subsets of the database. That is, we are concerned with theory mining. Formally, this task has been described by Mannila and Toivonen (1997) as follows. Given a database \(\mathcal{D}\), a language \(\mathcal{L}\) defining subsets of the data, and a selection predicate q that determines whether an element \(\phi \in \mathcal{L}\) describes an interesting subset of \(\mathcal{D}\), the task is to find

or in other words, the task is to find all interesting subsets.

Clearly, this formulation allows us a lot of freedom; the choice of \(\mathcal{L}\) and q together determine what we are after, and what can be discovered in the data. For the traditional frequent itemset mining setting, the pattern language \(\mathcal{L}\) consists of all possible subsets of all items \(\mathcal{I}\) in the data, and the selection predicate q corresponds to checking whether this combination of values occurs sufficiently frequently, or has a sufficient support. Typically, and different from clustering approaches, the selection predicate q does not specify much about how the data is distributed within the selected sub-part of the data.

Clustering, on the other hand, in general aims at finding groups of similar objects in a database. Aside from algorithmic variations in the process of identifying these groups, the major differences between various clustering approaches are in the actual meaning of ‘similar’. If we can agree that, in an abstract sense, both pattern mining and (subspace) clustering identify sub-parts of the data, the key aspect of clustering is that, by requiring similarity, the ‘q’ here specifies a requirement on the distribution within this part of the data. Further, we note that a key difference between the two is how we (typically) define what sub-parts of the data we want to find; whether these are required to be tall enough (i.e., frequency), or sufficiently similar. Defining similarity, however, is not easy—especially in high-dimensional data the notion of similarity is not a trivial one.

One obvious difference between clustering and pattern mining we need to mention is that, in traditional pattern mining one typically ‘simply’ wants to find ‘all’ interesting sub-parts of the data, while in clustering one often aims at finding a partitioning of the data such that within each part similarity is high. In clustering, groups of objects, or rows, of the data are the first class citizens, whereas in pattern mining any sub-matrix of the data is what we consider interesting—not necessarily full rows. This more general view on sub-matrices is what it shares with subspace clustering.

We argue that research in subspace clustering, while having a common origin with pattern mining, and even sharing some early ideas, has seen significantly different developments than those made in pattern mining. Interestingly, both fields now face problems already studied by the other. In this section we will survey what main developments both fields have seen, and in particular aim to point out those recent developments in pattern mining from which research on subspace clustering can possibly benefit, and vice versa. For example, the explosion in numbers of results, and reducing their redundancy, are currently open problems in subspace clustering (although some papers already addressed this issue, we will discuss that below) while this has recently has been studied in detail by the pattern mining community. On the other hand, the notion of alternative results, as well as the generalization beyond binary data, are topics where pattern miners may draw much inspiration from recent work in (subspace) clustering or the other specialized clustering approaches we have discussed above (Sect. 2).

The goal of this section is to identify a number of developments in these fields that should not go unnoticed; we are convinced that solutions for pattern mining problems are applicable in subspace clustering, and vice versa.

3.1 Patterns—and the curse of dimensionality

The so-called ‘curse of dimensionality’ is often credited for causing problems in similarity computations in high-dimensional data, and, hence, is given as motivation for specialized approaches such as ‘subspace clustering’ (Kriegel et al. 2009). Let us consider two aspects of the ‘curse’ that are often confused in the literature: (i) the concentration effect of L p -norms and (ii) the presence of irrelevant attributes.

Regarding the concentration effect (i), the key result of Beyer et al. (1999) states that, if the ratio of the variance of the length of any point vector \(\vec{x} \in \mathbb{R}^{d}\) (denoted by \(\|\vec{x}\|\)) with the length of the mean point vector (denoted by \(E [ \|\vec{x}\| ]\)) converges to zero with increasing data dimensionality, then the proportional difference between the farthest-point distance D max and the closest-point distance D min (the relative contrast) vanishes, i.e., all distances concentrate around a mean, and look alike.

This observation is often quoted for motivating subspace clustering as a specialized procedure. It should be noted, though, that the problem is neither well enough understood (see e.g. François et al. 2007) nor actually relevant when the data follows different, well separated distributions (Bennett et al. 1999; Houle et al. 2010; Bernecker et al. 2011).

Regarding the separation of clusters, the second problem (ii) is far more important for subspace clustering: In order to find structures describing phenomena of the world some researcher or businessman is interested in, abundances of highly detailed data are collected. Among the features of a high-dimensional data set, for any given query object, many attributes can be expected to be irrelevant to describing that object. Irrelevant attributes can easily obscure clusters that are clearly visible when we consider only the relevant ‘subspace’ of the dataset. Hence, they interfere with the performance of similarity measures in general, but in a far more fundamental way for clustering. The relevance of certain attributes may differ for different groups of objects within the same data set. Thus, many subsets of objects are defined only by some of the available attributes, and the irrelevant attributes (‘noise’) will interfere with the efforts to find these subsets. This second problem is actually the true motivation for designing specialized methods to look for clusters in subspaces of a dataset.

Pattern mining, on the other hand, typically does not suffer at all from this aspect of the curse of dimensionality. This is because most interestingness measures do not consider similarity, but rather compute a statistic over the selected objects; frequency for instance, is a linear statistic over objects, and when calculating the frequency of a pattern we simply have to count which objects satisfy the constraints (e.g., which transactions contain all the items identified by an itemset). Alternatively, many recent interestingness measures consider patterns that are more surprising given a probabilistic background model (Wang and Parthasarathy 2006; Webb 2007; Tatti 2008; Kontonasios and De Bie 2010; Mampaey et al. 2011); a simple example is lift as defined by Brin et al. (1997), which considers the observed frequency of a pattern, and compares it to the expected frequency under the independence model.

As long as the employed interestingness measure does not define a comparison within the selected sub-part of the data, which is where the curse stems from, there is no fundamental problem in pattern mining w.r.t. high-dimensional data. However, this is not to say that high-dimensional data does not come with any problems. Computationally, things naturally become much more complex; if anything by the simple fact that the number of possible subsets grows exponentially—which is actually the problem that Bellman (1961), more generally and in a more abstract manner, originally described coining the figurative term ‘curse of dimensionality’.

Which leads us to the key problem in traditional pattern mining, the so-called pattern explosion. In short, whenever we set a high threshold on interestingness (e.g., a high frequency) we will get returned only few patterns. These patterns are typically not very interesting, as they convey common knowledge; the super-marked manager long since knew that many people that buy bread also buy butter. However, whenever we lower the threshold, we very quickly will find ourselves swamped with extremely many patterns. All of these patterns have passed our interestingness test, and are hence potentially interesting. However, many of the returned patterns will be variations of the same theme, essentially convey the same message, but including or excluding an extra clause (i.e., item). It has proven to be rather difficult to predict at which frequency threshold the pattern explosion hits (Geerts et al. 2005; Boley and Grosskreutz 2008).

When, and how badly, the pattern explosion hits is determined by the characteristics of the data. For dense data, it is often already impossible to mine frequent itemsets below trivially high support thresholds without ending up with unreasonably many results; making it inherently difficult to analyze such data with traditional frequent itemset mining techniques. The link to high-dimensional data here being that for higher number of dimensions, there are more possibilities to create variations of a pattern. And hence, for higher dimensional data, we are likely to discover even more (redundant variations of) patterns.

In short, in pattern mining, controlling the number of results, and the redundancy within has been a major research focus. We will discuss the here relevant advances in Sect. 3.4.

3.2 Common roots of pattern mining and subspace clustering

In general, in subspace clustering similarity is defined in some relation to subsets or combinations of attributes (dimensions) of database objects. Hence, a clustering with n clusters for a database \(\mathcal{D}\times\mathcal{A}\), with the set of objects \(\mathcal {D}\) and with the full set of attributes \(\mathcal{A}\), can be seen as a set \(\mathcal{C}= \{(\mathcal{C}_{1},\mathcal{A}_{1}),\ldots,(\mathcal{C}_{n},\mathcal {A}_{n})\}\), where \(\mathcal{C}_{i} \subseteq\mathcal{D}\) and \(\mathcal{A}_{i} \subseteq\mathcal{A}\), i.e., a cluster is defined w.r.t. a set of objects and w.r.t. a set of attributes (a.k.a. a subspace). Which set of attributes is most appropriate to describe which cluster is dependent on the cluster members. Membership to a cluster is dependent on the similarity-measure which, in turn, depends on the selected attributes (or their combination). This circular dependency is the ultimate reason responsible for subspace clustering being an even harder problem than just clustering.

Subspace clustering algorithms are typically categorized into two groups; in ‘projected clustering’ objects belong to at most one cluster, while ‘subspace clustering’ (in a more narrow sense) seeks to find all possible clusters in all available subspaces, allowing overlap (Kriegel et al. 2009). The distinction (and terminology) originates from the two pioneering papers in the field, namely clique (Agrawal et al. 1998) for ‘subspace clustering’ and proclus (Aggarwal et al. 1999) for projected clustering; and the two definitions allow a broad field of hybrids.

Since we are interested in the relationship between pattern mining and subspace clustering, we will let aside projected clustering and hybrid approaches and concentrate on subspace clustering in the narrower sense as defined by Agrawal et al. (1998). In this setting, subspace clustering is usually related to a bottom-up traversal of the search space of all possible subspaces, i.e., starting with all one-dimensional subspaces, two-dimensional combinations of these subspaces, three-dimensional combinations of the two-dimensional subspaces and so on, all (relevant) subspaces are searched for clusters residing therein.

Considering clique, we find the intuition of subspace clustering promoted there closely related to pattern mining. To this end, we consider frequent itemset mining (Agrawal and Srikant 1994), in which we consider binary transaction data, where transactions are sets of items A, B, C, etc. The key idea of Apriori (Agrawal and Srikant 1994) is to start with itemsets of size 1 that are frequent, and exclude all itemsets from the search that cannot be frequent anymore, given the knowledge which smaller itemsets are frequent. For example, if we count a 1-itemset containing A less than the given minimum support threshold, all 2-itemsets, 3-itemsets, etc. containing A (e.g., {A,B},{A,C},{A,B,C}) cannot be frequent either and need not be considered. While theoretically the search space remains exponential, in practice searching becomes feasible even for very large datasets.

Transferring this problem to subspace clustering, each attribute represents an item, and each subspace cluster is then an itemset containing the items representing the attributes of the subspace. This way, finding itemsets with support 1 relates to finding all combinations of attributes constituting a subspace of at least one cluster. This observation is the rationale of most bottom-up subspace clustering approaches: subspaces containing clusters are determined starting from all one-dimensional subspaces accommodating at least one cluster, employing a search strategy similar to that of itemset mining algorithms. To apply any efficient algorithm, the cluster criterion must implement a downward closure property (i.e., (anti-)monotonicity): If subspace \(\mathcal{A}_{i}\) contains a cluster, then any subspace \(\mathcal{A}_{j} \subseteq\mathcal{A}_{i}\) must also contain a cluster.

The anti-monotonic reverse implication, if a subspace \(\mathcal{A}_{j}\) does not contain a cluster, then any superspace \(\mathcal{A}_{i} \supseteq\mathcal{A}_{j}\) also cannot contain a cluster, can subsequently be used for pruning.

Clearly, this is a rather naïve use of the concept of frequent itemsets in subspace clustering. What constitutes a good subspace clustering result is defined here apparently in close relationship to the design of the algorithm, i.e., the desired result appears to be defined according to the expected result (as opposed to: in accordance to what makes sense). The tool ‘frequent itemset mining’ was first, and the problem of ‘finding all clusters in all subspaces’ has apparently been defined in order to have some new problem where the tool can be applied straightforwardly—as has been discussed by Kriegel et al. (2009, 2012), the resulting clusters are usually highly redundant and, hence, mostly useless.

This issue is strongly related to the pattern explosion we discussed above. Recently, pattern miners have started to acknowledge they have been asking the wrong question: instead of asking for all patterns that satisfy some constraints, we should ask for small, non-redundant, and high quality sets of patterns—where by high-quality we mean that each of the patterns in the set satisfies the thresholds we set on interestingness or similarity, and that the set is optimal with regard to some criterion, e.g. mutual information (Knobbe and Ho 2006a, 2006b), data description (or compression) (Vreeken et al. 2011; Kontonasios and De Bie 2010; De Bie 2011), or covered area (Geerts et al. 2004; Xiang et al. 2011).

Research on subspace clustering inherited all the deficiencies from this originally ill-posed problem. However, early research on subspace clustering as follow-ups of clique apparently also tried to transfer improvements from pattern mining. As an example, enclus (Cheng et al. 1999) uses several quality criteria for subspaces, not only implementing the downward closure property, but also an upward closure (i.e., allowing search for interesting subspaces as specializations as well as generalizations). This most probably relates to the concept of positive and negative borders known from closed frequent itemsets (Pasquier et al. 1999a). Both can be seen as implementations of the classical concept of version spaces (Mitchell 1977).

However, while pattern mining and subspace clustering share common roots, research in both fields has subsequently diverged in different directions.

3.3 Patterns, subspace clusters—and the elephant

Methods aside, there are two important notions we have to discuss before we can continue; notions that may, or may not make the two fields fundamentally different. First and foremost, what is a result? And, second, can we relate interestingness and similarity? To start with the former, in subspace clustering, a single result is defined by the Cartesian product of objects \(C \subseteq\mathcal{D}\) and attributes \(A \subseteq\mathcal{A}\). In order to be considered as a result, each of the objects in the selection should be similar to the others, over the selected attributes, according to the employed similarity function.

In order to make a natural connection to pattern mining, we adopt a visual approach; if we are allowed to re-order both attributes and objects freely, we can reorder \(\mathcal{D}\) and \(\mathcal{A}\) such that C and A define a rectangle in the data, or in pattern mining vocabulary, a tile. We give a simple overview in Fig. 3. The left-most plot shows, for some toy data, a traditional pattern bcd for which some minimal interestingness measure holds, such as minimal frequency of a value instantiation over these attributes. Note that we do not specify which rows support this pattern. Opposing, the right-most plot of the figure shows an example cluster. Here, we are reported that given some similarity measure, rows 2, 3, and 4 are particularly similar regarded over all attributes.

(left) Traditional pattern mining returns patterns, sets of attributes, that satisfy some statistic, such as minimal frequency, over all rows of the data (e.g., here bcd). That is, we are told a particular value-instantiation over attributes bcd occurs (at least) σ times—but not which rows support this pattern. (right) Traditional clustering returns object groups, or clusters, (e.g., here {2,3,4}), that satisfy some similarity constraint, over all attributes of the data. That is, we are told the group has similarity y calculated over all attributes, possibly including irrelevant ones. (center) Modern pattern mining aims at discovering tiles, or sub-matrices, that satisfy some statistical property, such as a minimal density of 1s. Likewise, subspace clustering aims at finding sub-matrices, those with a minimal similarity over the rows within the sub-matrix

In the middle plot, we show both modern pattern mining and subspace clustering. Both these branches of exploratory data mining research focus on identifying informative sub-matrices of the data. In pattern mining we are generally interested in discovering all sub-matrices, or tiles, that satisfy some statistic that captures our notion of interestingness. For example, a sub-matrix with very many 1s, or with very skewed value-instantiations. In subspace clustering this ‘statistic’ is made concrete through a similarity measure, and we are after finding all sub-matrices for which the rows show at least a certain similarity.

In recent years the notion of a tile has become very important in pattern mining (Geerts et al. 2004; Kontonasios and De Bie 2010; De Bie 2011; Gionis et al. 2004). Originally the definition of a pattern was very much along the lines of an SQL query, posing selection criteria on which objects in the data are considered to support the pattern or not. As such, beyond whether they contribute to such a global statistic, the selected objects were not really taken into account. However, we can trivially create a tile by simply selecting those objects that support the pattern, and only consider the attributes the pattern identifies. In many recent papers, however, the supporting objects are explicitly taken into account, and by doing so, patterns also naturally define tiles.

As such, both fields identify tiles, sub-parts of the data. However, both have different ways of arriving at these tiles. In pattern mining, results are typically selected by some measure of ‘interestingness’—of which support, the number of selected objects, is the most well-known example. In subspace clustering, on the other hand, we measure results by how similar the selected objects are over the considered attributes. Clearly, while this may lead to discovering rather different tiles, it is important to realize that both approaches do find tiles, and provide some statistics for the contents of each tile.

We observe that in pattern mining the selection of the objects ‘belonging’ to the pattern is very strict—and that as such those objects will exhibit high similarity over the subspace of attributes the pattern identifies. For example, in standard frequent itemset mining, transactions (i.e., objects) are only selected if they are a strict superset of the pattern at hand—and in fault-tolerant itemset mining (see, e.g., Hébert and Crémilleux 2005; Poernomo and Gopalkrishnan 2009) typically only very few attribute-value combinations are allowed to deviate from the template the pattern identifies. Linking this to similarity, in this strict selection setting, it is easy to see that for the attributes identified by the pattern, the selected objects are highly similar.

The same also holds for subgroup discovery (Wrobel 1997). Subgroup discovery is a supervised branch of pattern mining, aimed at mining patterns by which a target attribute can be predicted well. It is also known, amongst others, as contrast set mining, as correlated pattern mining, or as emerging pattern mining (see Novak et al. 2009, for an overview of the differences and similarities). The main differences between these sub-areas are geographically, as well as in what measure is employed for calculating the correlation to the target attribute. Recently, Leman et al. (2008) introduced a generalized version of subgroup discovery, where multiple target attributes are allowed.

In subgroup discovery the patterns typically strongly resemble SQL range-based selection queries, where the goal is to identify those patterns (intention) that select objects (extension) that correlate strongly to some target attribute(s). In other words, the goal is to find a pattern by which we can select those rows for which the distribution of values over the target is significantly different from the global distribution. Intuitively, the more strict the selection criteria are per attribute, the more alike the selected objects will be on those attributes.

Consequently, in the traditional sense, patterns identified as interesting by a measure using support, are highly likely to correspond to highly-similar subspace clusters, the more strict conditions the pattern defines on its attributes. The other way around, we can say that the higher the similarity of a subspace cluster, the easier it will be to define a pattern that covers the same area of the database. And, the larger this highly-similar subspace cluster is, the more likely it is that it will be discovered by pattern mining using any support-based interestingness measure. In particular for the branch of pattern mining research concerned with mining dense areas in binary matrices (Seppanen and Mannila 2004; Gionis et al. 2004; Miettinen et al. 2008), there exists a clear link to subspace clustering; sub-parts of the data with very many, or very few ones, are likely to exhibit high similarity.

Besides this link, it is interesting to consider what the main differences are. In our view, it is a matter of perspective; whether to take a truly local stance at the objects, and from within a tile, like in subspace clustering, or, whether to take a slightly more global stance and look at how we can select those objects by defining conditions on the attributes.

Let us further remark that both interestingness and similarity are very vague concepts, for which many proposals exist. A unifying theory, likely from a statistical point of view, would be very welcome. Although not yet unifying, recently Tatti and Vreeken (2011) indirectly gave an attempt in this direction. They discuss how results from possibly different methods can be compared by taking an information theoretic perspective. By transforming data mining results into sets of tiles, where the tiles essentially specify some statistics on a sub-part of the data; and subsequently constructing probabilistic maximum entropy models (Jaynes 1982) of the data based on these sets of tiles (De Bie 2011), we can use information theoretic measures to see how much information is shared between these distributions (Cover and Thomas 2006). The paper gives a proof of concept for noisy tiles in binary data. The recent paper by Kontonasios et al. (2011) gives theory for modelling real-valued data by maximum entropy, and may hence serve as a stepping stone for comparing results on real-valued data.

3.4 Advances of interest in pattern mining

In this section we discuss some recent advances in pattern mining research that may likewise be applicable for issues in subspace clustering.

3.4.1 Summarizing sets of patterns

Reducing redundancy, which we have identified as a major issue in subspace clustering (Sect. 2), has been studied for a long period of time in pattern mining. Very roughly speaking, two main approaches can be distinguished: finding those patterns that best summarize a large collection of patterns, and mining that set of patterns that together best summarize the data.

In this subsection we discuss the former, in which we find well-known examples. The main idea of this approach is that we have a set of results \(\mathcal{F}\), consisting of results that have passed the constraints that we have set, e.g. they all pass the interestingness threshold. Now, with the goal of reducing redundancy in \(\mathcal{F}\), we want to select a subset \(\mathcal{S} \subseteq \mathcal{F}\) such that \(\mathcal{S}\) contains as much information on the whole of \(\mathcal{F}\) while being minimal in size.

Perhaps the most well-known examples of this approach are closed (Pasquier et al. 1999b) and maximal (Bayardo 1998) frequent itemsets, by which we only allow elements \(X \in\mathcal{F}\) into \(\mathcal{S}\) for which no superset exists that has the same support, or no superset exists that does not meet the mining constraints, respectively. As such, for closed sets, given \(\mathcal{S}\) we can reconstruct \(\mathcal{F}\) without loss of information—and for maximal sets we can reconstruct only the itemsets, not their frequencies. Non-derivable itemsets (Calders and Goethals 2007) follow a slightly different approach, and only provide those itemsets for which their frequency cannot be derived from the rest. While the concepts of closed and maximal have been applied in subspace clustering, non-derivability has not been explored yet, to the best of our knowledge.

Reduction by closure only works well when the data are highly structured. Its usefulness deteriorates rapidly with noise. A recent improvement to this end is margin-closedness (Fradkin and Mörchen 2010; Mörchen et al. 2011), where we consider elements into the closure for which our measurement falls within a given margin. This provides strong reduction in redundancy, and higher noise resistance; we expect it to be applicable for subspace clusters. Tatti and Mörchen (2011) discuss how to determine by sampling how robustly a pattern has a particular property, such as closedness. The idea is that if the itemset remains, e.g., closed, in many subsamples of the data, it is more informative than itemsets that lose the property more easily. As a side note, this seems closely related to the concept of lifetime of a cluster within a hierarchy that has been used sometimes in cluster validation (see, e.g., Ling 1972, 1973; Jain and Dubes 1988; Fred and Jain 2005).

Perhaps not trivially translatable to subspace clusters, another branch of summarization is that of picking or creating a number of representative results. Yan et al. (2005) choose \(\mathcal{S}\) such that the error of predicting the frequencies in \(\mathcal{F}\) is minimized. Here, it may well be reasonable to replace frequency with similarity. There are some attempts in this direction, e.g. in biclustering (Segal et al. 2001). Mampaey et al. (2011) give an information theoretic approach to identifying that \(\mathcal{S}\) by which the frequencies in either \(\mathcal{F}\), or the data in general, can best be approximated. To this end, they define a maximum entropy model for data objects, given knowledge about itemset frequencies. The resulting models do capture the general structure of the data very well, without redundancy.

3.4.2 Pattern set mining

While the above-mentioned techniques do reduce redundancy, they typically still result in large collections of patterns that still do contain many variations of the same theme. A recent major insight in pattern mining is that we were asking the wrong question. Instead of asking for all patterns that satisfy some constraints, we should be asking for a small non-redundant group of high-quality patterns. With this insight, the attention shifted from attempting to summarize the full result \(\mathcal{F}\), to provide a good summarization of the data. In terms of subspace clustering, this means that we would select a certain group of subspace clusters such that we can approximate (explain, describe, etc.) the data optimally. This comes close to the general idea of seeing data mining in terms of compression of data (Faloutsos and Megalooikonomou 2007). Here we discuss a few examples of such pattern set mining techniques that we think are applicable to subspace clustering in varying degrees.