Abstract

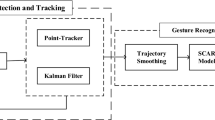

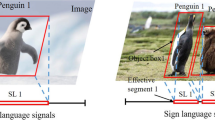

This paper presents an isolated sign language recognition system that comprises two main phases: hand tracking and hand representation. In the hand tracking phase, an annotated hand dataset is used to extract hand patches for pre-training Convolutional Neural Network (CNN) hand models. Hand tracking is performed by a particle filter that combines hand motion and the pre-trained CNN hand models into a joint likelihood observation model. The predicted hand position is the location of the particle with the highest joint likelihood. A square hand region centered on the predicted position is then segmented and serves as the input to the hand representation phase. In the hand representation phase, a compact hand representation is computed by averaging the segmented hand regions. The resulting representation is referred to as the “Hand Energy Image (HEI)”. Quantitative and qualitative analyses show that the proposed hand tracking method predicts hand positions that are closer to the ground truth than those of the compared methods. Similarly, the proposed HEI hand representation outperforms other methods in isolated sign language recognition.
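The two phases summarized above can be illustrated with a minimal NumPy sketch. It is not the authors' implementation. The function names, the grayscale 2-D frames, the zero-padding at image borders, and in particular the use of a simple product to combine the motion and CNN likelihoods are all assumptions made for illustration; the abstract states only that the two cues are combined into a joint likelihood and that the segmented hand regions are averaged.

```python
import numpy as np


def best_particle(positions, motion_lik, cnn_lik):
    """Return the (row, col) of the particle with the highest joint likelihood.

    Assumption: the joint likelihood is the product of the motion
    likelihood and the CNN likelihood of each particle.
    """
    joint = np.asarray(motion_lik, dtype=float) * np.asarray(cnn_lik, dtype=float)
    best = int(np.argmax(joint))
    return tuple(int(v) for v in np.asarray(positions)[best])


def crop_square(frame, center, size):
    """Crop a size x size patch centered on (row, col) from a 2-D frame.

    Assumption: regions that extend past the frame border are zero-padded.
    """
    half = size // 2
    padded = np.pad(frame, half, mode="constant")
    row, col = center
    # After padding by `half`, padded index p maps to original index p - half,
    # so the patch starting at original row - half starts at padded row.
    return padded[row:row + size, col:col + size]


def hand_energy_image(frames, centers, size=5):
    """Average the square hand regions segmented from each frame."""
    patches = [
        crop_square(frame, center, size).astype(float)
        for frame, center in zip(frames, centers)
    ]
    return np.mean(patches, axis=0)


# Toy example: a single bright pixel stands in for the hand in each frame.
frame_a = np.zeros((8, 8))
frame_a[3, 3] = 1.0
frame_b = np.zeros((8, 8))
frame_b[4, 4] = 1.0

predicted = best_particle(
    positions=[(1, 1), (3, 3), (5, 5)],
    motion_lik=[0.2, 0.5, 0.9],
    cnn_lik=[0.9, 0.8, 0.1],
)
hei = hand_energy_image([frame_a, frame_b], [predicted, (4, 4)], size=3)
```

Because each patch is re-centered on the tracked position before averaging, the resulting image reflects how the hand's appearance varies over the sign rather than where the hand moves in the frame.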

Acknowledgements

This research is supported by Multimedia University Mini Fund, Grant No. MMUI/180182. We gratefully acknowledge the support of NVIDIA Corporation with the donation of the Quadro P6000 GPU used for this research.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Cite this article

Lim, K.M., Tan, A.W.C., Lee, C.P. et al. Isolated sign language recognition using Convolutional Neural Network hand modelling and Hand Energy Image. Multimed Tools Appl 78, 19917–19944 (2019). https://doi.org/10.1007/s11042-019-7263-7

DOI: https://doi.org/10.1007/s11042-019-7263-7