Abstract

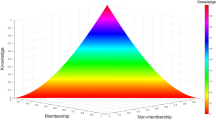

We examine the respective roles of substantive understanding (i.e., understanding of factual knowledge, concepts, laws and theories) and procedural understanding (an understanding of ideas about evidence: concepts such as reliability and validity, measurement and calibration, data collection, measurement error, the ability to interpret evidence and the like) required to carry out an open-ended science investigation. Our chosen method of analysis is Charles Ragin’s Fuzzy Set Qualitative Comparative Analysis, which we introduce in the paper. Comparing the performance of undergraduate students on two investigation tasks which differ with regard to the amount of substantive content, we demonstrate that both substantive understanding and an understanding of ideas about evidence are jointly involved in carrying out such tasks competently. It might be expected that substantive knowledge is less important when carrying out an investigation with little substantive demand. However, we find that the contribution of substantive understanding and an understanding of ideas about evidence is remarkably similar for both tasks. We discuss possible reasons for our findings.


Notes

For more details see Gott and Roberts (2008).

We also have a Type 3 task, which has high substantive and procedural demands, but it is not used in this research.

Together with others, he has also developed the software fs/QCA (for “fuzzy set/Qualitative Comparative Analysis”) (Ragin et al. 2006), which performs the required analyses. This is the software we use.

We deliberately avoid the use of the terms “cause” or “causal condition” as the relationships described here are patterns of association. Causal statements can only be made based on theoretical considerations.

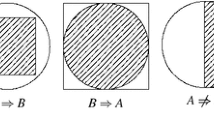

Note that, in conducting research, temporal order and substantive knowledge need to be used in determining the causal order, i.e. the difference between Figs. 1 and 2 lies in what is considered cause and what is considered effect. It is conceivable that this may vary or be unclear in a research situation. For our purposes, however, we have decided that A is the cause and O the outcome. The determination of sufficiency and necessity is based on this decision.

In this simple case, another way of thinking about consistency/sufficiency and coverage/necessity is in terms of outflow and inflow. In a crosstabulation such as Table 4, the proportion of cases with condition A that also display O, which we called consistency, can be called outflow because it refers to the percentage of people with A who subsequently obtain O. The proportion of cases with O that also display condition A, as described in Table 6 (called coverage), can be called inflow because it refers to the percentage of people with O who got there after having also experienced A.
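The outflow and inflow reading can be illustrated with a small numerical sketch. The counts below are invented for illustration and are not taken from Table 4 or Table 6; the function and argument names are hypothetical.

```python
def consistency_and_coverage(a_and_o, a_not_o, not_a_and_o):
    """Return (consistency, coverage) for a crisp 2x2 crosstabulation.

    a_and_o:     cases with condition A and outcome O
    a_not_o:     cases with condition A but without O
    not_a_and_o: cases without A but with O
    """
    # Outflow: share of the cases with A that go on to obtain O.
    consistency = a_and_o / (a_and_o + a_not_o)
    # Inflow: share of the cases with O that also experienced A.
    coverage = a_and_o / (a_and_o + not_a_and_o)
    return consistency, coverage


# Hypothetical counts, for illustration only.
consistency, coverage = consistency_and_coverage(a_and_o=40, a_not_o=10, not_a_and_o=20)
print(f"consistency (outflow): {consistency:.2f}")  # 40 of the 50 cases with A
print(f"coverage (inflow): {coverage:.2f}")         # 40 of the 60 cases with O
```

Both figures share the same numerator (cases with A and O); they differ only in whether the denominator is all cases with A or all cases with O.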

Note that the number of cases with the outcome cannot be read directly from a truth table such as Table 8. It can be calculated from the number of cases in a given row together with the consistency figure, which is in effect the proportion of cases in that row with the outcome.
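As a rough sketch of this calculation, assuming crisp set membership so that consistency is a simple proportion, the count can be recovered as follows. The row values are invented and the function name is hypothetical.

```python
def cases_with_outcome(n_cases, consistency):
    """Approximate number of cases in a truth table row that show the outcome.

    Assumes consistency is the plain proportion of the row's cases with the
    outcome; rounding absorbs the precision lost when consistency is reported
    to a few decimal places.
    """
    return round(n_cases * consistency)


# Hypothetical row: 25 cases with a reported consistency of 0.80.
row_count = cases_with_outcome(25, 0.80)
print(row_count)
```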

This would be a rather generous threshold, however, and was chosen only in order to demonstrate a solution with several pathways. It is more common to choose a threshold of at least 0.70.

Another instance of arbitrariness is the choice of threshold for conventional significance testing in inferential statistics.

In most circumstances (and this is true given our calibrations), each case will have just one such membership > 0.5. This no longer holds where the dataset includes cases with memberships of exactly 0.5 (for details see Ragin 2005).
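A minimal sketch of why this holds, assuming the usual fuzzy-set conventions that membership in a combination is the minimum of its component memberships and that negation is 1 minus membership. The condition names and membership values are illustrative, not taken from our data.

```python
from itertools import product


def corner_memberships(memberships):
    """Map each combination of conditions to a case's fuzzy membership in it.

    memberships: dict of condition name -> fuzzy membership in [0, 1].
    Each combination is a tuple of booleans (True = present, False = negated),
    in the order of the dict's keys.
    """
    names = list(memberships)
    result = {}
    for corner in product([True, False], repeat=len(names)):
        values = [
            memberships[name] if present else 1 - memberships[name]
            for name, present in zip(names, corner)
        ]
        result[corner] = min(values)  # fuzzy AND
    return result


# Hypothetical case with no membership of exactly 0.5.
case = {"substantive": 0.8, "procedural": 0.3}
above = [c for c, m in corner_memberships(case).items() if m > 0.5]
print(above)  # exactly one combination: substantive present, procedural absent

# Hypothetical case with a membership of exactly 0.5.
ambiguous_case = {"substantive": 0.5, "procedural": 0.3}
ambiguous = [c for c, m in corner_memberships(ambiguous_case).items() if m > 0.5]
print(ambiguous)  # no combination exceeds 0.5
```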

Of course, the fact that some rows are low in n is informative in itself. It indicates so-called limited diversity, i.e. the finding that, empirically, some combinations of conditions are rare or non-existent.

There are several measures of fuzzy consistency implemented in the software fs/QCA. The one we are using here, the “truth table algorithm”, which is implemented in the current version of the fs/QCA software (Ragin et al. 2006), takes into account not only whether cases conform to the “less than or equal to” rule but also near misses. See Ragin (2005).
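One measure with this property is the one set out in Ragin (2006b): the sum over cases of min(x, y) divided by the sum of x, where x is membership in the condition and y is membership in the outcome. The sketch below contrasts it with a simple share of conforming cases. The data are invented, the function names are hypothetical, and it is an assumption that the software's calculation matches this sketch in every detail.

```python
def fuzzy_consistency(x, y):
    """Sum of min(x_i, y_i) divided by sum of x_i.

    A case with x_i <= y_i contributes its full x_i to the numerator; a case
    that violates the rule contributes y_i, so a near miss costs little.
    """
    return sum(min(xi, yi) for xi, yi in zip(x, y)) / sum(x)


def share_conforming(x, y):
    """Proportion of cases with x_i <= y_i, ignoring the size of any miss."""
    return sum(xi <= yi for xi, yi in zip(x, y)) / len(x)


# Two hypothetical datasets; in each, the first case violates x <= y.
x = [0.9, 0.7]
y_near_miss = [0.85, 0.8]  # first case misses by 0.05
y_far_miss = [0.1, 0.8]    # first case misses by 0.8

for label, y in [("near miss", y_near_miss), ("far miss", y_far_miss)]:
    print(label, share_conforming(x, y), round(fuzzy_consistency(x, y), 3))
```

The share of conforming cases is the same in both datasets, whereas the fuzzy consistency score is much higher when the violation is only a near miss.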

References

Abbott, A. (1988). Transcending general linear reality. Sociological Theory, 6, 169–186. doi:10.2307/202114.

Abd-El-Khalick, F., Boujaoude, S. R., Lederman, N. G., Mamlok-Naaman, R., Hofstein, A., Niaz, M., Treagust, D., & Tuan, H.-L. (2004). Inquiry in science education: international perspectives. Science Education, 88(3), 397–419. doi:10.1002/sce.10118.

Adey, P. (1992). The CASE results: implications for science teaching. International Journal of Science Education, 14(2), 137–146. doi:10.1080/0950069920140202.

American Association for the Advancement of Science. (1967). Science—a process approach. Washington DC: Ginn & Co.

Baxter, G. P., Shavelson, R. J., Goldman, S. R., & Pine, J. (1992). Evaluation of procedure-based scoring for hands-on assessment. Journal of Educational Measurement, 29(1), 1–17. doi:10.1111/j.1745-3984.1992.tb00364.x.

Bryce, T. G. K., McCall, J., Macgregor, J., Robertson, I. J., & Weston, R. A. J. (1983). Techniques for the assessment of practical skills in foundation science. London: Heinemann.

Buffler, A., Allie, S., & Lubben, F. (2001). The development of first year physics students’ ideas about measurement in terms of point and set paradigms. International Journal of Science Education, 23(11), 1137–1156. doi:10.1080/09500690110039567.

Cheli, B., & Lemmi, A. (1995). A ‘totally fuzzy and relative’ approach to the measurement of poverty. Economic Notes, 94(1), 115–134.

Chen, Z., & Klahr, D. (1999). All other things being equal: Acquisition and transfer of the control of variables strategy. Child Development, 70(5), 1098–1120. doi:10.1111/1467-8624.00081.

Chinn, C. A., & Malhotra, B. A. (2002). Children’s responses to anomalous scientific data: How is conceptual change impeded? Journal of Educational Psychology, 94, 327–343. doi:10.1037/0022-0663.94.2.327.

Cooper, B. (2005). Applying Ragin’s Crisp and Fuzzy Set QCA to large datasets: social class and educational achievement in the National Child Development Study. Sociological Research Online, 10(2). URL: http://www.socresonline.org.uk/10/12/cooper.html.

Cooper, B. (2006). Using Ragin’s Qualitative Comparative Analysis with longitudinal datasets to explore the degree of meritocracy characterising educational achievement in Britain. Paper presented at the Annual Meeting of the American Educational Research Association, San Francisco, 07–11/04/2006.

Cooper, B., & Glaesser, J. (2007). Exploring social class compositional effects on educational achievement with fuzzy set methods: a British study. Paper presented at the Annual Meeting of the American Educational Research Association, Chicago, 09–13/04/2007.

Curriculum Council, Western Australia (1998). Science learning area statement. Website: http://www.curriculum.wa.edu.au/files/pdf/science.pdf.

Davis, B. C. (1989). GASP: Graded assessment in science project. London: Hutchinson.

Duschl, R. A., Schweingruber, H. A., & Shouse, A. W. (Eds.). (2006). Taking science to school: learning and teaching science in grades K-8. Committee on Science Learning, Kindergarten Through Eighth Grade. Board on Science Education, Center for Education, Division of Behavioral and Social Sciences and Education. Washington, DC: The National Academies.

Erickson, G., Bartley, R. W., Blake, L., Carlisle, R., Meyer, K., & Stavey, R. (1992). British Columbia assessment of Science 1991 technical report II: Student performance component. Victoria, B.C.: Ministry of Education.

Germann, P. J., & Aram, R. (1996). Student performances on the science processes of recording data, analyzing data, drawing conclusions, and providing evidence. Journal of Research in Science Teaching, 33(7), 773–798. doi:10.1002/(SICI)1098-2736(199609)33:7<773::AID-TEA5>3.0.CO;2-K.

Germann, P. J., Aram, R., & Burke, G. (1996). Identifying patterns and relationships among the responses of seventh-grade students to the science process skill of designing experiments. Journal of Research in Science Teaching, 33(1), 79–99. doi:10.1002/(SICI)1098-2736(199601)33:1<79::AID-TEA5>3.0.CO;2-M.

Glaesser, J., Gott, R., Roberts, R., & Cooper, B. (forthcoming). Underlying success in open-ended investigations in science: using qualitative comparative analysis to identify necessary and sufficient conditions. Research in Science & Technological Education.

Gott, R., & Duggan, S. (2007). A framework for practical work in science and scientific literacy through argumentation. Research in Science & Technological Education, 25(3), 271–291. doi:10.1080/02635140701535000.

Gott, R., & Murphy, P. (1987). Assessing investigations at ages 13 and 15. Science report for teachers: 9. London: DES.

Gott, R., & Roberts, R. (2004). A written test for procedural understanding: a way forward for assessment in the UK science curriculum? Research in Science & Technological Education, 22(1), 5–21. doi:10.1080/0263514042000187511.

Gott, R., & Roberts, R. (2008). Concepts of evidence and their role in open-ended practical investigations and scientific literacy; background to published papers. The School of Education, Durham University, UK. URL: http://www.dur.ac.uk/education/research/current_research/maths/msm/understanding_scientific_evidence.

Gotwals, A., & Songer, N. (2006). Measuring students’ scientific content and inquiry reasoning. In S. A. Barab, K. E. Hay, N. B. Songer, & D. T. Hickey (Eds.), Making a difference: The proceedings of the Seventh International Conference of the Learning Sciences (ICLS). International Society of the Learning Sciences.

Haigh, M. (1998). Investigative practical work in year 12 biology programmes. Unpublished Doctor of Philosophy Thesis. University of Waikato, Hamilton, NZ.

Hart, C., Mulhall, P., Berry, A., Loughran, J., & Gunstone, R. (2000). What is the purpose of this experiment? Or can students learn something from doing experiments? Journal of Research in Science Teaching, 37(7), 655–675. doi:10.1002/1098-2736(200009)37:7<655::AID-TEA3>3.0.CO;2-E.

Hodson, D. (1991). Practical work in science: time for a reappraisal. Studies in Science Education, 19, 175–184. doi:10.1080/03057269108559998.

House of Commons, Science and Technology Committee (2002). Science education from 14 to 19 (Third report of session 2001-2 ed.). London: The Stationery Office.

Inhelder, B., & Piaget, J. (1958). The growth of logical thinking. London: Routledge and Kegan Paul.

Jenkins, E. W. (1979). From Armstrong to Nuffield: studies in twentieth century science education in England and Wales. London: John Murray.

Jones, M., & Gott, R. (1998). Cognitive acceleration through science education: alternative perspectives. International Journal of Science Education, 20(7), 755–768. doi:10.1080/0950069980200701.

Klahr, D., & Nigam, M. (2004). The equivalence of learning paths in early science instruction: effects of direct instruction and discovery learning. Psychological Science, 15(10), 661–667. doi:10.1111/j.0956-7976.2004.00737.x.

Kosko, B. (1994). Fuzzy thinking: the new science of fuzzy logic. London: HarperCollins.

Kuhn, D., & Dean, D. (2005). Is developing scientific thinking all about learning to control variables? Psychological Science, 16(11), 866–870.

Kuhn, D., Amsel, E., & O’Loughlin, M. (1988). The development of scientific thinking skills. San Diego: Academic Press.

Kunda, Z. (1990). The case for motivated reasoning. Psychological Bulletin, 108, 480–498. doi:10.1037/0033-2909.108.3.480.

Lieberson, S. (1985). Making it count. The improvement of social research and theory. Berkeley, Los Angeles, London: University of California Press.

Lubben, F., & Millar, R. (1996). Children’s ideas about the reliability of experimental data. International Journal of Science Education, 18(8), 955–968. doi:10.1080/0950069960180807.

Martin, J. R. (1993). Literacy in science: learning to handle text as technology. In M. A. K. Halliday, & J. R. Martin (Eds.), Writing science: literacy and discursive power (pp. 166–220). Pittsburgh, PA: University of Pittsburgh Press.

Millar, R., Lubben, F., Gott, R., & Duggan, S. (1994). Investigating in the school science laboratory: conceptual and procedural knowledge and their influence on performance. Research Papers in Education, 9(1), 207–248. doi:10.1080/0267152940090205.

Osborne, J. F., Collins, S., Ratcliffe, M., Millar, R., & Duschl, R. (2003). What “Ideas about science” should be taught in school science? A Delphi study of the expert community. Journal of Research in Science Teaching, 40(7), 692–720. doi:10.1002/tea.10105.

Polanyi, M. (1966). The tacit dimension. Gloucester, MA: Peter Smith.

Qualifications and Curriculum Authority. (2004). Reforming science education for the 21st century; a commentary on the new GCSE criteria for awarding bodies. London: QCA.

Qualifications and Curriculum Authority (QCA) (undated). GCSE Criteria for Science. Website: http://www.qca.org.uk/libraryAssets/media/5685_gcse_sc_criteria.pdf.

Ragin, C. C. (1987). The comparative method. Moving beyond qualitative and quantitative strategies. Berkeley, Los Angeles, London: University of California Press.

Ragin, C. C. (2000). Fuzzy-set social science. Chicago and London: University of Chicago Press.

Ragin, C. C. (2003). Recent advances in fuzzy-set methods and their application to policy questions. URL: http://www.compasss.org/Ragin2003.pdf.

Ragin, C. C. (2005). From fuzzy sets to crisp truth tables. URL: http://www.compasss.org/Raginfztt_April05.pdf.

Ragin, C. C. (2006a). How to lure analytic social science out of the doldrums. Some lessons from comparative research. International Sociology, 21(5), 633–646. doi:10.1177/0268580906067834.

Ragin, C. C. (2006b). Set Relations in social research: evaluating their consistency and coverage. Political Analysis, 14, 291–310. doi:10.1093/pan/mpj019.

Ragin, C. C. (forthcoming). Fuzzy sets: calibration versus measurement. In J. Box-Steffensmeier, H. Brady & D. Collier (Eds.), The Oxford handbook of political methodology. Oxford: Oxford University Press.

Ragin, C. C., Drass, K. A., & Davey, S. (2006). Fuzzy-set/qualitative comparative analysis 2.0. Tucson, Arizona: Department of Sociology, University of Arizona Press. Website: http://www.u.arizona.edu/%7Ecragin/fsQCA/software.shtml.

Roberts, R., & Gott, R. (2000). Procedural understanding in biology: how is it characterised in texts? The School Science Review, 82, 83–91.

Roberts, R., & Gott, R. (2003). Assessment of biology investigations. Journal of Biological Education, 37(3), 114–121.

Roberts, R., & Gott, R. (2004). Assessment of Sci1: alternatives to coursework? The School Science Review, 85(131), 103–108.

Roberts, R., & Gott, R. (2006). Assessment of performance in practical science and pupil attributes. Assessment in Education, 13(1), 45–67. doi:10.1080/09695940600563652.

Ryder, J., & Leach, J. (1999). University science students’ experiences of investigative project work and their images of science. International Journal of Science Education, 21(9), 945–956. doi:10.1080/095006999290246.

Sandoval, W. A. (2005). Understanding students’ practical epistemologies and their influence on learning through inquiry. Science Education, 89, 634–656. doi:10.1002/sce.20065.

Schauble, L. (1996). The development of scientific reasoning in knowledge-rich contexts. Developmental Psychology, 32(1), 102–119. doi:10.1037/0012-1649.32.1.102.

Screen, P. A. (1986). The Warwick Process Science project. The School Science Review, 72(260), 17–24.

Shayer, M., & Adey, P. S. (1992). Accelerating the development of formal thinking in middle and high school students, II: post project effects on science achievement. Journal of Research in Science Teaching, 29, 81–92. doi:10.1002/tea.3660290108.

Smithson, M., & Verkuilen, J. (2006). Fuzzy set theory: applications in the social sciences. Thousand Oaks: Sage.

Solano-Flores, G., & Shavelson, R. J. (1997). Development of performance assessments in science: conceptual, practical and logistical issues. Educational Measurement: Issues and Practice, 16(3), 16–25. doi:10.1111/j.1745-3992.1997.tb00596.x.

Solano-Flores, G., Jovanovic, J., Shavelson, R. J., & Bachman, M. (1999). On the development and evaluation of a shell for generating science performance assessments. International Journal of Science Education, 21(3), 293–315. doi:10.1080/095006999290714.

Star, J. R. (2000). On the relationship between knowing and doing in procedural learning. In B. Fishman, & S. O’Connor-Divelbiss (Eds.), Fourth international conference of the learning sciences (pp. 80–86). Mahwah, NJ: Erlbaum.

Toh, K. A., & Woolnough, B. E. (1994). Science process skills: are they generalisable? Research in Science & Technological Education, 12(1), 31–42. doi:10.1080/0263514940120105.

Toth, E. E., Klahr, D., & Chen, Z. (2000). Bridging research and practice: a cognitively based classroom intervention for teaching experimentation skills to elementary school children. Cognition and Instruction, 18(4), 423–459. doi:10.1207/S1532690XCI1804_1.

Tytler, R., Duggan, S., & Gott, R. (2001a). Dimensions of evidence, the public understanding of science and science education. International Journal of Science Education, 23(8), 815–832. doi:10.1080/09500690010016058.

Tytler, R., Duggan, S., & Gott, R. (2001b). Public participation in an environmental dispute: implications for science education. Public Understanding of Science, 10(4), 343–364. doi:10.1088/0963-6625/10/4/301.

Verkuilen, J. (2005). Assigning membership in a fuzzy set analysis. Sociological Methods & Research, 33(3), 462–496. doi:10.1177/0049124105274498.

Welford, G., Harlen, W., & Schofield, B. (1985). Practical Testing at Ages 11, 13 and 15. London: DES.


Glaesser, J., Gott, R., Roberts, R. et al. The Roles of Substantive and Procedural Understanding in Open-Ended Science Investigations: Using Fuzzy Set Qualitative Comparative Analysis to Compare Two Different Tasks. Res Sci Educ 39, 595–624 (2009). https://doi.org/10.1007/s11165-008-9108-7
