Abstract

This paper discusses a study of students learning core conceptual perspectives from recent scientific research on complexity using a hypermedia learning environment in which different types of scaffolding were provided. Three comparison groups used a hypermedia system with agent-based models and scaffolds for problem-based learning activities; the groups differed in the types of text-based scaffolds provided for a set of complex systems concepts. Although significant declarative knowledge gains were found for the main experimental treatment, in which the students received the most scaffolding, there were no significant differences among the three groups on the more cognitively demanding problem solving tasks. However, across all groups, the students who enriched their ontologies about how complex systems function performed at a significantly higher level on transfer problem solving tasks in the posttest. It is proposed that the combination of interactive representational scaffolds associated with NetLogo agent-based models in complex systems cases and problem solving scaffolding allowed participants to abstract ontological dimensions about how systems of this type function, which, in turn, was associated with higher performance on the problem solving transfer tasks. Theoretical and design implications for learning about complex systems are discussed.

Notes

Agent-based models computationally represent phenomena as a number of agents or elements, each of which follows particular rules; the apparent complexity of the system being modeled emerges from the interactions of the agents in the system. This approach to computer modeling contrasts with equation-based modeling (EBM), in which executing the model consists of evaluating a set of equations. There are, of course, advantages and disadvantages to all computational modeling techniques. For a discussion, see Parunak et al. (1998).
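
As an illustration of the contrast drawn in this note, the following minimal Python sketch models the same simple population in both styles. It is not taken from the study or from the NetLogo models it used; the function names, the birth and death parameters, and the single-draw agent rule are all assumptions chosen for brevity. The equation-based version evaluates one difference equation per step, while the agent-based version lets each agent apply its own probabilistic rule so that the population curve emerges from the individual outcomes.

```python
import random


def equation_based(n0, birth, death, steps):
    """EBM sketch: evaluate N[t+1] = N[t] * (1 + birth - death) at each step."""
    n = float(n0)
    history = [n]
    for _step in range(steps):
        n = n * (1.0 + birth - death)
        history.append(n)
    return history


def agent_based(n0, birth, death, steps, seed=0):
    """ABM sketch: each agent follows its own rule; the total emerges.

    Hypothetical rule (an assumption, not from the paper): every step each
    agent draws one random number u. If u < death the agent dies; if
    death <= u < death + birth it survives and adds one newborn; otherwise
    it simply survives. The expected change per agent therefore matches the
    equation-based model above.
    """
    rng = random.Random(seed)  # seeded so that runs are repeatable
    agents = n0
    history = [agents]
    for _step in range(steps):
        next_count = 0
        for _agent in range(agents):
            u = rng.random()
            if u < death:
                continue  # this agent dies
            next_count += 1  # this agent survives
            if u < death + birth:
                next_count += 1  # and reproduces
        agents = next_count
        history.append(agents)
    return history


if __name__ == "__main__":
    ebm = equation_based(100, birth=0.10, death=0.05, steps=20)
    abm = agent_based(100, birth=0.10, death=0.05, steps=20, seed=1)
    print("EBM final population:", round(ebm[-1], 1))
    print("ABM final population:", abm[-1])
```

Running the agent-based function with different seeds gives different trajectories that scatter around the smooth equation-based curve, which is one way to see how aggregate behavior can arise from individual, partly random agent actions.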

We will use the terms “ontological categories” and “ontologies” as synonyms in this paper. Chi (1992, 2005) has employed the construct of ontological categories in her theoretical work on conceptual change, building on earlier work by researchers such as Lakoff (1987), who argue that humans have a fundamental capacity to assign phenomena to categories.

The Complex Systems Ontology Framework (CSOF) is based on the earlier Complex Systems Mental Model Framework (Jacobson 2001), which had included both ontological and epistemic beliefs. The new CSOF focuses on the ontologies associated with complex systems; however, one of the epistemic dimensions (Understanding: Reductive versus Non-reductive) is included in this study as it correlates highly with the ontologies of the framework.

The main purpose of the collaborative activity was to provide some variety to the three sessions of 2 hours each, rather than having the participants individually read the cases and run the NetLogo models for such extended periods of time. We controlled for the effect of the collaboration by spreading this activity across all three treatment groups.

Stephen Hawking, quoted in the San José Mercury News, January 23, 2000.

References

Azevedo, R., & Hadwin, A. F. (2005). Scaffolding self-regulated learning and metacognition: Implications for the design of computer-based scaffolds. Instructional Science, 33, 367–379.

Bar-Yam, Y. (1997). Dynamics of complex systems. Reading, MA: Addison-Wesley.

Bransford, J. D., Brown, A. L., Cocking, R. R., & Donovan, S. (Eds.). (2000). How people learn: Brain, mind, experience, and school (expanded edition). Washington, DC: National Academy Press.

Casti, J. L. (1994). Complexification: Explaining a paradoxical world through the science of surprise. New York: HarperCollins.

Charles, E. S. (2003). An ontological approach to conceptual change: The role that complex systems thinking may play in providing the explanatory framework needed for studying contemporary sciences. Unpublished doctoral dissertation, Concordia University, Montreal, Canada.

Charles, E. S., & d’Apollonia, S. (2004). Developing a conceptual framework to explain emergent causality: Overcoming ontological beliefs to achieve conceptual change. In K. Forbus, D. Gentner, & T. Regier (Eds.), Proceedings of the 26th annual cognitive science society. Mahwah, NJ: Lawrence Erlbaum Associates.

Chi, M. T. H. (1992). Conceptual change within and across ontological categories: Implications for learning and discovery in science. In R. Giere (Ed.), Minnesota studies in the philosophy of science: Cognitive models of science (Vol. XV, pp. 129–186). Minneapolis: University of Minnesota Press.

Chi, M. T. H. (2005). Commonsense conceptions of emergent processes: Why some misconceptions are robust. The Journal of the Learning Sciences, 14(2), 161–199.

Chi, M. T. H., Slotta, J. D., & de Leeuw, N. (1994). From things to processes: A theory of conceptual change for learning science concepts. Learning and Instruction, 4, 27–43.

Collins, A., Brown, J., & Newman, S. (1989). Cognitive apprenticeship: Teaching the crafts of reading, writing, and mathematics. In L. Resnick (Ed.), Knowing, learning, and instruction (pp. 453–494). Hillsdale, NJ: Lawrence Erlbaum Associates.

Davis, E. A., & Miyake, N. (2004). Explorations of scaffolding in complex classroom systems. The Journal of the Learning Sciences, 13(3), 265–272.

diSessa, A. A. (2006). A history of conceptual change research: Threads and fault lines. In R. K. Sawyer (Ed.), The Cambridge handbook of the learning sciences (pp. 265–281). Cambridge, UK: Cambridge University Press.

Duschl, R. A., Schweingruber, H. A., & Shouse, A. W. (Eds.). (2007). Taking science to school: Learning and teaching science in grades K-8. Washington, DC: The National Academies Press.

Gell-Mann, M. (1994). The quark and the jaguar: Adventures in the simple and the complex. New York: Freeman and Company.

Gentner, D., Loewenstein, J., & Thompson, L. (2003). Learning and transfer: A general role for analogical encoding. Journal of Educational Psychology, 95(2), 393–408.

Goldstone, R. L. (2006). The complex systems see-change in education. The Journal of the Learning Sciences, 15(1), 35–43.

Goldstone, R. L., & Wilensky, U. (2008). Promoting transfer through complex systems principles. The Journal of the Learning Sciences, 17(4), 465–516.

Hmelo-Silver, C. E., Marathe, S., & Liu, L. (2007). Fish swim, rocks sit, and lungs breathe: Expert-novice understanding of complex systems. The Journal of the Learning Sciences, 16(3), 307–331.

Holland, J. H. (1995). Hidden order: How adaptation builds complexity. Reading, MA: Addison-Wesley.

Jacobson, M. J. (2001). Problem solving, cognition, and complex systems: Differences between experts and novices. Complexity, 6(3), 41–49.

Jacobson, M. J. (2008). Hypermedia systems for problem-based learning: Theory, research, and learning emerging scientific conceptual perspectives. Educational Technology Research and Development, 56, 5–28.

Jacobson, M. J., & Archodidou, A. (2000). The design of hypermedia tools for learning: Fostering conceptual change and transfer of complex scientific knowledge. The Journal of the Learning Sciences, 9(2), 149–199.

Jacobson, M. J., Maouri, C., Mishra, P., & Kolar, C. (1996). Learning with hypertext learning environments: Theory, design, and research. Journal of Educational Multimedia and Hypermedia, 5(3/4), 239–281.

Jacobson, M. J., & Spiro, R. J. (1993). Hypertext learning environments and cognitive flexibility: Research into the transfer of complex knowledge (Technical No. 573). Champaign, IL: University of Illinois Center for the Study of Reading.

Jacobson, M. J., & Spiro, R. J. (1995). Hypertext learning environments, cognitive flexibility, and the transfer of complex knowledge: An empirical investigation. Journal of Educational Computing Research, 12(5), 301–333.

Jacobson, M. J., & Wilensky, U. (2006). Complex systems in education: Scientific and educational importance and implications for the learning sciences. The Journal of the Learning Sciences, 15(1), 11–34.

Kapur, M. (2008). Productive failure. Cognition and Instruction, 26(3), 379–424.

Kapur, M. (2009). Productive failure in mathematical problem solving. Instructional Science. doi:10.1007/s11251-009-9093-x.

Kapur, M. (2010). A further study of productive failure in mathematical problem solving: Unpacking the design components. Instructional Science. doi:10.1007/s11251-010-9144-3.

Kauffman, S. (1995). At home in the universe: The search for laws of self-organization and complexity. New York: Oxford University Press.

Lakoff, G. (1987). Women, fire, and dangerous things: What categories reveal about the mind. Chicago: University of Chicago Press.

Landis, J. R., & Koch, G. G. (1977). The measurement of observer agreement for categorical data. Biometrics, 33(1), 159–174.

Levin, J. A., Stuve, M. J., & Jacobson, M. J. (1999). Teachers’ conceptions of the internet and the world wide web: A representational toolkit as a model of expertise. Journal of Educational Computing Research, 21(1), 1–23.

Parunak, H. V. D., Savit, R., & Riolo, R. L. (1998). Agent-based modeling vs. equation-based modeling: A case study and users’ guide. In Proceedings of multi-agent systems and agent-based simulation (MABS’98) (pp. 10–25). Heidelberg: Springer-Verlag.

Resnick, M. (1994). Turtles, termites, and traffic jams: Explorations in massively parallel microworlds. Cambridge, MA: MIT Press.

Resnick, M., & Wilensky, U. (1993). Beyond the deterministic, centralized mindsets: A new thinking for new science. Paper presented at the annual meeting of the American Educational Research Association, Atlanta, GA.

Resnick, M., & Wilensky, U. (1998). Diving into complexity: Developing probabilistic decentralized thinking through role-playing activities. The Journal of the Learning Sciences, 7(2), 153–172.

Sabelli, N. (2006). Understanding complex systems strand: Complexity, technology, science, and education. The Journal of the Learning Sciences, 15(1), 5–9.

Tergan, S. O. (1997). Conceptual and methodological shortcomings in hypertext/hypermedia design and research. Journal of Educational Computing Research, 16(3), 209–235.

Thompson, K. (2008). The value of multiple representations for learning about complex systems. Paper presented at the International Conference for the Learning Sciences, Utrecht, The Netherlands.

Vosniadou, S., & Brewer, W. F. (1994). Mental models of the day/night cycle. Cognitive Science, 18(1), 123–183.

Wilensky, U. (1998). NetLogo wolf sheep predation model. Evanston, IL: Center for Connected Learning and Computer-Based Modeling, Northwestern University. (http://ccl.northwestern.edu/netlogo/models/WolfSheepPredation).

Wilensky, U. (1999). NetLogo. Evanston, IL: Center for Connected Learning and Computer-Based Modeling, Northwestern University. (http://ccl.northwestern.edu/netlogo).

Wilensky, U., & Reisman, K. (1998). Learning biology through constructing and testing computational theories: An embodied modeling approach. Paper presented at the Second International Conference on Complex Systems, Nashua, NH.

Wilensky, U., & Reisman, K. (2006). Thinking like a wolf, a sheep or a firefly: Learning biology through constructing and testing computational theories—an embodied modeling approach. Cognition & Instruction, 24(2), 171–209.

Wilensky, U., & Resnick, M. (1995). New thinking for new sciences: Constructionist approaches for exploring complexity. Paper presented at the annual meeting of the American Educational Research Association, San Francisco, CA.

Wilensky, U., & Resnick, M. (1999). Thinking in levels: A dynamic systems perspective to making sense of the world. Journal of Science Education and Technology, 8(1), 3–19.

Wood, D., Bruner, J. S., & Ross, G. (1976). The role of tutoring in problem solving. Journal of Child Psychology and Psychiatry, 17, 89–100.

Acknowledgments

The research discussed in this paper has been funded in part by support to the first author from the University of Sydney Faculty of Education and Social Work, the Singapore Ministry of Education to the Learning Sciences Laboratory at the National Institute of Education (NIE), Nanyang Technological University (NTU), and from the Korean IT Industry Promotion Agency. Phoebe Chen Jacobson designed the Complex Systems Knowledge Mediator hypermedia learning environment used in this study. The contributions of the research assistants to this project are also gratefully acknowledged: Seo-Hong Lim, Lynn Low, and HyungShin Kim.

Appendix: selected texts of mini-lessons for complex systems ideas and concepts

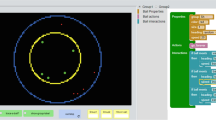

This Appendix provides texts from the Complex Systems Knowledge Mediator hypermedia program for the complex systems concepts (referred to as Formal Ontology Scaffolds) of Self Organization and Emergent Properties and Levels and the complex systems ideas (referred to as Implicit Ontology Scaffolds) of System Control and Agent Actions. The learner would access these by clicking on the Mini-Lessons link on the left side of the CSKM screen (see Fig. 1).

Complex systems concepts

Self-organization

The concept of self-organization is really about hidden order that is all around us. It is a fundamental construct in the sciences of complexity, but it is often misunderstood. This is because many people assume that a system behaves in an orderly or organized manner because “something” or “someone” causes it to be orderly. However, most phenomena in life, such as atoms and molecules, cells in the body, or companies in an economy, are actually complex systems that are made up of many, many independent but interacting parts or agents. These parts interact with each other and with the environment in various ways—including random or chance interactions. Over time, the interactions of the parts may be looked at as patterns or order in the overall system. Take, for example, an ordinary day in New York, or any city in the world. People buy breakfast, drink coffee, put gas in their cars, and generally go about their business. How did all of the different kinds of food “show up?” Who knew how much coffee or gasoline to buy for everyone in the city? And so on… Is there a central planning group that figured all of this out? Obviously not. Magic? Unlikely. A city is in fact a complex system and the various kinds of decentralized interactions (e.g., feedback loops) of the parts (i.e., people, things) lead to the emergence of patterns and order without any centrally controlling mechanism. The dynamics of self-organization are effective at allowing people to get a wide range of food and services. Also, self-organizing processes are quite powerful and often long lasting. Consider, for example, how a city survives even catastrophes such as major fires or earthquakes that cause serious loss of life and major structural damage. Many scientists have been documenting how self-organization is found at all levels of nature, from the quantum and sub-atomic levels through our social and ecological levels to the very large-scale structures of galaxy clusters in the universe. 
Tip: Note that not all systems self-organize. Does your car, which is a mechanical system, “self-organize” or fix itself? Of course not; you need a mechanic to fix a system like a car. However, the flow of cars in the transportation system of a city does self-organize, as there is no master computer that controls the speed and direction of all the cars, trucks, and motor scooters on all of the streets and highways in a city.

Emergent properties and levels

The formation of a flock from the interactions of the individual birds illustrates the concept of emergence or emergent properties. That is, emergence is when a new property is exhibited by a complex system at a higher level of the system that is different from what you might expect from the agents or parts of the system. The concept of emergence is in many ways at the center of the most important research in the study of complex systems. How is it that molecules might interact in ways in which cell processes emerge? How is it that the cells and organs of our body interact in ways from which our human personalities emerge? How is it that the individuals in a company interact in ways in which “organizational personalities” emerge? How is it that companies and people interact such that the characteristics of the global economy emerge? Tip: The new properties that might emerge from the interactions of the agents or parts in a complex system typically are observed “up” a level. For example, birds looking at each other while flying may be regarded as one level of their complex system, while the emergence of the flock formation is one level higher in that system.

Complex systems ideas

Control ideas: centralized vs. decentralized

What controls a clock? How is a classroom of kids controlled? Who controls an army? For each of these questions, most people would probably answer that something or someone is in control. In the case of the clock, it would be the interaction of the gears, while for the classroom, a teacher would be in control, and for the army, a general. We call these examples of central control. Now think about these questions. What controls a rain forest? What controls gas molecules in the air? What controls the flocking formations of birds? (You might want to investigate this model of birds flocking [link]. Is there a “leader” bird that you can identify in each flock? If not, then what “controls” the formation of the flocks?) In these systems, you might notice that there is no readily apparent “controller.” In the rain forest, the animals and vegetation have multiple different ways of interacting with each other, and the overall result of these interactions is the rain forest. Gas molecules have certain properties that determine how they interact with each other in a room. And a careful examination of the flocking behavior of birds would reveal that there is no “leader” bird. These types of systems, which can be very stable and consistent in terms of how they function, are examples of decentralized control. This idea of Decentralized Control is an important concept about complex systems. Note, though, that it is quite different from the more common idea of central control. Tip: When you think about different types of systems, try to decide if the system is controlled in a decentralized manner or a centralized manner. If there is decentralized control in the system, then it is probably an example of a complex system.

Actions: random vs. predictable

Perhaps the most common example of the effect of an action is hitting a pool ball. Since the time of Newton, it has been understood that if the force, direction, and shape of an object such as a pool ball are known, then the actions of the ball, both individually and in interaction with other balls, can be calculated in a predictable manner. But a pool table is not a complex system. However, one of the great lessons of modern physics, specifically from quantum mechanics and the Heisenberg uncertainty principle, is that “the more precisely the position of a subatomic particle is determined, the less precisely the momentum is known in this instant, and vice versa.” This insight shows how nature fundamentally operates within parameters of randomness and chance. We also find chance factors in our day-to-day lives, with the weather, traffic jams, or when we meet someone who becomes a good friend. What is important from a complex systems perspective is that even though there are random factors in phenomena at all levels of nature, we still see emergent patterns and self-organization, which are other concepts we study in these lessons. The study of why these patterns occur is at the heart of cutting-edge research in the cross-disciplinary fields of complexity. Tip: When you think about actions that occur in a particular system, try to decide if the actions are predictable or random. If the actions have random elements, then it is probably an example of a complex system.

Cite this article

Jacobson, M.J., Kapur, M., So, HJ. et al. The ontologies of complexity and learning about complex systems. Instr Sci 39, 763–783 (2011). https://doi.org/10.1007/s11251-010-9147-0

DOI: https://doi.org/10.1007/s11251-010-9147-0