Abstract

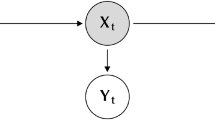

Linear regression for Hidden Markov Model (HMM) parameters is widely used for adaptive training in time series pattern analysis, especially in speech processing. The regression parameters are usually shared among sets of Gaussians in HMMs, where the Gaussian clusters are represented by a tree. This paper realizes a fully Bayesian treatment of linear regression for HMMs that takes this regression tree structure into account by using variational techniques. It analytically derives the variational lower bound of the marginalized log-likelihood of the linear regression. By using the variational lower bound as an objective function, we can algorithmically optimize the tree structure and hyper-parameters of the linear regression rather than heuristically tweaking them as tuning parameters. Experiments on large vocabulary continuous speech recognition confirm the generalizability of the proposed approach, especially when the amount of adaptation data is limited.

Notes

Strictly speaking, since transformation parameters are not observables and are marginalized in this paper, they can be regarded as latent variables in a broad sense, similar to HMM states and mixture components of Gaussian Mixture Models (GMMs). However, they have different properties: transformation parameters can be integrated out in the VB-M step, while HMM states and mixture components are computed in the VB-E step, as discussed in Section 3. Therefore, to distinguish transformation parameters clearly from HMM states and mixture components, this paper treats only HMM states and mixture components as latent variables, which follows the terminology of the variational Bayes framework [22].

k denotes a combination of all possible HMM states and mixture components. In the common HMM representation, k can be represented by these two indexes.
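As a minimal sketch of this indexing, the snippet below maps a (state, mixture component) pair to a single index k and back. The sizes and the function names `to_flat` and `from_flat` are hypothetical, and the sketch assumes every state has the same number of mixture components.

```python
# Hypothetical sizes: 3 HMM states, 4 Gaussian mixture components per state.
NUM_STATES = 3
NUM_MIXTURES = 4


def to_flat(state: int, mixture: int) -> int:
    """Map a (state, mixture) pair to a single Gaussian index k."""
    return state * NUM_MIXTURES + mixture


def from_flat(k: int) -> tuple[int, int]:
    """Recover the (state, mixture) pair from the flat index k."""
    return divmod(k, NUM_MIXTURES)


# Enumerating every pair visits each k exactly once.
all_k = [to_flat(j, m) for j in range(NUM_STATES) for m in range(NUM_MIXTURES)]
```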

We use the following matrix formulas for the derivation: \(\begin {array}{rll} \frac {\partial }{\partial \mathbf {X}} \text {tr} [\mathbf {X}' \mathbf {A}] &=& \mathbf {A}\\ \frac {\partial }{\partial \mathbf {X}} \text {tr} [\mathbf {X}' \mathbf {X} \mathbf {A}] &=& 2 \mathbf {X} \mathbf {A} \quad (\mathbf {A} \text { is a symmetric matrix}) \end {array} \)
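Both derivative formulas can be checked numerically. The sketch below compares them with central finite differences in NumPy; the matrix sizes, the random seed, and the helper `numerical_gradient` are assumptions made for illustration.

```python
import numpy as np


def numerical_gradient(f, X, eps=1e-6):
    """Central finite-difference gradient of scalar f with respect to matrix X."""
    grad = np.zeros_like(X)
    for idx in np.ndindex(*X.shape):
        step = np.zeros_like(X)
        step[idx] = eps
        grad[idx] = (f(X + step) - f(X - step)) / (2 * eps)
    return grad


rng = np.random.default_rng(0)
X = rng.standard_normal((3, 4))

# First formula: d/dX tr[X' A] = A, with A the same shape as X.
A1 = rng.standard_normal((3, 4))
grad1 = numerical_gradient(lambda Z: np.trace(Z.T @ A1), X)

# Second formula: d/dX tr[X' X A] = 2 X A, with A symmetric (4 x 4).
B = rng.standard_normal((4, 4))
A2 = B + B.T
grad2 = numerical_gradient(lambda Z: np.trace(Z.T @ Z @ A2), X)

ok_first = np.allclose(grad1, A1, atol=1e-5)
ok_second = np.allclose(grad2, 2 * X @ A2, atol=1e-5)
```

If A is not symmetric, the second gradient is X(A + A') instead, which is why the symmetry condition is stated.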

Ψ and m can also be marginalized by placing prior distributions on them. This paper instead point-estimates Ψ and m with a MAP approach.

We can also marginalize the HMM parameters Θ. This corresponds to jointly optimizing the HMM and linear regression parameters.

The following sections assume factorized forms of \(q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V})\) to make the solutions mathematically tractable. However, this factorization assumption weakens the relationship between the KL divergence and the variational lower bound. For example, if we assume \(q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V}) = q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}) q(\mathbf {V})\), and focus on the KL divergence between \(q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}})\) and \(p (\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}} | \mathbf {O})\), we obtain the following inequality:

\( \text {KL} [ q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}) || p (\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}} | \mathbf {O}) ] \leq \log p(\mathbf {O}; \boldsymbol {\Theta }, m, \mathbf { \Psi }) - {\mathcal {F}} (m, \mathbf { \Psi }). \)

Compared with Eq. (16), the relationship between the KL divergence and the variational lower bound is less direct because it holds only as an inequality. In general, the factorization assumption moves the optimal variational posteriors away from the true posterior within the VB framework.
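The inequality can be traced to the chain rule of the KL divergence. The following sketch assumes that Eq. (16) takes the usual VB form, in which the gap between the log evidence and the lower bound equals the KL divergence between the joint variational posterior and the true joint posterior; the expectation symbol \(\mathbb {E}\) is notation introduced here.

\( \begin {aligned} \log p(\mathbf {O}; \boldsymbol {\Theta }, m, \mathbf { \Psi }) - {\mathcal {F}} (m, \mathbf { \Psi }) &= \text {KL} [ q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}) q(\mathbf {V}) || p (\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {V} | \mathbf {O}) ] \\ &= \text {KL} [ q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}) || p (\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}} | \mathbf {O}) ] + \mathbb {E}_{q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}})} \left [ \text {KL} [ q(\mathbf {V}) || p (\mathbf {V} | \boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}, \mathbf {O}) ] \right ] \\ &\geq \text {KL} [ q(\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}}) || p (\boldsymbol {\Lambda }_{{\mathcal {I}}_{m}} | \mathbf {O}) ] \end {aligned} \)

The second term on the second line is non-negative, so maximizing the lower bound tightens an upper bound on the KL divergence of the transformation parameters rather than minimizing that divergence directly.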

The matrix variate normal distribution in Eq. 19 can also be represented by the following multivariate normal distribution [36]:

\( \begin {aligned} {} {\mathcal {N}}(\mathbf {W}_{i}|\mathbf {M}_{i}, \mathbf { \Phi }_{i}, \mathbf { \Omega }_{i})\\ {} \propto \exp \left (- \frac {1}{2}\ \text {vec}(\mathbf {W}_{i} - \mathbf {M}_{i})' (\mathbf { \Omega }_{i} \otimes \mathbf { \Phi }_{i})^{-1} \text {vec}(\mathbf {W}_{i} - \mathbf {M}_{i}) \right), \end {aligned} \)

where \(\text {vec}(\mathbf {W}_{i} - \mathbf {M}_{i})\) is a vector formed by concatenating the columns of \((\mathbf {W}_{i} - \mathbf {M}_{i})\), and ⊗ denotes the Kronecker product. Based on this form, the VB solution in this paper could be extended without considering the variance-normalized representation used here, according to [16].
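The equivalence of the two forms can be checked numerically. The sketch below assumes that Eq. 19 uses the standard trace-form exponent tr[Ω⁻¹(W − M)′Φ⁻¹(W − M)] of the matrix variate normal distribution, with Φ as the D × D row covariance and Ω as the (D+1) × (D+1) column covariance; the dimension, the seed, and the helper `random_spd` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
D = 3  # hypothetical feature dimension; W is D x (D + 1)


def random_spd(n):
    """Random symmetric positive-definite matrix of size n x n."""
    B = rng.standard_normal((n, n))
    return B @ B.T + n * np.eye(n)


W = rng.standard_normal((D, D + 1))
M = rng.standard_normal((D, D + 1))
Phi = random_spd(D)        # row covariance, D x D
Omega = random_spd(D + 1)  # column covariance, (D + 1) x (D + 1)

X = W - M
vec_x = X.flatten(order="F")  # stack the columns of X

# Exponent in the vectorized (multivariate normal) form.
quad_vec = vec_x @ np.linalg.solve(np.kron(Omega, Phi), vec_x)

# Exponent in the trace (matrix variate normal) form.
quad_trace = np.trace(np.linalg.solve(Omega, X.T) @ np.linalg.solve(Phi, X))

forms_agree = np.isclose(quad_vec, quad_trace)
```

The column-wise stacking matters: with row-wise stacking the Kronecker factors would have to be swapped.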

Since we approximate the posterior distribution for a non-leaf node by that for a leaf node, the contribution of the variational lower bounds from the non-leaf nodes to the total lower bound can be disregarded, and Eq. 55 is used as a pruning criterion. If we do not use this approximation, we simply compare the values \({\mathcal {L}}_{i}(\rho _{i}, \rho _{l(i)}, \rho _{r(i)})\) of the leaf and non-leaf node cases in Eq. 50.
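The comparison between the leaf and non-leaf cases can be illustrated with a generic bottom-up pruning sketch. This is not Eq. 50 or Eq. 55: the `Node` class, the `leaf_bound` field, and the numeric values are hypothetical stand-ins for per-node lower-bound values, and the sketch assumes the bound of a split node is the sum of the bounds of its children.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    """Regression tree node; leaf_bound is a hypothetical per-node lower bound."""
    name: str
    leaf_bound: float
    left: Optional["Node"] = None
    right: Optional["Node"] = None


def prune(node: Node) -> float:
    """Prune bottom-up and return the best total bound of the subtree."""
    if node.left is None or node.right is None:
        return node.leaf_bound
    split_bound = prune(node.left) + prune(node.right)
    if node.leaf_bound >= split_bound:
        # Treating this node as a leaf scores at least as well: drop the children.
        node.left = node.right = None
        return node.leaf_bound
    return split_bound


def leaves(node: Node) -> list[str]:
    """Names of the leaf nodes, left to right."""
    if node.left is None or node.right is None:
        return [node.name]
    return leaves(node.left) + leaves(node.right)


# Made-up bound values, for illustration only.
tree = Node("root", -120.0,
            Node("a", -50.0, Node("a1", -30.0), Node("a2", -28.0)),
            Node("b", -60.0))
total = prune(tree)
```

In this toy tree, node "a" is collapsed into a leaf because splitting it lowers the bound, while the root stays split.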

References

Leggetter, C.J., & Woodland, P.C. (1995). Maximum likelihood linear regression for speaker adaptation of continuous density hidden Markov models. Computer Speech and Language, 9, 171–185.

Digalakis, V., Ritischev, D., Neumeyer, L. (1995). Speaker adaptation using constrained reestimation of Gaussian mixtures. IEEE Transactions on Speech and Audio Processing, 3, 357–366.

Lee, C.-H., & Huo, Q. (2000). On adaptive decision rules and decision parameter adaptation for automatic speech recognition. In Proceedings of the IEEE (Vol. 88, pp. 1241–1269).

Shinoda, K. (2010). Acoustic model adaptation for speech recognition. IEICE Transactions on Information and Systems, 93(9), 2348–2362.

Sankar, A., & Lee, C.-H. (1996). A maximum-likelihood approach to stochastic matching for robust speech recognition. IEEE Transactions on Speech and Audio Processing, 4(3), 190–202.

Chien, J.-T., Lee, C.-H., Wang, H.-C. (1997). Improved Bayesian learning of hidden Markov models for speaker adaptation. In Proceedings of ICASSP (Vol. 2, pp. 1027–1030). IEEE.

Chen, K.-T., Liau, W.-W., Wang, H.-W., Lee, L.-S. (2000). Fast speaker adaptation using eigenspace-based maximum likelihood linear regression. In Proceedings of ICSLP (Vol. 3, pp. 742–745).

Mak, B., Kwok, J.T., Ho, S. (2005). Kernel eigenvoice speaker adaptation. IEEE Transactions on Speech and Audio Processing, 13(5), 984–992.

Delcroix, M., Nakatani, T., Watanabe, S. (2009). Static and dynamic variance compensation for recognition of reverberant speech with dereverberation preprocessing. IEEE Transactions on Audio, Speech and Language Processing, 17(2), 324–334.

Tamura, M., Masuko, T., Tokuda, K., Kobayashi, T. (2001). Adaptation of pitch and spectrum for HMM-based speech synthesis using MLLR. In Proceedings of ICASSP (Vol. 2, pp. 806–808).

Stolcke, A., Ferrer, L., Kajarekar, S., Shriberg, E., Venkataraman, A. (2005). MLLR transforms as features in speaker recognition. In Proceedings of Interspeech (pp. 2425–2428).

Sanderson, C., Bengio, S., Gao, Y. (2006). On transforming statistical models for non-frontal face verification. Pattern Recognition, 39(2), 288–302.

Maekawa, T., & Watanabe, S. (2011). Unsupervised activity recognition with user’s physical characteristics data. In Proceedings of international symposium on wearable computers (ISWC 2011), (pp. 89–96).

Young, S., Evermann, G., Gales, M., Hain, T., Kershaw, D., Liu, X., Moore, G., Odell, J., Ollason, D., Povey, D. (2006). The HTK book (for HTK version 3.4). Cambridge: Cambridge University Engineering Department.

Chesta, C., Siohan, O., Lee, C.-H. (1999). Maximum a posteriori linear regression for hidden Markov model adaptation. In Proceedings of Eurospeech (Vol. 1, pp. 211–214).

Chien, J.-T. (2002). Quasi-Bayes linear regression for sequential learning of hidden Markov models. IEEE Transactions on Speech and Audio Processing, 10(5), 268–278.

Shinoda, K., & Lee, C.-H. (2001). A structural Bayes approach to speaker adaptation. IEEE Transactions on Speech and Audio Processing, 9, 276–287.

Siohan, O., Myrvoll, T.A., Lee, C.H. (2002). Structural maximum a posteriori linear regression for fast HMM adaptation. Computer Speech & Language, 16(1), 5–24.

MacKay, D.J.C. (1997). Ensemble learning for hidden Markov models. Technical report, Cavendish Laboratory: University of Cambridge.

Neal, R.M., & Hinton, G.E. (1998). A view of the EM algorithm that justifies incremental, sparse, and other variants. Learning in Graphical Models, 355–368.

Jordan, M.I., Ghahramani, Z., Jaakkola, T.S., Saul, L.K. (1999). An introduction to variational methods for graphical models. Machine Learning, 37(2), 183–233.

Attias, H. (1999). Inferring parameters and structure of latent variable models by variational Bayes. In Proceedings of uncertainty in artificial intelligence (UAI) (Vol. 15, pp. 21–30).

Ueda, N., & Ghahramani, Z. (2002). Bayesian model search for mixture models based on optimizing variational bounds. Neural Networks, 15, 1223–1241.

Watanabe, S., Minami, Y., Nakamura, A., Ueda, N. (2002). Application of variational Bayesian approach to speech recognition. NIPS 2002: MIT Press.

Valente, F., & Wellekens, C. (2003). Variational Bayesian GMM for speech recognition. In Proceedings of Eurospeech (pp. 441–444).

Watanabe, S., Minami, Y., Nakamura, A., Ueda, N. (2004). Variational Bayesian estimation and clustering for speech recognition. IEEE Transactions on Speech and Audio Processing, 12, 365–381.

Somervuo, P. (2004). Comparison of ML, MAP, and VB based acoustic models in large vocabulary speech recognition. In Proceedings of ICSLP (Vol. 1, pp. 830–833).

Jitsuhiro, T., & Nakamura, S. (2004). Automatic generation of non-uniform HMM structures based on variational Bayesian approach. In Proceedings of ICASSP (Vol. 1, pp. 805–808).

Hashimoto, K., Zen, H., Nankaku, Y., Lee, A., Tokuda, K. (2008). Bayesian context clustering using cross valid prior distribution for HMM-based speech recognition. In Proceedings of Interspeech.

Ogawa, A., & Takahashi, S. (2008). Weighted distance measures for efficient reduction of Gaussian mixture components in HMM-based acoustic model. In Proceedings of ICASSP (pp. 4173–4176).

Ding, N., & Ou, Z. (2010). Variational nonparametric Bayesian hidden Markov model. In Proceedings of ICASSP (pp. 2098–2101).

Watanabe, S., & Nakamura, A. (2004). Acoustic model adaptation based on coarse/fine training of transfer vectors and its application to a speaker adaptation task. In Proceedings of ICSLP (pp. 2933–2936).

Yu, K., & Gales, M.J.F. (2006). Incremental adaptation using Bayesian inference. In Proceedings of ICASSP (Vol. 1, pp. 217–220).

Winn, J., & Bishop, C.M. (2006). Variational message passing. Journal of Machine Learning Research, 6(1), 661.

Gales, M.J.F., & Woodland, P.C. (1996). Variance compensation within the MLLR framework, Technical Report 242: Cambridge University Engineering Department.

Dawid, A.P. (1981). Some matrix-variate distribution theory: notational considerations and a Bayesian application. Biometrika, 68(1), 265–274.

Watanabe, S., Nakamura, A., Juang, B.H. (2011). Bayesian linear regression for hidden Markov model based on optimizing variational bounds. In Proceedings of MLSP (pp. 1–6).

Odell, J.J. (1995). The use of context in large vocabulary speech recognition. PhD thesis: Cambridge University.

Maekawa, K., Koiso, H., Furui, S., Isahara, H. (2000). Spontaneous speech corpus of Japanese. In Proceedings of LREC (Vol. 2, pp. 947–952).

Nakamura, A., Oba, T., Watanabe, S., Ishizuka, K., Fujimoto, M., Hori, T., McDermott, E., Minami, Y. (2006). Evaluation of the SOLON speech recognition system: 2006 benchmark using the corpus of spontaneous Japanese. IPSJ SIG Notes, 2006(136), 251–256. (in Japanese).

McDermott, E., Hazen, T.J., Le Roux, J., Nakamura, A., Katagiri, S. (2007). Discriminative training for large-vocabulary speech recognition using minimum classification error. IEEE Transactions on Audio, Speech, and Language Processing, 15(1), 203–223.

Hori, T. (2004). NTT speech recognizer with outlook on the next generation: SOLON. In Proceedings of NTT workshop on communication scene analysis (Vol. 1, p. SP-6).

Hahm, S.J., Ogawa, A., Fujimoto, M., Hori, T., Nakamura, A. (2012). Speaker adaptation using variational Bayesian linear regression in normalized feature space. In Proceedings of Interspeech (pp. 803–806).

Hahm, S.J., Ogawa, A., Fujimoto, M., Hori, T., Nakamura, A. (2013). Feature space variational Bayesian linear regression and its combination with model space VBLR. In Proceedings of ICASSP (pp. 7898–7902).

Blei, D.M., & Jordan, M.I. (2006). Variational inference for Dirichlet process mixtures. Bayesian Analysis, 1(1), 121–144.

Jebara, T. (2004). Machine learning: discriminative and generative (Vol. 755). Springer.

Kubo, Y., Watanabe, S., Nakamura, A., Kobayashi, T. (2010). A regularized discriminative training method of acoustic models derived by minimum relative entropy discrimination. In Proceedings of Interspeech (pp. 2954–2957).

Additional information

The work was mostly done while Shinji Watanabe was working at NTT Communication Science Laboratories.

Appendix: Derivation of Posterior Distribution of Latent Variables

This section derives the posterior distribution of latent variables \(\tilde {q}(\mathbf {V}_{i})\), introduced in Section 3.5, based on the VB framework. To obtain VB posteriors of latent variables, we consider the following integral (this is the same equation as Eq. 43).

In this derivation, we omit the indexes i, k, and t for simplicity. By substituting the concrete form (Eq. 4) of the multivariate Gaussian distribution into Eq. 56, the equation is represented as:

where we use

Now, we focus on the quadratic form (∗1) of the second line of Eq. 57. By considering Σ = C(C)′ in Eq. 6, (∗1) is rewritten as follows:

where we use the cyclic and transpose properties of the trace, as follows:
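For reference, the cyclic property states that tr[ABC] = tr[BCA], and the transpose property states that tr[A′] = tr[A]. The sketch below checks numerically how these properties and the factorization Σ = C(C)′ let a quadratic form be rewritten. It assumes C is the Cholesky factor of Σ, which is only one valid choice, and uses a random vector `x` as a stand-in for the residual in (∗1); the dimension and seed are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(2)
D = 4  # hypothetical feature dimension

B = rng.standard_normal((D, D))
Sigma = B @ B.T + D * np.eye(D)   # symmetric positive-definite covariance
C = np.linalg.cholesky(Sigma)     # one valid choice with Sigma = C C'
x = rng.standard_normal(D)        # stands in for the residual in (*1)

# Direct quadratic form x' Sigma^{-1} x.
quad_direct = x @ np.linalg.solve(Sigma, x)

# Same value after whitening with C: ||C^{-1} x||^2.
z = np.linalg.solve(C, x)
quad_whitened = z @ z

# Same value as a trace, using tr[x' S x] = tr[S x x'] (cyclic property).
quad_trace = np.trace(np.linalg.solve(Sigma, np.outer(x, x)))
```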

We also define (D+1) × (D+1) matrix Γ, D × (D+1) matrix Y, and D × D matrix U in Eq. 59 as follows:

The integral of Eq. 59 over W can be decomposed into the following three terms:

where we use the following property:

and use Eq. 58 in the third term of the second line in Eq. 63.

We focus on the integrals (∗2) and (∗3). Since \(\tilde {q}(\mathbf {W})\) is a scalar value, (∗3) is rewritten as follows:

Here, we use the following matrix properties:

Thus, the integral is finally solved as

where we use

Similarly, we also rewrite (∗2) in Eq. 63 based on Eqs. 66 and 67, as follows:

Thus, the integral is finally solved as

where we use

Thus, we solve all the integrals in Eq. 63.
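Integrals of this kind are expectations of linear and quadratic functions of W under \(\tilde {q}(\mathbf {W})\). As a hedged illustration, and not a reconstruction of the specific properties used above, the sketch below checks the standard second-moment identity E[(W − M)A(W − M)′] = Φ tr[AΩ] for a matrix variate normal distribution whose vec covariance is Ω ⊗ Φ. The sizes, the seed, the matrix `A`, and the helper `random_spd` are hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
D, n = 3, 4  # hypothetical sizes: W is D x n


def random_spd(size):
    """Random symmetric positive-definite matrix of the given size."""
    B = rng.standard_normal((size, size))
    return B @ B.T + size * np.eye(size)


M = rng.standard_normal((D, n))
Phi, Omega = random_spd(D), random_spd(n)
A = rng.standard_normal((n, n))

# Covariance of vec(W) (columns stacked) is kron(Omega, Phi).
# Reshaped so that K[k, i, l, j] = Cov(W[i, k], W[j, l]).
K = np.kron(Omega, Phi).reshape(n, D, n, D)

# E[(W - M) A (W - M)'] assembled entry by entry from those covariances.
second_moment = np.einsum("kl,kilj->ij", A, K)

# Closed form: Phi * tr[A Omega]; the full expectation adds M A M'.
closed_form = Phi * np.trace(A @ Omega)
expected_WAW = M @ A @ M.T + closed_form
```

The check is exact rather than sampled, so it involves no Monte Carlo error.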

Finally, we substitute the integral results of (∗2) and (∗3) (i.e., Eqs. 71 and 71) into Eq. 63, and rewrite Eq. 63 based on the concrete forms of Γ, Y, and U defined in Eq. 62 as follows:

Then, by using the cyclic property in Eq. 60 and Σ = C(C)′ in Eq. 6, we can further rewrite Eq. 63 as follows:

Thus, we obtain the quadratic form with respect to o, which takes the form of a multivariate Gaussian distribution. By recovering the omitted indexes i, k, and t, and substituting the integral result in Eq. 74 into Eq. 57, we finally solve Eq. 43 as:

Here, we use the concrete form of the multivariate Gaussian distribution in Eq. 4.

Cite this article

Watanabe, S., Nakamura, A. & Juang, BH. Structural Bayesian Linear Regression for Hidden Markov Models. J Sign Process Syst 74, 341–358 (2014). https://doi.org/10.1007/s11265-013-0785-8

DOI: https://doi.org/10.1007/s11265-013-0785-8