Abstract

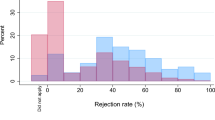

This paper applies data envelopment analysis (DEA) to assess technical efficiency in a large public university. Particular attention is paid to two main activities, teaching and research, and to two large groups, the Science and Technology (ST) sector and the Humanity and Social Science (HSS) sector. The findings, based on data from 2005 to 2009, suggest that the ST sector is more efficient than the HSS sector in terms of research quality, whereas the HSS sector achieves higher efficiency in teaching activities. The efficiency estimates depend strongly on the output specification: using quality proxies, namely three alternative research indices and two teaching indices based on student questionnaires, lowers measured performance and narrows the performance differentials for both research and teaching activities. A bootstrap technique is also used to provide confidence intervals for the efficiency scores and to obtain bias-corrected estimates. The Malmquist index is calculated to measure changes in productivity.
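The bootstrap bias correction mentioned in the abstract can be illustrated with a minimal sketch. It assumes the bootstrap replicates of one DMU's efficiency score have already been generated (for example with the smoothed bootstrap of Simar and Wilson 1998, which is not reproduced here). The function name `bias_corrected`, the crude percentile interval and the replicate values are illustrative assumptions, not necessarily the exact procedure used in the paper.

```python
from statistics import mean


def bias_corrected(theta_hat, boot, alpha=0.05):
    """Bias-corrected DEA score and a simple percentile interval for one DMU.

    theta_hat: efficiency score estimated on the original sample.
    boot: bootstrap replicates of that score (assumed already generated).
    """
    reps = sorted(boot)
    bias = mean(reps) - theta_hat        # bootstrap estimate of the bias
    theta_bc = theta_hat - bias          # equivalently 2 * theta_hat - mean(reps)
    b = len(reps)
    lo = reps[int(alpha / 2 * b)]                    # lower percentile (crude)
    hi = reps[min(b - 1, int((1 - alpha / 2) * b))]  # upper percentile (crude)
    return theta_bc, bias, (lo, hi)


# Hypothetical replicates for a DMU whose original score is 0.90
print(bias_corrected(0.90, [0.92, 0.94, 0.93, 0.95, 0.91]))
```

With these hypothetical replicates the estimated bias is about 0.03 and the bias-corrected score about 0.87; in practice many more replicates (typically thousands) would be drawn.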


Notes

See Buzzigoli et al. (2010) for a brief review of the university system in Italy.

For an evaluation of higher education institutions (HEIs) using parametric methods on Italian data, see Laureti (2008).

The reform was approved by Law 240/2010, although the university under analysis implemented it only at the end of 2013.

In DEA, the organization under study is called the decision-making unit (DMU).

However, if these assumptions were too weak, efficiency levels would be systematically underestimated in small samples, generating inconsistent estimates.

We search for outliers in the dataset using super-efficiency (Andersen and Petersen 1993) and the rho-Tørgersen measure (Tørgersen et al. 1996). Super-efficiency captures the maximum radial change under which an observation remains efficient. The rho-Tørgersen measure, instead, gives the share of potential efficiency associated with actual observations. We find no difference in the efficiency estimates with and without outliers. We therefore report efficiency scores for all DMUs (in our case, it is important to evaluate the efficiency of every DMU under investigation).
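A minimal sketch of the super-efficiency idea follows, assuming an input-oriented, constant-returns-to-scale DEA model solved with SciPy's `linprog` (the note does not state the orientation or software used; the function name and toy data are hypothetical). The evaluated DMU is excluded from its own reference set, so efficient units can score above 1 and unusually high scores flag potential outliers.

```python
import numpy as np
from scipy.optimize import linprog


def super_efficiency(X, Y, o):
    """Input-oriented CRS super-efficiency of DMU o (Andersen-Petersen style).

    X: (n, m) inputs and Y: (n, s) outputs, one row per DMU.
    DMU o is dropped from the reference set, so efficient units can exceed 1.
    """
    X, Y = np.asarray(X, float), np.asarray(Y, float)
    others = [j for j in range(X.shape[0]) if j != o]
    Xr, Yr = X[others], Y[others]
    k = len(others)
    # Decision variables: [theta, lambda_1, ..., lambda_k]; minimise theta.
    c = np.r_[1.0, np.zeros(k)]
    # Inputs:  sum_j lambda_j * x_ij - theta * x_io <= 0
    A_in = np.c_[-X[o][:, None], Xr.T]
    # Outputs: -sum_j lambda_j * y_rj <= -y_ro
    A_out = np.c_[np.zeros((Y.shape[1], 1)), -Yr.T]
    A_ub = np.r_[A_in, A_out]
    b_ub = np.r_[np.zeros(X.shape[1]), -Y[o]]
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, bounds=[(0, None)] * (k + 1), method="highs")
    return res.fun if res.success else float("inf")  # inf if no feasible reference


# Hypothetical data: one input and one output for three DMUs
X = [[2], [4], [5]]
Y = [[2], [3], [2]]
print([round(super_efficiency(X, Y, o), 4) for o in range(3)])
```

In the toy data only DMU 0 is efficient; its super-efficiency of 4/3 says its input could rise by a third before DMU 1 would match it, while the inefficient DMUs keep their ordinary scores.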

Technical efficiency refers to the capacity of DMUs, given the technology used, to produce the highest level of output from a given combination of inputs, or to use the least possible amount of inputs for a given output. Specifically, given that the focus is on the higher education system, technical efficiency means, according to Abbot and Doucouliagos (2003, p. 91), that “the technically efficient university is not able to deliver more teaching plus research output (without reducing quality) given its existing labor, capital and other inputs.”
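The output-oriented reading of this definition (the most output obtainable from given inputs) can be written as a linear programme. The sketch below assumes constant returns to scale, as in the CCR model of Charnes et al. (1978), and uses SciPy's `linprog`; the function name and toy data are illustrative, and the paper's own model specifications are not reproduced here.

```python
import numpy as np
from scipy.optimize import linprog


def output_efficiency(X, Y, o):
    """Output-oriented CRS (CCR) technical efficiency of DMU o, in (0, 1].

    Solves max phi s.t. sum_j lambda_j x_j <= x_o,
    sum_j lambda_j y_j >= phi * y_o, lambda >= 0, and returns 1 / phi.
    """
    X, Y = np.asarray(X, float), np.asarray(Y, float)
    n, m = X.shape
    s = Y.shape[1]
    # Variables: [phi, lambda_1, ..., lambda_n]; linprog minimises, so use -phi.
    c = np.r_[-1.0, np.zeros(n)]
    A_in = np.c_[np.zeros((m, 1)), X.T]    # sum_j lambda_j x_ij <= x_io
    A_out = np.c_[Y[o][:, None], -Y.T]     # phi * y_ro - sum_j lambda_j y_rj <= 0
    A_ub = np.r_[A_in, A_out]
    b_ub = np.r_[X[o], np.zeros(s)]
    res = linprog(c, A_ub=A_ub, b_ub=b_ub, bounds=[(0, None)] * (n + 1), method="highs")
    if not res.success:
        raise ValueError(res.message)
    return 1.0 / -res.fun  # 1.0 means the DMU lies on the frontier


# Hypothetical data: one input and one output for three DMUs
X = [[2], [4], [5]]
Y = [[2], [3], [2]]
print([round(output_efficiency(X, Y, o), 4) for o in range(3)])
```

Under constant returns to scale the input- and output-oriented scores coincide, so either orientation ranks these toy DMUs the same way.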

As described by the university guidelines, each department brings together professors and researchers who, owing to similar research approaches and objectives, belong to the same scientific disciplinary sector, around a broad scientific and cultural project consistent with the teaching and training activities to which the department contributes. Departments promote and manage research, organise doctoral programmes and, under specific agreements and contracts, carry out research and consultancy work at the request of external organisations. The department is run by the department council and the director.

Through the faculties, universities organise their activities in the various subject areas. Faculties coordinate subject courses and arrange them into different degree programmes. They appoint academic staff and decide, always respecting the principle of teaching freedom, how to distribute roles and workload among university teachers and researchers. The faculty is run by the faculty council and the dean.

University guidelines were used to classify departments and faculties into the two groups. The HSS sector has 18 departments and six faculties, while the ST sector has 10 departments and three faculties.

We also consider non-academic staff in order to take into account the administrative staff who support the academic staff and the students.

They did not consider administrative staff in the aggregate measure, and they divided academic staff into four categories so that the distance between two adjacent ranks is 1/4 = 0.25.

The weights have been chosen so that the distance between two adjacent ranks is 1/5 = 0.2.
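The equally spaced rank weights described in the two notes above can be sketched as follows. The notes state only the spacing between ranks; the assumption that the top rank gets weight 1 and each lower rank loses one step (so five ranks give 1, 0.8, 0.6, 0.4, 0.2) is ours, as are the function names and headcounts.

```python
def rank_weights(n_ranks):
    """Equally spaced weights, highest rank first: n/n, (n-1)/n, ..., 1/n."""
    return [(n_ranks - i) / n_ranks for i in range(n_ranks)]


def weighted_staff(headcounts):
    """Weighted staff total; headcounts are ordered from highest to lowest rank."""
    weights = rank_weights(len(headcounts))
    return sum(w * h for w, h in zip(weights, headcounts))


print(rank_weights(4))   # spacing 1/4 = 0.25
print(rank_weights(5))   # spacing 1/5 = 0.2
print(weighted_staff([10, 5, 8, 2, 4]))  # hypothetical headcounts for five ranks
```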

The inclusion of this variable would be important if drop-out rates varied between faculties. Unfortunately, it could not be used for departments because the university statistical office only counts students by faculties.

We did not include the number of citations due to the lack of available data (see Harris 1988 on the debate about the use of citations as a measure of research quality).

The weights have been chosen so that the distance between two adjacent ranks is 1/4 = 0.25.

This measures the financial resources that departments receive from the central government on the basis of their scientific production, and it represents a good signal of research productivity.

Johnes and Johnes (1993) argued that the money received as research grants is spent not only on research but also on other facilities that are inputs into the production process. Thus, grants reflect not only academic research but also income for other research activities.

According to them, “research grants represent an output variable,” as an indicator of a department’s research capability.

The first index is the weighted sum of publications in international journals, the number of patents and the total number of academic staff (for ST sector departments), and the weighted sum of publications in national and international books and monographs and of the total number of academic staff (for HSS sector departments). The second index is calculated as the ratio of the total amount of money obtained for research to the total number of academic staff. Finally, the third index is the number of research products per €10,000 of academic staff costs.
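The second and third research indices are simple ratios. A minimal sketch with hypothetical department figures follows; the function names are illustrative, and the first index is omitted because its publication and patent weights are not reported here.

```python
def resource_attraction(research_funding_eur, academic_staff):
    """Second index: research money obtained per member of academic staff."""
    return research_funding_eur / academic_staff


def products_per_10k(research_products, staff_costs_eur):
    """Third index: research products per EUR 10,000 of academic staff costs."""
    return research_products / (staff_costs_eur / 10_000)


# Hypothetical department: EUR 1.2 million of research funding, 40 academic staff,
# 150 research products and EUR 3 million of academic staff costs.
print(resource_attraction(1_200_000, 40))
print(products_per_10k(150, 3_000_000))
```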

As a robustness check, we also use the unweighted number of graduates (i.e., not weighted by degree classification), and the results are similar.

We are aware that this measure is a potential limitation of our analysis. Indeed, according to Kao and Hung (2008, p. 655), “student evaluation for teachers may be biased by the nature of courses and does not have a common base for comparison if the students have not been taught by all teachers.” Similarly, student satisfaction is a subjective measure and seems to be dominated by course difficulty and average grades. On the other hand, student satisfaction is an important qualitative indicator for higher education institutions. According to Elliott (2002), because of the positive relationship between student satisfaction and institutional characteristics such as student retention and graduation rates, many universities have incorporated some measure of satisfaction into their marketing campaigns, recruitment initiatives and planning processes. According to Elliott and Shin (2002), the assessment of student opinions and attitudes is a modern-day necessity, as institutions of higher education are challenged by a climate of decreased funding, demands for public accountability and increased competition for student enrollments. Thus, keeping these concerns in mind, we believe that a lesson can still be learned from the use of both satisfaction indices.

ER does not include any labour input (i.e., researchers), so there is no double counting.

ET does not cover any staffing costs, so we can rule out any double counting.

Moreover, as underlined by Johnes and Johnes (1995, p. 305), “a technically inefficient DMU could apparently become efficient merely by producing (however wastefully) an unusual type of output, or by forgoing the use of one type of input employed by all other DMUs.” Being aware of this, we carefully select inputs and outputs, also from the quality point of view, taking into account what Kao and Hung (2008) considered the two main difficulties, namely data availability and the difficulty of measuring performance quality. See Johnes (2004) for a discussion of the problems of defining and measuring the inputs and outputs of the higher education production process.

Mean values are calculated over the period 2005–2009. Descriptive statistics for each year are not presented in the paper but are available on request.

We also estimate the average efficiency scores over the 2005–2009 period for each department and faculty. For the sake of brevity, the results are not presented in the paper but are available on request. Overall, 28 departments (DEP), labelled D1 to D28, and 9 faculties (FAC), labelled F1 to F9, have been considered. For the sake of anonymity, numbers have been assigned to the DMUs randomly.

It is also interesting to note that Model 4a (where this index is used as output) yields lower efficiency scores than the other models. This could be due to the nature of the index of capacity to attract resources, which is calculated as the ratio of the total amount of money obtained for research to the total number of academic staff.

We find the same evidence even when the number of graduates is not weighted by degree classification.

A potential limitation of these results is the decision to assign weights to the input EP and to the output NP. We therefore also test how alternative weights for those variables would change the results, and we find no statistically significant difference for either departments or faculties. Results are available on request.

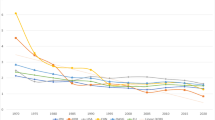

Through the Malmquist analysis, we provide four efficiency/productivity indices for each DMU and a measure of technical progress over time. These are: a) E, the change in technical efficiency under a CRS technology without convexity constraint, which captures whether DMUs get closer to or further away from the efficiency frontier (the “catching-up” effect); b) TC, which measures technical change, i.e., shifts in the efficiency frontier, or whether the production frontier is moving outwards over time (the “frontier shift” effect); technical progress (regress) has occurred if TC is greater (less) than one; c) PEFC, the change in pure technical efficiency under a variable returns-to-scale technology with convexity constraint; d) SC, obtained by dividing the technical efficiency change under constant returns to scale without convexity constraint (E) by the pure technical efficiency change under variable returns to scale with convexity constraint (PEFC), which measures changes in efficiency due to movement toward or away from the point of optimal scale, i.e., the degree to which a unit gets closer to its most productive scale size over the periods under examination; e) TFPC, which measures the change in total output relative to the change in the use of all inputs and thus indicates the degree of productivity change: TFPC > 1 indicates productivity gains, whereas TFPC < 1 indicates productivity losses. Specifically, TFPC can be decomposed into two components, E and TC (TFPC = E × TC).
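Assuming the output-oriented decomposition of Färe et al. (1994), the components above can be computed for one DMU from four constant-returns distance values and two variable-returns distance values. The sketch takes those values as given (obtaining them requires solving one DEA programme per period and frontier combination); the function name, argument names and numbers are hypothetical.

```python
from math import sqrt


def malmquist(d_t_t, d_t_t1, d_t1_t, d_t1_t1, vrs_t, vrs_t1):
    """Malmquist components for one DMU between periods t and t+1.

    d_a_b is the CRS output distance function of the DMU's period-b data
    measured against the period-a frontier; vrs_t and vrs_t1 are the VRS
    distance values against each period's own frontier.
    """
    E = d_t1_t1 / d_t_t                               # catching-up effect
    TC = sqrt((d_t_t1 / d_t1_t1) * (d_t_t / d_t1_t))  # frontier shift
    PEFC = vrs_t1 / vrs_t                             # pure technical efficiency change
    SC = E / PEFC                                     # scale efficiency change
    TFPC = E * TC                                     # total factor productivity change
    return {"E": E, "TC": TC, "PEFC": PEFC, "SC": SC, "TFPC": TFPC}


print(malmquist(d_t_t=0.8, d_t_t1=1.1, d_t1_t=0.7, d_t1_t1=0.9, vrs_t=0.9, vrs_t1=0.95))
```

The product E × TC equals the geometric-mean Malmquist index sqrt[(d_t_t1 / d_t_t) × (d_t1_t1 / d_t1_t)], which is why TFPC splits exactly into these two components.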

We also calculate the Malmquist index for each department and faculty. For the sake of brevity, the results are not presented in the paper but are available on request.

If E > TC, productivity gains are driven mainly by improvements in efficiency, whereas if E < TC they are driven mainly by technological progress.

More specifically, E is the product of pure technical efficiency change (PEFC) and scale efficiency change (SC), i.e., E = PEFC × SC. If PEFC > SC, the main source of efficiency change is PEFC, whereas if PEFC < SC, the main source is the change in scale efficiency (SC).
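The comparison rules stated in the two notes above can be expressed as a small helper. It simply applies those rules to one set of Malmquist components; the function name and the example values are hypothetical.

```python
def main_drivers(E, TC, PEFC, SC):
    """Apply the comparison rules in the notes to one set of Malmquist components."""
    if E > TC:
        productivity = "efficiency change (E)"
    elif E < TC:
        productivity = "technical change (TC)"
    else:
        productivity = "E and TC equally"
    if PEFC > SC:
        efficiency = "pure technical efficiency change (PEFC)"
    elif PEFC < SC:
        efficiency = "scale efficiency change (SC)"
    else:
        efficiency = "PEFC and SC equally"
    return productivity, efficiency


# Hypothetical components, with SC chosen so that E = PEFC * SC
print(main_drivers(E=1.05, TC=0.98, PEFC=1.02, SC=1.05 / 1.02))
```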

Nevertheless, there is still a productivity gain (TFPC > 1).

E decreases from 1.0108 to 1.0048 while TC increases from 0.9716 to 1.0179.

E increases from 1.2356 to 1.3031 and TC decreases from 0.9464 to 0.9235 in Model 2b; E increases from 1.2369 to 1.6218 while TC decreases from 0.8802 to 0.6221 in Model 3b.

TC increases from 0.9634 to 1.0743 and E decreases from 1.057 to 1.0161.

E increases from 0.8852 to 0.9939 and TC decreases from 1.1339 to 0.9494 in Model 1b; E increases from 0.9093 to 1.2281 while TC decreases from 1.3236 to 0.8758 in Model 3b.

References

Abbot, M., & Doucouliagos, C. (2003). The efficiency of Australian universities: a data envelopment analysis. Economics of Education Review, 22, 89–97.

Agasisti, T. (2011). Performances and spending efficiency in higher education: a European comparison through non-parametric approaches. Education Economics, 19(2), 199–224.

Agasisti, T., & Dal Bianco, A. (2009). Reforming the university sector: effects on teaching efficiency. Evidence from Italy. Higher Education, 57(4), 477–498.

Agasisti, T., & Johnes, G. (2010). Heterogeneity and the evaluation of efficiency: the case of Italian universities. Applied Economics, 42(11), 1365–1375.

Agasisti, T., Dal Bianco, A., Landoni, P., Sala, A., & Salerno, M. (2011). Evaluating the efficiency of research in academic departments: an empirical analysis in an Italian region. Higher Education Quarterly, 65(3), 267–289.

Aigner, D., Lovell, K., & Schmidt, P. (1977). Formulation and estimation of stochastic frontier production function models. Journal of Econometrics, 6, 21–37.

Andersen, P., & Petersen, N. C. (1993). A Procedure for ranking efficient units in data envelopment analysis. Management Science, 39, 1261–1264.

Atkinson, S. E., & Wilson, P. W. (1995). Comparing mean efficiency and productivity scores from small samples: a bootstrap methodology. Journal of Productivity Analysis, 6, 137–152.

Banker, R. D., Charnes, A., & Cooper, W. W. (1984). Some models for estimating technical and scale inefficiencies in data envelopment analysis. Management Science, 30, 1078–1092.

Blöndal, S., Field, S., & Girouard, N. (2002). Investment in human capital through post-compulsory education and training: selected efficiency and equity aspects. OECD Economics Department Working Papers, No. 333. OECD Publishing. https://doi.org/10.1787/778845424272.

Bonaccorsi, A., Daraio, C., & Simar, L. (2006). Advanced indicators of productivity of universities. An application of robust nonparametric methods to Italian data. Scientometrics, 66(2), 389–410.

Buzzigoli, L., Giusti, A., & Viviani, A. (2010). The evaluation of university departments. A case study for Firenze. International Advances in Economic Research, 16, 24–38.

Carrington, R., Coelli, T., & Rao, D. S. P. (2005). The performance of Australian universities: conceptual issues and preliminary results. Economic Papers, 24, 145–163.

Catalano, G., Mori, A., Silvestri, P., & Todeschini, P. (1993). Chi paga l’istruzione universitaria? Dall’esperienza europea una nuova politica di sostegno agli studenti in Italia. Milano: Franco Angeli.

Caves, D. W., Christensen, L. R., & Diewert, W. E. (1982). The economic theory of index numbers and the measurement of input, output, and productivity. Econometrica, 50, 1393–1414.

Cazals, C., Florens, J. P., & Simar, L. (2002). Nonparametric frontier estimation: a robust approach. Journal of Econometrics, 106, 1–25.

Charnes, A., Cooper, W. W., & Rhodes, E. (1978). Measuring the efficiency of decision making units. European Journal of Operational Research, 2, 429–444.

Chizmar, J. F., & Zak, T. A. (1983). Modeling multiple outputs in educational production functions. American Economic Review, 73(2), 18–22.

Chizmar, J. F., & Zak, T. A. (1984). Canonical estimation of joint educational production functions. Economics of Education Review, 3(1), 37–43.

Coelli, T., Rao, D. S. P., & Battese, G. E. (1998). An introduction to efficiency and productivity analysis. Boston: Kluwer Academic Publishers.

Cooper, W. W., Seiford, L. M., & Zhu, J. (2004). Handbook on data envelopment analysis. Springer (Kluwer Academic Publishers).

Efron, B. (1979). Bootstrap methods: another look at the jackknife. Annals of Statistics, 7, 1–26.

Efron, B., & Tibshirani, R. J. (1993). An introduction to the bootstrap. London: Chapman & Hall.

Elliott, K. M. (2002). Key determinants of student satisfaction. Journal of College Student Retention, 4, 271–279.

Elliott, K. M., & Shin, D. (2002). Student satisfaction: an alternative approach to assessing this important concept. Journal of Higher Education Policy and Management, 24, 197–209.

Färe, R., Grosskopf, S., Norris, M., & Zhang, Z. (1994). Productivity growth, technical progress, and efficiency change in industrialized countries. American Economic Review, 84, 66–83.

Farrell, M. J. (1957). The measurement of productive efficiency. Journal of the Royal Statistical Society, 120, 253–290.

Ferrier, G. D., & Hirschberg, J. G. (1997). Bootstrapping confidence intervals for linear programming efficiency scores: with an illustration using Italian banking data. Journal of Productivity Analysis, 8, 19–33.

Flegg, A. T., Allen, D. O., Field, K., & Thurlow, T. W. (2004). Measuring the efficiency of British universities: a multi-period data envelopment analysis. Education Economics, 12(3), 231–249.

Giannakou, M. (2006). Chair’s Summary, Meeting of OECD Education Ministers: Higher Education - Quality, Equity and Efficiency, Athens, Greece. Available from www.oecd.org/edumin2006

Greene, W. H. (1980). On the estimation of a flexible frontier production model. Journal of Econometrics, 13, 101–115.

Halkos, G., Tzeremes, N. G., & Kourtzidis, S. A. (2012). Measuring public owned university departments’ efficiency: a bootstrapped DEA approach. Journal of Economics and Econometrics, 55(2), 1–24.

Harris, G. T. (1988). Research output in Australian university economics departments, 1974–83. Australian Economic Papers, 27, 102–110.

Johnes, J. (2004). Efficiency measurement. In G. Johnes & J. Johnes (Eds.), The international handbook on the economics of education. Cheltenham: Edward Elgar.

Johnes, J. (2008). Efficiency and productivity change in the English higher education sector from 1996/97 to 2004/05. The Manchester School, 76(6), 653–674.

Johnes, G., & Johnes, J. (1993). Measuring the research performance of UK economics departments: an application of data envelopment analysis. Oxford Economic Papers, 45, 332–347.

Johnes, G., & Johnes, J. (1995). Research funding and performance in UK university departments of economics: a frontier analysis. Economics of Education Review, 14(3), 301–314.

Kao, C., & Hung, H. T. (2008). Efficiency analysis of university departments: an empirical study. Omega, 36, 653–664.

Kocher, M. G., Luptàcik, M., & Sutter, M. (2006). Measuring productivity of research in economics. A cross-country study using DEA. Socio-Economic Planning Sciences, 40, 314–332.

Koksal, G., & Nalcaci, B. (2006). The relative efficiency of departments at a Turkish engineering college: a data envelopment analysis. Higher Education, 51, 173–189.

Laureti, T. (2008). Modelling exogenous variables in human capital formation through a heteroscedastic stochastic frontier. International Advances in Economic Research, 14(1), 76–89.

Leitner, K.-H., Prikoszovits, J., Schaffhauser-Linzatti, M., Stowasser, R., & Wagner, K. (2007). The impact of size and specialisation on universities’ department performance: a DEA analysis applied to Austrian universities. Higher Education, 53, 517–538.

Madden, G., Savage, S., & Kemp, S. (1997). Measuring public sector efficiency: a study of economics departments at Australian universities. Education Economics, 5(2), 153–167.

OECD (2008). Tertiary education for the knowledge society. Paris: OECD Publishing. Available at www.oecd.org/edu/tertiary/review

Ray, S. C., & Desli, E. (1997). Productivity growth, technical progress and efficiency change in industrialized countries: comment. American Economic Review, 87(5), 1033–1039.

Sarrico, C. S., Teixeira, P. N., Rosa, M. J., & Cardoso, M. F. (2009). Subject mix and productivity in Portuguese universities. European Journal of Operational Research, 197(2), 287–295.

Simar, L., & Wilson, P. W. (1998). Sensitivity analysis of efficiency scores: how to bootstrap in non-parametric frontier models. Management Science, 44(1), 49–61.

Simar, L., & Wilson, P. W. (1999). Estimating and bootstrapping Malmquist indices. European Journal of Operational Research, 115, 459–471.

Tauer, L. W., Fried, H. O., & Fry, W. E. (2007). Measuring efficiencies of academic departments within a college. Education Economics, 15, 473–489.

Thursby, J. G. (2000). What do we say about ourselves and what does it mean? Yet another look at economics department research. Journal of Economic Literature, 38, 383–404.

Tomkins, C., & Green, R. (1988). An experiment in the use of data envelopment analysis for evaluating the efficiency of UK university departments of accounting. Financial Accountability & Management, 4, 147–164.

Tørgersen, A. M., Førsund, F. A., & Kittelsen, S. A. C. (1996). Slack-adjusted efficiency measures and ranking of efficient units. Journal of Productivity Analysis, 7, 379–398.

Tremblay, K., Lalancette, D., & Roseveare, D. (2012). Assessment of higher education learning outcomes: feasibility study report, Volume 1. OECD.

Tyagi, P., Yadav, S. P., & Singh, S. P. (2009). Relative performance of academic departments using DEA with sensitivity analysis. Evaluation and Program Planning, 32, 168–177.

Worthington, A., & Lee, B. L. (2008). Efficiency, technology and productivity change in Australian universities, 1998–2003. Economics of Education Review, 27, 285–298.


Appendix



About this article

Cite this article

Barra, C., Zotti, R. Measuring Efficiency in Higher Education: An Empirical Study Using a Bootstrapped Data Envelopment Analysis. Int Adv Econ Res 22, 11–33 (2016). https://doi.org/10.1007/s11294-015-9558-4


DOI: https://doi.org/10.1007/s11294-015-9558-4