Abstract

Back-Projection is the major algorithm in Computed Tomography to reconstruct images from a set of recorded projections. It is used for both fast analytical methods and high-quality iterative techniques. X-ray imaging facilities rely on Back-Projection to reconstruct internal structures in material samples and living organisms with high spatial and temporal resolution. Fast image reconstruction is also essential to track and control processes under study in real-time. In this article, we present efficient implementations of the Back-Projection algorithm for parallel hardware. We survey a range of parallel architectures presented by the major hardware vendors during the last 10 years. Similarities and differences between these architectures are analyzed and we highlight how specific features can be used to enhance the reconstruction performance. In particular, we build a performance model to find hardware hotspots and propose several optimizations to balance the load between texture engine, computational and special function units, as well as different types of memory maximizing the utilization of all GPU subsystems in parallel. We further show that targeting architecture-specific features allows one to boost the performance 2–7 times compared to the current state-of-the-art algorithms used in standard reconstructions codes. The suggested load-balancing approach is not limited to the back-projection but can be used as a general optimization strategy for implementing parallel algorithms.

Similar content being viewed by others

Avoid common mistakes on your manuscript.

1 Introduction

X-ray tomography is a powerful tool to investigate materials and small animals at the micro- and nano-scale [1]. Information about X-ray attenuation or/and phase changes in the sample is used to reconstruct its internal structure. Recent advances in X-ray optics and detector technology have paved the way for a variety of new X-ray imaging experiments aiming to study dynamic processes in materials and to analyze small organisms in vivo. At the Swiss Light Source (SLS) scientists were able to take high quality 3D snapshots of 150 Hz oscillations of a blowfly flight motor [2]. A temporal resolution of 20 ms was achieved during a stencil test performed at SLS [3] and also in the analysis of morphological dynamics of fast-moving weevils at the ANKA synchrotron at KIT [4].

To achieve these results, the instrumentation used at imaging beamlines has recently undergone a major update. The installed streaming cameras are able to deliver up to hundreds of thousands of frames per second with a continuous data rate up to 8 GB/s [5]. Newly developed control systems at ANKA [6], SLS [5], and other synchrotron facilities use the acquired imaging information to track the processes under study and adjust the instrumentation accordingly. These control systems rely highly on the performance of the integrated image processing frameworks. Faster acquisition and a high level of automation is essential to study dynamic phenomena and at the same time enables experiments with significantly increased sample throughput. For example, in 2015 Diamond Light Source (DLS) reported that typically about 3000 scans are recorded during 5 days of operation at a single imaging beamline [7]. Consequently, the amount of data generated at imaging beamlines quickly grows and results in a steep rise of the required computing power. In order to achieve higher temporal resolution and to prolong the duration of experiments, advanced methods are developed that incorporate a priori knowledge in the reconstruction procedure. These methods are able to produce high-quality images from undersampled and underexposed measurements, as demonstrated by [8, 9]. Unfortunately these methods are computationally significantly more demanding than traditional reconstruction algorithms and further increase the load on the computing infrastructure [10].

To tackle the performance challenge several reconstruction frameworks have been developed and optimized to utilize the parallel capabilities of nowadays computing architectures. At SLS GridRec, a fast reconstruction approach optimized for conventional CPU technology, has been adopted [11]. The reconstruction is scheduled across a dedicated cluster and reconstructs a 3D image within a couple of minutes [5]. Other frameworks use GPUs to accelerate the computation and are able to achieve minute-scale reconstructions at a single node equipped with multiple GPU adapters. PyHST is developed at ESRF and uses the CUDA framework to offload image reconstruction to NVIDIA GPUs [12]. The second version of PyHST provides also a number of iterative reconstruction techniques [13]. The UFO parallel computing framework is used at ANKA synchrotron to realize in-vivo tomography and laminography experiments [14, 15]. It constructs a data processing workflow by combining basic building blocks in a graph structure. OpenCL is used to execute the reconstruction at parallel accelerators with a primary focus on NVIDIA and AMD GPUs. ASTRA is a fast and flexible development platform for tomographic algorithms with MATLAB and python interfaces [16, 17]. It is implemented in C++ and uses CUDA to offload computations to GPU. Several other frameworks are based on the ASTRA libraries to provide GPU-accelerated reconstruction, for instance the Savu framework at DLS [7] or TomoPy at the Advanced Photon Source (APS) [18]. Recent versions of TomoPy also support UFO and GridRec as backends. All of the GPU-accelerated frameworks are capable to distribute the computation to a GPU cluster as well.

While most of the nowadays imaging frameworks rely heavily on parallel hardware to speed-up the reconstruction, specific features of the GPU architecture are rarely considered. On other hand, the hardware architectures differ significantly [19]. Organization of memory and cache hierarchies, performance balance between different types of operations, and even the type of parallelism varies. A significant speed-up is possible if details of the specific architecture are taken into account as illustrated in [20]. Fast execution is especially important if the reconstruction is embedded in a control workflow. Minimal latency is essential to track faster processes and to improve the achieved spatial and temporal resolutions. Due to unavoidable communication overhead, it is not always possible to reduce the latencies by scaling the reconstruction cluster.

For online monitoring and control, normally fast analytical methods are used to reconstruct 3D images. There are two main approaches: Filtered Back Projection (FBP) and methods based on the Fourier Slice Theorem [21]. The later methods are asymptotically faster, but due to the involved interpolation in the Fourier domain are more sensitive to the quality of the available projections. For typical geometries Fourier-based methods are several times faster using the same computing hardware [22] and should be preferred if the computing infrastructure is limited to general-purpose processors only [5]. A recent study suggests to implement back projection as convolution in log-polar coordinates in order to gain high reconstruction speed with interpolation in the image domain [23]. However, this new method has not yet been adopted in production environments. Still, Filtered Back Projection is the method of choice, largely due to it simplicity and robustness. Therefore, the efficiency of the FBP implementation is still crucial for the operated monitoring and control systems. Furthermore, methods used for low dose tomography normally consist out of sequences of forward and back projections. And, thus, a faster implementation of the back projection lowers also the computational demands for high-quality offline reconstruction and might reduce the required hardware investments.

While there are several articles aiming at optimization of Back Projection for general-purpose processors and Intel Xeon-Phi accelerators [24], up to our knowledge there are no publications considering the variety of GPU architectures. A number of papers addresses specific GPU architectures [25, 26]. Multiple papers perform a general analysis of a range of GPU architectures, reveal undisclosed details trough micro-benchmarking, and propose guidelines for performance optimization [27,28,29]. This information is invaluable to understand factors limiting performance on a specific architecture and to find an alternative approach to achieve a better performance. Several papers propose methods to auto-tune computation kernels [30]. However, the tuning is limited to finding optimal configuration of pre-defined parameters like desired occupancy, dimensions of execution blocks, etc. For instance, there are no automated solutions to tune the balance between the texture engine and the computational cores.

In [31], we presented two highly-optimized back-projection algorithms for NVIDIA Pascal GPUs and a hybrid approach to balance the load between different GPU subsystems using both in parallel. While the algorithms can be used on different hardware, multiple modifications are required to address the differences in the architectures efficiently. Furthermore, the proposed hybrid approach is only suitable for the NVIDIA GPUs of a few latest generations. A different scheme to balance load is required for AMD, Intel, and older NVIDIA GPUs. In this paper, we review a variety of parallel architectures presented in the last 10 years and establish a methodology to expand the original work to different parallel hardware. We discuss hardware differences in detail, build performance model, and demonstrate how these differences can be addressed to optimize the performance of the FBP algorithm. Particularly, we suggest modifications to adapt the developed algorithms for the architectures with on-chip memory optimized for 64-bit access. To address further differences in memory subsystems, we propose several alternative caching methods. We introduce an approach to reduce the overall number of executed instructions for systems with a bottleneck in the instruction throughput. We discuss optimal blocking strategies in great detail and suggest how the code-generation can be tweaked on the NVIDIA platform. We also propose two new methods to balance the load between different GPU subsystems. One targets NVIDIA Kepler architecture and another can be applied universally but with a minor penalty to the quality. The proposed performance model allows us to estimate the speed also for future architectures and select the appropriate modification and parametrization of the algorithms. Up to our knowledge, we present the first comprehensive overview of the GPU architectures across multiple vendors and GPU generations. Furthermore, using the back-projection algorithm as an example, we also illustrate how specific hardware features can be addressed and estimate possible gains. So the contribution of this paper goes beyond the proposed back-projection algorithm and also suggests optimization strategies suitable for other applications.

In this paper we focus on the optimizations of the back-projection algorithm and only briefly mention the organization of data flow as it is already explained in literature [12, 15]. We also do not cover scaling issues since the proposed optimizations can be easily integrated in existing frameworks like ASTRA, PyHST, or UFO which provide multi-GPU and GPU-cluster support already. The article is organized as follows. The hardware setup, software configuration, and pseudo-code conventions are listed in Sect. 2. A short introduction to parallel architectures that is required to understand the proposed optimizations is given in Sect. 3. In this section we also highlight the differences between the considered parallel architectures. The Filtered Back Projection algorithm and its state-of-the-art implementation are presented in Sect. 4. A number of optimizations to this implementation are proposed in Sect. 5. An alternative implementation relaying on a different set of hardware resources is developed in Sect. 6. A hybrid approach combining both approaches to fully utilize all hardware resources is presented in Sect. 7. The achieved performance improvements are finally discussed in Sect. 8.

2 Setup, methodology, and conventions

2.1 Hardware platform

To evaluate the performance of the proposed methods, we have selected 9 AMD and NVIDIA GPUs with different micro-architectures. Table 1 summarizes the considered GPUs. These GPUs were assembled into the 3 GPU servers. The newer NVIDIA cards with Maxwell and Pascal architectures were installed in a Supermicro 7047GT based server specified in Table 2. The older NVIDIA cards and all AMD cards were installed in two identical systems based on the Supermicro 7046GT platform. The full specification is given in Table 3. Additionally, we have tested how the developed code is performing on an Intel Xeon Phi 5110P accelerator. The accelerator was installed in the first platform along with the newer NVIDIA cards.

2.2 Software setup

All described systems were running OpenSuSE 13.1. The code for the NVIDIA cards was developed using the CUDA framework. As newer versions of the framework have dropped support for older GPUs, we have used CUDA 6.5 for the NVIDIA GeForce GTX295 card and CUDA 8.0 for other NVIDIA GPUs. The AMD version of the code is based on OpenCL and was compiled using AMD APPSDK 3.0. Additionally, we have tested the performance of Xeon CPUs and a Xeon Phi accelerator using Intel SDK for OpenCL. Since the latest version of Intel OpenCL SDK does not support Xeon Phi processors any more, again we needed to use two different SDK versions. The newer one was used to evaluate the performance of the Xeon processors while the older one served to execute the developed methods on the Xeon Phi accelerator. All installed software components are summarized in Table 4.

2.3 Benchmarking strategy

In this article we are not aiming to precisely characterize the performance of the graphics cards, but rather validate the efficiency of the proposed optimizations. For this reason we take a relatively lax approach to the performance measurements. In most tests, we use a data set consisting of 2048 projections with dimensions of 2048 by 2048 pixels each. 512 slices with same dimensions are reconstructed and the median reconstruction time is used to estimate the performance.

Starting with the Kepler architecture, NVIDIA introduces the GPUBoost technology to adapt the clock speed according to the current load and the processor temperature [32]. To avoid significant performance discrepancies, we run a heat-up procedure until the performance stabilizes. Furthermore, we verify that the actual hardware clock measured before start of measurements (but after the heat-up procedure) does not significantly differ from the clock measured after the measurements. Otherwise, we re-run the test. Finally, we exclude all I/O operations in the benchmarks. The reconstructions are executed using dummy data and the results are discarded without transferring them back to the system memory.

2.4 Quality evaluation

Some of the suggested optimizations alter the resulting reconstruction. To assess the effect on quality, we compare the obtained results with the standard reconstruction in such cases. The standard Shepp Logan Head Phantom with a resolution of 1024 \(\times\) 1024 pixels is used for the evaluation [33]. We also illustrate the differences between standard and reduced quality methods using a cross-section slice from a real volume with a fossilized wasp from a recent experiment conducted at ANKA synchrotron [34]. The projection images were recorded using a 12-bit pco.dimax camera [35]. More details about the setup of the imaging system are available in the referenced article. As the changes are typically small and are hardly visible in the 2D image, we show a profile along vertical line crossing most of the features in the slice, see Fig. 1.

2.5 Pseudo-code conventions

To avoid long code listings we use pseudo-code to describe the algorithms. We use mixture of a mathematical and a C-style notation to keep it minimalistic and easy to follow. C syntax is mostly adapted for operations, loops, and conditionals. We use \(\mathbin {/}\) to denote integer division and \(\%\) for modulo operation. No floating point division is performed in any of algorithms. The division is always executed on positive integer arguments and produces integer number which is rounded towards zero. The standard naming scheme for variables is used across all presented algorithms. We group related variables together. The same letter is used to refer all variables of the group and the actual variable is specified using subscript. Furthermore, some algorithms use shared memory to cache the data stored in global or constant memory. In such cases, we keep the variable name, but add superscript indicating the memory domain. For instance, \(c_s^S\) points to the sine of the projection angle stored in the shared memory. c is a group of variables storing the projection constants. \(c_s\) refers specifically the sine of the projection angle and the superscript \(\cdot ^S\) indicates that the copy in shared memory is accessed. All variables used across the algorithms are listed in Tables 5, 6, and 7. The superscripts used to indicate memory segment are specified in Table 8.

We use \(\mathbf {\cdot }\) symbol to denote all vector variables, i.e. float2, float4, etc. Furthermore, all proposed algorithms are capable to reconstruct 1, 2, or 4 slices in parallel. If more than 1 slice is reconstructed, the accumulator and a few other temporary variables use the floating-point vector format to store values for multiple slices. These variables are marked with \(\tilde{\cdot }\). All arithmetic operations in this case are performed in vector form and affect all slices. The vector multiplication is performed element wise as it would be in CUDA and OpenCL. We use the standard C notation to refer array indexes and components of the vector variables. The arrays are indexed from 0. For instance \(\tilde{s}[0].x\) refers to the first component of the accumulator. The assignment between vector variable and scalars are shown using curly braces, like \(\{x, y\} = \tilde{s}[0]\). The floating point constants are shown without C type specification. However, it is of utmost importance to qualify all floating-point constants as single precision in the C code, i.e. using 0.5f in place of 0.5. Otherwise the double-precision arithmetic will be executed severely penalizing performance on majority of consumer-grade GPUs.

To perform thread synchronization and to access the texture engine, the algorithms rely on a few functions provided by CUDA SDK or defined in the OpenCL specifications. To preserve neutrality of notation, we use abbreviated keywords to reference this functions. This list of used abbreviations along with the corresponding CUDA and OpenCL functions are listed in Table 9. Actually, the syntax of OpenCL and CUDA kernels is very closely related. Only a few language keywords are named differently. It is a trivial task to generate both CUDA and OpenCL kernels based on the provided pseudo-code.

We use integer division and modulo operations across the code listings. These operations are very slow on GPUs and actually should be performed as bit mangling operations instead. However, the optimizing compilers can replace them automatically by the faster bit-mangling instructions. So, we are free to use notation which is easier to read. There are a few other cases where the optimization is left to the compiler.

3 Parallel architectures

The architectures of nowadays GPUs are rather heterogeneous and includes multiple types of computational elements. The performance balance between these elements is shifting with each release of a new GPU architecture. To feed the fast computational units with data, a complex hierarchy of memories and caches is introduced. But the memories are very sensitive to the access patterns and the optimal patterns also differ between the hardware generations [36]. In this section we briefly explain the GPU architecture and elaborate differences between the considered GPUs with a focus on the aspects important to implement back projection efficiently. To simplify reading for a broader audience, we use the more common CUDA terminology across this paper.

3.1 Hardware architecture

The typical GPU consists of several semi-independent Streaming Multiprocessors (SM) which share global GPU memory and L2 cache [37]. Several Direct Memory Access (DMA) engines are included to move data to and from system memory. Each SM includes a task scheduler, computing units, a large register file, a fast on-chip (shared) memory, and several different caches. There are a few types of computing units. The number crunching capabilities are provided by a large number of Arithmetic Units (ALU) also called Core units by NVIDIA. ALUs are aimed on single-precision floating point and integer arithmetic. Some GPUs also include specialized half precision and double precision units to perform operations with these types faster. There are also architecture-specific units. All NVIDIA devices include Special Function Units (SFU) which are used to quickly compute approximates of transcendent operations. The latest Volta architecture includes Tensor units aimed on fast multiplication of small matrices to accelerate deep learning workloads [38]. AMD architectures adapt scalar units to track loop counters, etc [39]. The memory operations are executed by Load/Store (LD/ST) units. The memory is either accessed directly or Texture units are used to perform a fast linear interpolation between the neighboring data elements while loading the data.

The computing units are not operating independently, but grouped in multiple sets which are operating in a Single Instruction Multiple Data (SIMD) fashion. Each set is able to execute the same instruction on multiple data elements simultaneously. Several such sets are included in SM and, often, can be utilized in parallel. The SM scheduler employs data- and instruction-level parallelism to distribute the work-load between all available sets of units. However, it is architecture depended which combination of instructions can be executed in parallel. The simplified and generalized scheme of GPU architecture is presented in Fig. 2 and is further explained in the next subsections.

Generalized scheme of GPU architecture. A typical GPU includes DMA engines, Global GPU memory, L2 cache, and multiple Streaming Multiprocessors (SM). The integrated DMA engines are primarily used to exchange data between GPU and system memory over PCIe bus, but also can be utilized to communicate with other devices at the PCIe bus (right). Each SM includes several types of caches and computational units (left)

3.2 Execution model

The GPU architectures rely on SIMT (Single Instruction Multiple Threads) processing model [36]. The problem is represented as a 3D grid of tasks or threads in CUDA terminology. All threads are executing the same code which is called kernel. The actual work of a thread is defined by its index (x, y, z) within the grid. Typically, a mapping between a thread index and image coordinates is established and each GPU thread processes the associated pixel or a group of pixels. Since memory access patterns matter, finding a suitable mapping has a very significant impact on the performance. In many practical applications, multiple mappings are used during the execution of a kernel. Particularly, all presented algorithms use 2 to 4 different mappings during the kernel execution.

The grid is split in multiple blocks of the same size. The blocks are assigned to a specific SM and are executed on this SM exclusively. Consequently, the information between threads of the same block can be exchanged using the fast shared memory local to SM. When a block is scheduled, all threads belonging to this block are made resident on the selected SM and all required hardware resources are allocated. A dedicated set of registers is assigned to each of the threads. However, not all threads of the block are executed simultaneously. The SM distributes resident threads between computational units in portions of 32/64 threads which are called warps. All threads of a warp are always executed simultaneously using one of available sets of units. If the execution flow within the warp diverges, it is executed sequentially: first all threads of the first branch are executed while others are kept idle and, then, vice-versa. To achieve optimal performance it is important to keep all threads of a warp synchronized, but the execution of complete warps may diverge if necessary. Similarly, the memory access patterns and locality are extremely important within a warp, less important within a block, but rather irrelevant between different blocks. GPUs always assign threads with consecutive indexes to the same warp and the thread mappings are always constructed with these considerations in mind.

At each given moment, the SM executes a few warps while several others are idle, either waiting for memory transaction to complete or for a set of units to become available. This is one of the mechanisms used to hide latencies associated with long memory operations. While one warp is set aside waiting for the requested data, the computational units are kept busy executing other resident warps. As the registers are assigned to all threads permanently and are not saved/restored during scheduling, the switching of the running warp inflicts no penalty.

3.3 Memory hierarchy

Compared to a general-purpose processor the ratio between computational power and throughput of the memory subsystem is significantly higher on GPUs. To feed the computation units with data, the GPU architectures rely on multiple types of implicit and explicit caches which are optimized for different use cases. Furthermore, the maximum bandwidth of GPU memory is only achieved if all threads of a warp are accessing neighboring locations in memory. For optimal performance some architectures may require even stricter access patterns.

There are 3 types of general-purpose memory available in the GPU. A large amount of global memory is accessible to all threads of the task grid. Much smaller, but significantly faster shared memory is local to a thread block. The thread-specific local variables are normally hold in registers. If there is not enough register space, a part of variables may be offloaded to the local memory. The thread-specific, but dynamically addressed arrays are always stored in the local memory (i.e. if array addresses can’t be statically resolved during the compilation stage). In fact, the local memory is a special area of the global memory. But the data will be actually written and read to/from L1 or L2 cache unless an extreme amount of local memory is required. Even then, access to variables in the local memory inflicts a severe performance penalty compared to the variables kept in the registers and should be avoided if possible.

To reduce the load on the memory subsystem, GPUs try to coalesce the global memory accesses into as few transactions as possible. This can only be realized if the threads of a warp are addressing adjacent locations in the memory. The memory controller aggregates the addresses requested by all threads of a warp and issues a minimal possible amount of 32- to 128-byte wide transactions. These transactions are subject to alignment requirements as well. It does not matter in which order the addresses are requested by the threads of a warp. The maximum bandwidth is achieved if as few as possible of such transactions are issued to satisfy the data request of the complete warp. This was different in older hardware when the stricter access patterns had to be followed. If it is not possible to implement coalesced access strategy, the shared memory is often used as explicit cache to streamline accesses to the global memory [40].

The shared memory is composed out of multiple data banks. The banks are 32- or 64-bit wide and are organized in a such way that successive words are mapped to successive banks. The shared memory bank conflict occurs if the threads of a warp are accessing multiple memory locations belonging to the same bank. The conflicts causes warp serialization and may inflict a significant penalty to the shared memory bandwidth. Furthermore, the achieved bandwidth depends on a bit-width of the accessed data. The Kepler GPUs are equipped with 64-bit shared memory and only deliver full bandwidth if 64-bit data is accessed. While the AMD Cypress and Tahiti GPUs are equipped with 32-bit shared memory, the performance is still considerably improved if 64-bit operations are performed. Increasing the data size beyond 64-bit has a negative impact on the performance on some architectures. 128-bit loads from shared memory always cause bank conflicts on NVIDIA GT200, NVIDIA Fermi, and all AMD architectures. We tackle the differences between shared memory organization in Sects. 6.3 and 6.4.

Most of the GPU architectures provide both L1 and L2 caches. However, the amount of the cache per compute element is quite low. On NVIDIA Fermi and Kepler GPUs, both L1 cache and shared memory are provided using the same hardware unit and the ratio between the size of L1 cache and the shared memory is configured at compilation stage [37, 41]. Only buffers that are read-only during a complete execution of a kernel are usually cached in L1. This property is not always detected by the compiler and should be either hinted in the code or enforced using a special CUDA intrinsic instruction [41]. There are two additional caches optimized for specific use-cases. The constant memory is used to store parameters which are broadcasted to all threads of the grid. For optimal performance 64-bit or 128-bit access is required [42]. The texture engine provides a cache optimized for spatial access. While the line of L1 cache is typically 128-byte long, the texture cache operates with lines of 32-bytes allowing to fetch the data from multiple rows of an image as required to perform bi-linear interpolation.

3.4 Texture engine

The texture engine associates a dimensional information with buffers in the global GPU memory [43]. By doing so, it is able to interpret the memory as a multi-dimensional object and perform implicit interpolation if a texel with fractional coordinates is requested. Nearest-neighbor or linear interpolation modes are supported. The texture engines are able to work with a variety of data types. Besides simple integer and floating-point numbers, they are also capable to interpolate and return the values encoded in standard vector types. The performance is defined by the number of texels processed per time unit and is called texture filter rate. Up to a threshold, the filter rate is independent from the actually used data type. The same number of texels is returned per second if either 8, 16, or 32-bits are used to encode the texel values. For the larger vector types the theoretical filter rate, however, is not actually reached. Depending on the GPU architecture, a maximum 32-, 64-, or 128-bit values are processed at a full speed.

To achieve maximum performance it is also necessary to ensure the spatial locality of the texture fetches. The locality is important at several levels. At a block level it results in a high level of texture cache utilization. A more dense access layout within a warp reduces the number of required transactions to the texture cache. While it is not documented, the distribution of the fetch locations between groups of 4 consecutive threads impacts performance significantly if a bi-linear interpolation is performed. To verify it, we developed a small benchmark using the techniques proposed by Konstantinidis and Cotronis for gpumembench and mixbench suites [42, 44]. Figure 3 shows two different thread assignments to fetch 16 texels from a 4-by-4 pixel square. The fetched coordinates are always slightly shifted from the pixel centers to ensure that the bi-linear interpolation is actually executed. There is a little difference if 32-bit data is accessed. For 64-bit data, however, the thread assignments following Z-order curve reach almost 100% of the theoretical maximum while only 50% is achieved if simple linear layout is used. Section 5.5 discusses the effect of the optimized fetch locality on a performance of tomographic reconstruction.

We also used the developed benchmark to find the maximum size of fetched data which is still filtered at full speed. Our results show that all NVIDIA GPUs starting with Fermi benefit from the 64-bit texture fetches if requests are properly localized. It is also supported by the latest of the considered chips from AMD. However, the OpenCL kernel must be compiled with OpenCL 2.0 support enabled. It is done by passing -cl-std=CL2.0 flag to clBuildProgram() call. Otherwise, the full performance is only achieved if the nearest-neighbor interpolation is performed. This is always the case for older AMD devices. If the texture engine is configured to perform linear-interpolation on 64-bit data, only the half of throughput is delivered on these AMD architectures. On other hand, all AMD devices are able to deliver the full performance using the 128-bit data if the nearest-neighbor interpolation is utilized. The NVIDIA devices are limited by 64-bit in both cases.

3.5 Task partitioning

The number of resident threads directly affects the ability of the SM to hide memory latencies. Each architecture limits the maximum number of resident warps per SM. Since SM has only a limited amount of registers and shared memory, the actual number of resident warps could be bellow this limit. The ratio between the actual and the maximum number of resident warps is called occupancy and has a significant impact on the performance. The complexity of the kernel dictates how many registers is required per thread and, hence, restricts the maximum amount of resident threads on the SM. It is possible to target occupancy on NVIDIA platform. If a higher occupancy is requested, the CUDA compiler either reduces the number of used registers in a price of repeating some computations or offloads part of the used variables in the local memory. Vice-versa, the compiler may perform more aggressive caching and pre-fetching if lower occupancy is targeted. Both approaches may significantly improve the performance under different conditions. The optimal occupancy depends on both, work-load and hardware capabilities. On one hand, it should be high enough to ensure that the SM scheduler always has warps ready to execute. On other hand, prefetching may significantly improve performance of memory bound applications. Furthermore, offloading variables to local memory will not necessarily harm the performance if the local memory is fully backed by L1 cache. Consequently, more registers can be made available for prefetching also without decreasing occupancy. However, the shared memory available to applications is reduced on Fermi and Kepler platforms if a large amount of L1 cache is dedicated to the local memory. A very detailed study of the optimal occupancy under different workloads is performed in the Ph.D. thesis of Vasiliy Volkov [27]. We study the effect of occupancy tuning on the performance of the back projection kernel in Sects. 5.7 and 6.9. Both reduced and increased occupancy are found practically useful in different circumstances.

GPUs have varying limits on a number of threads allowed per block. To achieve a higher occupancy, multiple thread blocks can be scheduled on the same SM simultaneously. The maximum number of resident blocks is architecture dependent and is further restricted by the requested amount of shared memory. The required shared memory is not always proportional to the size of a thread block. The larger blocks may require less shared memory per thread. As the block is always made resident as a whole, some configurations are better mapped to available resources while other leave part of the memory unused.

3.6 Code generation

Even the fast shared memory has a relatively high latency [28]. Consequently, GPU vendors provide several mechanisms to hide this latency and preserve the high memory bandwidth. The thread is not stalled until the executed memory operation is finished. The GPU scheduler launches the operation, but proceeds issuing independent instructions from the execution flow of the thread until the requested data is actually required. If the next instruction in the flow depends on the result of the memory operation which is not completed yet, the SM puts the thread aside and schedules another resident thread as stipulated by SIMT execution model. For compute-bound applications, the optimizing compiler re-arranges instructions to interleave memory operations with independent arithmetic instructions and uses both described mechanisms to avoid performance penalties due to memory latencies [27].

If an application is memory bound, the compiler vice-versa groups multiple load operations together to benefit from streaming. The latency, then, has to be hidden only a single time for all load operations which are streamed together. This mechanism is of a great importance to perform texture fetches as a texture cache hit reduces usage of memory bandwidth, but not the fetch latency [36]. Furthermore, several 32-bit loads from the consecutive addresses may be re-combined by a compiler in a single 64- or 128-bit memory instruction. It reduces the number of issued instructions and gives the warp scheduler an opportunity to increase the Instruction Level Parallelism (ILP) by launching additional instructions in the vacated execution slots. With the Kepler architecture, this scheme may even double the shared memory bandwidth by utilizing 64-bit memory banks more efficiently. Several papers show a significant performance improvement also on other architectures [29].

The described optimizations are performed automatically by the compilers from AMD and NVIDIA. The loops are unrolled and instructions are re-arranged as necessary to increase the hardware utilization. The loop unrolling not only allows the compiler to optimize the instruction flow, but also reduces the load on the ALUs. In particular, the computation of array indexes is replaced by static offsets at compilation stage. In some cases, however, it is possible to further improve the generated code by enforcing the desired unrolling factors and by targeting the occupancy. This is discussed in Sect. 6.9. Furthermore, the data layout may be adjusted in order to give compiler more options in optimizing the code flow. The algorithm described in Sect. 5.6 relies on a large number of independent operations to compensate the low occupancy. In Sect. 6.8 we optimize the data layout to enable the re-combination of memory instructions.

3.7 Scheduling

To provide high performance, the GPU architectures include multiple components operating independently. Texture fetches, memory operations, several types of arithmetic instructions are executed by different blocks of GPU in parallel. Hence, the kernel execution time is not determined as a sum of all operations, but rather is given by the slowest execution pipeline. One strategy to implement an efficient algorithm is to balance operations between available GPU blocks uniformly and minimize the time required to execute the slowest pipeline. Using this methodology we were able to gain significant performance improvements. Section 6.6 discusses balancing of SFU and ALU operations to speed-up the linear interpolation on the Kepler architecture. Two different back-projection algorithms are combined in Sect. 7.1 to balance the load across all major GPU subsystems. As result the proposed hybrid approach outperforms the fastest of the algorithms by 40% on Pascal and Maxwell architectures.

Each SM includes one or more warp schedulers which execute instructions of resident warps. Each scheduler is able to issue either a single instruction per-clock or at each clock to dual-issue two independent instructions from the same warp. On most architectures the number of warp schedulers is synchronized with the number of independent ALU units. All available units are fully utilized if a single ALU instruction is scheduled by each warp scheduler at every clock cycle. The SM processor on Kepler, however, includes 6 sets of ALUs, but only 4 warp schedulers [41]. To achieve 100% utilization all SM schedulers are expected at each second clock cycle to select two independent instructions from the execution flow and dispatch them to 2 different sets of ALU units. The VLIW architecture adopted by the older AMD GPUs requires 4 to 5 independent instructions in the flow for optimal performance [45]. The flow of independent instructions and dual-issue capabilities are also required to utilize multiple functional blocks of GPU in parallel.

Only a little official information is available about instructions which can be schedulled in parallel. The CUDA C Programming Guide states that SFUs are used to compute approximates of transcendent functions [36]. In fact, they are also used to perform bit-mangling, type-conversions, and integer multiplication on the NVIDIA Kepler, Maxwell, and Pascal GPUs. We developed a micro-benchmark to verify if certain instructions can be dual-issued. The idea is to measure the throughput of each individual instruction and, then, compare it to throughput of their combination. The instructions are assumed to be executed by the same function unit if the combination runs slower than the slowest of the individual instructions. In particular we found out that NVIDIA GPUs starting with Kepler execute rounding, type conversion, and bit-shift operations in parallel with ALU instructions, but slow down the computation of sine and cosine approximates. Consequently, we assume that SFUs are used to execute these operations. On Maxwell and Pascal, the bit-wise operations also slow down ALU instructions slightly. Both SFUs and ALUs are used in this case. However, the decrease is small and additional ALU-operations are still possible to execute in parallel. There is no parallelism of these operations on the AMD platform.

3.8 Synchronization

The GPU memory hierarchy and a few synchronization primitives are used to efficiently coordinate work between threads. The fast shared memory is used to exchange information between threads of the same block. An even faster shuffle instruction is available on NVIDIA GPUs since the Kepler generation. It allows to exchange data stored in the registers of multiple threads belonging to the same warp [41]. Both CUDA and OpenCL provide a fast synchronization instruction which ensures that all threads of a block have completed the assigned part of the work and reached the synchronization point. This allows to split execution of a kernel in multiple phases with different thread mappings. For example in the algorithm described in Sect. 6.2, the threads are first mapped to the elements of a cache and are used to prefetch data from global memory. After synchronization the threads are re-assigned to the pixels of output image and use the cached information to compute their intensities.

The synchronization may restrict the ability of the SM schedulers to benefit from the ILP parallelism if the groups of instructions aimed on different functional units are separated by a synchronization primitive. Partial remedy is to allocate more resident blocks to SM by increasing occupancy or by using smaller blocks. Still, a well composed code usually results in better performance if it allows the compiler to re-arrange execution flow and dual-issue instructions.

3.9 Communication

Most GPUs include a pair of DMA engines and are able to perform data transfers over the PCIe bus in both directions in parallel with kernel executions. This, however, requires page-locked (non swappable) host memory. While OpenCL does not define how the page-locked memory can be obtained, in practice it can be done by allocating a host-mapped GPU buffer. This is realized by calling clCreateBuffer with CL_MEM_ALLOC_HOST_PTR flag. While only the host buffer is required in this case, the command allocates also the GPU buffer. The memory overcommitting is, however, supported on NVIDIA platform. Consequently, only the host memory is actually reserved. The corresponding GPU buffer is never accessed and, correspondingly, the GPU memory is not reserved. On the AMD platform, however, the memory is actually set aside for both buffers immediately. Consequently, the amount of GPU memory available to application is reduced. To enable parallel data transfer and computations, double buffering technique along with asynchronous CUDA/OpenCL API are typically used. The CUDA/OpenCL events are used for synchronization.

In addition to the DMA engines used for communication with the host memory, the professional series of GPUs also support a slave mode of DMA operation. In this mode the other devices on the PCIe bus are able to write data directly into the GPU memory. Starting with the Kepler micro-architecture, this feature is supported by the NVIDIA Tesla cards using the GPUDirect technology [46]. AMD provides the DirectGMA technology to enable the feature on the GCN-based AMD FirePro cards [47]. The GPUDirect technology is already used in several MPI frameworks to speed-up communication in Infiniband networks [48].

3.10 Summary

We summarize the properties of target GPUs in Table 10. Besides the hardware specification available in the vendor white papers, we present architecture-specific information obtained using micro-benchmarking and further investigate the performance balance of different operations. Only characteristics important to implement fast back-projection kernel are included. For this reason, we only report throughput of the floating-point, bit-mangling, and type-conversion instructions.

Compared to the GT200, the Fermi architecture significantly improved the arithmetic capabilities, but the texture filter rate has not changed. Instead, the texture units got the ability to fetch 64-bit data at full speed. The Fermi GPUs also lost the capability to dual-issue instructions from the same warp and are the most restricted architecture of the considered ones concerning the ability to schedule instructions to different execution pipelines in parallel. Consequently, the Fermi performance is likely improved if the number of the required instructions is reduced. One option is to organize the data in a way allowing wider 64/128-bit memory operations and texture fetches. The Kepler architecture massively improved the performance of the texture engine. But the throughput of integer, bit-mangling, and type-conversion operations has actually slowed down compared to the Fermi devices. Furthermore, the ILP become a necessity for optimal performance. On Pascal, the amount and performance of the shared memory has doubled. While the amount of available registers has not changed, the generated code is typically requires less registers. Consequently, it is either possible to achieve higher occupancy or execute more sophisticated kernels at the same occupancy.

There is a few important differences between NVIDIA and AMD platforms. AMD provides less control over the code-generation. The NVIDIA compiler can be parametrized to use less registers for generated code. This option is not available for AMD. Neither of the considered AMD devices support the half-precision extension of the OpenCL specification. While we can use the smaller data representation to reduce texture and shared memory bandwidth on NVIDIA platform, it is not possible to achieve it with AMD. On the other hand, the AMD devices are capable to perform full-speed texture filtering also using 128-bit data if the nearest-neighbor interpolation is selected. Furthermore, the ratio between the shared memory throughput and the performance of the texture engine is 2 - 4 times higher on AMD devices. Consequently, it is more likely that caching of the fetched data in the shared memory will result in performance improvements. The organization of AMD Cypress GPUs differs from the other considered architectures significantly. It has very slow constant memory and relies on ILP parallelism extensively. Five instructions has to be scheduled at each clock cycle for optimal performance. Vice-versa the GCN-based devices do not provide ILP. There is also no parallelism between floating-point and bit-mangling/type-conversion instructions. The throughput of arithmetic operations is comparatively slow and is bottleneck for the proposed algorithms. There are also minor differences between two generation of GCN platform. The first generation of GCN chips performs better if 64-bit operations are performed on the shared memory. This is not required in the second generation of the architecture anymore. Starting with GCN2, the AMD devices are capable to perform 64-bit texture fetches at full pace also if bi-linear interpolation is employed.

To build an efficient implementation of the algorithm it is important to account for the described architectural differences. Across all architectures a good locality of the texture fetches has to be ensured and optimal access patterns to global and shared memory has to be followed. It is necessary to adjust the algorithm flow to balance the load between different execution pipelines according to their hardware capabilities. Finally, also the right balance between ILP, streaming memory operations, and achieved occupancy has to be found.

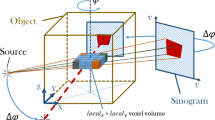

4 Tomographic reconstruction

At synchrotron imaging beamlines, information about X-ray attenuation or/and phase changes in the sample is used to reconstruct its internal structure. The objects are placed on a rotation stage in front of a pixel detector and rotated in equiangular steps. As the object rotates, the pixel detector registers a series of two-dimensional intensity images of the incident X-rays. Typically the X-rays are not detected directly, but converted to visible light using a scintillator placed between the sample and pixel detector. Then, the conventional CCD cameras are used to record intensities which actually correspond to projections of the sample volume. Due to the rather large source-to-sample distance, imaging at synchrotron light sources is usually well described by a parallel-beam geometry. The beam direction is perpendicular to the rotation axis and to the lines of the pixel detector. Therefore, the 3D reconstruction problem can be split into a series of 2D reconstructions performed with cross-sectional slices. An origin of coordinate system coincides with center of sample rotation stage and rotation axis is anti-parallel to gravity. To reconstruct a slice, the projection values are “smeared” back over the 2D cross section along the direction of incidence and are accumulated over all projection angles. To compensate blurring effects, high-pass filtering of the projection data is performed prior to back projection [21].

The typical reconstruction data flow using parallel accelerators is represented on Fig. 4. The projections are loaded into the system memory either from a storage facility or directly from a camera and, then, transferred into the GPU memory before executing pre-processing or reconstruction steps. From cameras equipped with PCIe-interface it is also possible to transfer the projections directly into the GPU memory using GPUDirect or DirectGMA technologies. The later is supported by UFO framework [15]. The loaded projections are pre-processed with a chain of filters to compensate the defects of optical system. Then, the projections are rearranged in order to group together the chunks of data required to reconstruct each slice. These chunks are called sinograms and are distributed between parallel accelerators available in the system in a round-robin fashion. Filtering and back-projection on each slice are performed on each GPU independently, the results are transferred back, and are either stored or passed further for online processing and visualization. To efficiently utilize the system resources, usually all described steps are pipelined. The output volume is divided into multiple subvolumes, each encompassing multiple slices. The data required to reconstruct each subvolume is loaded and send further trough the pipeline. While next portion of the data is loaded, the already loaded data is pre-processed, assembled in sinograms, and reconstructed. The preprocessing is significantly less computational-intensive compared to the reconstruction and is often, but not always, performed on CPUs. OpenCL, OpenMP, or POSIX threads are used to utilize all CPU cores. The pre-processed sinograms are, then, distributed between GPUs for reconstruction. For each GPU a new data pipeline is started. While one sinogram is transferred into the GPU memory, the sinograms already residing in GPU memory are first filtered, then back projected to the resulting slice, and finally transferred back to the system memory. Event-based asynchronous API and double-buffering are utilized to execute data transfer in parallel with reconstruction. Basically, such approach allows to use all system resources including Disk/Network I/O, PCIe bus, CPUs, and GPUs in parallel.

A single row from each of the projections is required to reconstruct a slice of output 3D volume. These rows are grouped together in a sinogram. For the sake of simplicity, we refer to these rows as projections while discussing reconstruction from sinograms. Each slice is reconstructed independently. First, each sinogram row is convolved with a high-pass filter to reduce blurring - an effect inherent to back-projection. The convolution is normally performed as multiplication in Fourier domain. The implementation is based on available FFT libraries. NVIDIA cuFFT is used on CUDA platform and either AMD clFFT or Apple oclFFT is utilized for OpenCL. For optimal FFT performance, multiple sinogram rows are converted to and from FFT domain together using batched transformation mode. After filtering, the buffer with filtered sinograms is either bind to texture on CUDA platform or copied into the texture if OpenCL is used. The pixel-driven approach is used to compute back-projection. For each pixel (x, y) of the resulting slice, the impact of all projections is summed. This is done by computing the positions \(r_p\) where the corresponding back projection rays are originated and interpolating the values of projection bins around this position.

If \(\alpha\) is an angle between consecutive projections, the positions are computed as follows:

As computation of trigonometric function is relatively slow on all GPU architectures, the values of \(\cos (p\alpha )\) and \(\sin (p\alpha )\) are normally pre-computed on CPU for all projections, transferred to GPU constant memory, and, then, re-used for each slice. Assuming this optimization, the back projection performance is basically determined by how fast the interpolation could be made. Two interpolation modes are generally used. The nearest neighbor interpolation is faster and better at preserving the edges while the linear interpolation reconstructs the texture better. While more sophisticated interpolation algorithms can be used as well, they are significantly slower and are rarely if ever used. All reviewed reconstruction frameworks rely on GPU texture engine to perform interpolation. This technique was first proposed in the beginning of the nineties for the SGI RealityEngine [55].

5 Back-projection based on texture engine

The standard implementation described in previous section performs fairly good. The compilers included in the CUDA Framework and AMD APPSDK are optimize the execution flow automatically. The loops are unrolled and the operations are re-arranged to allow streaming texture loads as explained in the Sect. 3.6. Still, the default implementation does not utilize all capabilities of texture engine and significant improvement can be achieved on all architectures.

5.1 Standard version

First, we will detail how the standard implementation works. Each GPU thread is responsible for a single pixel of output slice and loops over all projections to sum the contribution from each one. At each iteration, a projection is performed to find a coordinate where the ray passing through the reconstructed image pixel hits the detector. The value at the corresponding position in the sinogram row is fetched using the texture engine and summed up with the contributions from other projections. The texture engine is configured to perform either nearest-neighbor or linear interpolation as desired. The projection is computed according to Eq. 1. To align the coordinate system with rotational axis, the position of the rotational axis is first subtracted from the pixel coordinates and, then, added to the computed detector coordinate to find the required position in the sinogram. To compensate for possible distortions of imaging system during the experiment, the rotational center is not constant, but may include per-projection corrections. Sine and cosine of each projection angle as well as the corrected position of the rotation axis are read from a buffer in the constant memory which is generated during the initialization phase. The computation grid is split in square blocks of 16-by-16 threads. It results in optimal occupancy across all considered platforms. The corresponding pseudo-code is presented in Algorithm 1.

The CUDA platform supports two slightly different approaches to manage textures: the texture reference API and the texture object API [36]. The texture reference API is universal and is supported by all devices. The texture object API is only supported since Kepler architecture. While the reference API can be used on all devices, as we found out the object API outperforms it on the devices with compute compatibility 3.5 and later. Therefore, we use the reference API for GT200, Fermi, and the first generation of Kepler devices and the object API for all newer architectures.

5.2 Multi-slice reconstruction

The texture engines integrated in all recent generations of GPUs are capable filter 8-byte data at the full pace, see Sect. 3.4. The standard reconstruction algorithm can benefit from this feature only if changed to double-precision for better accuracy. But this have a little use in practice. In parallel tomography, however, exactly the same operations are performed for all the reconstructed slices. Therefore, it is possible to reconstruct multiple slices in parallel if the back projection operator is applied to a compound sinogram which encodes bins from the several individual sinograms as vector data. Particularly, it is possible to construct such sinogram using float2 vector type and interleave values from one sinogram as x components and from another as y, see Fig. 5. With float2-typed texture mapped on this interleaved sinogram, it is possible to fully utilize the bandwidth of the texture engine and reconstruct two slices in parallel. The interleaving is done as an additional data preparation step between filtering and back projection steps. The back projection kernel is, then, adjusted to use the float2 type and writes the x component of the result into the first output slice and the y component into the second. There is a considerable speed-up on all relevant architectures as can be seen on Fig. 6.

The figure evaluates efficiency of optimizations proposed for texture-based back-projection kernel. The measured throughput is compared to the maximum filter rate of a texture engine and the performance is reported as a percent of achieved utilization. The results are reported also for processing multiple slices in parallel. The nearest-neighbour interpolation is used to measure performance if 4 slices are reconstructed in parallel. On NVIDIA platform the data is also stored in the half float data representation in this case. For single- and dual-slice reconstruction, the performance is measured for bi-linear interpolation mode and the sinogram is stored in the single-precision floating point format on all platforms. The blue bars show performance of the standard Algorithm 1 just modified to process multiple slices in parallel. The green bars show improvements due to a better fetch locality. The red bars show the maximum performance achieved by using Algorithm 3 with optimal combination of tweaks explained through Sect. 5. Table 13 summarizes the architecture-specific parameters used at each GPU. The utilization is reported according to the supported filter rate, not the bandwidth. While the lower utilization is achieved for multi-slices reconstruction modes, the actual performance is higher as 2-/4-slices are processed using a single texture access

5.3 Using half-precision data representation

Since the NVIDIA texture engine is currently limited to 8-byte vectors, the proposed approach can’t be scaled to 4 slices if the single-precision input is used. However, CUDA supports half-precision data type which encodes each floating-point number using 16 bits only. While reduced precision might affect the quality of reconstruction, the majority of cameras has only a dynamic range of 16 bits or bellow. High-speed cameras actually used for time-resolved synchrotron tomography have even a lower resolution of 10-12 bits only. Since the higher frequencies in a sinogram are amplified during the filtering step, it is impossible to represent the filtered sinograms by integer numbers without loss of precision. However, using a half-precision floating-point representation to store the input data should have a limited impact on the resulting image quality if all further arithmetic operations are performed in single-precision. Unfortunately, the half-precision textures are not supported in the latest available version of CUDA yet (CUDA 8.0). While one can store the half-precision numbers in the GPU memory, it is impossible to map the corresponding texture. Still, it is possible to speed-up the reconstruction if the nearest-neighbor interpolation mode is selected. After filtering, the sinograms are down-sampled to the half-precision format and interleaved. The texture-mapping is created using the float2 data type. Upon request the texture engine returns the nearest value without performing any operations on it. Therefore, the appropriate data is returned even if an incompatible format is configured. It is important that the data size is correct. To avoid further penalty to the precision, the half-precision numbers are immediately casted to single-precision using __half22float2 instruction and all further operations are performed in single-precision as usual.

The Fig. 6 indicates a significant speed-up on all NVIDIA architectures except Kepler. As can be seen from Table 10, the type casting is very slow on Kepler and caps the performance gains. The proposed method is also not viable on AMD platform. Neither of the considered AMD GPUs support half-precision extension of OpenCL specification. Without this extension, no hardware instruction is available to convert between half-precision and single-precision. While such conversion can be performed using several bit mangling operations, it would cap the possible performance gain as well.

The penalty to the quality of the reconstruction induced by reduced precision is evaluated in Figs. 7 and 8. It is negligible for both synthetic Shepp Logan phantom and the selected dataset with the measured data. However, the behavior for different real-world measurements may vary, especially if projections are obtained using a camera with high dynamic range. As the optimization proposed in this subsection changes the reconstruction results, it is important to verify that the achieved quality is still satisfactory for the considered application.

Comparison of two reconstructions of the Shepp Logan Head Phantom using a single-precision (red) or half-precision (green) input. A profile (top) and absolute difference between reconstructions (bottom) are shown along the line crossing maximum of features on the phantom. Due to very small differences between reconstructions, the red and green lines completely overlap in the top plot

Comparison of two reconstructions of the fossilized wasp dataset using a single-precision (red) or half-precision (green) input. A profile (top) and absolute difference between reconstructions (bottom) are shown along the selected line crossing multiple features. Due to very small differences between reconstructions, the red and green lines completely overlap in the top plot

5.4 Efficiency of the standard algorithm

The Fig. 6 evaluates efficiency of texture engine utilization. While performance in a single-slice processing mode is close to theoretical maximum on a majority of the considered architectures, the efficiency drops significantly if multiple slices are reconstructed in parallel. The AMD cards and the cards based on the NVIDIA Kepler architecture show sub-optimal performance also in a single-slice reconstruction mode.

As was discussed in Sect. 3.7, GPU architectures include multiple functional blocks operating independently. The performance of the GPU application is typically restricted by the slowest and/or most loaded of these blocks. Secondly, complex algorithms require a large amount of hardware resources like registers and shared memory. Large footprint on resources may constrain parallelism and, consequently, limit an GPU ability to hide memory latencies and schedule load across all available functional units. The discussed algorithm relies on:

-

Texture engine to fetch and interpolate data

-

ALUs to find the ray incidence point

-

Constant memory to load projection constants

-

The SFU units are used for type conversions and integer multiplication on the recent NVIDIA devices. The major load is from conversion between half- and single-precision formats in 4-slice reconstruction mode. The SFUs are also used for addressing constant memory arrays and to convert a loop index to the texture-coordinate along the projection axis.

The standard algorithm has a small register footprint and all GPUs provide enough computing power to find incidence points. The performance of the texture engine, however, is sub-optimal across all architectures if multi-slice reconstruction is performed. The reason is the bad locality of the texture fetches. The AMD GPUs are also restricted by the performance of the texture cache if only a single-slice is reconstructed. On top of that, the Kepler and AMD VLIW systems have comparatively slow constant memory which also bounds the performance bellow the theoretical throughput. Finally, the low SFU performance on the Kepler GPUs restricts the reconstruction if half-float format is used to store the sinograms. More information about GPU capabilities and the relative performance of GPU components is given in Table 10.

5.5 Optimizing locality of texture fetches

The standard algorithm maps each GPU thread to a single pixel of output slice. The default mapping is linear: the thread with coordinates (x, y) in a computational grid is used to reconstruct the pixel with coordinates (x, y) in a slice. Since every thread in a wrap reconstructs consecutive pixel along x axis, a large range of sinogram bins is always accessed. Up to 16 different locations is fetched by a warp if 16-by-16 thread blocks are utilized. As it was discussed in the Sect. 3.4, the locality of fetches within a block, a warp, and also within a group of 4 consecutive threads is important to keep the texture engine running at full speed.

To improve the locality of the texture fetches, a new thread-to-pixel mapping is proposed. The thread blocks assignments are kept exactly the same as in the standard version. I.e. each block of 256 threads is responsible for an output area of 16-by-16 pixels. However, this area is further subdivided into 4-by-4 pixel squares. Within each square, the threads are mapped along Z-order curve as illustrated in Fig. 10, left. Then, a group of 4 threads fetches positions in a sinogram row which are maximum 3 bins apart. And only up to 5 elements are required to perform corresponding linear interpolations. The data required for 16 threads is limited to 8 bins only. Table 11 shows the effect of remapping for the 1- and 2-slice reconstruction on NVIDIA Titan X GPU. According to Fig. 6 a significant speed-up is also achieved on other architectures unless the performance is also capped by other factors.

The pseudo-code to compute the new thread indexes is given in Algorithm 2. The only required modification in Algorithm 1 is to use the updated indexes \(m_t'\) in place of ones reported by CUDA/OpenCL.

5.6 Optimizing memory bandwidth

Even though the new thread mapping gives a significant speed-up on a majority of considered architectures, the performance on Kepler and AMD VLIW GPUs is still bound by the slow constant memory. To process a projection, GPU threads load several geometric constants to locate point of incidence as defined in Eq. 1. These constants can be re-used multiple times if each GPU thread would reconstruct several pixels. Since pixels are reconstructed independently, it will also increase the number of independent instructions in the execution flow and improve a scheduler ability to hide memory latencies and to issue multiple instructions per clock. There are two approaches how to adapt thread-to-pixel mapping. Either the number of threads in a computational grid is reduced proportionally or a new mapping scheme is constructed in a way that the same amount of threads is running but each thread contributes to multiple resulting pixels. The later can be achieved by processing several projections in parallel. Then, each thread is responsible for a group of pixels but loops over a subset of all projections only. Another thread would contribute to the same group of pixels but from a different subset of projections.

Both methods perform similarly if properly optimized for the target GPU. Using the second approach, however, the dimensions of computational grid stay unchanged. Consequently, it has advantage for region of interest (ROI) and small-scale reconstructions. For this reason, we focus on this method and elaborate how it is implemented and tuned to run efficiently across platforms. To preserve good locality of texture fetches, the mapping described in previous section is adapted with small changes. The thread blocks assignments are kept the same. Each block is responsible for an output area of 16-by-16 pixels and this area is further subdivided into 4-by-4 pixel squares. In contrast to original mapping, however, 64 threads are assigned per square. Each thread is responsible to compute a contribution to the pixel value from a quarter of all available projections. Hence, each thread processes 4 pixels and each pixel is reconstructed using 4 threads. To avoid costly atomic operations, the contributions of the projection subsets are summed independently. Then, the threads are re-assigned to perform reduction in the shared memory and compute the final value of a pixel. To preserve a good spatial locality of the texture fetches, 4 neighboring projections are processed in parallel and the threads step over 4 projections at each loop iteration.

There are 256 threads in a block and 64 threads are assigned to reconstruct each 4-by-4 pixel square. Therefore, 4 such squares are processed in parallel and a complete set of 16 squares requires 4 steps of a loop. Figure 9 shows several possible sequences to serialize processing. The first mapping is sparse and results in a reduced cache hit rate as compared to the other options. Since only a single pixel coordinate has to be incremented in a pixel loop, the third option requires less registers compared to the second. While the second mapping has a better access locality within the 64-thread warps of the AMD platform, it does not affect performance in practice. On other hand, the register usage is very high in multi-slice reconstruction modes and the extra registers cause reduced occupancy or the spillage of registers into the local memory. Therefore, the third approach is preferred.

The figure illustrates several ways to assign a block of GPU threads to an area of 16-by-16 pixels. Since 4 projections are processed at once, only 64 threads are available for entire area and it take 4 steps to process it completely. For each possible scheme in gray are shown all pixels which are processed during the first step in parallel. The first mapping (left) is sparse and results in increased cache misses. The second mapping (center) requires more registers and may cause reduced occupancy. So, the third mapping (right) is preferred

A request to multiple locations in the constant memory by a warp is serialized on NVIDIA platform. To avoid such serialization, all threads of a warp are always assigned to the same projection. The following mapping scheme is adopted. The lowest 4 bits of the thread number in a block define the mapping within a 4-by-4 pixel square. A group of 16 threads follows Z-curve as explained in the Sect. 5.5. Next 2 bits define a square and the top 2 bits define the processed projection. Figure 10 illustrates the proposed mapping and Algorithm 2 provides the corresponding pseudo-code.

Mapping of a block with 256 threads to reconstruct a square of 16-by-16 pixels along 4 projections. 4 steps are required to process all pixels. A group of 64 consecutive threads is responsible to process a rectangular area of 16 by 4 pixels (middle). 4 projections are processed in parallel using 4 such groups (right). Each 4-by-4 pixel square is reconstructed by 16 threads arranged along Z-order curve (left). For each output pixel or block of pixels, the assigned range of threads is shown in the figure

The pseudo-code for the complete approach is presented in Algorithm 3. There are two distinct processing steps. First the partial sums are computed in an 4-element array. It is declared as a local variable and both NVIDIA and AMD compilers are able to back it with registers because of the fixed size. The outer loop starts from the first projection assigned to a thread and steps over the projections which are processed in parallel. The large loop-unrolling factor requested with pragma preprocessor directive has a positive impact on performance, especially on Kepler architecture. At each iteration constants are loaded and inner loop is executed to process 4 pixels the thread is responsible for. After completion of all projections, the reduction loop is executed. The partial sums are written into shared memory and reduction is performed. To avoid non-coalesced global memory writes, first all results are stored in a shared memory buffer \(\tilde{r}^S\) and, then, written in the coalesced manner. The synchronization is needed when switching different mapping modes. Since each reduction is performed by a single warp only, it is sufficient to prevent compiler from reordering read and write operations in-between of reduction steps using fence operation. Alternatively, the shuffle operation may be utilized to perform reduction on Kepler and newer NVIDIA architectures. Then, neither fence nor if-condition are required. The reduction loop using the shuffle instruction is shown in Algorithm 4.

On GTX295 using CUDA6, there are a few glitches significantly affecting performance. The fence instruction prevents unrolling of the reduction loop. Consequently, the array with partial sums is referenced indirectly using the loop index. This forces the compiler to allocate array in the local memory instead of using registers and causes enormous penalty to the performance. Therefore, a standard __syncthreads is used instead. The loop is also not unrolled if the inner reduction loop is implemented directly as written in Algorithm 3. The following formulation causes no issues:

The GPU constant memory is optimized with the assumption that always the same constants are accessed by all threads of a computational grid. Since the new algorithm goes over several projections in parallel, this assumption is not valid any more. While the proposed mapping avoids major penalty due to warp serialization, slow constant memory is still a bottleneck on older AMD devices. To avoid performance penalty, faster and larger shared memory is used instead in this case. The projection constants are initially stored in global GPU memory and, then, are cached in shared memory. The Algorithm 5 contains alternative implementation of the accumulation step for Algorithm 3. Shared memory is additionally configured to store constants for up to 256 projections. In fact, the same shared memory buffer may be used in the both steps of algorithm, first for caching constants and later for a data exchange while performing reduction. An outer loop processing blocks of 256 projections is introduced. At each iteration of the loop, the threads of a block are, first, used to read the constants from global memory and fill the cache. To allow 64-bit loads, we use a float2 variable to store values of both trigonometric functions. After synchronization, the inner projection loop is started to compute partial sums. The inner loop is implemented as in Algorithm 3 with only difference that constants are loaded from shared memory. This method, however, cannot be used across all platforms. While majority of NVIDIA GPUs showed similar performance for both implementations, Kepler-based GPUs perform better if constant memory is utilized.

5.7 Optimizing occupancy

Similarly to the standard algorithm, the optimized version can be easily adapted to process 2- and 4-slices in parallel. Only accumulators and intermediate buffers have to be declared with the appropriate vector type. However, the usage of hardware resources grows significantly if multiple slices are processed in parallel. In a 4-slice mode, 16 registers (32-bit each) are required only to accumulate the partial sums. The large register footprint reduces occupancy and may result in a sub-optimal performance unless treated properly.