Abstract

The importance of cultural and natural heritage documentation is well recognized at the international level, and there is increasing pressure to document and preserve heritage digitally as well. The continuous development of new sensors, data capture methodologies, and multi-resolution 3D representations, together with the improvement of existing ones, can contribute significantly to the 3D documentation, conservation, and digital presentation of heritage and to the growth of research in this field. The article reviews some important documentation requirements and specifications, the current 3D surveying and modeling techniques and methodologies with their limitations and potential, as well as some visualization issues involved in the heritage field. Some examples of 3D documentation of world heritage sites are reported and discussed.

Introduction

The heritage sites of the world (natural, cultural, or mixed) suffer from wars, natural disasters, weather changes, and human negligence. According to UNESCO, a heritage can be seen as an arch between what we inherit and what we leave behind. In recent years, great efforts have focused on what we inherit as cultural heritage and on its documentation, in particular for visual man-made or natural heritage, which has received a lot of attention and has benefited from sensor and imaging advances. The importance of cultural heritage documentation is well recognized, and there is increasing pressure to document and preserve heritage digitally as well. Therefore, 3D data are nowadays a critical component for permanently recording the shapes of important objects so that they might be passed down to future generations. This has firstly produced a large number of projects, mainly led by research groups, which have realized complete digital models of very good quality (Levoy et al. 2000; Beraldin et al. 2002; Stumpfel et al. 2003; Guidi et al. 2004; Gruen et al. 2004; Ikeuchi et al. 2007; El-Hakim et al. 2008; Guidi et al. 2009a; Remondino et al. 2009a) and secondly has prompted the creation of guidelines describing standards for correct and complete documentation.

The current technologies and methodologies for cultural heritage documentation (Ikeuchi and Miyazaki 2008) allow the generation of very realistic 3D results (in terms of geometry and texture) used for many purposes, such as archaeological documentation, digital conservation, restoration, VR/CG applications, 3D repositories and catalogs, web geographic systems, and visualization. However, despite all the possible applications and the constant pressure of international organizations, a systematic and well-judged use of 3D models in the cultural heritage field is still not the default approach, for several reasons: (a) the “high cost” of 3D, (b) the difficulty for non-experts of achieving good 3D models, (c) the perception that 3D is an optional process of interpretation (an additional “aesthetic” factor) and documentation (2D is enough), and (d) the difficulty of integrating 3D worlds with other more standard 2D material. Yet the availability and use of 3D computer models of heritage opens a wide spectrum of further applications and permits new analyses, studies, interpretations, and conservation policies, as well as digital preservation and restoration. Thus, virtual heritage (Fig. 1) should be used more and more frequently, both because of the great advantages that digital technologies offer the heritage world and in order to meet the documentation needs stated in the numerous charters and resolutions. This contribution reviews some important documentation requirements and specifications, the current surveying and modeling methodologies with their limitations and potential, as well as some visualization and preservation issues involved in the heritage field. Some examples related to the 3D documentation and modeling of world heritage sites are also presented and discussed.

Reality-based 3D modeling

“It is essential that the principles guiding the preservation and restoration of ancient buildings should be agreed and be laid down on an international basis, with each country being responsible for applying the plan within the framework of its own culture and traditions” (The Venice Charter, i.e., The International Charter for the Conservation and Restoration of Monuments and Sites, 1964). Even if this was stated more than 40 years ago, the need for a clear, rational, standardized terminology and methodology, as well as an accepted professional principle and technique for interpretation, presentation, and digital documentation, is still not evident. Furthermore “...Preservation of the digital heritage requires sustained efforts on the part of governments, creators, publishers, relevant industries and heritage institutions. In the face of the current digital divide, it is necessary to reinforce international cooperation and solidarity to enable all countries to ensure creation, dissemination, preservation and continued accessibility of their digital heritage” (UNESCO Charter on the Preservation of the Digital Heritage, 2003). Therefore, even once digitally recorded and modeled, our heritage requires more international collaboration and information sharing to preserve it digitally and make it accessible in all possible forms and to all possible users and clients. Nowadays, the digital documentation and 3D modeling of cultural heritage should always consist of (Patias 2004, 2007):

-

Recording and processing of a large amount of 3D (possibly 4D) multi-source, multi-resolution, and multi-content information

-

Management and conservation of the achieved 3D (4D) models for further applications

-

Visualization and presentation of the results to distribute the information to other users allowing data retrieval through the Internet or advanced online databases

-

Digital inventories and sharing for education, research, conservation, entertainment, walkthrough, or tourism purposes

Techniques

The continuous development of new sensors, data capture methodologies, multi-resolution 3D representations, and the improvement of existing ones are contributing significantly to the documentation, conservation, and presentation of heritage information and to the growth of research in the cultural heritage field. This is also driven by the increasing requests and needs for digital documentation of archaeological sites at different scales and resolutions.

A technique is intended as a scientific procedure (e.g., image processing) to accomplish a specific task while a methodology is a group or combination of techniques and activities combined to achieve a particular task. Reality-based techniques (e.g., photogrammetry, laser scanning, etc.; Gruen 2008) employ hardware and software to survey the reality as it is, documenting the actual or as-built situation of a site and reconstructing it from real data. Non-real approaches are instead based on computer graphics software (3D Studio, Maya, Sketchup, etc.) or procedural modeling approaches (Mueller et al. 2006), and they allow the generation of 3D data without any particular survey or knowledge of a site (Fig. 2).

The generation of reality-based 3D models of heritage sites and objects is nowadays performed using methodologies based on passive sensors and image data (Remondino and El-Hakim 2006), active sensors and range data (Blais 2004), classical surveying (e.g., total stations or GPS), 2D maps (Yin et al. 2009), or an integration of the aforementioned techniques (El-Hakim et al. 2004; Guidi et al. 2004; De Luca et al. 2006; Stamos et al. 2008; Remondino et al. 2009a). The choice or integration depends on the required accuracy, object dimensions, location constraints, the system’s portability and usability, surface characteristics, the working team’s experience, the project’s budget, the final goal, etc. Although the image-based approach has considerable potential and has recently advanced in automated and dense image matching (Goesele et al. 2006; Remondino et al. 2008; Hiep et al. 2009; Hirschmueller 2008), the usability by non-experts and the reliability of optical active sensors (with related range-based modeling software) are still much higher in certain projects, even though these sensors are time-consuming and expensive to use. Nevertheless, many discussions are still open on which approach and technique is better in which situation. So far the best answer is the combination and integration of the different sensors and techniques, in particular when surveying large and complex sites. Indeed, the generation of digital 3D models of large heritage sites for documentation and conservation purposes requires a technique with the following properties:

-

Accuracy: Precision and reliability are two important factors of the surveying work, unless the work is done for simple and quick visualization.

-

Portability: The technique for terrestrial acquisitions should be portable because of the accessibility problems of many sites, the absence of electricity, location constraints, etc.

-

Low cost: Most archaeological and documentation missions have limited budgets, and they cannot afford expensive surveying instruments.

-

Fast acquisition: Most sites or excavation areas allow only limited time for documentation, so as not to disturb works or visitors.

-

Flexibility: Due to the great variety and dimensions of sites and objects, the technique should allow different scales and it should be applicable in any possible condition.

As all these properties are rarely found in a single technique, most surveying projects related to large and complex sites integrate and combine multiple sensors and techniques in order to achieve more accurate and complete surveying, modeling, interpretation, and digital conservation results.

3D range sensors

Optical range sensors (Blais 2004; Vosselman and Maas 2010) such as pulsed, phase-shift, and triangulation-based laser scanners or stripe projection systems have received great attention in recent years, also from non-experts, for 3D documentation and modeling purposes. Range sensors directly deliver ranges (i.e., distances, and thus 3D information in the form of unstructured point clouds) and are becoming quite common in the heritage field, despite their high cost, their weight, and the usual lack of good texture. During the survey, the instrument must be placed in different locations, or the object must be moved, so that the instrument can see it from different viewpoints. Subsequently, the raw 3D data require the removal of errors and outliers, noise reduction, and sometimes hole filling before the data are aligned (registered) into a single reference system in order to produce a single point cloud of the surveyed scene or object. The registration is generally done in two steps: (a) manual or automatic rough alignment using targets or the data itself and (b) final global alignment based on iterative closest point (Salvi et al. 2007) or least squares (Gruen and Akca 2005) procedures. After the global alignment, redundant points should be removed before a surface model is produced and textured. The range-based modeling pipeline is quite straightforward, and many commercial or open source packages are available (Cignoni and Scopigno 2008).
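The fine-alignment step (b) can be illustrated with a minimal point-to-point iterative closest point sketch. It assumes the two scans are already roughly aligned, uses brute-force nearest-neighbor search (only practical for small clouds), and estimates each rigid update with an SVD-based least squares fit. The function names `best_rigid_transform` and `icp` are illustrative and do not come from the cited works, whose variants are considerably more elaborate.

```python
import numpy as np

def best_rigid_transform(src, dst):
    """Least-squares rotation R and translation t such that R @ src_i + t ~ dst_i."""
    src_c = src.mean(axis=0)
    dst_c = dst.mean(axis=0)
    # Cross-covariance of the centered point sets
    H = (src - src_c).T @ (dst - dst_c)
    U, _, Vt = np.linalg.svd(H)
    # Force a proper rotation (determinant +1), not a reflection
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, 1.0, d]) @ U.T
    t = dst_c - R @ src_c
    return R, t

def icp(src, dst, iterations=30, tol=1e-10):
    """Align src (N x 3) to dst (M x 3); returns the accumulated R, t and the moved points."""
    R_total = np.eye(3)
    t_total = np.zeros(3)
    moved = src.copy()
    prev_err = np.inf
    for _ in range(iterations):
        # Brute-force closest point in dst for every moved source point
        d2 = ((moved[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nn = d2.argmin(axis=1)
        err = np.sqrt(d2[np.arange(len(moved)), nn].mean())
        R, t = best_rigid_transform(moved, dst[nn])
        moved = moved @ R.T + t
        # Compose the incremental update with the transform found so far
        R_total = R @ R_total
        t_total = R @ t_total + t
        if abs(prev_err - err) < tol:
            break
        prev_err = err
    return R_total, t_total, moved
```

Because the correspondences are re-estimated from proximity at every iteration, the result depends on the quality of the rough alignment from step (a); a poor starting pose can make the iteration settle in a wrong local minimum.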

According to Beraldin et al. (2007), the 3D scanning results are a function of:

-

Intrinsic characteristics of the instrument (calibration, measurement principle, etc.)

-

Characteristics of the scanned material in terms of reflection, light diffusion, and absorption (amplitude response)

-

Characteristics of the working environment

-

Coherence of the backscattered light (phase randomization)

-

Dependence on the chromatic content of the scanned material (frequency response)

Terrestrial range sensors work from very short ranges (a few centimeters) up to a few kilometers, depending on surface properties and environment characteristics, delivering 3D data with a positioning accuracy from some hundreds of microns up to some millimeters. Range sensors, coupled with GPS/INS sensors, can also be used on airborne platforms (generally called LiDAR or airborne laser scanning; Shan and Toth 2008), mainly for digital terrain model (DTM)/digital surface model (DSM) generation and city modeling. LiDAR data generally represent a DSM; therefore, for many applications, filtering and reduction are required to obtain a DTM.
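As a rough illustration of the DSM-to-DTM filtering mentioned above, the following sketch applies a gray-scale morphological opening to a gridded DSM. This is only one simple family of ground filters and is not necessarily the one used in the cited work; the function names, the window size, and the height tolerance are assumptions chosen for illustration.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view

def _window_filter(grid, size, reducer):
    """Apply reducer (np.min or np.max) over a size x size moving window (size must be odd)."""
    pad = size // 2
    padded = np.pad(grid, pad, mode="edge")
    windows = sliding_window_view(padded, (size, size))
    return reducer(windows, axis=(2, 3))

def dsm_to_dtm(dsm, window=5, height_tol=0.5):
    """Estimate a DTM from a gridded DSM with a morphological opening.

    window must be larger than the footprint (in cells) of the objects to remove;
    cells rising more than height_tol above the opened surface count as non-ground.
    """
    eroded = _window_filter(dsm, window, np.min)     # removes objects narrower than the window
    opened = _window_filter(eroded, window, np.max)  # restores the extent of the terrain
    ground = (dsm - opened) <= height_tol
    dtm = np.where(ground, dsm, opened)
    return dtm, ground
```

A single fixed window is a strong simplification: it removes only objects narrower than the window and can flatten sharp terrain features, which is why practical filters usually vary the window size or add slope criteria.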

The main research issues involved in range-based data processing and modeling are the automated extraction of features (like man-made objects) and the automated generation of structured 3D data from the recorded 3D point clouds.

Image-based methods

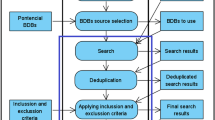

Image data require a mathematical formulation to transform the 2D image measurements into 3D information. Generally, at least two images (Fig. 3) are required, and 3D data can be derived using perspective or projective geometry formulations. Image-based modeling techniques (mainly photogrammetry and computer vision; Remondino and El-Hakim 2006) are generally preferred in cases of lost objects, monuments or architectures with regular geometric shapes, small objects with free-form shape, mapping applications, deformation analyses, low budgets, good experience of the working team, and time or location constraints for the data acquisition and processing. Among the available image acquisition platforms (space, airborne, and terrestrial), of particular interest are UAVs, i.e., unmanned aerial vehicles (like model helicopters), which can fly in an autonomous mode using integrated GPS/INS, a stabilizer platform, and digital cameras (or even a small range sensor) and which can be used to acquire data from otherwise hardly accessible areas. Image-based 3D modeling generally requires some user interaction in the different steps of the 3D reconstruction and modeling pipeline, which restricts its use mainly to experts. The pipeline (Fig. 4) is generally composed of different steps which can be performed in an automated or interactive way, according to the user requirements and project specifications. Accurate feature extraction from satellite and aerial images is still a manually driven procedure. In terrestrial applications, more automation is available for scene reconstruction. Fully automated methods based on a “structure from motion” approach (Pollefeys et al. 2004; Vergauwen and Van Gool 2006; Goesele et al. 2007; Agarwal et al. 2009) are becoming quite common in the 3D heritage community, although they are mainly useful for visualization, object-based navigation, annotation transfer, or image browsing purposes and not for metric and accurate 3D reconstruction and documentation.
However, the automation of the procedures has reached a significant maturity, with the capability to orient huge numbers of images (Snavely et al. 2008), and open source programs are also available (e.g., Bundler or its graphical implementation Photosynth). But complete automation in image-based modeling is still an open research topic, in particular for the 3D surveying and modeling of architectural scenes and man-made objects (Patias et al. 2008). Nevertheless, the camera calibration and image orientation steps can be achieved fully automatically (Barazzetti et al. 2009), as can the surface measurement and the texturing for a large number of free-form objects (Remondino et al. 2008), but user interaction is still necessary for geo-referencing and quality control.
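The requirement of at least two images can be made concrete with a linear two-view triangulation sketch. It assumes that both cameras are already calibrated and oriented, so that their 3 × 4 projection matrices `P1` and `P2` are known, and that the image measurements are free of lens distortion. The function names are illustrative; a photogrammetric bundle adjustment would additionally weight the observations and deliver precision estimates.

```python
import numpy as np

def project(P, X):
    """Project a 3D point X with a 3x4 projection matrix P to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]

def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one 3D point from two image measurements.

    Each measurement (u, v) contributes two homogeneous linear equations:
    u * P[2] - P[0] = 0 and v * P[2] - P[1] = 0.
    """
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    # The solution is the right singular vector of the smallest singular value
    _, _, Vt = np.linalg.svd(A)
    X = Vt[-1]
    return X[:3] / X[3]
```

With a single image, the system would contain only two equations for three unknowns, which is why additional object constraints or shape cues are needed in the single-image case.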

3D information can also be derived from a single image using object constraints (Van den Heuvel 1998; Criminisi et al. 1999; El-Hakim 2000) or by estimating surface normals instead of image correspondences (shape from shading (Horn and Brooks 1989), shape from texture (Kender 1978), shape from specularity (Healey and Binford 1987), shape from contour (Meyers et al. 1992), and shape from 2D edge gradients (Winkelbach and Wahl 2001)).

Photogrammetry is considered the primary technique for the processing of image data, being able to deliver at any scale of application accurate and detailed 3D information with estimates of precision and reliability of the unknown parameters from measured image correspondences (tie points). The correspondences can be extracted automatically or semi-automatically according to the object and project requirements. Photogrammetry is employed in different applications like mapping, 3D documentation, conservation, digital restoration, reverse engineering, monitoring, visualization, animation, urban planning, deformation analysis, etc. Photogrammetric 3D reconstructions are generally performed with interactive procedures for man-made objects or architectural structures where sparse point clouds and few geometric primitives are sufficient to describe the 3D geometry (Fig. 5). Automated image matching procedures are instead employed for free-form objects where dense point clouds are required to correctly describe all the object discontinuities and features (Fig. 6).

Many authors (Pomaska 2001; D’Ayala and Smars 2003; Heritage 2005) reported how the photogrammetric image-based approach allows surveys at different levels and in all possible combinations of object complexities, with high quality requirements, easy usage and manipulation of the final products, few time restrictions, good flexibility, and low costs. Different comparisons between photogrammetry and range sensors were also presented in the literature (Böhler 2005; Remondino et al. 2005; Grussenmeyer et al. 2008).

Multi-sensor and multi-source data integration

As previously mentioned, nowadays, the state-of-the-art approach for the 3D documentation and modeling of large and complex sites uses and integrates multiple sensors and technologies (photogrammetry, laser scanning, topographic surveying, etc.) to (a) exploit the intrinsic potentials and advantages of each technique, (b) compensate for the individual weaknesses of each method alone, (c) derive different geometric levels of detail (LOD) of the scene under investigation, and (d) achieve more accurate and complete geometric surveying for modeling, interpretation, representation, and digital conservation issues. 3D modeling based on multi-scale data and multi-sensors integration is indeed providing the best 3D results in terms of appearance and geometric detail. Each LOD is showing only the necessary information while each technique is used where best suited.

Since the 1990s, multiple data sources were integrated for industrial, military, and mobile mapping applications. Sensor and data fusion were then applied also in the cultural heritage domain, mainly at terrestrial level (Stumpfel et al. 2003; El-Hakim et al. 2004), although some projects mixed and integrated satellite, aerial, and ground information for a more complete and multi-resolution 3D survey (Gruen et al. 2005; Rönholm et al. 2007; Guidi et al. 2009a).

The multi-sensor and multi-resolution concept (Fig. 7) should be distinguished between (a) geometric modeling (3D shape acquisition, registration, and further processing) where multiple resolutions and sensors are seamlessly combined to model features with the most adequate sampling step and derive different geometric LOD of the scene under investigation and (b) appearance modeling (texturing, blending, simplification, and rendering) where photo-realistic representations are sought taking into consideration variations in lighting, surface specularity, seamless blending of the textures, user’s viewpoint, simplification, and LOD.

Besides images acquired in the visible part of the light spectrum, it is often necessary to acquire extra information provided by other sensors working in different spectral bands (e.g., IR, UV) in order to study the object in more depth. Thermal infrared information is useful to analyze historical buildings and their state of conservation and to reveal padding, older layers, and the back structure of frescoes, while near IR is used to study paintings, revealing pentimenti and preparatory drawings. UV radiation, on the other hand, is very useful in heritage studies to identify different varnishes and over-paintings, in particular with induced visible fluorescence imaging systems (Pelagotti et al. 2006). All this multi-modal information needs to be aligned with, and often overlaid on, the geometric data for information fusion, multi-spectral analysis, or other diagnostic applications (Remondino et al. 2009b).

Standards in digital 3D documentation

Many image-based modeling packages as well as range-based systems have come onto the market in the last decades to allow the digital documentation and 3D modeling of objects or scenes. Many new users are approaching these methodologies, and those who are not really familiar with them need clear statements and information to know whether a package or system satisfies certain requirements before investing. Therefore, technical standards for the 3D imaging field must be created, like those available for traditional surveying or CMM. Apart from standards, comparative data and best practices are also needed, to show not only the advantages but also the limitations of systems and software. In this respect, the German VDI/VDE 2634 contains acceptance testing and monitoring procedures for evaluating the accuracy of close-range optical 3D vision systems (particularly for full-frame range cameras and single scan). The American Society for Testing and Materials, with its E57 standards committee, is trying to develop standards for 3D imaging systems for applications like surveying, preservation, construction, etc. The International Association for Pattern Recognition (IAPR) created the Technical Committee 19 (Computer Vision for Cultural Heritage Applications) with the goal of promoting computer vision applications in cultural heritage and their integration in all aspects of IAPR activities. TC19 aims at stimulating the development of components (both hardware and software) that can be used by researchers in cultural heritage like archaeologists, art historians, and curators, and by institutions like universities, museums, and research organizations.

As far as the presentation and visualization of the achieved 3D models are concerned, the London Charter (http://www.londoncharter.org/) seeks to define the basic objectives and principles for the use of 3D visualization methods in relation to intellectual integrity, reliability, transparency, documentation, standards, sustainability, and access of cultural heritage.

The Open Geospatial Consortium (OGC) developed GML3, an extensible international standard for spatial data exchange. GML3 and other OGC standards (mainly the OpenGIS Web Feature Service Specification) provide a framework for exchanging simple and complex 3D models. The CityGML standard was created on the basis of GML3; it is an open data model and XML-based format for storing, exchanging, and representing 3D urban objects and, in particular, virtual city models.

Problems and bottlenecks

The current problems and main challenges in the 3D surveying of large and complex sites or objects arise in every phase, from the data acquisition to the visualization of the achieved 3D results. The great challenges lie in selecting the appropriate methodology (sensor, hardware, software) and the appropriate data processing procedure, designing the production workflow, ensuring that the final result meets all the given technical specifications, and being able to display and interact fluently with the achieved 3D model.

During the data acquisition and processing for large sites 3D modeling, in particular in those projects realized mainly with active optical sensors, we should consider that:

-

Reality-based surveying and 3D modeling is highly dependent on the quality of the acquired or available data.

-

The huge amount of (range) data makes processing at high resolution difficult and very time-consuming, yet processing at low resolution creates accuracy problems and a possible loss of geometric detail.

-

Combining data acquired with different sensors, at different geometric resolution, and under different viewpoints can affect the overall accuracy of the entire 3D model if not properly considered and afterward merged.

-

Even when several sensors are combined, some gaps and holes can still be present in the produced 3D model; these must be filled with interpolated surface patches so that they do not remain visible and unpleasant.

-

The sampling distance used in scanning is rarely optimal for the entire site or object, producing under-sampled regions where edges and high-curvature surfaces are present and over-sampled regions in flat areas.

Data acquisition

In case of satellite and aerial images, the availability of the data could be a problem due to weather conditions or restrictions on flights. For terrestrial acquisition, size, location, and surface (geometry and material) of the object or site can create several problems. The dimensions and accessibility problems (due to location, obstructions, rough or sloped terrain with stones, rocks and holes, unfavorable weather conditions, etc.) can cause delays, occlusions, and can result in missing sections or enforce wide-baseline images and poor geometric configurations. The complexity of some parts can create self-occlusions or holes in the coverage, in addition to the occlusions from plants, trees, restoration scaffolds, or tourists. The absence of high platforms for a higher location of the data acquisition might cause missing parts, e.g., for the roofs.

For active sensors, the object material (e.g., marble) often has an important influence on the acquired data since it can cause penetration (Godin et al. 2001; Lichti and Harvey 2002; Guidi et al. 2009b) or bad reflection effects. Moreover, transportability and usability problems arise in certain field campaigns located in remote areas.

Data processing and point cloud generation

For image-based approaches, terrestrial digital cameras must be accurately calibrated, preferably in a controlled lab environment, with a 3D testfield and a bundle adjustment solution with additional parameters to fully compensate for systematic errors (Remondino and Fraser 2006). As no commercial procedure is readily available for automated markerless tie point extraction from terrestrial convergent images, the image orientation phase is still highly interactive, although some recent works seem promising in terms of both accuracy and automation (Barazzetti et al. 2009). In the case of aerial and satellite imagery, more automation is present in the data processing, although the control points still need to be measured manually. For the surface measurement, manual and semi-automated measurements are still much more reliable, in particular for complex architectural scenes or man-made objects. For small free-form objects or richly detailed ornaments, dense matching techniques can instead be applied to derive dense 3D point clouds (Remondino et al. 2008).
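The text does not list the additional parameters themselves. A commonly used set, assumed here for illustration, is the Brown model with a principal point offset (`x0`, `y0`), three radial terms (`k`), and two decentering terms (`p`). The sketch below only evaluates the image coordinate corrections for given parameter values; in a self-calibrating bundle adjustment these values would be estimated together with the orientation parameters. The function name and the sign convention are assumptions, and sign conventions differ between software packages.

```python
import numpy as np

def brown_correction(x, y, x0=0.0, y0=0.0, k=(0.0, 0.0, 0.0), p=(0.0, 0.0)):
    """Image coordinate corrections (dx, dy) from radial and decentering distortion.

    x, y   : measured image coordinates (same units as x0, y0)
    x0, y0 : principal point offset
    k      : radial distortion coefficients (k1, k2, k3)
    p      : decentering distortion coefficients (p1, p2)
    """
    xb = np.asarray(x, dtype=float) - x0
    yb = np.asarray(y, dtype=float) - y0
    r2 = xb**2 + yb**2
    # Radial term: k1*r^2 + k2*r^4 + k3*r^6
    radial = k[0] * r2 + k[1] * r2**2 + k[2] * r2**3
    dx = xb * radial + p[0] * (r2 + 2 * xb**2) + 2 * p[1] * xb * yb
    dy = yb * radial + 2 * p[0] * xb * yb + p[1] * (r2 + 2 * yb**2)
    return dx, dy
```

The radial part grows with the distance from the principal point, so uncorrected distortion affects the image borders most, which is where wide-baseline terrestrial networks often place their tie points.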

As far as range-based approaches are concerned, the first operations performed on the acquired data are the removal of possible errors and outliers, noise reduction, and hole filling (Weyrich et al. 2004), followed by the alignment (or registration) of the multiple scans (Salvi et al. 2007). The registration phase is quite straightforward, although the identification of homologous points between the overlapping point clouds is still fully interactive unless some targets are placed in the surveyed scene.

3D modeling

Once a point cloud (i.e., unstructured data) is available, a polygonal model (i.e., structured data) needs to be generated to produce the best digital representation of the surveyed object or scene. For architectural scenes and objects, generally described with sparse point clouds, a segmentation and structuring phase is necessary before producing a mesh model. Dense point clouds derived with automated image matching methods or measured with range sensors can be directly converted into meshes, following some possible editing and cleaning. Repairs to close holes and to fix incorrect faces or non-manifold parts are then often necessary, and they are demanding and time-consuming. Such errors are visually unpleasant, might cause lighting blemishes due to incorrect normals, and make the computer model unsuitable for reverse engineering or physical replicas. Moreover, over-sampled areas should be simplified while under-sampled regions should be subdivided. Finally, photo-realism, defined as having no difference between a view rendered from the model and a photograph taken from the same viewpoint, is generally required and is achieved in the texture mapping phase, e.g., by projecting one or more images (or orthophotos) onto the 3D geometry. Problems generally arise from the time-consuming image-to-geometry registration or from variations in lighting, surface specularity, and camera settings. The images record the illumination present at imaging time, which may need to be replaced by illumination consistent with the rendering point of view and the reflectance properties (bidirectional reflectance distribution function) of the object (Lensch et al. 2003). High dynamic range (HDR) images might also be acquired to recover all scene details (Reinhard et al. 2005), while color discontinuities and aliasing effects must be removed (Debevec et al. 2004; Umeda et al. 2005; Callieri et al. 2008).

Realistic visualization of the 3D results

The ability to easily interact with a huge 3D model is a continuing and increasing problem, in particular with the new demand of sharing and offering online and real-time visualizations. Indeed, model sizes (both in geometry and texture) are increasing at faster rate than computer hardware and software advances, and this limits the possibilities for interactive and real-time visualization of the 3D results. Due to the generally large amount of data and its complexity, the rendering of large 3D models is done with a multi-resolution approach displaying large textured meshes with different levels of detail and simplification approaches (Luebke et al. 2002; Cignoni et al. 2005; Dietrich et al. 2007).
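The multi-resolution idea can be sketched on point data with a voxel-grid reduction, in which every occupied voxel is replaced by the centroid of its points and several voxel sizes yield several levels of detail. This is a deliberately simple stand-in: the cited approaches operate on textured meshes with far more sophisticated simplification and out-of-core strategies, and the function names below are illustrative.

```python
import numpy as np

def voxel_downsample(points, voxel_size):
    """Replace all points falling into the same voxel by their centroid."""
    # Integer voxel index of every point
    keys = np.floor(points / voxel_size).astype(np.int64)
    _, inverse, counts = np.unique(
        keys, axis=0, return_inverse=True, return_counts=True
    )
    inverse = inverse.reshape(-1)  # guard against shape differences between NumPy versions
    sums = np.zeros((counts.size, points.shape[1]))
    np.add.at(sums, inverse, points)  # accumulate coordinates per voxel
    return sums / counts[:, None]

def build_lods(points, voxel_sizes):
    """Return one reduced point set per voxel size, e.g. from fine to coarse."""
    return [voxel_downsample(points, size) for size in voxel_sizes]
```

A viewer could then select the level whose voxel size roughly matches the on-screen footprint of a pixel at the current viewing distance, which keeps the number of rendered primitives bounded regardless of the size of the full model.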

Examples

The Etruscan necropolis of Tarquinia (Italy)

Tarquinia and Cerveteri are the two large Etruscan cemeteries, with different types of burial practices from the ninth to the first century BC, bearing witness to the achievements of Etruscan culture, which over nine centuries developed the earliest urban civilization in the northern Mediterranean. Some of the tombs are monumental, cut in rock, and topped by impressive tumuli (burial mounds). Many feature carvings on their walls; others have wall paintings of outstanding quality. The necropolis near Cerveteri, known as Banditaccia, contains thousands of tombs organized in a city-like plan, with streets, small squares, and neighborhoods. The site contains very different types of tombs: trenches cut in rock, tumuli, and some, also carved in rock, in the shape of huts or houses with a wealth of structural details. These provide the only surviving evidence of Etruscan residential architecture. The necropolis of Tarquinia, also known as Monterozzi, contains 6,000 graves cut in the rock. It is famous for its 200 painted tombs, the earliest of which date from the seventh century BC (http://whc.unesco.org). A multi-resolution and multi-modal 3D model of one important grave in Tarquinia (“Caccia e Pesca”, composed of two rooms, each spanning approximately 5 × 5 × 2 m) was realized as a pilot project for the “Soprintendenza per i Beni Archeologici dell’ Etruria Meridionale” and in collaboration with the company Art-Test (http://www.art-test.com), which was responsible for the multi-spectral data acquisition. As a surveying strategy, we selected the most appropriate method in relation to the research goals and the object’s scale. The acquired data comprise:

- Geometric data: a TOF scanner survey acquired a large amount of range data for the exterior (ten stations @ 1 cm geometric resolution, ca. 2 million points) and the underground interior rooms (13 stations @ 4 mm sampling step, ca. 14 million points). After the geometric alignment and data reduction, a complete mesh was produced for further rendering, interactive visualization, and archaeological documentation purposes.

- Appearance data, consisting of:

  - Visible images for texturing purposes: For the photo-realistic rendering of the final 3D model, ca. 160 HDR textures were acquired with a 13.5 Mpixel Kodak DCS camera pre-calibrated in the lab at a focal length setting of 50 mm. A constant illumination in the underground rooms was achieved using cold neon lights (to avoid heating effects on the frescoes) and a spot-meter.

  - Multi-spectral images for diagnostic studies: On some selected areas, visible reflectance, IR reflectography, and UV-induced fluorescence images were acquired using interferential filters in front of a calibrated cooled CCD camera. Those images were afterward calibrated, registered, processed, and overlaid onto the 3D geometry to perform quantitative analysis and to differentiate pigments, whether visible, hidden, or no longer perceptible to the naked eye. Indeed, materials that have the same color under a certain light may have different chemical compositions and reflectance spectra and can therefore be distinguished with multi-spectral imaging.

The integration of the different data sources was essential to overcome the limits of each individual method and to obtain complete geometric and appearance information about the materials and techniques used to build the heritage.
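The observation that two pigments can look alike in visible light yet differ spectrally can be illustrated with a simple similarity measure between reflectance spectra. The sketch below uses the spectral angle, a common choice in multi-spectral analysis but not necessarily the method used in this project; the band layout and reflectance values are invented for illustration only.

```python
import math

def spectral_angle(spectrum_a, spectrum_b):
    """Angle (radians) between two reflectance spectra sampled at the same bands."""
    if len(spectrum_a) != len(spectrum_b):
        raise ValueError("spectra must have the same number of bands")
    dot = sum(a * b for a, b in zip(spectrum_a, spectrum_b))
    norm_a = math.sqrt(sum(a * a for a in spectrum_a))
    norm_b = math.sqrt(sum(b * b for b in spectrum_b))
    if norm_a == 0.0 or norm_b == 0.0:
        raise ValueError("spectra must not be all zero")
    # Clamp to guard against rounding slightly outside [-1, 1].
    cosine = max(-1.0, min(1.0, dot / (norm_a * norm_b)))
    return math.acos(cosine)

# Hypothetical reflectances in bands [blue, green, red, near-IR 1, near-IR 2].
pigment_original = [0.10, 0.12, 0.45, 0.50, 0.55]
pigment_retouch = [0.10, 0.12, 0.45, 0.15, 0.10]

# Restricted to the visible bands the two samples are indistinguishable,
# while the full spectrum separates them clearly.
angle_visible = spectral_angle(pigment_original[:3], pigment_retouch[:3])
angle_full = spectral_angle(pigment_original, pigment_retouch)
```

In this made-up case the angle over the three visible bands is essentially zero, whereas including the infrared bands yields a clearly non-zero angle, which is the kind of separation that allows non-original colors to be flagged.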

Fig. 8 reports some results of the geometric and appearance modeling, together with examples of the multi-spectral data. The final photo-realistic 3D model with its multi-spectral layers is now a fundamental basis for conservation and restoration policies, helping the local superintendence to prevent the vanishing of the frescoes and to restore the damaged areas correctly.

Fig. 8 The geometric model of the underground tomb in Tarquinia with the stairs and its two rooms (a). Two views of the photo-realistic 3D model of the grave, with the second room in the background (b) and a closer view of the frescoes (c). RGB false color (d) and UV fluorescence (e) of an investigated area. Diagnostic analysis of colored pigments and identification of non-original colors (f)

Pompeii and its Roman Forum

When Vesuvius erupted on 24 August A.D. 79, it engulfed the two flourishing Roman towns of Pompeii and Herculaneum, as well as the many wealthy villas in the area. These have been progressively excavated and made accessible to the public since the mid-nineteenth century. The vast expanse of the commercial town of Pompeii contrasts with the smaller but better-preserved remains of the holiday resort of Herculaneum, while the superb wall paintings of the Villa Oplontis at Torre Annunziata give a vivid impression of the opulent lifestyle enjoyed by the wealthier citizens of the Early Roman Empire (http://whc.unesco.org).

The large and complex Roman Forum (approximately 150 × 80 m, with more than 300 scattered archaeological finds on the ground) was digitally reconstructed (Fig. 9a) by integrating aerial images (1:3,500 scale, 5 cm GSD), TOF terrestrial laser scanning (1.2 billion points), close-range images (ca. 5,000), and GPS data for the absolute geo-referencing (Guidi et al. 2009a). The geometric resolution of the 3D data spans from some 20 cm (from the aerial data) down to a few millimeters (3D models of reliefs derived with terrestrial photogrammetry), with an intermediate LOD given by the TOF range data. Some objects of particular archaeological interest were geometrically modeled in high resolution (Fig. 9b) by means of an advanced image matching method (Remondino et al. 2008).
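The aerial figures quoted above are tied together by the standard relation between ground sample distance (GSD), image scale, and pixel size: GSD = pixel size × scale denominator. The short sketch below applies it to the two quoted values; the resulting pixel size is only what those numbers imply under that relation and is not stated in the article.

```python
def ground_sample_distance(pixel_size_m, scale_denominator):
    """GSD on the ground (metres) for an image of scale 1:scale_denominator."""
    return pixel_size_m * scale_denominator

def implied_pixel_size(gsd_m, scale_denominator):
    """Pixel size in the image plane (metres) implied by a GSD and an image scale."""
    return gsd_m / scale_denominator

# Values quoted in the text: 1:3,500 image scale and 5 cm GSD.
pixel_size_m = implied_pixel_size(0.05, 3500)
pixel_size_um = pixel_size_m * 1e6  # roughly 14 micrometres
```

The same two functions can be used the other way round at planning time, to check which image scale is needed to reach a target GSD with a given sensor.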

Fig. 9 The entire Roman Forum in Pompeii (ca. 180 × 50 m with more than 300 scattered finds on the ground) digitally reconstructed integrating photogrammetry and TOF scanning (a). High-resolution 3D modeling of a bas-relief realized with advanced image matching techniques (b). 3D modeling and semantic classification of an archaeological find for the successive connection with the information contained in a database (c)

The entire 3D model of the forum was afterward linked to the existing archaeological databases of the superintendence (Fig. 9c). The link between the database and the 3D model was implemented in two directions: (a) from the geometrical 3D data to the archaeological 2D data, to explain historical and conservation details of a specific artifact in the forum, and (b) from a specific document or philological detail to its corresponding location in 3D space (Manferdini et al. 2008).
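A two-way link of this kind can be pictured as a pair of indexes kept in sync, one keyed by model segment and one keyed by database record. The following is a minimal sketch under that assumption; the class, the segment identifiers, and the record names are hypothetical and do not reflect the actual implementation described by Manferdini et al. (2008).

```python
from collections import defaultdict

class ModelDatabaseLink:
    """Two-way index between 3D model segments and archive records."""

    def __init__(self):
        self._records_by_segment = defaultdict(set)
        self._segments_by_record = defaultdict(set)

    def link(self, segment_id, record_id):
        # Store the association in both directions so either lookup is direct.
        self._records_by_segment[segment_id].add(record_id)
        self._segments_by_record[record_id].add(segment_id)

    def records_for(self, segment_id):
        """Direction (a): from a 3D segment to its archive records."""
        return sorted(self._records_by_segment.get(segment_id, ()))

    def segments_for(self, record_id):
        """Direction (b): from an archive record to its 3D segments."""
        return sorted(self._segments_by_record.get(record_id, ()))

# Hypothetical identifiers, for illustration only.
links = ModelDatabaseLink()
links.link("forum/column_017", "excavation_report_1932")
links.link("forum/column_017", "restoration_sheet_88")
links.link("forum/altar_003", "excavation_report_1932")
```

Keeping both indexes means that a click on an artifact in the 3D viewer and a query starting from a document are both simple lookups, at the cost of storing each association twice.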

The prehistoric stela of Laces (Italy)

A prehistoric stela dating back to 2800–2400 B.C. was found in 1992 by H. Nothdurfter beneath the altar of an eighteenth-century church in Laces, near Bolzano (Italy). Stelae are stones shaped by human activity and carved with scenes representing daily life, humans, animals, instruments, etc. The digital reconstruction of the find in Laces was performed with a triangulation-based laser scanner, while separate high-resolution images were acquired for the texturing. Afterward, the different figures and realization phases carved on the stela were digitized and stored in separate layers to allow their individual analysis and interpretation (Fig. 10). Together with other discovered stelae, the find was presented by the archaeologists in an exhibition, Uomini di Pietra (Men of stone), which showed the new analyses and interpretations made possible by the digital data.

Conclusions and outlook

The article reviewed the current 3D surveying and modeling methodologies for reality-based 3D documentation of heritage sites and objects. Limitations and potentialities of the techniques were also presented. In the case of heritage sites and objects, photogrammetry provides accurate 3D reconstructions at different scales as well as hybrid 3D models (e.g., terrain model plus archaeological structures, as shown in Fig. 11). Nowadays, 3D scanners are also becoming a standard source of 3D data in many application areas, but image-based modeling remains the most complete, cheap, portable, flexible, and widely used approach, although for large sites the integration with range sensors is generally the best solution.

Although 3D documentation is not yet standard practice in the heritage field, the reported examples show the potential of modern surveying technologies to digitally document and preserve our heritage as well as to share and manage it. It is clear, however, that the image-based 3D documentation approach, together with active optical sensors, spatial information systems, 3D modeling procedures, and visualization and animation software, are all still in a dynamic state of development, with even better application prospects for the near future.

References

Agarwal S, Snavely N, Simon I, Seitz S, Szeliski R (2009) Building Rome in a day. In: Proc. ICCV2009, Kyoto, Japan

Barazzetti L, Remondino F, Scaioni M (2009) Combined use of photogrammetric and computer vision techniques for fully automated and accurate 3d modeling of terrestrial objects. In: Videometrics, range imaging and applications X, proc of SPIE Optics+Photonics, vol 7447, San Diego, CA, USA

Beraldin JA, Picard M, El-Hakim SF, Godin G, Valzano V, Bandiera A, Latouche D (2002) Virtualizing a Byzantine crypt by combining high resolution textures with laser scanner 3d data. In: Proc of VSMM 2002, pp 3–14

Beraldin A, Rioux M, Cournoyer L, Blais F, Picard M, Pekelsky J (2007) Traceable 3d imaging metrology. In: Proc. videometrics IX—SPIE electronic imaging proceedings, vol 6491, pp B.1–B–11

Blais F (2004) A review of 20 years of range sensors development. J Electron Imaging 13(1):231–240

Böhler W (2005) Comparison of 3D scanning and other 3D measurement techniques. In: Baltsavias E, Gruen A, Van Gool L, Pateraki M (eds) Recording, modeling and visualization of cultural heritage. Taylor & Francis, London, pp 299–313. ISBN 0-415-39208-X

Callieri M, Cignoni P, Corsini M, Scopigno R (2008) Masked photo blending: mapping dense photographic dataset on high-resolution sampled 3d models. Comput Graph 32(4):464–473

Cignoni P, Scopigno R (2008) Sampled 3d models for CH applications: a viable and enabling new medium or just a technological exercise. ACM Journal on Computing and Cultural Heritage 1(1):Article 2

Cignoni P, Ganovelli F, Gobbetti E, Marton F, Ponchio F, Scopigno R (2005) Batched multi triangulation. In: Proc IEEE visualization, pp 207–214

Criminisi A, Reid I, Zisserman A (1999) Single view metrology. In: Proc international conference on computer vision, pp 434–442

D’Ayala D, Smars P (2003) Minimum requirement for metric use of non-metric photographic documentation. University of Bath Report

De Luca L, Veron P, Florenzano M (2006) Reverse engineering of architectural buildings based on a hybrid modeling approach. Comput Graph 30:160–176

Debevec P, Tchou C, Gardner A, Hawkins T, Poullis C, Stumpfel J, Jones A, Yun N, Einarsson P, Lundgren T, Fajardo M, Martinez P (2004) Estimating surface reflectance properties of a complex scene under captured natural illumination. USC ICT Technical Report ICT-TR-06

Dietrich A, Gobbetti E, Yoon SE (2007) Massive-model rendering techniques: a tutorial. IEEE Comput Graph Appl 27(6):20–34

El-Hakim S (2000) A practical approach to creating precise and detailed 3d models from single and multiple views. Int Arch Photogramm Remote Sens 33(B5):122–129

El-Hakim S, Beraldin J, Picard M, Godin G (2004) Detailed 3d reconstruction of large-scale heritage sites with integrated techniques. IEEE Comput Graph Appl 24(3):21–29

El-Hakim S, Beraldin J, Remondino F, Picard M, Cournoyer L, Baltsavias E (2008) Using terrestrial laser scanning and digital images for the 3d modelling of the Erechtheion, Acropolis of Athens. In: Proc of DMACH conference on digital media and its applications in cultural heritage, Amman, Jordan, pp 3–16

Godin G, Rioux M, Beraldin J, Levoy M, Cournoyer L (2001) An assessment of laser range measurement of marble surfaces. In: Proc. of the 5th conference on optical 3-D measurement techniques, Vienna, Austria, pp 49–56

Goesele M, Curless B, Seitz SM (2006) Multi-view stereo revisited. In: Proc. CVPR 2006, pp 2402–2409

Goesele M, Snavely N, Curless B, Hoppe H, Seitz SM (2007) Multi-view stereo for community photo collections. In: Proc. ICCV 2007, Rio de Janeiro, Brazil

Gruen A (2008) Reality-based generation of virtual environments for digital earth. Int J Digital Earth 1(1):88–106

Gruen A, Akca D (2005) Least squares 3d surface and curve matching. ISPRS J Photogramm Remote Sens 59(3):151–174

Gruen A, Wang X (2008) Integration of landscape and city modeling: the pre-Hispanic site Xochicalco. Int Arch Photogramm Remote Sens 34(5/W3) (on CD-ROM)

Gruen A, Remondino F, Zhang L (2004) Photogrammetric reconstruction of the Great Buddha of Bamiyan. The Photogrammetric Record 19(107):177–199

Gruen A, Remondino F, Zhang L (2005) The Bamiyan project: multi-resolution image-based modeling. In: Baltsavias E, Gruen A, Van Gool L, Pateraki M (eds) Recording, modeling and visualization of cultural heritage. Taylor & Francis, London, pp 45–53. ISBN 0-415-39208-X

Grussenmeyer P, Landes T, Voegtle T, Ringle K (2008) Comparison methods of terrestrial laser scanning, photogrammetry and tacheometry data for recording of cultural heritage buildings. Int Arch Photogramm Remote Sens Spat Inf Sci 37(5):213–218

Guidi G, Beraldin JA, Atzeni C (2004) High accuracy 3d modeling of cultural heritage: the digitizing of Donatello. IEEE Trans Image Process 13(3):370–380

Guidi G, Remondino F, Russo M, Menna F, Rizzi A, Ercoli S (2009a) A multi-resolution methodology for the 3d modeling of large and complex archaeological areas. Int J Archit Comput 7(1):40–55

Guidi G, Remondino F, Russo M, Spinetti A (2009b) Range sensors on marble surfaces: quantitative evaluation of artefacts. In: Videometrics, range imaging and applications X, proc. of SPIE optics+photonics, San Diego, CA, USA, vol 7447

Healey G, Binford T (1987) Local shape from specularity. In: Proc ICCV 1987 London, UK

English Heritage (2005) Metric survey specifications for English Heritage. English Heritage. Available at www.english-heritage.org.uk

Hiep V, Keriven R, Labatut P, Pons J (2009) Towards high-resolution large-scale multi-view stereo. In: Proc. CVPR2009, Kyoto, Japan

Hirschmueller H (2008) Stereo processing by semi-global matching and mutual information. IEEE Trans Pattern Anal Mach Intell 30(2):328–341

Horn B, Brooks M (1989) Shape from shading. MIT, Cambridge, 586 pp. ISBN: 978-0-262-08183-2

Ikeuchi K, Miyazaki D (2008) Digitally archiving cultural objects. Springer, New York, 503 pp. ISBN: 978-0-387-75806-0

Ikeuchi K, Oishi T, Takamatsu J (2007) Digital bayon temple—e-monumentalization of large-scale cultural-heritage objects. In: Proc. ASIAGRAPH, vol 1, no 2, pp 99–106

Kender J (1978) Shape from texture. In: Proc. DARPA IU workshop

Lensch HPA, Kautz J, Goesele M, Heidrich W, Seidel HP (2003) Image-based reconstruction of spatial appearance and geometric detail. ACM Trans Graph 22(2):234–257

Levoy M, Pulli K, Curless B, Rusinkiewicz S, Koller D, Pereira L, Ginzton M, Anderson S, Davis J, Ginsberg J, Shade J, Fulk D (2000) The digital Michelangelo project: 3d scanning of large statues. In: SIGGRAPH computer graphics proceedings, pp 131–144

Lichti DD, Harvey BR (2002) The effects of reflecting surface properties on time-of-flight laser scanner measurements. Int Arch Photogramm 34(4)

Luebke D, Reddy M, Cohen J, Varshney A, Watson B, Huebner R (2002) Level of detail for 3D graphics. Morgan Kaufmann, San Francisco, 432 pp. ISBN: 1-55860-838-9

Manferdini AM, Remondino F, Baldissini S, Gaiani M, Benedetti B (2008) 3d modeling and semantic classification of archaeological finds for management and visualization in 3d archaeological databases. In: Proc. of 14th int. conference on virtual systems and multimedia (VSMM 2008), pp 221–228

Meyers D, Skinner S, Sloan K (1992) Surfaces from contours. ACM Trans Graph 11(3):228–258

Mueller P, Wonka P, Haegler S, Ulmer A, Van Gool L (2006) Procedural modeling of buildings. In: Proc. of ACM SIGGRAPH 2006/ACM transactions on graphics, vol 25, no 3, pp 614–623

Patias P (2004) Overview of applications of close-range photogrammetry and vision techniques in architecture and archaeology. In: McGlone C (ed) Manual of photogrammetry, vol 59(3). American Society of Photogrammetry, Maryland, pp 1044–1050

Patias P (2007) Cultural heritage documentation. In: Fryer J, Mitchell H, Chandler J (eds) Application of 3D measurement from images, vol 59(3). Whittles, Dunbeath, pp 225–257

Patias P, Grussenmeyer P, Hanke K (2008) Applications in cultural heritage documentation. In: Advances in photogrammetry, remote sensing and spatial information sciences. 2008 ISPRS congress book, vol 7, pp 363–384

Pelagotti A, Pezzati L, Piva A, Del Mastio A (2006) Multispectral UV fluorescence analysis of painted surfaces. In: Proceedings of 14th European signal processing conference (EUSIPCO), Firenze, Italy

Pollefeys M, Van Gool L, Vergauwen M, Verbiest F, Cornelis K, Tops J, Koch R (2004) Visual modelling with a hand-held camera. Int J Comput Vis 59(3):207–232

Pomaska G (2001) Image acquisition for digital photogrammetry using “off the shelf” and metric cameras. In: CIPA international symposium, Potsdam

Reinhard E, Ward G, Pattanaik S, Debevec P (2005) High dynamic range imaging: acquisition, display and image-based lighting. Morgan Kaufmann, San Francisco

Remondino F, El-Hakim S (2006) Image-based 3d modelling: a review. The Photogrammetric Record 21(115):269–291

Remondino F, Fraser C (2006) Digital camera calibration methods: considerations and comparisons. Int Arch Photogramm 36(5):266–272

Remondino F, Guarnieri A, Vettore A (2005) 3d modeling of close-range objects: photogrammetry or laser scanning? In: Proc. of videometrics VIII, SPIE-IS&T electronic imaging, vol 5665, pp 216–225

Remondino F, El-Hakim S, Gruen A, Zhang L (2008) Development and performance analysis of image matching for detailed surface reconstruction of heritage objects. IEEE Signal Process Mag 25(4):55–65

Remondino F, El-Hakim S, Girardi S, Rizzi A, Benedetti S, Gonzo L (2009a) 3d virtual reconstruction and visualization of complex architectures—the 3d-arch project. Int Arch Photogramm Remote Sens Spat Inf Sci 38(5/W10) (on CD-ROM)

Remondino F, Pelagotti A, Del Mastio A, Uccheddu F (2009b) Novel data registration techniques for art diagnostics and 3d heritage visualization. In: IX conference on optical 3D measurement techniques, vol 1, pp 1–10

Rönholm P, Honkavaara E, Litkey P, Hyyppä H, Hyyppä J (2007) Integration of laser scanning and photogrammetry. Int Arch Photogramm Remote Sens Spat Inf Sci 36(2/W52):355–362

Salvi J, Matabosch C, Fofi D, Forest J (2007) A review of recent range image registration methods with accuracy evaluation. Image Vis Comput 25(5):578–596

Shan J, Toth C (2008) Topographic laser ranging and scanning: principles and processing. CRC, Boca Raton, 590 pp. ISBN: 978-1420051421

Snavely N, Seitz S, Szeliski R (2008) Modelling the world from internet photo collections. Int J Comput Vis 80(2):189–210

Sonnemann T, Sauerbier M, Remondino F, Schrotter G (2006) Reality-based 3d modeling of the Angkorian temples using aerial images. In: Proc. 2nd international conference on remote sensing in archaeology, Vienna, Austria, pp 573–579

Stamos I, Liu L, Chen C, Wolberg G, Yu G, Zokai S (2008) Integrating automated range registration with multiview geometry for photorealistic modelling of large-scale scenes. Int J Comput Vis 78(2–3):237–260

Stumpfel J, Tchou C, Yun N, Martinez P, Hawkins T, Jones A, Emerson B, Debevec P (2003) Digital reunification of the Parthenon and its sculptures. In: Proc. of virtual reality, archaeology and cultural heritage (VAST 2003), pp 41–50

Umeda K, Shinozaki M, Godin G, Rioux M (2005) Correction of color information of a 3d model using a range intensity image. In: Proc. of 5th international conference on 3-D digital imaging and modeling (3DIM 2005), pp 229–236

Van den Heuvel F (1998) 3d reconstruction from a single image using geometric constraints. ISPRS J Photogramm Remote Sens 53(6):354–368

Vergauwen M, Van Gool L (2006) Web-based 3d reconstruction service. Mach Vis Appl 17(6):411–426

Vosselman G, Maas H (2010) Airborne and terrestrial laser scanning. CRC, Boca Raton, 318 pp. ISBN: 978-1904445-87-6

Weyrich T, Pauly M, Keiser R, Heinzle S, Scandella S, Gross M (2004) Post-processing of scanned 3d surface data. In: Eurographics symposium on point-based graphics, pp 85–94

Winkelbach S, Wahl F (2001) Shape from 2D edge gradient. In: Pattern recognition, vol 2191. Springer, New York

Yin X, Wonka P, Razdan A (2009) Generating 3d building models from architectural drawings: a survey. IEEE Comput Graph Appl 29(1):20–30

Acknowledgements

The authors are very grateful to Prof. A. Gruen and Dr. M. Sauerbier (ETH Zurich, Switzerland), Prof. G. Guidi and Dr. M. Russo (Politecnico of Milan, Italy), Dr. S. El-Hakim and Dr. A.J. Beraldin (NRC Canada), S. Girardi (FBK Trento, Italy), S. Benedetti (Benedetti Graphics), A. Pelagotti (INOA-CNR, Italy), Dr. L. Marras (Art-Test, Italy), Dr. S. Campana and M. Sordini (University of Siena, Italy), Dr. B. Benedetti (SNS Pisa, Italy), and Dr. A.M. Manferdini and Prof. M. Gaiani (Univ. of Bologna, Italy), who were co-authors of some publications and collaborators in the research and field work for the reported projects and examples.

Open Access

This article is distributed under the terms of the Creative Commons Attribution Noncommercial License (https://creativecommons.org/licenses/by-nc/2.0), which permits any noncommercial use, distribution, and reproduction in any medium, provided the original author(s) and source are credited.

Cite this article

Remondino, F., Rizzi, A. Reality-based 3D documentation of natural and cultural heritage sites—techniques, problems, and examples. Appl Geomat 2, 85–100 (2010). https://doi.org/10.1007/s12518-010-0025-x
