Abstract

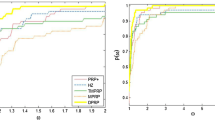

Modified Hestense–Stiefel, Polak–Ribière–Polyak and Liu–Storey conjugate gradient methods are developed using some new techniques. The proposed methods can generate sufficient descent directions without any line search. Under some conditions, global convergence results of the methods are established when the Wolfe or Armijo line search is used. Moreover, the \(r\)-linear convergence rate of the methods are analyzed. Numerical comparisons are given with some existing conjugate gradient methods using the unconstrained optimization problems in the CUTEr library.

Similar content being viewed by others

References

Al-Baali M (1985) Descent property and global convergence of the Fletcher–Reeves method with inexact line search. IMA J. Numer. Anal. 5:121–124

Bongartz I, Conn AR, Gould NIM, Toint PL (1995) CUTE: constrained and unconstrained testing environments. ACM Trans. Math. Softw. 21:123–160

Dai YH, Yuan Y (1996) Convergence properties of the Fletcher–Reeves method. IMA J. Numer. Anal. 16(2):155–164

Dai YH, Yuan Y (1996) Convergence properties of the conjugate descent method. Adv. Math. 25(6):552–562

Dai YH, Yuan Y (2000) A nonlinear conjugate gradient method with a strong global convergence property. SIAM J. Optim. 10:177–182

Dai YH, Liao LZ (2001) New conjugate conditions and related nonlinear conjugate gradient. Appl. Math. Optim. 43:87–101

Dolan ED, Moré JJ (2002) Benchmarking optimization software with performance profiles. Math. Program. 91:201–213

Flectcher R (1987) Practical method of optimization I: unconstrained optimization. Wiley, New York

Fletcher R, Reeves C (1964) Function minimization by conjugate gradients. Comput. J. 7:149–154

Gilbert JC, Nocedal J (1992) Global convergence properties of conjugate gradient methods for optimization. SIAM. J. Optim. 2:21–42

Grippo L, Lucidi S (1997) A globally convergent version of the Polak–Ribière gradient method. Math. Program. 78:375–391

Hager WW, Zhang H (2006) Algorithm 851: CG_DESCENT, a conjugate gradient method with guaranteed descent. ACM Trans. Math. Softw. 32:113–137

Hager WW, Zhang H (2012) The limited memory conjugate gradient method. Technical report

Hager WW, Zhang H (2005) A new conjugate gradient method with guaranteed descent and an efficient line search. SIAM J. Optim. 16:170–192

Hager WW, Zhang H (2006) A survey of nonlinear conjugate gradient methods. Pac. J. Optim. 2:35–58

Hestenes MR, Stiefel EL (1952) Methods of conjugate gradients for solving linear systems. J. Res. Nat. Bur. Stand. 49:409–436

Li D, Fukushima M (2001) A modified BFGS method and its global convergence in nonconvex minimization. J. Comput. Appl. Math. 129:15–35

Li M, Feng H (2011) A sufficient descent LS conjugate gradient method for unconstrained optimization problems. Appl. Math. Comput. 218:1577–1586

Li M, Li D (2012) A modified conjugate-descent method and its global convergence. Pac. J. Optim. 8:247–259

Liu Y, Storey C (1991) Efficient generalized conjugate gradient algorithms, Part 1: theory. J. Optim. Theory Appl. 69:177–182

Moré JJ, Thuente DJ (1994) Line search algorithms with guaranteed suficient decrease. ACM Trans. Math. Softw. 20:286–307

Polak B, Ribière G (1969) Note sur la convergence de méthodes de directions conjuguées. Rev. Française Informat. Recherche Opérationnelle 16:35–43

Polyak BT (1969) The conjugate gradient method in extreme problems. USSR Comp. Math. Math. Phys. 9:94–112

Powell MJD (1984) Nonvonvex minimization calculations and the conjugate gradient method. In: Lecture notes in mathematics. Springer, Berlin

Shanno DF (1979) Conjugate gradient methods with inexact searchs. Math. Oper. Res. 3:244–256

Wolfe P (1969a) Convergence conditions for ascent methods. SIAM Rev. 11:226–235

Wolfe P (1969b) Convergence conditions for ascent methods. II: Some corrections. SIAM Rev. 13:185–188

Zhang L, Zhou W, Li D (2006a) A descent modified Polak–Ribière–Polyak conjugate gradient method and its global convergence. IMA J. Numer. Anal. 26:629–640

Zhang L, Zhou W, Li D (2006b) Global convergence of a modified Fletcher–Reeves conjugate gradient method with Armijo-type line search. Numer. Math. 104:561–572

Zhang L, Zhou W, Li D (2006c) Global convergence of a modified Fletcher–Reeves conjugate gradient method with Armijo-type line search. Numer. Math. 104:561–572

Zhang L (2009a) A new Liu–Storey type nonlinear conjugate gradient method for unconstrained optimization problems. J. Comput. Appl. Math. 225:146–157

Zhang L (2009b) New versions of the Hestenes–Stiefel nonlinear conjugate gradient method based on the secant condition for optimization. Comput. Appl. Math. 28:111–133

Zoutendijk G (1970) Nonlinear programming, computational methods. In: Abadie J (ed) Integer and nonlinear programming. North-Holland, Amsterdam

Acknowledgments

The authors would like to thank the referees for the suggestions and comments which improved this paper greatly. We are grateful to Prof. Hager and Zhang for their codes as well.

Author information

Authors and Affiliations

Corresponding author

Additional information

Communicated by Ernesto G. Birgin.

Rights and permissions

About this article

Cite this article

Li, M., Qu, A. Some sufficient descent conjugate gradient methods and their global convergence. Comp. Appl. Math. 33, 333–347 (2014). https://doi.org/10.1007/s40314-013-0064-0

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s40314-013-0064-0