1. Introduction

Over the past decade, there has been an increased focus on student engagement in higher education in light of rising tuition costs and concerns about student success and retention rates [1]. To address these issues, Littky and Grabelle [2] advocate for a curriculum redesign that stresses relevance, rigor, and relationships (3R’s of engagement). It has been suggested that such a redesign would enable students to meaningfully engage in sustained learning experiences that may lead to a state of optimal flow, which Csíkszentmihályi [3] defines as “the mental state of operation in which the person is fully immersed in what he or she is doing by a feeling of energized focus, full involvement, and success in the process of the activity” [3] (p. 9). Pink [4] suggests that students can achieve this state of “flow” when educators provide them with opportunities to become “driven” learners who have a sense of purpose, are autonomous, and are focused on mastery learning. Similarly, Fullan [5] stresses that optimal flow is achieved by creating learning environments that focus on purpose, passion, and play (3P’s of engagement).

In 1998, the National Survey of Student Engagement (NSSE) was developed as a “lens to probe the quality of the student learning experience at American colleges and universities” [6] (p. 3). The NSSE defines student engagement as the amount of time and effort that students put into their classroom studies that lead to experiences and outcomes that constitute student success, and the ways the institution allocates resources and organizes learning opportunities and services to induce students to participate in and benefit from such activities. Five clusters of effective educational practice have been identified based on a meta-analysis of the literature related to student engagement in higher education. These benchmarks are [6]:

- (1) Active and collaborative learning
- (2) Student interactions with faculty members
- (3) Level of academic challenge
- (4) Enriching educational experiences
- (5) Supportive campus environment

Recently, the educational research literature [7] has indicated that blended approaches to learning might provide an optimal environment for enhancing student engagement and success. The idea of blending different learning experiences has been in existence since humans started thinking about teaching [8]. The on-going infusion of web-based technologies into the learning and teaching process has highlighted the potential of blended learning [9,10]. Collaborative web-based applications have created new opportunities for students to interact with their peers, teachers, and content.

Blended learning is often defined as the combination of face-to-face and online learning [11,12]. Ron Bleed, the former Vice Chancellor of Information Technologies at Maricopa College, argues that this is not a sufficient definition for blended learning as it simply implies “bolting” technology onto a traditional course, using digital technologies as an add-on to teach a difficult concept, or adding supplemental information. He suggests that blended learning should be viewed as an opportunity to redesign how courses are developed, scheduled, and delivered through a combination of physical and virtual instruction: “bricks and clicks” [13]. The goal of this redesigned approach should be to join the best features of in-class teaching with the best features of online learning in ways that promote active, self-directed learning opportunities with added flexibility [14,15,16]. Garrison and Vaughan [17] echo this sentiment when they state that “blended learning is the organic integration of thoughtfully selected and complementary face-to-face and online approaches and technologies” [17] (p. 148). A survey of e-learning activity conducted over ten years ago by Arabasz, Boggs and Baker [18] found that 80 percent of all higher education institutions and 93 percent of doctoral institutions offered hybrid or blended learning courses.

Most of the recent definitions for blended courses indicate that this approach to learning offers potential for improving how we deal with content, social interaction, reflection, higher order thinking, problem solving, collaborative learning, and more authentic assessment in higher education, which could potentially lead to a greater sense of student engagement [16,19,20]. Moskal, Dziuban and Hartman [21] further suggest that “blended learning has become an evolving, responsive, and dynamic process that in many respects is organic, defying all attempts at universal definition” [21] (p. 15). In this chapter, the author defines blended learning as the intentional integration of synchronous and asynchronous learning opportunities.

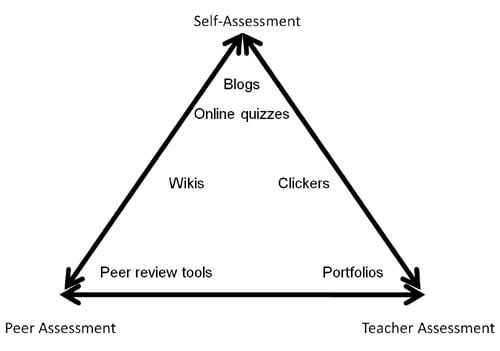

The literature also suggests that the use of collaborative learning applications such as social networking sites, blogs, and wikis has been increasing in higher education courses but that there has been a lack of corresponding research about how these tools are impacting student learning and engagement [22]. Can these tools be used to design and support assessment activities that increase the level of student engagement with course concepts, their peers, faculty and external experts, potentially leading to increased student success and satisfaction in a blended learning environment?

A study was conducted to investigate the impact of collaborative learning applications (e.g., blogs, wikis, clickers, social media sharing, and networking applications) on student learning and engagement in first year undergraduate courses designed for blended learning. The study addressed the following questions:

- (1) How are instructors designing assessment activities to incorporate student use of collaborative learning applications in blended courses?
- (2) How do students perceive the value of these digital tools?
- (3) Is there a correlation between the use of these tools, the level of perceived student engagement, and academic achievement in blended courses?

The use of collaborative learning applications was examined in seven first-year blended learning courses from different disciplines at Mount Royal University in Canada: Biology, Business, Child and Youth Studies, Communication Studies, Economics, and two General Education courses (Controversies in Science and Creativity in the Workplace).

2. Theoretical Framework

The collaborative constructivism framework developed by Garrison and Archer [23] and the theoretical foundations of the National Survey of Student Engagement (NSSE) underpin this research study. Garrison and Archer [23] trace the origins of collaborative constructivism back to Dewey [24] who argued that “meaningful and educationally worthwhile knowledge is a process of continuous and collaborative reconstruction of experience” [23] (p. 11). They indicate that meaningful and worthwhile learning outcomes are facilitated in a collaborative environment where individual students are recognized and supported, a variety of perspectives are presented and examined, and misconceptions are diagnosed.

The NSSE examines the relationship between student engagement and student success in higher education institutions throughout the world [25]. The NSSE conceptions of student engagement in higher education are grounded in several decades of prior research, and particularly in four key antecedents: Pace’s [26] “quality of effort” concept, Astin’s [27] theory of student involvement, Chickering and Gamson’s [28] principles of good practice in undergraduate education, and Pascarella and Terenzini’s [29] causal model of learning and cognitive development.

3. Methods of Investigation

Action research [30] and case-based [31] methods were utilized for this study. This approach combined quantitative (e.g., online surveys) and qualitative (e.g., interviews, focus groups) research methods.

3.1. Data Collection

Data was collected from two iterations of the seven first-year blended learning courses over a two-year period. A total of 273 students and 8 instructors participated in this study. This project received Mount Royal University Ethics Approval and both students and instructors signed informed consent forms. The consent forms offered the participants confidentiality and the ability to withdraw from the study at any time.

The data collection process began with pre-course interviews with all the instructors involved in the seven blended courses. The purpose of these interviews was to identify how instructors were planning to use collaborative learning applications, in alignment with assessment activities, to help students achieve the intended course learning outcomes.

A 75-item online survey was designed to collect demographic data, information concerning student use of collaborative learning technologies, and perceptions about student engagement. Items used in the survey were derived from the Classroom Assessment of Student Engagement (CLASSE, the classroom version of the National Survey of Student Engagement) [32] and the EDUCAUSE Centre for Applied Research Study of Undergraduate Students and Information Technology [33]. The Flashlight Online Survey Tool [34] was used to administer the survey to both students and faculty in all seven blended courses. The survey was deployed during the tenth week of the semester, in two iterations of each course. The tenth week was selected so that students would have had sufficient exposure to the collaborative learning applications in their blended courses to provide the author with meaningful feedback and so that there would be time for a student focus group meeting before the end of the semester.

Student focus groups were facilitated and digitally recorded using a standardized protocol by an undergraduate research assistant (URA) during the eleventh week of the semester for the first iteration of each course. The URA was an education student who received training from the author of this study, and she used a series of open-ended questions, generated from the survey results, to guide the focus groups. These focus groups were limited to the first iteration of each course due to budget constraints. Approximately ten students attended each of the seven focus groups (i.e., one for each blended course), which provided an opportunity to discuss and verify the findings from the online surveys.

Students’ level of use of Blackboard, the institutional learning management system (LMS), was also assessed using page hits per student per course. Academic achievement was defined as students’ final grade in the blended course under study.

Reports were prepared for each of the seven blended courses, and post-course interviews were digitally recorded with each of the instructors at the end of the semester, with the timing depending on when the second iteration of the course took place. In addition, a focus group lunch was held with all the instructors in order to review and discuss the preliminary data collected.

3.2. Data Analysis

3.2.1. Quantitative Data

Descriptive statistics (frequencies, means, and standard deviations) were calculated for individual survey items. Scale scores were computed for the following engagement-related parameters using methods described elsewhere (http://nsse.iub.edu/#construction_of_nsse_benchmarks): active and collaborative learning; student-faculty interaction; level of academic challenge; and engagement in effective educational practices. A scale score reflecting intensity of students’ course-related digital technology use was calculated based on responses to selected survey items. Cronbach’s alpha coefficients were utilized to assess the internal reliability of calculated scales. Descriptive statistics (range, mean, and standard deviation) were used to depict level of use of the LMS. Pearson correlation coefficients and analysis of variance were used to assess the association between engagement measures, digital technology use, and academic achievement.
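For readers unfamiliar with the two reliability and association statistics named above, the following is a minimal illustrative sketch in Python using only the standard library. It is not the analysis code used in this study. The function names (`cronbach_alpha`, `pearson_r`) and the small data set in the usage example are hypothetical and were chosen only to show how the textbook formulas operate on survey-style data.

```python
from math import sqrt
from statistics import mean, variance


def cronbach_alpha(responses):
    """Cronbach's alpha for a scale.

    `responses` is a list of respondents, each a list of k item scores.
    Uses the textbook formula:
        alpha = k / (k - 1) * (1 - sum(item variances) / variance(total score))
    """
    k = len(responses[0])
    # Sample variance of each item across respondents.
    item_variances = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's summed scale score.
    total_variance = variance([sum(row) for row in responses])
    return (k / (k - 1)) * (1 - sum(item_variances) / total_variance)


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    covariation = sum((a - mx) * (b - my) for a, b in zip(x, y))
    spread_x = sqrt(sum((a - mx) ** 2 for a in x))
    spread_y = sqrt(sum((b - my) ** 2 for b in y))
    return covariation / (spread_x * spread_y)


if __name__ == "__main__":
    # Hypothetical data: five students answering a three-item engagement
    # scale (1-4 response options), plus made-up final grades.
    scale_items = [[3, 4, 3], [2, 2, 1], [4, 4, 4], [1, 2, 2], [3, 3, 4]]
    final_grades = [78, 61, 90, 58, 81]

    scale_scores = [mean(row) for row in scale_items]
    print("alpha:", round(cronbach_alpha(scale_items), 3))
    print("r(scale score, grade):", round(pearson_r(scale_scores, final_grades), 3))
```

In practice, a statistics package would normally be used for these calculations, along with significance tests and the analysis of variance mentioned above, which this sketch omits.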

3.2.2. Qualitative Data

Interviews and focus group sessions were digitally recorded and transcribed by the URA. A constant comparative approach was used to identify patterns, themes, and categories of analysis that “emerge out of the data rather than being imposed on them prior to data collection and analysis” [35] (p. 390). These transcripts were reviewed and compared with the responses from the open-ended online survey questions in order to triangulate themes and patterns.