1. Introduction

Public transport is one of the key issues in designing a large metropolitan area, one of the criteria that defines the quality of life in a city, and is closely tied to the social life of each inhabitant. A city without public transport would entail a mass of people traveling in an uncoordinated way without reaching their target. There are plenty of direct, indirect, and random factors that influence public transport, but by far the most disturbing one is human interaction in urban traffic. Drivers’ decisions are influenced by many factors, and even though they are driving, driving is not always their only focus; drivers sometimes do not maintain a constant distance between vehicles, do not always pay attention to signs and traffic lights, do not follow mandatory traffic rules, and, in their decisions, rely on their emotions. If we eliminate the human factor from this equation, we will dramatically reduce traffic jams and increase road safety. Usually, a driver spends 45 min to 1 h in traffic to reach work from home; if the same person uses public transport (the conventional mode), he or she will spend approximately the same amount of time (including lane changing and waiting time). In the case of a dedicated line for public transportation, the time spent will, according to several studies [1], decrease by 40%–60%.

The configuration of these means of transport is highly similar to the general platform of AVs, yet several aspects differentiate them from the “classical” AV. These aspects are pointed out in the following sections of the paper: the powertrain, the steering, the braking, the sensors (their positioning, type, and coverage), the autonomous driving algorithm, and security.

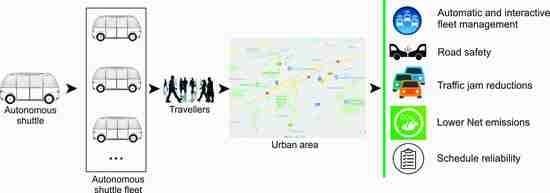

An overview of this paper, which contains its main objective and highlights all the chapters addressed during the review, is presented in Figure 1.

The main advantage of autonomous driving is not only the replacement of the human driver, with his or her emotional and physical limitations, but also the ability of the vehicle to make predictions and to communicate with infrastructure and other vehicles. Because of this, AVs will become a key component of the foundations of smart cities. Cities without traffic jams related to public transportation will rapidly increase their quality of life, decrease their energy consumption (in all components related to traffic), and also become more environmentally friendly, improving the quality of their urban air.

Before talking about autonomous driving history, we have to talk about autonomous driving as a means of transport. History has shown that long before automobiles started being developed in this direction, the aeronautic industry and railroad industry successfully replaced (partially or fully) the human intervention in the control of airplanes, trains, and subways.

The birth of autonomous driving was a complicated process, but it represented something exotic until only recently. This technology emerged behind the scenes without concrete applications to the economy of transport or to industry in general.

In the mid-1920s, Francis P. Houdina, an electrical engineer with a military background, equipped a Chandler vehicle with an antenna that received signals from a second vehicle in front. Control was accomplished through a small electric motor that performed tasks according to the movement of the first vehicle. The communication channel used radio waves [2].

Another visionary was Norman Bel Geddes, an industrial designer who, with support from General Motors, presented a vehicle propelled by magnetic fields generated via circuits embedded in the road. The vehicle was shown in 1939 during the “Futurama” exhibit at the World Fair [3].

In 1957, RCA LABS-USA presented to the public two vehicles provided by General Motors that had been equipped with receivers able to interpret signals coming from the road. The vehicles were able to manage automatic steering, acceleration, and braking [4].

In 1960, Dr. Robert L. Cosgriff, who was working in the laboratory of communication and control systems at Ohio State University, presented the idea that driver automation based on information from the road would become a reality in the next 15 years [5].

On the European continent, the pioneers in the field were a team from the Transport Road Research Laboratory that, in 1960, successfully tested a Citroen DS at speeds over 130 km/h without a driver in all kinds of weather conditions. This vehicle functioned by following the magnetic field of a series of electrical wires mounted on the road [6].

The year 1980 introduced a new approach developed by Mercedes-Benz, one that considered “vision” as the decisive factor for a driverless van, which was operated successfully on public roads. This project was conducted by Prof. Ernst Dickmanns, a pioneer in computer vision working at Bundeswehr University Munich.

Legislation then began to be promoted for this type of vehicle, and, in 1997, the United States Department of Transportation created the first laws related to the “demonstration of an automated vehicle and highway system” [7].

In December 1997, Schiphol Airport became the first public entity to use the “Park Shuttle”, an “automated people mover”. This technology was the foundation for future accomplishments in the field, representing the first time that autonomous driving capabilities were tested in common public usage [8].

Since the year 2000, a revolution of autonomous driving has taken place. Independent researchers, automotive companies, software companies, and electronics companies all seek to claim ownership of this complex means of transport that will definitely change humans’ relationships with vehicles once and for all.

From a standardization point of view, the Society of Automotive Engineers (SAE) established a standard and issued the “SAE J3016™: Taxonomy and Definitions for Terms Related to On-Road Motor Vehicle Automated Driving Systems”, in part, to speed up the delivery of an initial regulatory framework and best practices to guide manufacturers and other entities in the safe design, development, testing, and deployment of highly automated vehicles.

An AV is a vehicle equipped with hardware and software that ensures driving capacity without the need for human intervention with the control mechanisms of the vehicle, with or without remote monitoring of the vehicle.

The clear advantages of autonomous driving in the short and medium term will have an obvious impact on public transportation once this solution is used on a large scale. Current shuttle buses serving public roads have reached Levels 3 and 4 of the autonomy characteristics presented in Figure 2, according to SAE J3016™ [9].

Currently, there are several companies worldwide that have already produced autonomous driving shuttle buses for the market. These companies first developed pilot programs, validated their products, and can now replicate their products on a large scale. These companies include Apollo Baidu (Baidu, China) [10], EasyMile EZ10 (EasyMile, Toulouse, France) [11], Navya Arma (Navya, Lyon, France) [12], and Olli (Local Motors, National Harbor, MD, USA) [13].

Unlike autonomous shuttle buses, ordinary autonomous vehicles do not carry rear axle steering for better maneuverability in the city. In shuttle buses, the positioning, range, and versatility of the sensors are designed specifically for public transportation, and the security aspects pointed out in this paper are directly linked to public transport. The concept of autonomous driving is the same, its benefits are similar, and some characteristics of the powertrain might be common, so in the end these two types of vehicle belong to the same family.

The vast majority of these companies emerged from startups, and their backgrounds are strongly related to research and development. In terms of research, several scientific reviews cover this subject.

Ahmed et al. [14] present autonomous driving from the perspective of pedestrian and bicycle recognition. Ainsalu et al. [15], on the other hand, present the current implications of autonomous buses based on several projects and their outcomes. Dominguez et al. [16] report research on the integration of autonomous driving in a smart city infrastructure. Rosique et al. [17] focus on sensors and object perception as a source of information for autonomous driving control algorithms. Soteropoulos et al. [18] present research on the integration of autonomous driving in modern traffic and its influence on current transport strategies. Taeihagh et al. [19], on the other hand, focused their research on the cyber security of AVs and its influence on the privacy of the users of these technologies. Zheng et al. [20] present a series of methodologies related to maps and roads as driving paths for AVs.

Such research must have a legal framework to accurately present its results and promote relevant products to the public. In the particular case of autonomous driving, there are several regulations by the United Nations (UN).

The regulatory framework for AVs at the international level (within the UN) was established on the basis of the Vienna International Convention on Road Traffic in 1968, which states in Article 8 that “every driver must constantly have control of his or her vehicle or to guide him” [21]. The amendment UNECE ECE/TRANS/WP.1/145, pertaining to Article 8 of the Vienna International Convention, ensures the possibility that a driver can be assisted in his or her driving performance tasks and in the control of his or her motor vehicle through a driving assistance system. Thus, the annex to the amendment, paragraph 5b, specifies that “from today (23 March 2016), the automated control systems will be explicitly allowed on public roads, provided they comply with the provisions of the UN Regulations on motor vehicles or so that they can be controlled or deactivated by the driver” [22].

Autonomous shuttle buses for public transport are present in many of the major metropolises of the world, where they have been implemented on urban routes, in public traffic, and under test regimes, where they are permanently monitored by a human operator (Level 3 according to SAE J3016™ (Figure 3)) [23,24].

The next level of autonomy (Level 4) is being tested in various locations around the world (e.g., Waymo, Google’s self-driving car program, Phoenix, AZ, USA) [25] and involves the use of AVs in urban traffic without being accompanied by a human operator. These AVs travel based only on the perceptions and decisions of an autonomous driving system (ADS) using machine learning or artificial intelligence (AI) technologies. For example, Amini et al. developed and implemented a machine learning methodology based on a minimum set of data collected from the real world [26].

Table 1 presents the current fleet of autonomous shuttle buses in service all over the world, including the vehicle producer, number of vehicles per route, cost of transportation, and the road type where the vehicle is in service. The scientific world has a strong focus on this topic, and its diversity in terms of research topics brings together scientists from all engineering domains. Understanding of the environment and its dynamic changes relative to AVs; the integration of AVs in current road infrastructure; and their security risks, propulsion systems, sensors, computing processing power, machine learning elements, and AI, as well as the social aspects emerging from these technologies, are all topics that must be evaluated and presented as keystones in the future development of autonomous shuttle buses for urban transport.

2. General Technical Characteristics

In recent years, autonomous driving has become not only a hypothetical driving solution but a reality. The type of physical vehicle used in the implementation of these concepts is similar to pre-existing solutions, with a steering wheel and acceleration and braking pedals, but also including a complex network of sensors. Depending on the degree of automation, these classic control elements start to disappear and will disappear completely once the degree of automation reaches the 5th level. In parallel, new decision management solutions have started to emerge (such as tablets and joysticks) for the control of AVs in cases of emergency or impermissible situations. The complexity of direct mechanical control from the driver side will decrease, and the degree of automated control over the dynamic behavior of the vehicle will start to increase, until the driver has no control over the behavior of the vehicle, except by means of indicating the destination. This physical elimination process works in parallel with upgrading the level of autonomy. However, completely replacing a driver requires a series of upgrades in the sensor areas and processing power of autonomous driving control units. The technical aspects of each component’s role are presented in the following sections.

2.1. Powertrain

2.1.1. Driveline

The AV propulsion system is equipped with one or more asynchronous or synchronous traction motors (the producer’s choice) powered by a DC/AC converter via a complex electronic power system.

The traction motors generate torque to the propulsion wheels according to the control algorithm of the electrical machine via the electronics and, subsequently, to the voltage limitations generated by the battery management system (BMS). The electrical torque and power delivered to the wheels are directly controlled by the desired speed of the AV. The electric motors also function as electric generators in the regenerative braking mode, where they can recover a maximum amount of braking energy (energy that will be stored in a battery via an electronic AC/DC converter and/or supercapacitor solutions). Another solution is to route the recovered kinetic energy (when braking or downhill cruising) via the power source (AC/DC and DC/DC) to the low voltage electrical consumers of the AV (Figure 4, where (1) standard charge plug-in; (2) main inverter; (3) power drive unit; (4) e-motor/generator; (5) power electronics).

Electric batteries are charged by charging stations connected to the plug-in terminal. The voltage/current flow into the battery from the charging stations is controlled first by the power electronics with transformed energy (AC/DC) and then by the BMS [15,38].

Command and control of the propulsion system are performed by the electronic control unit (ECU), which is integrated into the ADS of the vehicle and continuously adjusts the parameter values that influence AV performance in order to optimize electricity consumption.

2.1.2. High-Voltage Battery

A high-voltage battery consists of a series of low voltage cells (2.2 V up to 3.7 V) fixed in modules that are connected in series and in parallel in order to increase the voltage and capacity of the complete battery pack. The complete battery pack, depending on its size, can reach 280–390 V with an energy capacity that can vary from 20 kWh to 35 kWh. This battery pack is continuously monitored by the BMS for equal cell voltage during charging and discharging (state of charge, SOC), its optimal functional temperature, the optimum C-rate (rate at which the battery is being charged or discharged) of the complete pack, and the complete battery state of health (SOH). The battery pack is indirectly connected to the power electronics, the unit that controls the energy flow through all electrically powered systems.
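The relationship between cell layout, pack voltage, and stored energy can be illustrated with a short sketch. The cell capacity, the series/parallel counts, and the names `PackLayout` and `c_rate` are illustrative assumptions, not data for any of the shuttle buses discussed here; the layout was chosen only so that the result falls inside the voltage and energy ranges quoted above.

```python
from dataclasses import dataclass


@dataclass
class PackLayout:
    """Hypothetical battery pack layout (all values are assumptions)."""
    cell_voltage_v: float     # nominal voltage of a single cell
    cell_capacity_ah: float   # capacity of a single cell
    cells_in_series: int      # series connections raise the pack voltage
    strings_in_parallel: int  # parallel strings raise the pack capacity

    @property
    def pack_voltage_v(self) -> float:
        return self.cell_voltage_v * self.cells_in_series

    @property
    def pack_capacity_ah(self) -> float:
        return self.cell_capacity_ah * self.strings_in_parallel

    @property
    def pack_energy_kwh(self) -> float:
        # Energy (kWh) = voltage (V) * capacity (Ah) / 1000
        return self.pack_voltage_v * self.pack_capacity_ah / 1000.0


def c_rate(current_a: float, capacity_ah: float) -> float:
    """C-rate: charge or discharge current relative to capacity (1C empties the pack in 1 h)."""
    return current_a / capacity_ah


# Assumed example: 100 cells of 3.6 V / 40 Ah in series, 2 strings in parallel.
pack = PackLayout(cell_voltage_v=3.6, cell_capacity_ah=40.0,
                  cells_in_series=100, strings_in_parallel=2)
print(f"{pack.pack_voltage_v:.0f} V, {pack.pack_energy_kwh:.1f} kWh")
print(f"C-rate at 40 A: {c_rate(40.0, pack.pack_capacity_ah):.2f}C")
```

Note that kWh measures stored energy; the power the pack can deliver additionally depends on the permitted C-rate.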

Electric batteries have a capacity that will ensure the operation of the AV on the selected routes within a predetermined period of time (generally the duration of a working day or 8–10 h) at maximum load with passengers with the conditioning systems for the passenger compartment temperature in operation and in all possible situations under all environmental conditions.

The batteries use lithium-type technology, with a high stored energy density, the minimum volume and mass needed to achieve the required autonomy, and maximum operating safety under the climatic conditions in which the AV operates. Electric batteries allow a charging regime from 0% to 99% in a well-defined time interval depending on their capacity, regardless of the ambient thermal regime.

There are models of AVs that are equipped with solutions for extending their autonomy through an additional battery with a maximum of 10% of the main battery’s capacity (or a supercapacitor with the same specifications), which is used only in situations in which the capacity of the main batteries falls below a level of 20%, thereby ensuring additional autonomy. This battery/supercapacitor is separated from the main power source and is permanently maintained at a maximum charge level.

The main characteristics of the high voltage batteries equipped to each of the autonomous shuttle bus models presented in this paper are shown in Table 2 [11,12,13,39].

2.1.3. Steering

When discussing the steering of AVs, one is first struck by the lack of a steering wheel, which is a key component of classical vehicles. The steering of AVs still represents a key factor, but direct control is missing. Here, steering decisions are made more subtly by a decision algorithm supervised by the ADS. The steering of an AV is a reactive action generated by a series of external factors rather than by the decision of a “virtual driver” acting proactively based on the road, traffic, and surroundings. Steering in this case is the means, not the method, by which the vehicle reaches the desired target. This is why an AV operates based on a virtual/optimal trajectory.

Thus, there is no freedom to cut lines and no possibility to encounter dangerous situations, but there is always a safe method of driving and a controlled balance between the position of the center of gravity of the vehicle and the forces along the three axes that act on each steered wheel.

Autonomous shuttle buses can be equipped with two-wheel steering systems or four-wheel steering systems.

Figure 5 presents an overview of the steering system (a) and the two steering systems that can be found on autonomous shuttle buses: a steering system with two steered wheels (b) and a steering system with four steered wheels (c).

In the case of autonomous shuttle buses, the rear axle wheels turn in the opposite direction of the front axle wheels. Four-wheel steering systems have major advantages. One of these advantages is a reduction of the steering angle, which increases safety by reducing the required response time. This system also improves the maneuverability of a vehicle during tight cornering or parking [38].
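The reduction in steering angle can be illustrated with the standard low-speed kinematic (bicycle-model) relation R = L / (tan δf + tan δr), where L is the wheelbase, δf the front steering angle, and δr the magnitude of the opposite-direction rear steering angle. This relation assumes no tyre slip and is not taken from the cited references; the wheelbase and radius below, and the function names, are hypothetical.

```python
import math


def turning_radius_m(wheelbase_m: float, front_deg: float, rear_deg: float = 0.0) -> float:
    """Kinematic turning radius, measured from the turn centre to the
    vehicle's longitudinal axis. rear_deg is the magnitude of the rear
    steering angle when the rear wheels turn opposite to the front wheels."""
    total = math.tan(math.radians(front_deg)) + math.tan(math.radians(rear_deg))
    return math.inf if total == 0.0 else wheelbase_m / total


def front_only_angle_deg(wheelbase_m: float, radius_m: float) -> float:
    """Front angle needed for a given radius with two-wheel steering."""
    return math.degrees(math.atan(wheelbase_m / radius_m))


def symmetric_angle_deg(wheelbase_m: float, radius_m: float) -> float:
    """Angle per axle for the same radius when front and rear wheels
    steer by equal and opposite angles."""
    return math.degrees(math.atan(wheelbase_m / (2.0 * radius_m)))


WHEELBASE_M = 2.8  # assumed value, for illustration only
RADIUS_M = 6.0     # assumed target turning radius

print(f"2WS angle: {front_only_angle_deg(WHEELBASE_M, RADIUS_M):.1f} deg")
print(f"4WS angle per axle: {symmetric_angle_deg(WHEELBASE_M, RADIUS_M):.1f} deg")
print(f"Radius at 20 deg, 2WS: {turning_radius_m(WHEELBASE_M, 20.0):.2f} m")
print(f"Radius at 20 deg, 4WS: {turning_radius_m(WHEELBASE_M, 20.0, 20.0):.2f} m")
```

Under these assumptions, equal and opposite steering on both axles halves the turning radius for a given wheel angle, or equivalently lets each axle steer through a smaller angle for the same turn.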

Autonomous shuttle buses with four independently steered wheels are equipped with independent steering controllers on each wheel. This allows greater flexibility in controlling the vehicle’s movement via the independent control of the driving force and the rotation angle (δA, δB, δC, δD) of each wheel [40,41]. The steering system of the autonomous shuttle buses is built around a rack-and-pinion mechanism and a steering linkage mechanism controlled by a 24 V DC motor. Figure 6 shows a kinematic diagram of the steering mechanism: (0) chassis; (1) steering gear driven by gear motor; (2) rack; (3) left link; (4) right link; (5) left wheel; (6) right wheel [23].

The steering system of the autonomous shuttle buses is powered by an electric gear motor that drives the steering gear (1), which turns and activates the rack (2). The electric gear motor is controlled by the Motion Control block of the ADS. The rack-and-pinion gear transforms the rotational motion of the electric gear motor into translational movement that is transmitted to the wheel hubs by means of the links (3, 4), which causes the wheels (5, 6) to turn. The technical specifications of the gear motor type are presented in Table 3 [42]. These specifications are presented as general values, taken from the technical data sheets of the manufacturers of the most used elements from the categories described in the paper and used in equipping the autonomous shuttle bus models presented.

The complete chain of steering control includes the module for vehicle behavior, which generates a planned trajectory in the environment in which the vehicle circulates. The vehicle’s behavior is based on two files: one that defines the road network the AV must navigate, including the streets, and one that defines the points that the AV will cross during its travel to a certain destination. The generated trajectory is then entered in the direction controller [43].

The task of the steering controller (Figure 7) is to compare the current position of the AV with the alignment of the planned trajectory. Depending on other aspects, such as comfort and the current speed of the AV, the controller generates control over the steering system based on the vehicle’s speed. The centralized control structure is based on the characteristics of the vehicle’s dynamics, and the subsystems are controlled directly by a centralized controller. The controller’s design is generally based on linear or nonlinear models, and the method for designing a multi-input multi-output (MIMO) system is adopted to solve problems related to longitudinal and lateral vehicle dynamics [44].

In Figure 7, the notations represent the geographical coordinates of the track on which the AV runs, the steering angles of the wheels, the forces and moments acting on the vehicle from a dynamic point of view, and the moments at the wheel axle, with respect to the longitudinal and transverse speeds of the vehicle, where $X_{def}$ and $Y_{def}$ are the X and Y geographical coordinates of the track, respectively; $V_{def}$ is the vehicle’s speed on the track; $\omega_{ro}$ is the angular speed of the wheel necessary to follow the track; $\sum F_{x}$ is the sum of the forces in the longitudinal direction; $\sum F_{y}$ is the sum of the forces in the transverse direction; $\sum F_{z}$ is the sum of the forces in the vertical direction; $F_{xi}$ is the longitudinal force on the wheel; $F_{yi}$ is the transverse force on the wheel; $M_{i}$ is the torque of the driving wheels; $\delta_{i}$ is the steering angle of the wheels; $v_{x}$ is the longitudinal speed of the vehicle; $v_{y}$ is the transverse speed of the vehicle; and $\omega_{r}$ is the angular speed of the wheel. All these quantities are analyzed and processed by the blocks in Figure 7 to ensure that steering decisions are performed optimally. The steering controller generates the desired steering angle, resulting in a torque to the steering system that depends on the voltage entered into the controller.
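As a rough illustration of how a controller can compare the vehicle’s position with a planned trajectory and scale its response with speed, the sketch below uses a Stanley-style lateral control law on a single straight path segment. This is a swapped-in textbook technique, not the MIMO controller of Figure 7 or of [44]; the gain, the steering limit, the minimum speed, and the function names are all assumptions.

```python
import math


def wrap_angle(angle_rad: float) -> float:
    """Wrap an angle to the interval [-pi, pi)."""
    return (angle_rad + math.pi) % (2.0 * math.pi) - math.pi


def stanley_steering(x, y, heading_rad, p0, p1, speed_mps,
                     gain=1.0, max_angle_rad=math.radians(30.0)):
    """Steering angle (rad, positive = left) that steers the vehicle back
    onto the segment p0 -> p1. The gain and limits are illustrative."""
    path_heading = math.atan2(p1[1] - p0[1], p1[0] - p0[0])
    # Signed lateral offset: positive when the vehicle is left of the path.
    left_offset = (-(x - p0[0]) * math.sin(path_heading)
                   + (y - p0[1]) * math.cos(path_heading))
    heading_error = wrap_angle(path_heading - heading_rad)
    # The offset correction shrinks as speed grows, which softens steering
    # at higher speeds; the floor of 0.1 m/s avoids a division-like blow-up.
    correction = math.atan2(-gain * left_offset, max(speed_mps, 0.1))
    command = heading_error + correction
    return max(-max_angle_rad, min(max_angle_rad, command))


# Vehicle 1 m to the left of a path along the x axis, driving at 3 m/s:
angle = stanley_steering(5.0, 1.0, 0.0, (0.0, 0.0), (10.0, 0.0), 3.0)
print(f"steering command: {math.degrees(angle):.1f} deg")  # negative = steer right
```

In this sketch the planned trajectory would be supplied as consecutive waypoint pairs, with the controller evaluated on the segment closest to the vehicle.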

2.1.4. Braking

For classical vehicles, the driver uses his or her senses, experience, and abilities to make predictions. Usually, when using the braking pedal, the vast majority of drivers are optimists; they do not brake intensively until the last portion of the road, at which point they use the engine brake. Truck and bus drivers frequently use a retarder before using the brake pedal. In the end, braking is performed partially instinctively and partially by awareness. Taking into account all these variables, vehicle manufacturers do not ensure a certain braking distance according to different vehicle speeds but instead equip their vehicles with braking systems capable of ensuring a minimum deceleration measured in m/s².

For AVs, the same legislation applies, and such vehicles will always reach the desired level of deceleration. The problem lies in how this deceleration is distributed over distance and how the occupants of the vehicle experience this deceleration, particularly those who are not seated and those who are not facing the road and therefore cannot anticipate the braking process. The algorithm that controls deceleration must be adaptable and able to distinguish between normal braking, smooth deceleration and braking, and emergency braking [38].

The analyzed AVs are powered by an electric vehicle platform that permits regenerative braking and countercurrent braking. Thus, there are three ways to induce deceleration in an AV. The physical systems are already well known, but the keys to braking in autonomous driving are establishing the moment of braking, the force of braking, and the moment when braking is no longer necessary, taking into account that in the vast majority of cases, the total deceleration force of the braking system is not needed.

According to the desired deceleration and the distance between the vehicle and the desired maneuver, the braking control algorithm can decide to use the hydraulic system, the regenerative braking system, the countercurrent braking system, or the first and second in parallel.

A possible control solution for the braking control of an AV is presented in Figure 8 [45].
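The decision between braking systems can be sketched from the constant-deceleration relation a = v² / (2d), where v is the current speed and d the distance available before the maneuver. The thresholds, the mode names, and the mapping of deceleration levels to braking systems below are assumptions made for illustration; they are not taken from Figure 8 or from [45], and countercurrent braking is left out for brevity.

```python
from enum import Enum


class BrakeMode(Enum):
    NONE = "no braking needed"
    REGENERATIVE = "regenerative braking only"
    COMBINED = "hydraulic and regenerative braking in parallel"
    EMERGENCY = "emergency braking with full hydraulic force"


def required_deceleration(speed_mps: float, distance_m: float) -> float:
    """Constant deceleration (m/s^2) needed to stop within distance_m."""
    if distance_m <= 0.0:
        return float("inf")
    return speed_mps ** 2 / (2.0 * distance_m)


def select_brake_mode(decel_mps2: float,
                      regen_limit: float = 1.0,
                      comfort_limit: float = 2.5) -> BrakeMode:
    """Pick a braking mode from the required deceleration.
    regen_limit and comfort_limit are hypothetical thresholds in m/s^2."""
    if decel_mps2 <= 0.0:
        return BrakeMode.NONE
    if decel_mps2 <= regen_limit:
        return BrakeMode.REGENERATIVE
    if decel_mps2 <= comfort_limit:
        return BrakeMode.COMBINED
    return BrakeMode.EMERGENCY


for distance in (100.0, 25.0, 10.0):
    decel = required_deceleration(10.0, distance)  # 10 m/s is 36 km/h
    print(f"{distance:5.0f} m -> {decel:.1f} m/s^2 -> {select_brake_mode(decel).value}")
```

A lower comfort threshold would suit vehicles carrying standing passengers, at the cost of starting to brake earlier.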

2.1.5. Charging System

The charging strategy of an industrial vehicle designated for public transportation has to take into consideration that there is a direct connection between the battery’s SOH and the charging solution used. The continuous usage of fast charging stations (high-power DC) will generate, over time, a rapid aging effect at the cell level of the battery by creating a chemical film on the negative electrode (the solid electrolyte interface), which will exert a direct influence on the reliability and usability of the complete battery pack. In order to increase the reliability and lifetime of the battery so that a SOH of 80% can still be maintained after several thousand charging and discharging cycles, the ratio between fast charging (high-power DC) and slow charging (low-power AC) should be one fast charging cycle for every 3–5 slow charging cycles.

Charging stations are equipment that charge autonomous shuttle bus batteries within a well-defined time interval depending on their capacity using special dedicated connectors/coupling devices [46,47]. The charging stations used for powering electric vehicles in general, and autonomous shuttle buses in particular, are characterized by the following specific terms [48]:

Charging levels 1–3 are used to classify the power, voltage, and rated current of the charging stations according to the specifications defined by SAE J1772 (Table 4) [49];

Charging modes 1–4 are used to classify the mode of the power supply, protection, and communication/control of the charging system according to the specifications defined by the international standard IEC 61851-1 [50]. Thus, autonomous shuttle buses are connected by an in-cable control and protection device (IC-CPD) cable to electric vehicle supply equipment (EVSE) compatible charging stations; and

Charger types are used to classify the different types of sockets used to supply power to the autonomous shuttle bus charging system according to the specifications defined by the international standard IEC 62196-2 [51]. For example, Apollo Baidu uses a CHAdeMO 50 kW DC Type 2 charging connector [52], EasyMile EZ10 uses a Type 2/Mennekes AC charging connector [53], Navya Arma uses a Type 2/Mennekes AC charging connector [12], and Olli uses a Type 2/Mennekes AC charging connector [54].
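How the charging time depends on battery capacity and charger power can be estimated with a simple constant-power calculation. This is a minimal sketch: the 30 kWh capacity, the 7.4 kW AC rating, the 90% efficiency, and the function name are assumptions, and real charging curves taper near full charge, so actual times are longer.

```python
def charging_time_h(capacity_kwh: float, soc_start: float, soc_end: float,
                    charger_power_kw: float, efficiency: float = 0.9) -> float:
    """Estimated charging time in hours at constant power.
    soc_start and soc_end are fractions between 0 and 1; efficiency is an
    assumed overall charger-to-battery efficiency."""
    if not 0.0 <= soc_start <= soc_end <= 1.0:
        raise ValueError("SOC values must satisfy 0 <= start <= end <= 1")
    if charger_power_kw <= 0.0 or not 0.0 < efficiency <= 1.0:
        raise ValueError("power must be positive and efficiency in (0, 1]")
    energy_needed_kwh = capacity_kwh * (soc_end - soc_start)
    return energy_needed_kwh / (charger_power_kw * efficiency)


# Assumed 30 kWh pack charged from 20% to 80% SOC:
slow_h = charging_time_h(30.0, 0.2, 0.8, charger_power_kw=7.4)   # assumed AC charger
fast_h = charging_time_h(30.0, 0.2, 0.8, charger_power_kw=50.0)  # 50 kW DC charger
print(f"AC 7.4 kW: {slow_h:.1f} h, DC 50 kW: {fast_h * 60:.0f} min")
```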

2.2. Sensor Systems

The key question in the case of autonomous shuttle buses is whether sensors are capable of replacing a human driver in urban traffic, especially for a passenger transport vehicle. Vehicle manufacturers, particularly the divisions responsible for autonomous driving, answer that they are. On what facts do such companies rely, and why are they so confident?

Replacing the decisive factor of the human brain with a series of control algorithms based on information from sensors presents a series of unknowns. The element of decision-making is the brain of the driver, which receives driving information based on the senses (vision and hearing), which together give the driver awareness of his or her surroundings, but this is not enough. There are persons who can hear and see very well and have no ability to drive because they lack the knowledge and trained reflexes to control a vehicle. Thus, perception based on sight and hearing together with trained knowledge provides the ability to drive, but an element remains missing: the experience of driving. This means that the reactions of the driver are learned by the driver’s brain in order to improve their driving skills in terms of their reflexes and appropriate reactions (driving style under various meteorological conditions).

This means that once a driver’s brain is trained to drive, the vast majority of functions will be performed almost automatically (similar to typing without looking at the computer keys), which means that the mental effort becomes negligible. For example, for a driver that drives along the same road for a long period of time, the driver’s brain becomes so familiar with the route that the driver is no longer able to recall the events that happened on the road unless they were exceptional.

In the development of autonomous driving, specialists have decided to train artificial brains using a similar approach to the training that a person engages in involuntarily when driving “automatically” based on prior information. Problems emerge once the routine becomes obsolete and an unpredictable event occurs, at which point the human brain’s processing power grows exponentially, and decisions can be made.

Based on its lack of large-scale processing power, the AV has to upgrade itself with sensorial strength. Humans do not have good night vision, do not possess superior abilities of motion detection by hearing, cannot see through fog or heavy rain, etc. However, they can compensate for these deficiencies with brain processing power and interact with and react to the perturbing factors of the environment. On the other hand, the AV algorithm cannot attain the same level of processing power as the brain and compensates for this by extending its sensory abilities with a series of capabilities that humans lack, and even by communicating with the environment (vehicle-to-vehicle communication and vehicle-to-infrastructure communication). In this way, vehicles can compensate for their lack of processing power in order to achieve a task independent of the driving decision factors. The sensing capabilities of current autonomous shuttle buses designated for public transport are presented step by step in each particular case so that an exhaustive understanding of the matter can be achieved.

Sensors are technical devices of specific sizes that react to certain environmental properties. An AV, which is devoid of the perception of a human operator, must be able to perceive its surroundings in order to function safely without the intervention of a human operator. For this process, AVs are equipped with a large number of sensors (Figure 9) [55,56,57] that scan the entire environment, identifying everything that materializes around the vehicle from road markings and traffic signs to static and dynamic objects.

The signals transmitted by the sensors are managed by intelligent platforms such as the self-driving computation platform, which is integrated into the ADS. Based on these, the perception capabilities of AVs are becoming increasingly more sophisticated, possessing the ability to identify and classify any static or dynamic objects in the proximity of the AV and follow these objects from frame to frame [58,59].

All the autonomous shuttle bus models presented in this work are equipped with sensors. The types, numbers, and positions of the sensors are determined by the manufacturers of the autonomous shuttle buses. For example, the sensors equipped on the Apollo Baidu autonomous shuttle bus are shown in Table 5 (according to the public data on github.com/ApolloAuto).

The characteristics of the sensors on AVs (the maximum values for field of view, range, accuracy, frame rate, resolution, and color perception, and the minimum values for weather sensitivity, maintenance, visibility, and price) are shown in Figure 10 (where 0 is very poor, 1 is poor, 2 is very fair, 3 is fair, 4 is good, and 5 is very good) [17,20,68,69,70].

2.2.1. LIDAR

LIDAR sensors (Figure 11), based on laser beam distance measurement technology, allow an AV to generate a virtual image of the environment in which it travels, to establish its precise position, and to detect the static or dynamic objects on the road, using either a 2D map (LIDAR 2D) or a 3D map (LIDAR 3D) [71].

The main role of LIDAR sensors is to detect existing objects (pedestrians, vehicles, etc.) and to prompt the ADS to apply emergency braking to avoid collisions. LIDAR 3D sensors use a set of laser diodes (between 4 and 128 laser channels) mounted on a rotary device that scans the environment in a 360° horizontal and 20–45° vertical field of view. The accuracy of the images that form the 3D map is determined by the number of laser beams used. LIDAR 3D sensors are mounted in a way that minimizes the areas without coverage and allows them to scan the travel area over a circular radius to create a 3D map of the virtual environment in which the existing objects (pedestrians, vehicles, etc.) are present [17].

LIDAR 2D sensors capture information from the environment by applying a single circular laser beam to a flat surface perpendicular to the axis of rotation. LIDAR 2D sensors are generally mounted in pairs to minimize the areas without coverage and to ensure continuous visibility on the entire circular surface of the AV [70].

The main technical characteristics of the LIDAR sensors used in automotive applications are presented in Table 6 (general values, taken from the technical sheets of the most used LIDAR sensor manufacturers) [61,72,73].

Sualeh et al. presented a multiple object detection and tracking (MODT) algorithm that classifies the objects detected by LIDAR sensors and then applies Bayesian filters to estimate the kinematic evolution of the objects classified over time [74].

2.2.2. Radar

Radar sensors fitted to AVs are placed at the front and rear of the vehicle to measure the distance to detected objects, calculate their speed, and estimate their direction of travel (Figure 12) [75,76].

Mid-range radars (MRRs) are placed so that they cover the front, rear, and side corners of an AV to detect objects in the immediate vicinity of the vehicle [77]. Long-range radar (LRR) sensors provide information on the vehicles traveling in front of the AV and support adaptive cruise control (ACC) [78].

The main technical characteristics of the radar sensors used in automotive applications are presented in Table 7 (general values, taken from the technical sheets of the most used radar sensor manufacturers) [77,79].

2.2.3. Camera

An AV is equipped with a high-resolution stereo video camera system (two identical cameras that simulate human binocular vision), located in the front and back of the vehicle to provide a circular image of the environment.

These high-resolution video cameras permanently monitor and record the movement of the AV, the route followed, the traffic signals, etc., and are equipped with complementary metal–oxide–semiconductor (CMOS) sensors. Monocular video cameras capture 2D images that carry no precise information about the distances to the sensed objects and therefore cannot by themselves supply the estimates needed to plan avoidance scenarios. In contrast, stereo cameras can estimate the distance to sensed objects by measuring the difference between two images taken from different angles [15].
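The way a stereo pair turns an image difference into a distance can be illustrated with the standard pinhole relation Z = f · B / d for two parallel, rectified cameras. This is a minimal sketch and not the procedure of any particular shuttle manufacturer; the function name and the numeric values (focal length, baseline, disparity) are hypothetical.

```python
def stereo_depth_m(focal_length_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth of a point seen by two parallel, rectified cameras: Z = f * B / d.

    focal_length_px: focal length expressed in pixels (assumed value)
    baseline_m:      distance between the two camera centres, in metres
    disparity_px:    horizontal shift of the same point between both images
    """
    if disparity_px <= 0:
        # Zero disparity corresponds to a point at infinity.
        raise ValueError("disparity must be positive")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical example: 1000 px focal length, 0.30 m baseline, 15 px disparity.
depth = stereo_depth_m(1000.0, 0.30, 15.0)
print(f"estimated depth: {depth:.1f} m")
```

Because depth is inversely proportional to disparity, distant objects produce small disparities and their range estimate becomes more sensitive to pixel-level matching errors.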

The video cameras (Figure 13) have been applied to multiple tasks for AVs, such as lane detection, estimating the distance to other objects or vehicles in traffic, and traffic sign detection.

The operation of a CMOS image sensor is based on light passing through the lens and being filtered by a color filter array (Bayer CFA), which provides information on the light intensity in each wavelength region. The sensor’s photodiodes convert the incoming photons into photoelectrons, and the resulting raw image data are then converted into a color image by a processing algorithm [80,81].

After the image processing stage, visual perception is used to recognize and understand the detected objects in the environment using a classification algorithm (Figure 14) [14].

2.2.4. GPS/GNSS

The global navigation satellite system (GNSS) encompasses all satellite navigation systems, both global and regional, and operates on the principle of determining the position of a receiver (the AV) relative to a known reference formed by a group of satellites orbiting roughly 20,000 km above the surface of the Earth. These satellites emit signals that contain information about their position, orbital parameters, etc. Based on these signals, the reception systems extract information about their position, travel speed, and the current time [15,76,82].

The operating principle of the GNSS is based on the exact transmission time of the extrapolated signal emitted by at least four visible satellites and received by the receiver, from which the receiver extracts information about the geographical coordinates and time (x, y, z, t) [17].
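The need for at least four satellites can be made explicit with the standard pseudorange model, given here as a textbook sketch rather than a formula taken from the cited source. The notation is assumed for this illustration: ρ_i is the pseudorange measured to satellite i, (x_i, y_i, z_i) is the satellite position broadcast in its signal, c is the speed of light, and δt is the receiver clock offset.

```latex
\rho_i = \sqrt{(x - x_i)^2 + (y - y_i)^2 + (z - z_i)^2} + c\,\delta t,
\qquad i = 1, \dots, 4
```

Each visible satellite contributes one such equation. Four equations are therefore the minimum needed to solve for the four unknowns: the receiver coordinates (x, y, z) and the clock offset δt, from which the time t follows.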

The most commonly used GNSS is the global positioning system (GPS), which uses a real-time kinematic (RTK) correction tool to position the AV with maximum accuracy (Figure 15). GNSS positions can deviate by a few meters, whereas the ADS requires a precision of several centimeters. This accuracy is achieved with the aid of a base station. The GNSS measures the distance to the satellites and the base station, thus achieving positioning with maximum accuracy for the AV. The transfer of GNSS/RTK differential corrections from the reference base stations to the AV is carried out via mobile communications or the Internet (3G/4G/5G) [83].

The GNSS with RTK (used, for example, by Navya) has a low refresh rate (1–20 Hz), which is why some manufacturers of autonomous shuttle buses (EasyMile) use the visual capabilities of LIDAR sensors and video cameras to reconstruct the displacement space and locate the vehicle in this space. This technique is called simultaneous localization and mapping (SLAM) and consists of generating an environmental map based on virtual reality (VR) and simultaneous localization within this map [15]. The disadvantage of this technique lies in the fact that there is no delimitation of the mapped space, which requires extra hardware and software resources.

2.2.5. Inertial Measurement Unit

The inertial measurement unit (IMU) sensor includes a three-axis accelerometer and a three-axis gyroscope and is intended to measure the acceleration of the AV and specify its location and orientation (Figure 16).

When a vehicle is in motion, it can perform linear and rotational movements around each of the three axes: lateral, longitudinal, and vertical (pitch, roll, and yaw). Inertial measurements, which can be made without reference to an external point, include linear acceleration, angular velocity, and angular acceleration. This information can be used to augment or improve external measurements, such as GPS coordinates [84].
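One common way in which inertial data augment GPS coordinates is dead reckoning between two position fixes. The sketch below is a simplified planar illustration under stated assumptions (flat ground, simple Euler integration, noise-free readings); the function and parameter names are hypothetical and do not describe any specific shuttle's implementation.

```python
import math


def dead_reckon(x, y, heading_rad, speed, accel, yaw_rate, dt):
    """Advance a planar pose by one time step using IMU readings.

    accel:    longitudinal acceleration from the accelerometer (m/s^2)
    yaw_rate: angular velocity about the vertical axis from the gyroscope (rad/s)
    dt:       time step in seconds
    """
    heading_rad += yaw_rate * dt          # integrate angular velocity into heading
    speed += accel * dt                   # integrate acceleration into speed
    x += speed * math.cos(heading_rad) * dt
    y += speed * math.sin(heading_rad) * dt
    return x, y, heading_rad, speed


# Hypothetical example: bridge a 1 s gap between GPS fixes at 100 Hz IMU rate.
state = (0.0, 0.0, 0.0, 5.0)              # x, y, heading, speed (5 m/s)
for _ in range(100):
    state = dead_reckon(*state, accel=0.0, yaw_rate=0.0, dt=0.01)
print(state)
```

In practice the integrated pose drifts over time, which is why it is periodically corrected with the next external fix rather than used on its own.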

An IMU is a device containing an accelerometer that measures a body-specific force vector, a gyroscope that measures the inertial angular velocity vector, and a magnetometer that measures the vector of the magnetic field around the device. These three sensors are mounted so that their measuring axes each constitute distinct orthogonal systems, resulting in six degrees of freedom. An IMU offers basic information about the vehicle on which it is mounted (acceleration, rotation, and orientation).

3. Autonomous Driving Systems

This section describes the core technological developments over the last few years related to processing power, flexibility, and, as one of the most important aspects, mobility. For a computer that performs predetermined calculations, processing power, memory, size, and grid power present no problem in a lab, where it is connected to other computers and can borrow memory or processing power. However, once the computer is on its own with limited processing power, every bit of processed information counts. AVs are simply large computers on wheels whose abilities depend on their software’s quality, bugs, flexibility, and processing power.

We no longer evaluate an AV in terms of its speed, dynamic performance, or braking distance. We instead evaluate AVs by their ability to make speed related decisions instead of asking for a decision, establish alternative routes before reaching a traffic restricted area, reduce their speed before reaching a bumpy road, reserve a charging station before reaching the desired destination, etc. These are the factors that differentiate AVs in general. The particularities of classic vehicles are immaterial (such as color selection), yet they help buyers choose their future vehicles.

Is the ADS capable of crossing the double line when a vehicle is parked in the road, or will it ask for control? Is the system capable of recognizing police signs in case of a crash or road block? Is the system able to increase its speed in case of a medical emergency, or is the code simply too “classic” to perform such tasks that a human driver can perform?

An ADS must not only perform its driving tasks using various sensors for input and actuators for control; it must also be resilient, redundant, flexible, reliable, and versatile, and must ensure cyber security. All these tasks have to be performed in a network with high-speed information exchange and multi-processing layers doubled by different types of physical data that require continuous processing power. These large volumes of information require tremendous processing speed. An AV with all its cameras can generate 2 gigapixels per second of data, which takes 250 trillion operations per second to process [85]. This translates into large amounts of computing power, which in turn requires large amounts of electricity. Some AVs use around 2500 watts [86]. These are extreme cases, but they highlight some lesser-studied evaluation criteria for vehicles: Does the processing power influence the choice of an electric vehicle? Does the vehicle’s electronic equipment support future upgrades? For example, is the ADS capable of running software for the following 3–5 years? Is the cost of an update worthwhile in terms of benefits? Will there be “bugs” in the first update, or should one wait for the second update? These topics might become similar to current maintenance activities, such as changing the injector for better engine performance (better fuel consumption and fewer emissions).

All the presented aspects have a large impact on the debates surrounding autonomous shuttle buses designed for public transport. Ultimately, who is paying for the development of these platforms after the product is sold? What about software maintenance, electronics maintenance, upgrades, and compatibility? These are themes of interest to be analyzed in the future.

3.1. Autonomous Driving Algorithm

An ADS destined for public transportation is a hardware and software solution that can drive without the need for humans to use the vehicle control mechanisms (depending on the level of autonomy according to SAE J3016™ specifications), with remote vehicle monitoring carried out by monitoring personnel through a software application called a management platform [1,9].

The degree of autonomy of an autonomous shuttle bus destined for public transportation, which falls within Level 3/Level 4, allows the human operator not to intervene in the travel direction, vehicle speed, or braking (for all dynamic functions) while the vehicle is in operation. After stopping in the proximity of an object and after the object in question is removed from the proximity of the autonomous shuttle, the vehicle will resume its function of independent, autonomous driving without the intervention of the human operator. This is one of the existing solutions on the market in terms of active/inactive human intervention.

In the event of a problem (a system error, an incident, a deviation from the route, etc.) for Level 4, the ADS will have sufficient control to overcome this situation, and the human operator will only intervene if he or she determines that the vehicle must deviate from the perception and planning of its autonomous management system’s actions. When a problem occurs for a Level 3 system, the human operator will be the one to intervene to reactivate the ADS after the problem is solved.

The ADS is a substitute for a real driver: with total driving control delegated to the vehicle and the behavioral conditions described, it performs the following independent functions [87]:

Avoidance of obstacles: discovering existing objects in the movement direction (pedestrians, vehicles, etc.); safely stopping or changing lanes as appropriate;

Centered travel: ensuring the vehicle moves in the center of the travel direction at the maximum travel speed;

Changing travel direction: for reasons other than avoiding or overtaking existing objects (pedestrians, vehicles, etc.); this is done by changing the travel direction when possible;

Passing over several lanes: checking for existing objects (pedestrians, cars, etc.), changing travel direction, overtaking, and returning to the lane;

Passing over a single lane: checking for existing objects (pedestrians, vehicles, etc.), changing travel direction, overtaking when the maneuvering space allows, and returning to the lane;

Abandonment of overtaking: checking for existing objects (pedestrians, vehicles, etc.), changing the travel direction, abandoning the overtaking maneuver in the event of an unforeseen situation, and returning to the lane;

Complete overtaking: carrying out and completing the overtaking maneuver when the maneuvering space allows, and returning to the lane;

Being overtaken: when another vehicle overtakes, avoiding any increase in travel speed and maintaining a safe distance from the overtaking vehicle;

Maintaining distance: ensuring and maintaining a maximum safe distance from existing objects (pedestrians, vehicles, etc.) in proximity to avoid any collisions;

Low speed operations: ensuring and maintaining a minimum safe distance from existing objects (pedestrians, vehicles, etc.) in proximity to avoid any collisions;

Interactions with other objects: avoiding interactions with other existing objects (pedestrians, vehicles, etc.) by keeping distance, adjusting movement speed, and passing or stopping as appropriate; and

Constant movement: in the travel direction when no object (pedestrians, vehicles, etc.) intervenes in the proximity of the vehicle.

The operation of the ADS uses a decision algorithm based on the following principles (Figure 17) [1,12,88]:

Perception of the AV’s position and of the objects’ positions in the environment;

Prediction/planning of the dynamic behaviors of the vehicle resulting from its perception correlated with movement/operation rules; and

Motion control: command and control of the propulsion systems, steering, and braking based on the decisions established in the planning stage.
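The three principles above form a pipeline in which each stage feeds the next. The following is a deliberately simplified sketch of that flow, not the decision algorithm of any shuttle discussed here; all names (`Obstacle`, `Command`, `perceive`, `plan`, `control`), the 10 m stopping threshold, and the throttle value are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Obstacle:
    distance_m: float      # distance ahead of the vehicle along its path


@dataclass
class Command:
    throttle: float        # 0.0 .. 1.0
    brake: float           # 0.0 .. 1.0


def perceive(raw_distances_m):
    """Perception: turn raw range readings into obstacle objects."""
    return [Obstacle(d) for d in raw_distances_m if d >= 0.0]


def plan(obstacles, stop_distance_m=10.0):
    """Prediction/planning: decide whether to cruise or stop (assumed rule)."""
    nearest = min((o.distance_m for o in obstacles), default=float("inf"))
    return "stop" if nearest < stop_distance_m else "cruise"


def control(decision):
    """Motion control: map the planned decision to actuator commands."""
    if decision == "stop":
        return Command(throttle=0.0, brake=1.0)
    return Command(throttle=0.3, brake=0.0)


def step(raw_distances_m):
    """One cycle of the perception -> planning -> control loop."""
    return control(plan(perceive(raw_distances_m)))


print(step([25.0, 8.0]))   # an object 8 m ahead triggers braking
print(step([]))            # a clear path keeps the vehicle cruising
```

Keeping the stages as separate functions mirrors the separation of modules described in the text, so that each stage can be replaced or tested independently.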

The main command and control parameters are collected in internal non-volatile memory as data from all sensors on the AV, namely:

The permanent position of the AV in real time, specifically a history of its movement;

Data on the main functional parameters of the propulsion, steering, and braking systems and a history of these systems’ operations (speed of movement, speed of the electric motor, consumption/electricity recovery, etc.);

Data on the main functional parameters of the comfort systems in the passenger compartment of the AV (lighting, air conditioning, etc.);

Data on the main events that took place during the entire period of the AV’s operation (abnormal operations, emergency stops, etc.); and

Data on the data flow of the video cameras.

The main functions of the command and control unit are the following [1]:

Logic and control—monitoring and diagnosing the autonomous operations of the main systems: propulsion, steering, and braking;

Logic and control—monitoring and diagnosing the autonomous operations of the auxiliary systems of lighting, door/ramp, comfort compartments for passengers, and multimedia;

Logic and command of the external access interlocks to the main/auxiliary systems for the safe operation of the AV; and

Interconnection with the management platform—recording and transmitting data, events, and operating errors to the platform.

All the information on object detection is used to manage system priorities and integrate the AV into road traffic through a programmed control area, in order to continue its movement in an autonomous driving regime. The vehicle’s movement speed can be adjusted to safely manage detected objects on the route via prediction. The AV accelerates/decelerates depending on the trajectory being followed and the movement speed of the dynamic objects. The planning of the dynamic behaviors allows the AV to interact with other traffic participants, preventing accidents and automatically adjusting travel speed. The planning features of this dynamic behavior are synchronized with the action control characteristics, activating the dynamic functions of the propulsion, steering, or braking systems and determining the best behavior of the AV according to any static or dynamic objects on the route.

The autonomous driving algorithm used on the NVidia drive platform (Figure 18) allows for the perception of existing objects (pedestrians, vehicles, etc.), travel routes, and traffic signals based on the data received from several types of sensors (LIDAR, radar, camera, GPS/GNSS, IMU, etc.). This platform employs a neural network that, based on the deep learning method, responds to the following objectives [58,89]:

Permanent development of the perception algorithms for existing objects (pedestrians, vehicles, etc.), commuting routes, and traffic signs;

Detection of static and dynamic objects (to avoid collisions), traffic lanes, and other traffic conditions;

Tracking detected objects (pedestrians, vehicles, etc.) from one frame to the next; and

Estimating the distances to detected objects.
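Tracking objects from one frame to the next amounts to deciding which detection in the current frame corresponds to which object in the previous frame. The sketch below uses greedy nearest-neighbour association purely as an illustration; it is not the neural-network approach of the platform described above, and the function name, the data layout, and the 2 m gating distance are assumptions.

```python
import math


def associate(tracks, detections, max_jump_m=2.0):
    """Greedy nearest-neighbour association between two frames.

    tracks:     dict mapping track id -> (x, y) position in the previous frame
    detections: list of (x, y) positions detected in the current frame
    Returns a dict of track id -> (x, y) for the current frame; detections
    that match no existing track are given new ids.
    """
    updated = {}
    unused = list(detections)
    for track_id, (tx, ty) in tracks.items():
        if not unused:
            break
        nearest = min(unused, key=lambda d: math.hypot(d[0] - tx, d[1] - ty))
        if math.hypot(nearest[0] - tx, nearest[1] - ty) <= max_jump_m:
            updated[track_id] = nearest
            unused.remove(nearest)
    next_id = max(list(tracks) + list(updated), default=-1) + 1
    for detection in unused:              # unmatched detections start new tracks
        updated[next_id] = detection
        next_id += 1
    return updated


previous = {0: (0.0, 0.0), 1: (10.0, 0.0)}
current = [(10.5, 0.0), (0.4, 0.1), (30.0, 30.0)]
print(associate(previous, current))
```

In this example the two existing objects keep their ids despite the changed detection order, and the far-away detection is registered as a new object.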

The ADS, regardless of the technical solution adopted to implement the functional algorithm, provides decision support for perception, vehicle movement, and stopping under maximum safety conditions, based on the data provided by the sensors. According to these data, the system determines the characteristics that define the path of the AV’s movement and regulates its speed so that it can stop under completely safe conditions depending on the route’s characteristics, the objects (pedestrians, vehicles, etc.) encountered on the route, and the points defined as stopping stations for boarding/disembarking the passengers. All these functions are characteristics of an autonomous driving solution destined for public transportation.

The functions of the ADS react to all the events and objects on the route, managing each of the scenarios to avoid an accident. These scenarios include the following:

The objects that appear on the route;

Respecting the traffic lanes;

The behavior of other vehicles in traffic;

The management of crossing priorities (interpretation of traffic light colors or when they are flashing yellow) at intersections or when changing the direction of travel; and

Rules of road traffic and traffic signs on the route.

The autonomous shuttle buses analyzed here (Level 3/Level 4) destined for public transportation are permanently monitored through the management platform by a human operator, who, in potentially dangerous situations, will command the autonomous shuttle bus to stop. In this situation, the ADS is automatically deactivated, at which point the autonomous shuttle bus can only be driven manually by the human operator on board through a mobile control device. Autonomous shuttle buses are also equipped with an “operator” button, which, when actuated, disables the autonomous driving mode and activates the manual driving mode. This solution is a particular design option in vehicles designed for public transportation.

3.2. The Electronic Control Unit of the Autonomous Driving Algorithm

The electronic control unit (ECU) is the central component of the ADS that receives information from sensors, actuators, etc. Based on the specifications of the autonomous system’s control algorithm, the ECU generates the command and control signals of the main drive systems: the propulsion system, the steering system, and the braking system. The sensors fitted to the AV perform a complete scan of the environment to distinguish all types of objects (pedestrians, vehicles, environment, particular roads, etc.), both static and dynamic, during both day and night and under low visibility conditions.

The information from the sensors is collected by the ADS of the vehicle, which analyzes these data and controls the vehicle’s movement to ensure the safest conditions for its passengers using a series of actuators belonging to the propulsion, steering, and braking systems. This information is provided by:

Users/passengers, who provide data on the selected (defined) destination through the control panel in the on-demand mode;

Location sensors (LIDAR, radar, cameras, etc.) that allow the vehicle to be located on a defined map using the data from these sensors; and

Position sensors (GPS/GNSS, IMU, odometers) that provide data on the AV’s position.

The software architecture of the ECU of the ADS can be built on AUTOSAR, an open and standardized platform organized into layers, whose application layer can integrate and manage software components that perform different functions and come from different manufacturers, so that they can use the microcontroller resources and accept regular firmware updates (Figure 19) [60,90].

The vehicle platform is the basic model (open vehicle platform) for the ECU of the ADS. The platform contains internal drivers, which include software modules with direct access to the microcontroller and internal modules.

The hardware platform includes sensors, regardless of their location (internal/external) (human machine interface (HMI) devices, LIDAR, radar, cameras, GPS/GNSS, etc.), with the appropriate drivers. This layer provides an application programming interface (API) for access and connection to the open vehicle platform.

The software platform includes the real-time operating system (RTOS) and runtime framework, which comprise the layer that provides the specific functions for the application software (between the software components and/or sensor components). The software platform components include local maps and localization (data generation and localization), a data integrator (collection and multiplexing of the information provided from the sensors), perception of object position, the prediction of object behavior, planning (planning the optimal trajectory), motion control (AV movement control), HMI control (control of the device’s interaction with the human operator), and a V2X adapter (connection with the vehicle-to-everything units, V2X).

The cloud platform provides a suite of cloud computing services running on the same infrastructure with a series of modular services that require a large storage space with high data traffic. These services feature a local HD map (local high definition maps), big data (reference data), security (secure protocols), AI (AI algorithms), simulation (virtual model simulations for prediction and planning validation), and V2X (transmission of the information from a vehicle to any entity that could influence the vehicle and vice versa).

V2X technology involves the exchange of large volumes of data between AVs and all intelligent “entities” around the vehicle, and these data transfers can be done in real time only through 5G technology. Increasing the communication capabilities, by expanding the volume of transferred data and shortening the response time, will improve traffic flow, allowing AVs to travel at higher speeds and to reduce speed in a timely manner when the situation requires it. As a result, some developers/manufacturers of AVs have already integrated 5G connectivity solutions on some vehicle models (series production or prototype), anticipating the technological evolution of the coming years [60,91]. Compared to the cloud platform and the suite of services running on it, 5G technology allows the use of an edge computing solution [92], which offers low network latency (5G latency of 1 ms vs. 4G latency of 50 ms [93]), allowing local data processing without the need for cloud uploading.
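The practical meaning of these latency figures can be illustrated by the distance a vehicle covers while a message is still in transit. The sketch below is simple arithmetic under an assumed speed of 36 km/h (10 m/s), chosen only to give round numbers; the function name is hypothetical.

```python
def distance_during_latency_m(speed_kmh: float, latency_ms: float) -> float:
    """Distance travelled (in metres) during a given network latency."""
    speed_mps = speed_kmh / 3.6            # km/h -> m/s
    return speed_mps * (latency_ms / 1000.0)


ASSUMED_SPEED_KMH = 36.0                   # hypothetical speed, 10 m/s
for label, latency_ms in (("5G", 1.0), ("4G", 50.0)):
    travelled = distance_during_latency_m(ASSUMED_SPEED_KMH, latency_ms)
    print(f"{label}: {travelled:.2f} m travelled during {latency_ms:.0f} ms")
```

At this assumed speed, the vehicle moves about 1 cm during a 1 ms delay and about half a meter during a 50 ms delay, which shows why low latency matters for timely speed reduction.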

Edge computing is a computing and data storage solution that complements cloud computing systems by processing data at the edge of the network (using network resources), that is, outside the cloud computing environment, thus reducing the time required to process these data. This solution requires a high network communication speed and a low latency in data processing, conditions that can be ensured by implementing 5G technology. This approach allows autonomous vehicles interconnected in a network (V2V) to share their data on traffic, route, etc., in real time.

The operation of the command and control unit as a central component of the autonomous driving system is presented in detail below (Figure 20) [94,95,96,97].

Autonomous driving is based on the real-time position of the AV, which is generated by the localization module from the sensor block data that are collected and managed by the data integrator block. These data are interpreted and compared with the reference data for the geographical coordinates (latitude, longitude, and altitude) of the selected and implemented route (local map), which are permanently updated with the data from the sensor block and stored in a memory block (the local map generator) [17,98].

The perception module receives the location data of the AV from the localization block and the data from the sensor block for the objects in proximity to the AV (pedestrians, vehicles, etc.). This module detects (object detector) and classifies (object classifier) static and dynamic objects in proximity to the AV. This module estimates whether the dynamic objects in proximity to the AV can intersect their path with the route selected for travel. Based on the movement speed of these objects (the speed determined by the radar sensor) relative to the movement speed of the AV, the perception module will determine if there is a risk of collision between the vehicle and the monitored object.
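A common way to turn relative speed into a collision-risk decision is the time-to-collision (TTC) measure. The sketch below covers only the simplest case, an object ahead of the AV moving in the same direction, and is an illustration rather than the method of the perception module described above; the function names and the 3 s threshold are assumptions.

```python
def time_to_collision_s(gap_m: float, av_speed_mps: float, object_speed_mps: float) -> float:
    """Time until the AV reaches an object ahead travelling in the same direction.

    Returns infinity when the gap is not closing (the object is as fast or faster).
    """
    closing_speed = av_speed_mps - object_speed_mps
    if closing_speed <= 0:
        return float("inf")
    return gap_m / closing_speed


def collision_risk(gap_m, av_speed_mps, object_speed_mps, threshold_s=3.0):
    """Flag a risk when the time to collision drops below an assumed threshold."""
    return time_to_collision_s(gap_m, av_speed_mps, object_speed_mps) < threshold_s


# Hypothetical example: AV at 6 m/s approaching a pedestrian walking at 1 m/s.
print(time_to_collision_s(20.0, 6.0, 1.0), collision_risk(20.0, 6.0, 1.0))
print(time_to_collision_s(10.0, 6.0, 1.0), collision_risk(10.0, 6.0, 1.0))
```

With a closing speed of 5 m/s, a 20 m gap leaves 4 s and is not flagged, while a 10 m gap leaves only 2 s and is flagged under the assumed threshold.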

The prediction/planning module receives the perception data from the perception module. These data contain a map of the static and dynamic objects in proximity to the AV superimposed over the selected and implemented path (local map). Based on these data, the prediction/planning module will select the optimal trajectory (optimal trajectory) based on certain predetermined criteria (the trajectory evaluator) to steer the AV in a way that allows safe travel without collisions.
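The role of a trajectory evaluator can be sketched as scoring a set of candidate paths and keeping the best one that remains collision-free. The example below is a minimal illustration under stated assumptions: candidates are lists of (x, y) waypoints, safety is checked only at the waypoints (not along the segments between them), the only criterion is path length, and the 1.5 m safety margin is hypothetical.

```python
import math


def path_length(trajectory):
    """Total length of a trajectory given as a list of (x, y) waypoints."""
    return sum(math.dist(a, b) for a, b in zip(trajectory, trajectory[1:]))


def min_clearance(trajectory, obstacles):
    """Smallest distance between any waypoint and any obstacle position."""
    return min(
        (math.dist(p, o) for p in trajectory for o in obstacles),
        default=float("inf"),
    )


def select_trajectory(candidates, obstacles, safety_margin_m=1.5):
    """Return the shortest candidate that keeps the safety margin, or None."""
    safe = [t for t in candidates if min_clearance(t, obstacles) >= safety_margin_m]
    return min(safe, key=path_length) if safe else None


straight = [(0.0, 0.0), (5.0, 0.0), (10.0, 0.0)]
detour = [(0.0, 0.0), (5.0, 3.0), (10.0, 0.0)]
print(select_trajectory([straight, detour], obstacles=[(5.0, 0.5)]))
```

Returning `None` when no candidate is safe leaves the fallback (for example, stopping) to the caller, which matches the priority the text gives to travel without collisions.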

The motion control module transmits commands to the propulsion (velocity), steering (angle), and braking (brake) systems based on the data received from the localization, perception, and prediction/planning modules. The braking system (brake) can be operated in the case of an emergency by the monitoring personnel on the management platform through a secure virtual private network (VPN).

On the data bus, the ECU of the ADS records information on electricity consumption, indicating the recovered energy, the electric battery’s SOC, and other relevant data on variations in the functional parameters of the main and auxiliary systems.

The Baidu Apollo autonomous shuttle bus is equipped with an ECU of the ADS called an industrial PC (IPC), which receives data from the sensors through a control unit, called the Apollo sensor unit (ASU). The ASU collects data from the main sensors (LIDAR, radar, camera, IMU, GPS, and GNSS) via four controller area network (CAN) buses, processes the signals, and then sends them via a PCI Express interface to the IPC that manages the autonomous shuttle bus control (Figure 21) [60].

The IPC is a Nuvo-6108GC industrial computer in the following configuration: Xeon E3 V5 CPU, Intel C236 chipset, 32 GB DDR4 RAM, PCIe ×8 ports, PCIe ×16, Gigabit Ethernet, and a PCIex CAN card. The centerpiece is an ASUS NVidia GTX1080-A8G-Gaming graphics card, which supports the following AI technologies: VR, autonomous driving, and NVidia compute unified device architecture (CUDA) [60,99,100].

3.3. Safety and Cybersecurity

The safety protocols implemented in the command and control of the ADS comply with the following criteria [12]:

The AV will stop if a malfunction of any of the ADS sensors is detected;

The AV will stop if a communication error is detected between the components of the ADS;

The AV will stop if the power supply of the ADS is stopped; and

The AV will stop if an anomaly is detected by the ECU.
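The four stop criteria above can be read as a simple fail-safe rule: any single fault is enough to stop the vehicle. The sketch below expresses that rule in code as an illustration only; the `AdsStatus` fields and function names are hypothetical and do not correspond to any real ADS interface.

```python
from dataclasses import dataclass


@dataclass
class AdsStatus:
    sensors_ok: bool          # no sensor malfunction detected
    communication_ok: bool    # no communication error between ADS components
    power_ok: bool            # ADS power supply is present
    ecu_anomaly: bool         # the ECU has detected an anomaly


def stop_reasons(status: AdsStatus) -> list:
    """List every criterion that currently requires the AV to stop."""
    reasons = []
    if not status.sensors_ok:
        reasons.append("sensor malfunction")
    if not status.communication_ok:
        reasons.append("communication error")
    if not status.power_ok:
        reasons.append("power supply lost")
    if status.ecu_anomaly:
        reasons.append("ECU anomaly")
    return reasons


def must_stop(status: AdsStatus) -> bool:
    """Fail-safe rule: a single violated criterion is sufficient to stop."""
    return bool(stop_reasons(status))


healthy = AdsStatus(sensors_ok=True, communication_ok=True, power_ok=True, ecu_anomaly=False)
faulty = AdsStatus(sensors_ok=False, communication_ok=True, power_ok=True, ecu_anomaly=True)
print(must_stop(healthy), stop_reasons(healthy))
print(must_stop(faulty), stop_reasons(faulty))
```

Collecting the reasons rather than returning only a flag makes it possible to report the cause of the stop to the management platform.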

The protocols implemented for the verification, evaluation, and validation of the functional safety of the ADS are based on a set of steps for the safety criteria, which correspond to the performance criteria (Table 8) [101]. These protocols aim at achieving a balance between the functional safety criteria and the performance criteria, with the functional safety criteria always taking priority. Thus, according to the defined protocols, the ADS will promptly order the AV to stop upon any deviation from these criteria.

The steps for the safety criteria, corresponding to the performance criteria, are as follows [101,102]:

Safety level: The ADS must recognize the limits for which the safe transfer from manual driving to autonomous driving is allowed and vice versa under minimum risk conditions;

Cybersecurity: Ensuring the protection of the electronic systems, communication networks, control algorithms, and software products against computer attacks, especially unauthorized access;

Safety assessments: Evaluating and validating the proper functioning of all vehicle systems in order to ensure that all the general safety objectives are met;

Safe operation: Ensuring that the operating state of the ADS corresponds to a level of autonomy that allows the safe operation of the AV;

Operational domain: The operating conditions under which the management system is designed to operate, and which reflect the technological capacity of the ADS;

Traffic behavior: The traffic behavior of the ADS must be predictable for all road traffic participants, and the traffic rules must be understood and respected by the system;

Manually assisted: The ADS controls the longitudinal or lateral movement of the vehicle (not both systems simultaneously) but is automatically deactivated via the driver’s intervention;

Partial automation: The ADS controls the longitudinal and lateral movement of the vehicle (both systems simultaneously) but is automatically deactivated when the driver intervenes;

Conditional automation: The ADS is activated by the driver and independently controls the movement of the vehicle. The ADS automatically deactivates in the event of an emergency situation or at the driver’s request;

High automation: The ADS is activated by the driver and independently controls the movement of the vehicle. The ADS handles emergency situations by itself and is automatically deactivated at the driver’s request; and

Fully autonomous: The ADS activates automatically according to the implemented autonomous driving algorithm and independently controls the movement of the vehicle. The ADS handles emergency situations by itself and is automatically deactivated at the driver’s request.
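
The five automation levels listed above differ in only a few properties, which can be summarized in a small lookup table. The sketch below is one possible encoding; the names `Level`, `Capabilities`, and `driver_handles_emergencies` are hypothetical, and the boolean flags are our reading of the list rather than part of the cited protocols.

```python
from dataclasses import dataclass
from enum import Enum


class Level(Enum):
    MANUALLY_ASSISTED = 1
    PARTIAL = 2
    CONDITIONAL = 3
    HIGH = 4
    FULL = 5


@dataclass(frozen=True)
class Capabilities:
    both_axes: bool            # longitudinal and lateral control at once
    independent_control: bool  # ADS controls the movement independently
    handles_emergencies: bool  # ADS resolves emergencies by itself
    self_activating: bool      # ADS activates without driver action


CAPABILITIES = {
    Level.MANUALLY_ASSISTED: Capabilities(False, False, False, False),
    Level.PARTIAL: Capabilities(True, False, False, False),
    Level.CONDITIONAL: Capabilities(True, True, False, False),
    Level.HIGH: Capabilities(True, True, True, False),
    Level.FULL: Capabilities(True, True, True, True),
}


def driver_handles_emergencies(level: Level) -> bool:
    """True when the ADS hands emergencies back to the driver."""
    return not CAPABILITIES[level].handles_emergencies
```

With this encoding, `driver_handles_emergencies(Level.CONDITIONAL)` is `True`, whereas the same call for `Level.HIGH` is `False`.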

AVs are equipped with hundreds of sensors and dozens of ECUs, which are controlled by software and interconnected through local interconnect network (LIN), CAN, FlexRay, and Media Oriented Systems Transport (MOST) buses. The relevant Ethernet communication networks must be protected using cybersecurity solutions. The AV is commanded and controlled by a virtual driver, that is, an ECU running the ADS algorithm.

The future implementation of 5G technology in the management and control of AVs will shorten the response time of the ADS with respect to both the safety protocols and the safety criteria.

This aspect requires a strict computer security protocol, particularly as a protective measure against unauthorized access to the AV’s information and communication network. Access to this network is possible only from the management platform, through a secure VPN channel over a mobile data connection (3G/4G/5G), and the information transmitted on this network is encrypted.

When several AVs are registered on the same management platform, communication with each autonomous shuttle bus is carried out on a separate VPN channel in order to ensure the security of the entire fleet in case of unauthorized access. A high level of protection against unauthorized access to safety-critical systems requires that control of the actuation systems within the autonomous driving system (namely the propulsion system, the steering system, and the braking system) be completely separate from the infotainment system, regardless of any open Internet connection (Table 9) [101,103,104].
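
The two protective measures described above, one channel per vehicle and the separation of actuation from infotainment, can be sketched as follows. This is only an illustration under stated assumptions: the random token stands in for a VPN channel (no real VPN is configured), and `FleetRegistry`, `gateway_allows`, and the domain name `"ads"` are hypothetical.

```python
import secrets

# Actuation systems named in the text; the domain names are hypothetical.
ACTUATION_SYSTEMS = frozenset({"propulsion", "steering", "braking"})


class FleetRegistry:
    """Gives every registered vehicle its own channel identifier."""

    def __init__(self) -> None:
        self._channel_by_vehicle: dict[str, str] = {}

    def register(self, vehicle_id: str) -> str:
        if vehicle_id in self._channel_by_vehicle:
            raise ValueError(f"{vehicle_id} is already registered")
        channel = secrets.token_hex(16)
        # Regenerate in the unlikely event of a collision, so that
        # no two vehicles ever share a channel.
        while channel in self._channel_by_vehicle.values():
            channel = secrets.token_hex(16)
        self._channel_by_vehicle[vehicle_id] = channel
        return channel

    def is_authorized(self, vehicle_id: str, channel: str) -> bool:
        expected = self._channel_by_vehicle.get(vehicle_id)
        if expected is None:
            return False
        # Constant-time comparison of the two identifiers.
        return secrets.compare_digest(expected.encode(), channel.encode())


def gateway_allows(source_domain: str, target_system: str) -> bool:
    """Only the ADS domain may command an actuation system."""
    if target_system in ACTUATION_SYSTEMS:
        return source_domain == "ads"
    return True
```

Because each shuttle holds a different identifier, a channel obtained from one vehicle is rejected for every other vehicle in the fleet, and a message from the infotainment domain never reaches the propulsion, steering, or braking systems.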

3.4. Route Specifications

For AVs to operate safely, the ECU of the ADS analyzes and processes data from the sensors. Then, using the decision algorithms, the ECU creates a virtual model of the environment in which the AV moves. This virtual environment reproduces the following:

The conditions and nature of the road, including road signs/signals, speed limits, the widths of the roads, and the traffic conditions;

The geographical area, the longitudinal/transverse inclination of the road, the altitude profile of the route, etc.;

Climate, atmospheric conditions, ambient brightness (day/night), air temperature, and precipitation (fog, rain, snow, etc.); and

Operational safety measures.

The reference data on the geographical coordinates (latitude, longitude, and altitude) of the selected and implemented routes (local map) are stored in a memory block of the command and control unit and are continuously updated with data from the sensors or through periodic updates. Once the selected route has been programmed, the AV can travel only on this route. The vehicle is designed to determine how to traverse the selected route and to carry out transitions with the least amount of risk, thereby facilitating safe stopping.
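
Restricting the AV to a programmed route amounts to a geofence check against the stored coordinates. The sketch below is a simplified illustration: it measures the great-circle (haversine) distance to the nearest stored waypoint rather than to the route segments, ignores altitude, and uses a hypothetical tolerance of 5 m. The function names are assumptions.

```python
import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in metres between two points in degrees."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = (math.sin(dphi / 2) ** 2
         + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2)
    return 2 * EARTH_RADIUS_M * math.asin(min(1.0, math.sqrt(a)))


def on_route(position, route, tolerance_m=5.0):
    """True if the position lies within tolerance_m of a stored waypoint."""
    lat, lon = position
    return any(
        haversine_m(lat, lon, wlat, wlon) <= tolerance_m
        for wlat, wlon in route
    )
```

A production system would measure the distance to the route polyline and account for positioning uncertainty; the waypoint-based check is kept here only for brevity.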

5G technology, by transmitting real-time weather data to the ADS, offers solutions for autonomous driving in the event of difficult climatic phenomena (reduced visibility due to fog, rain, snow, etc.) and adverse road conditions (wet, covered with ice or snow, etc.).

Two navigation techniques are available for AV orientation: 1) SLAM, which uses the sensors to monitor the environment and performs perception and localization in real time, and 2) HD maps, which store a detailed representation of the environment onto which the existing objects (pedestrians, vehicles, etc.) are overlaid [105]. The most advantageous orientation solution combines real-time perception and localization (SLAM) with HD maps.

The methodology by which an extended map of the environment is generated (a full 3D map) is presented in Figure 22. Data from the LIDAR sensors (2D and 3D) generate a virtual image of the environment in which the AV moves, thereby providing an image formed by static and dynamic objects. The real-time position of the AV is determined with maximum precision using the geographical coordinates provided by the GNSS/GPS sensor.

An important aspect in digitizing and implementing the selected route is the quality of the GPS/GNSS signal, which must remain as high as possible along the entire route to ensure safe movement of the AV [71,106]. This aspect can be improved by using a 5G mobile data connection to transfer GNSS/RTK differential corrections from base stations to AVs. The two superimposed LIDAR maps (2D and 3D) generate a local map containing all the existing objects (pedestrians, vehicles, etc.). Superimposing this local map on the map of the selected route, which is stored in the internal memory of the command and control unit of the ADS, generates an extended map of the environment (a full 3D map) [107].
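
The layering described above can be sketched with a simple grid representation. This is an illustration only: real systems operate on point clouds and occupancy grids, whereas the sketch assumes that each layer is a dictionary mapping a grid cell to a label. All function names are hypothetical.

```python
def merge_layers(*layers):
    """Overlay grid layers; later layers overwrite earlier ones."""
    merged = {}
    for layer in layers:
        merged.update(layer)
    return merged


def build_extended_map(route_cells, lidar_2d, lidar_3d):
    """Combine the stored route with the local map built from LIDAR data."""
    # Step 1: the 2D and 3D LIDAR layers form the local map of objects.
    local_map = merge_layers(lidar_2d, lidar_3d)
    # Step 2: the local map is superimposed on the stored route.
    route_layer = {cell: "route" for cell in route_cells}
    return merge_layers(route_layer, local_map)


def occupied_route_cells(route_cells, extended_map):
    """Route cells that are currently covered by a detected object."""
    return [cell for cell in route_cells if extended_map[cell] != "route"]
```

For instance, with a route over cells `(0, 0)`, `(1, 0)`, and `(2, 0)` and a pedestrian detected in cell `(1, 0)`, `occupied_route_cells` returns `[(1, 0)]`, which is the information the ADS needs to slow down or stop.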

4. Legal Framework

Ever since there have been means of transport that use external power sources, there has been a need for relevant controls and rules, even though such regulations are sometimes inappropriate and disproportionate to the harmful potential of the means of transport. For instance, the “Locomotive Acts” passed by the British Parliament in 1865 stipulated that one could drive a steam-powered carriage or motor car at no more than 2 mph inside town and 4 mph outside town, and only if a person walked in front of the vehicle blowing a whistle and carrying a red flag.

Read today, this legislation might seem to be a joke, but it was, nevertheless, enacted by the British Parliament. The need to change and update laws and their foundations in line with current technology is felt more and more strongly, as technology changes dramatically every 3–5 years. Such legislation is sometimes made post factum, as reality fills the empty spaces with factors that no legislative system has ever considered, which sometimes leads to overregulation.

For autonomous driving, the technology is mature enough to require dedicated legislation, but in order to generate such a movement, there is a need for lobbying and persuasion so that the relevant producers can change the status of their AVs from research and development (R&D) products to accepted products with free access to consumers. Here, the three major players in this market (the United States, Europe, and China) have not yet come to a common decision. For the moment, these products, although active on public roads, are neglected because of the small number of units.

In retrospect, the British Parliament in 1865 was trying to protect horses and carriages against the “iron beasts”. However, in less than 100 years, there were no more horses on public roads in the UK (there is now a policy against the access of horses and carriages on certain public roads). This will also be the case for AVs. Presently, there are some fixed regulations preventing vehicles from being “driven” without a driver, but 100 years from now, vehicles driven by human drivers will not be allowed on certain roads because they could disrupt the rhythmicity and traffic continuity afforded by AVs.

For now, the legal aspects related to public transportation focus on safety, public accessibility, rhythmicity, and emissions. These aspects can be naturally achieved using an autonomous shuttle bus. However, since there is no driver who is responsible for all these factors, the local/regional/national legislation has no ability to offer derogations from existing laws that connect drivers to their vehicles. Therefore, new legislation must be developed to provide a clear framework for vehicle manufacturers to obtain conformity approval for their products. A new topic arising from the lack of drivers in AVs is being debated for the case of crashes/accidents. This debate concerns the responsibility and legal coverage associated with an autonomous driving license and with the software code, as well as the related insurance aspects. This uncharted legal territory must soon be populated with rules that are flexible enough to remain valid for at least several decades.

The legislative framework for regulating AV circulation on public roads is based on two essential components: the rules of behavior in traffic and the regulation of the technical equipment attached to these vehicles. According to [108], in order to harmonize the specifications of Regulation 79/2008 [109] with AV traffic conditions, it is necessary that the functions of automation, the monitoring of the driver’s attention, and the emergency maneuvers needed to ensure road safety are proportional to the degree of automation. According to such regulations, the ADS can allow a vehicle to follow a defined trajectory or modify its trajectory in response to signals initiated and transmitted from outside the system. When the ADS is active, the driver will not necessarily exercise primary control of the vehicle.

4.1. United States

In the United States (U.S.), the regulations regarding the safety of AVs are subject to the federal government, while the registration of AVs and their operation on public roads are subject to the laws of the state governments. In 2017, the U.S. Department of Transportation (DOT) and the National Highway Traffic Safety Administration (NHTSA) released the “Automated Driving Systems 2.0: A Vision for Safety” guidance, which was designed to promote and improve the safety, mobility, and efficiency of AVs [104]. The safety elements set out by the NHTSA for AVs refer in particular to the following issues [110]:

System safety: compliance with and the implementation of International Organization for Standardization (ISO) and SAE International safety standards;

Operational design domain (ODD): documentation on at least the following information: types of roads, geographical area, speed of travel, environmental conditions, and other constraints;

Object and event detection and response: the detection by the AV of any static or dynamic object that appears, and its reaction to that object, regardless of the events;

Fallback (minimal risk condition): ensuring the minimum risk conditions of the ADS;

Validation methods: validation methods of the ADS control algorithm;

Human–machine interface (HMI): the interaction between the AV and the driver;

Cybersecurity: ensuring the security protocols implemented in the control of the ADS;

Crashworthiness: ensuring the protection of passengers in the event of an accident (active and passive safety systems);

Post-crash ADS behavior: ensuring the protection of passengers after an accident (disconnection of electricity and main systems);

Data recording: recording the data of the monitoring events and operating errors;

Consumer education and training: programs for all the personnel involved in the servicing (operating) of AVs; and

Federal, state, and local laws: compliance with federal, state, and local laws/regulations applicable to the operation of AVs.
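
Two of the elements above, the operational design domain (ODD) and the fallback to a minimal risk condition, interact directly: when the current conditions leave the documented ODD, the ADS must fall back. The sketch below illustrates this interaction under stated assumptions. The class `OperationalDesignDomain`, the function `select_mode`, and all parameter values are hypothetical and are not taken from the NHTSA guidance.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OperationalDesignDomain:
    road_types: frozenset   # permitted types of roads
    max_speed_kmh: float    # permitted speed of travel
    weather: frozenset      # permitted environmental conditions
    lat_range: tuple        # geographical area: (min_lat, max_lat)
    lon_range: tuple        # geographical area: (min_lon, max_lon)

    def contains(self, road_type, speed_kmh, weather, lat, lon) -> bool:
        """True if the current conditions lie inside the documented ODD."""
        return (
            road_type in self.road_types
            and speed_kmh <= self.max_speed_kmh
            and weather in self.weather
            and self.lat_range[0] <= lat <= self.lat_range[1]
            and self.lon_range[0] <= lon <= self.lon_range[1]
        )


def select_mode(odd: OperationalDesignDomain, **conditions) -> str:
    """Fall back to a minimal risk condition outside the ODD."""
    if odd.contains(**conditions):
        return "AUTONOMOUS"
    return "MINIMAL_RISK_CONDITION"
```

A shuttle whose ODD covers urban roads up to 25 km/h in clear or rainy weather would, for example, switch to `"MINIMAL_RISK_CONDITION"` as soon as snow is reported, even if its position and speed remain within limits.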

The number of states that have adopted legislation on AVs has gradually increased in recent years. Figure 23 shows the current situation in the states that have adopted such legislation (blue), issued executive orders (green), adopted legislation and issued executive orders (orange), or have not taken any standalone action on motor vehicles (gray) [111]. For state abbreviations, we used the traditional US abbreviations [112].

According to the Constitution of the United States of America, each state has an independent body of laws that aims to promote its own interests. Consequently, the legislation concerning AVs differs significantly from one state to another. Thus, while some states (California, Connecticut, D.C., Florida, Massachusetts, New Mexico, New York, South Dakota, and Washington) clearly require a human operator to be on board an AV throughout its test run, other states (Arizona, Missouri, North Carolina, Tennessee, and Texas) allow AVs to move on public roads without being accompanied by a human operator [113].

The concept of an AV itself is perceived and defined differently in each U.S. state. Thus, the Code of the District of Columbia, section 23A, defines AVs as vehicles capable of navigating district roads and interpreting traffic control devices without an active driver operating any of the vehicle control systems [114]. The State of Illinois, in Senate Bill 791/2017, defines an AV as a vehicle equipped with an ADS (hardware and software) that is capable of performing the entire dynamic driving task on a sustained basis without being limited to a particular operational area [115]. Louisiana, in House Bill 1143/2016, defines autonomous technology as technology that is installed on a vehicle and ensures one’s ability to drive a vehicle under high or complete automation without oversight by a human operator, including the ability to automatically bring the vehicle to a minimum state of risk in the event of a critical failure of the vehicle [116]. Georgia, in Senate Bill 219/2017, defines the minimum risk condition as a low-risk mode of operation in which an AV that operates without a driver provides reasonably safe conditions, namely the complete stopping of the vehicle in the event of a malfunction of the ADS, that is, a failure that leaves the vehicle unable to fulfill the full dynamic driving task [117].