A Survey of Deep Learning-Based Human Activity Recognition in Radar

Abstract

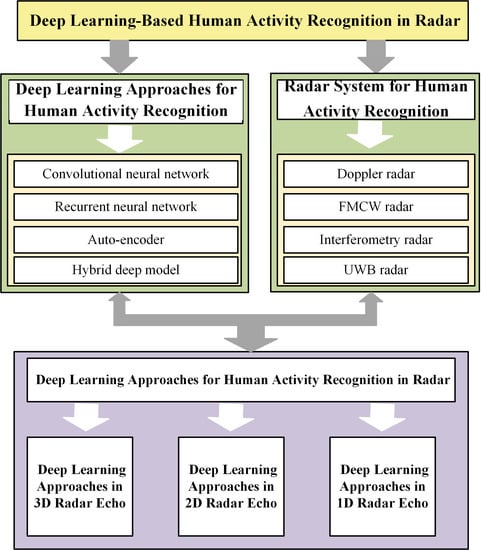

1. Introduction

2. Deep Learning Techniques

2.1. Convolutional Neural Network

2.2. Recurrent Neural Network

2.3. Auto-Encoder

2.4. Hybrid Deep Model

3. Radar System for Human Activity Recognition

3.1. Continuous-Wave (CW) Radar

3.2. Ultra-Wide Band Radar

4. Deep Learning Approaches for Human Activity Recognition in Radar

4.1. Deep Learning Approaches in 3D Radar Echo

4.2. Deep Learning Approaches in 2D Radar Echo

4.3. Deep Learning Approaches in 1D Radar Echo

5. Future Directions

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Full Name |
|---|---|
| HAR | Human activity recognition |
| FMCW | Frequency-modulated continuous-wave |
| CV | Computer vision |
| CNN | Convolutional neural network |
| TSN | Temporal segment network |
| ML | Machine learning |
| SVM | Support vector machine |
| DTW | Dynamic time warping |
| DL | Deep learning |
| NLP | Natural language processing |
| RNN | Recurrent neural network |
| LSTM | Long short-term memory |
| RD | Range–Doppler |
| HRRP | High resolution range profile |
| CTC | Connectionist temporal classification |

References

1. Cristani, M.; Raghavendra, R.; Bue, A.D.; Murino, V. Human behavior analysis in video surveillance: A Social Signal Processing perspective. Neurocomputing 2013, 100, 86–97.
2. Qian, W.; Li, Y.; Li, C.; Pal, R. Gesture recognition for smart home applications using portable radar sensors. In Proceedings of the 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 6414–6417.
3. Wang, J.L.; Singh, S. Video analysis of human dynamics—A survey. Real-Time Imaging 2003, 9, 321–346.
4. Molchanov, P.; Gupta, S.; Kim, K.; Kautz, J. Hand gesture recognition with 3D convolutional neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015; pp. 1–7.
5. Chen, L.; Zhou, M.; Wu, M.; She, J.; Liu, Z.; Dong, F.; Hirota, K. Three-layer Weighted Fuzzy Support Vector Regression for Emotional Intention Understanding in Human-Robot Interaction. IEEE Trans. Fuzzy Syst. 2018, 26, 2524–2538.
6. Bulling, A.; Blanke, U.; Schiele, B. A tutorial on human activity recognition using body-worn inertial sensors. ACM Comput. Surv. (CSUR) 2014, 46, 33.
7. Huang, X.; Dai, M. Indoor Device-Free Activity Recognition Based on Radio Signal. IEEE Trans. Veh. Technol. 2017, 66, 5316–5329.
8. Jalal, A.; Kim, Y.H.; Kim, Y.J.; Kamal, S.; Kim, D. Robust human activity recognition from depth video using spatiotemporal multi-fused features. Pattern Recognit. 2017, 61, 295–308.
9. Jalal, A.; Kamal, S.; Kim, D. A Depth Video-based Human Detection and Activity Recognition using Multi-features and Embedded Hidden Markov Models for Health Care Monitoring Systems. Int. J. Interact. Multimed. Artif. Intell. 2017, 4, 54–62.
10. Yang, X.; Tian, Y. Super normal vector for human activity recognition with depth cameras. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 1028–1039.
11. Simonyan, K.; Zisserman, A. Two-stream convolutional networks for action recognition in videos. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 568–576.
12. Wang, L.; Xiong, Y.; Wang, Z.; Qiao, Y.; Lin, D.; Tang, X.; Van Gool, L. Temporal segment networks: Towards good practices for deep action recognition. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 20–36.
13. Ren, Y.; Zhu, C.; Xiao, S. Deformable Faster R-CNN with Aggregating Multi-Layer Features for Partially Occluded Object Detection in Optical Remote Sensing Images. Remote Sens. 2018, 10, 1470.
14. Markman, A.; Shen, X.; Javidi, B. Three-dimensional object visualization and detection in low light illumination using integral imaging. Opt. Lett. 2017, 42, 3068–3071.
15. Bouachir, W.; Gouiaa, R.; Li, B.; Noumeir, R. Intelligent video surveillance for real-time detection of suicide attempts. Pattern Recognit. Lett. 2018, 110, 1–7.
16. Reyes-Ortiz, J.L.; Oneto, L.; Samà, A.; Parra, X.; Anguita, D. Transition-aware human activity recognition using smartphones. Neurocomputing 2016, 171, 754–767.
17. Liu, Y.; Nie, L.; Liu, L.; Rosenblum, D.S. From action to activity: Sensor-based activity recognition. Neurocomputing 2016, 181, 108–115.
18. Ronao, C.A.; Cho, S.B. Human activity recognition with smartphone sensors using deep learning neural networks. Expert Syst. Appl. 2016, 59, 235–244.
19. Shoaib, M.; Bosch, S.; Incel, O.D.; Scholten, H.; Havinga, P.J. Complex human activity recognition using smartphone and wrist-worn motion sensors. Sensors 2016, 16, 426.
20. Le, H.T.; Phung, S.L.; Bouzerdoum, A. Human Gait Recognition with Micro-Doppler Radar and Deep Autoencoder. In Proceedings of the IEEE 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 3347–3352.
21. Lin, Y.; Le Kernec, J.; Yang, S.; Fioranelli, F.; Romain, O.; Zhao, Z. Human Activity Classification With Radar: Optimization and Noise Robustness with Iterative Convolutional Neural Networks Followed with Random Forests. IEEE Sens. J. 2018, 18, 9669–9681.
22. Shao, Y.; Guo, S.; Sun, L.; Chen, W. Human Motion Classification Based on Range Information with Deep Convolutional Neural Network. In Proceedings of the International Conference on Information Science and Control Engineering (ICISCE), Changsha, China, 21–23 July 2017; pp. 1519–1523.
23. Chen, Z.; Li, G.; Fioranelli, F.; Griffiths, H. Personnel Recognition and Gait Classification Based on Multistatic Micro-Doppler Signatures Using Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 669–673.
24. Chen, V.C.; Li, F.; Ho, S.S.; Wechsler, H. Micro-Doppler effect in radar: Phenomenon, model, and simulation study. IEEE Trans. Aerosp. Electron. Syst. 2006, 42, 2–21.
25. Zenaldin, M.; Narayanan, R.M. Radar micro-Doppler based human activity classification for indoor and outdoor environments. In Proceedings of the SPIE Conference on Radar Sensor Technology XX, Baltimore, MD, USA, 18–21 April 2016.
26. Qi, F.; Lv, H.; Liang, F.; Li, Z.; Yu, X.; Wang, J. MHHT-based method for analysis of micro-Doppler signatures for human finer-grained activity using through-wall SFCW radar. Remote Sens. 2017, 9, 260.
27. Smith, K.; Csech, C.; Murdoch, D.; Shaker, G. Gesture Recognition Using mm-Wave Sensor for Human-Car Interface. IEEE Sens. Lett. 2018, 2, 1–4.
28. Zhang, Z.; Tian, Z.; Zhou, M. Latern: Dynamic Continuous Hand Gesture Recognition Using FMCW Radar Sensor. IEEE Sens. J. 2018, 18, 3278–3289.
29. Kim, Y. Detection of eye blinking using Doppler sensor with principal component analysis. IEEE Antennas Wirel. Propag. Lett. 2015, 14, 123–126.
30. Kim, Y.; Ling, H. Human Activity Classification Based on Micro-Doppler Signatures Using a Support Vector Machine. IEEE Trans. Geosci. Remote Sens. 2009, 47, 1328–1337.
31. Zhou, Z.; Cao, Z.; Pi, Y. Dynamic Gesture Recognition with a Terahertz Radar Based on Range Profile Sequences and Doppler Signatures. Sensors 2017, 18, 10.
32. Wang, J.; Chen, Y.; Hao, S.; Peng, X.; Hu, L. Deep learning for sensor-based activity recognition: A survey. Pattern Recognit. Lett. 2018, 119, 3–11.
33. Deng, L.; Yu, D. Deep learning: Methods and applications. Found. Trends Signal Process. 2014, 7, 197–387.
34. LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436.
35. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the National Conference on Artificial Intelligence, Phoenix, AZ, USA, 12–17 February 2016; pp. 4278–4284.
36. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.
37. Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.
38. Kang, M.; Ji, K.; Leng, X.; Lin, Z. Contextual region-based convolutional neural network with multilayer fusion for SAR ship detection. Remote Sens. 2017, 9, 860.
39. Lin, Z.; Ji, K.; Leng, X.; Kuang, G. Squeeze and Excitation Rank Faster R-CNN for Ship Detection in SAR Images. IEEE Geosci. Remote Sens. Lett. 2019, 16, 751–755.
40. Lin, Z.; Ji, K.; Kang, M.; Leng, X.; Zou, H. Deep convolutional highway unit network for SAR target classification with limited labeled training data. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1091–1095.
41. Kim, Y.; Moon, T. Human Detection and Activity Classification Based on Micro-Doppler Signatures Using Deep Convolutional Neural Networks. IEEE Geosci. Remote Sens. Lett. 2016, 13, 8–12.
42. Kim, Y.; Toomajian, B. Hand Gesture Recognition Using Micro-Doppler Signatures with Convolutional Neural Network. IEEE Access 2016, 4, 7125–7130.
43. Kim, Y.; Toomajian, B. Application of Doppler radar for the recognition of hand gestures using optimized deep convolutional neural networks. In Proceedings of the European Conference on Antennas and Propagation, Paris, France, 19–24 March 2017; pp. 1258–1260.
44. Lang, Y.; Hou, C.; Yang, Y.; Huang, D.; He, Y. Convolutional neural network for human micro-Doppler classification. In Proceedings of the European Microwave Conference, Nuremberg, Germany, 8–13 October 2017.
45. Trommel, R.P.; Harmanny, R.I.A.; Cifola, L.; Driessen, J.N. Multi-target human gait classification using deep convolutional neural networks on micro-doppler spectrograms. In Proceedings of the European Radar Conference, London, UK, 5–7 October 2016; pp. 81–84.
46. Yang, Y.; Hou, C.; Lang, Y.; Guan, D.; Huang, D.; Xu, J. Open-set human activity recognition based on micro-Doppler signatures. Pattern Recognit. 2019, 85, 60–69.
47. Le, H.T.; Phung, S.L.; Bouzerdoum, A.; Tivive, F.H.C. Human Motion Classification with Micro-Doppler Radar and Bayesian-Optimized Convolutional Neural Networks. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Calgary, AB, Canada, 15–20 April 2018; pp. 2961–2965.
48. Shao, Y.; Dai, Y.; Yuan, L.; Chen, W. Deep Learning Methods for Personnel Recognition based on Micro-Doppler Features. In Proceedings of the 9th International Conference on Signal Processing Systems, AUT, Auckland, New Zealand, 27–30 November 2017; pp. 94–98.
49. Zhang, J.; Tao, J.; Shi, Z. Doppler-Radar Based Hand Gesture Recognition System Using Convolutional Neural Networks. In Proceedings of the IEEE International Conference in Communications, Signal Processing, and Systems, Harbin, China, 14–16 July 2017; pp. 1096–1113.
50. Wang, S.; Song, J.; Lien, J.; Poupyrev, I.; Hilliges, O. Interacting with Soli: Exploring Fine-Grained Dynamic Gesture Recognition in the Radio-Frequency Spectrum. In Proceedings of the ACM Symposium on User Interface Software and Technology, Tokyo, Japan, 16–19 October 2016; pp. 851–860.
51. Lien, J.; Gillian, N.; Karagozler, M.E.; Amihood, P.; Schwesig, C.; Olson, E.; Raja, H.; Poupyrev, I. Soli: Ubiquitous gesture sensing with millimeter wave radar. ACM Trans. Graph. 2016, 35, 142.
52. Seyfioğlu, M.S.; Özbayoğlu, A.M.; Gurbuz, S.Z. Deep Convolutional Autoencoder for Radar-Based Classification of Similar Aided and Unaided Human Activities. IEEE Trans. Aerosp. Electron. Syst. 2018, 54, 1709–1723.
53. Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.
54. Klarenbeek, G.; Harmanny, R.I.A.; Cifola, L. Multi-target human gait classification using LSTM recurrent neural networks applied to micro-Doppler. In Proceedings of the European Radar Conference, Nuremberg, Germany, 11–13 October 2017; pp. 167–170.
55. Erhan, D.; Bengio, Y.; Courville, A.; Manzagol, P.A.; Vincent, P.; Bengio, S. Why does unsupervised pre-training help deep learning? J. Mach. Learn. Res. 2010, 11, 625–660.
56. Jokanovic, B.; Amin, M.; Ahmad, F. Radar fall motion detection using deep learning. In Proceedings of the IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; pp. 1–6.
57. Jokanovic, B.; Amin, M.; Erol, B. Multiple joint-variable domains recognition of human motion. In Proceedings of the IEEE Radar Conference, Seattle, WA, USA, 8–12 May 2017; pp. 0948–0952.
58. Jokanović, B.; Amin, M. Fall detection using deep learning in range-Doppler radars. IEEE Trans. Aerosp. Electron. Syst. 2018, 54, 180–189.
59. Kang, M.; Ji, K.; Leng, X.; Xing, X.; Zou, H. Synthetic aperture radar target recognition with feature fusion based on a stacked autoencoder. Sensors 2017, 17, 192.
60. Li, C.; Peng, Z.; Huang, T.Y.; Fan, T.; Wang, F.K.; Horng, T.S.; Muñoz-Ferreras, J.M.; Gómez-García, R.; Ran, L.; Lin, J. A Review on Recent Progress of Portable Short-Range Noncontact Microwave Radar Systems. IEEE Trans. Microw. Theory Tech. 2017, 65, 1692–1706.
61. Peng, Z.; Li, C. Portable Microwave Radar Systems for Short-Range Localization and Life Tracking: A Review. Sensors 2019, 19, 1136.
62. Nanzer, J.A. A Review of Microwave Wireless Techniques for Human Presence Detection and Classification. IEEE Trans. Microw. Theory Tech. 2017, 65, 1780–1794.
63. Lukin, K.; Konovalov, V. Through wall detection and recognition of human beings using noise radar sensors. In Proceedings of the NATO RTO SET Symposium on Target Identification and Recognition Using RF Systems, Oslo, Norway, 11–13 October 2004; pp. 15-1–15-11.
64. Lai, C.P.; Ruan, Q.; Narayanan, R.M. Hilbert-Huang transform (HHT) analysis of human activities using through-wall noise radar. In Proceedings of the International Symposium on Signals, Systems and Electronics, Montreal, QC, Canada, 30 July–2 August 2007.
65. Narayanan, R.M. Through-wall radar imaging using UWB noise waveforms. J. Frankl. Inst. 2008, 345, 659–678.
66. Lai, C.P.; Narayanan, R.; Ruan, Q.; Davydov, A. Hilbert–Huang transform analysis of human activities using through-wall noise and noise-like radar. IET Radar Sonar Navig. 2008, 2, 244–255.
67. Lai, C.P.; Narayanan, R.M. Ultrawideband random noise radar design for through-wall surveillance. IEEE Trans. Aerosp. Electron. Syst. 2010, 46, 1716–1730.
68. Susek, W.; Stec, B. Through-the-wall detection of human activities using a noise radar with microwave quadrature correlator. IEEE Trans. Aerosp. Electron. Syst. 2015, 51, 759–764.
69. Wang, M.; Zhang, Y.D.; Cui, G. Human motion recognition exploiting radar with stacked recurrent neural network. Digit. Signal Process. 2019, 87, 125–131.
70. Mercuri, M.; Liu, Y.; Lorato, I.; Torfs, T.; Wieringa, F.P.; Bourdoux, A.; Van Hoof, C. A Direct Phase-Tracking Doppler Radar Using Wavelet Independent Component Analysis for Non-Contact Respiratory and Heart Rate Monitoring. IEEE Trans. Biomed. Circuits Syst. 2018, 12, 632–643.
71. Rahman, A.; Lubecke, V.; Boriclubecke, O.; Prins, J.; Sakamoto, T. Doppler Radar Techniques for Accurate Respiration Characterization and Subject Identification. IEEE J. Emerg. Sel. Top. Circuits Syst. 2018, 8, 350–359.
72. Kim, J.Y.; Park, J.H.; Jang, S.Y.; Yang, J.R. Peak Detection Algorithm for Vital Sign Detection Using Doppler Radar Sensors. Sensors 2019, 19, 1575.
73. Molchanov, P.; Gupta, S.; Kim, K.; Pulli, K. Short-range FMCW monopulse radar for hand-gesture sensing. In Proceedings of the IEEE Radar Conference, Arlington, VA, USA, 10–15 May 2015; pp. 1491–1496.
74. Molchanov, P.; Gupta, S.; Kim, K.; Pulli, K. Multi-sensor system for driver’s hand-gesture recognition. In Proceedings of the 11th IEEE International Conference and Workshops on Automatic Face and Gesture Recognition (FG), Ljubljana, Slovenia, 4–8 May 2015; Volume 1, pp. 1–8.
75. Peng, Z.; Li, C.; Muñoz-Ferreras, J.M.; Gómez-García, R. An FMCW radar sensor for human gesture recognition in the presence of multiple targets. In Proceedings of the 2017 First IEEE MTT-S International Microwave Bio Conference (IMBIOC), Gothenburg, Sweden, 15–17 May 2017; pp. 1–3.
76. Zhou, H.; Cao, P.; Chen, S. A novel waveform design for multi-target detection in automotive FMCW radar. In Proceedings of the IEEE Radar Conference (RadarConf), Philadelphia, PA, USA, 2–6 May 2016; pp. 1–5.
77. Kim, B.S.; Jin, Y.; Kim, S.; Lee, J. A Low-Complexity FMCW Surveillance Radar Algorithm Using Two Random Beat Signals. Sensors 2019, 19, 608.
78. Nanzer, J.A. Millimeter-Wave Interferometric Angular Velocity Detection. IEEE Trans. Microw. Theory Tech. 2010, 58, 4128–4136.
79. Hariharan, P.; Creath, K. Basics of Interferometry. Phys. Today 1993, 46, 75.
80. Peng, Z.; Muñoz-Ferreras, J.M.; Tang, Y.; Liu, C.; Gómez-García, R.; Ran, L.; Li, C. A portable FMCW interferometry radar with programmable low-IF architecture for localization, ISAR imaging, and vital sign tracking. IEEE Trans. Microw. Theory Tech. 2017, 65, 1334–1344.
81. Wang, G.; Gu, C.; Inoue, T.; Li, C. A Hybrid FMCW-Interferometry Radar for Indoor Precise Positioning and Versatile Life Activity Monitoring. IEEE Trans. Microw. Theory Tech. 2014, 62, 2812–2822.
82. Nanzer, J.A. Micro-motion signatures in radar angular velocity measurements. In Proceedings of the IEEE Radar Conference, Philadelphia, PA, USA, 2–6 May 2016; pp. 1–4.
83. Kim, Y.; Park, J.; Moon, T. Classification of micro-Doppler signatures of human aquatic activity through simulation and measurement using transferred learning. In Proceedings of the Radar Sensor Technology XXI. International Society for Optics and Photonics, Anaheim, CA, USA, 10–12 April 2017; Volume 10188, p. 101880V.
84. Du, H.; He, Y.; Jin, T. Transfer Learning for Human Activities Classification Using Micro-Doppler Spectrograms. In Proceedings of the IEEE International Conference on Computational Electromagnetics (ICCEM), Chengdu, China, 26–28 March 2018; pp. 1–3.
85. Lang, Y.; Wang, Q.; Yang, Y.; Hou, C.; Huang, D.; Xiang, W. Unsupervised Domain Adaptation for Micro-Doppler Human Motion Classification via Feature Fusion. IEEE Geosci. Remote Sens. Lett. 2018, 6, 392–396.
86. Yarovoy, A.; Ligthart, L.; Matuzas, J.; Levitas, B. UWB radar for human being detection. IEEE Aerosp. Electron. Syst. Mag. 2006, 21, 10–14.
87. Bryan, J.; Kim, Y. Classification of human activities on UWB radar using a support vector machine. In Proceedings of the IEEE Antennas and Propagation Society International Symposium, Toronto, ON, Canada, 11–17 July 2010; pp. 1–4.
88. Bryan, J.; Kwon, J.; Lee, N.; Kim, Y. Application of ultra-wide band radar for classification of human activities. IET Radar Sonar Navig. 2012, 6, 172–179.
89. Seyfioğlu, M.S.; Gürbüz, S.Z. Deep neural network initialization methods for micro-Doppler classification with low training sample support. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2462–2466.
90. Park, J.; Javier, R.J.; Moon, T.; Kim, Y. Micro-Doppler based classification of human aquatic activities via transfer learning of convolutional neural networks. Sensors 2016, 16, 1990.
91. Cao, P.; Xia, W.; Ye, M.; Zhang, J.; Zhou, J. Radar-ID: Human identification based on radar micro-Doppler signatures using deep convolutional neural networks. IET Radar Sonar Navig. 2018, 12, 729–734.
92. He, Y.; Le Chevalier, F.; Yarovoy, A.G. Range-Doppler processing for indoor human tracking by multistatic ultra-wideband radar. In Proceedings of the 13th International Radar Symposium (IRS), Warsaw, Poland, 23–25 May 2012; pp. 250–253.
93. Ji, S.; Xu, W.; Yang, M.; Yu, K. 3D convolutional neural networks for human action recognition. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 221–231.
94. Sang, Y.; Shi, L.; Liu, Y. Micro Hand Gesture Recognition System Using Ultrasonic Active Sensing. IEEE Access 2017, 6, 49339–49347.
95. Tahmoush, D. Review of micro-Doppler signatures. IET Radar Sonar Navig. 2015, 9, 1140–1146.
96. Chen, V.C.; Qian, S. Joint time-frequency transform for radar range-Doppler imaging. IEEE Trans. Aerosp. Electron. Syst. 1998, 34, 486–499.
97. He, Y.; Molchanov, P.; Sakamoto, T.; Aubry, P.; Chevalier, F.L.; Yarovoy, A. Range-Doppler surface: A tool to analyse human target in ultra-wideband radar. IET Radar Sonar Navig. 2015, 9, 1240–1250.
98. Erol, B.; Amin, M.; Zhou, Z.; Zhang, J. Range information for reducing fall false alarms in assisted living. In Proceedings of the IEEE Radar Conference, Philadelphia, PA, USA, 2–6 May 2016; pp. 1–6.
99. Erol, B.; Amin, M.G. Fall motion detection using combined range and Doppler features. In Proceedings of the 24th European Signal Processing Conference (EUSIPCO), Budapest, Hungary, 29 August–2 September 2016; pp. 2075–2080.
100. Wang, Y.; Fathy, A.E. UWB micro-doppler radar for human gait analysis using joint range-time-frequency representation. Proc. SPIE 2013, 8734, 873404.
101. Cammenga, Z.A.; Smith, G.E.; Baker, C.J. Combined high range resolution and micro-Doppler analysis of human gait. In Proceedings of the IEEE Radar Conference, Arlington, VA, USA, 10–15 May 2015; pp. 1038–1043.
102. Graves, A.; Mohamed, A.R.; Hinton, G. Speech recognition with deep recurrent neural networks. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Vancouver, BC, Canada, 26–31 May 2013; pp. 6645–6649.
103. Abdel-Hamid, O.; Mohamed, A.R.; Jiang, H.; Penn, G. Applying Convolutional Neural Networks concepts to hybrid NN-HMM model for speech recognition. In Proceedings of the IEEE International Conference on Acoustics, Speech and Signal Processing, Kyoto, Japan, 25–30 March 2012; pp. 4277–4280.
104. Vignaud, L.; Ghaleb, A.; Kernec, J.L.; Nicolas, J.M. Radar high resolution range & micro-Doppler analysis of human motions. In Proceedings of the International Radar Conference—Surveillance for a Safer World, Bordeaux, France, 12–16 October 2010; pp. 1–6.
105. Graves, A.; Fernández, S.; Gomez, F.; Schmidhuber, J. Connectionist temporal classification: Labelling unsegmented sequence data with recurrent neural networks. In Proceedings of the International Conference on Machine Learning, Pittsburgh, PA, USA, 25–29 June 2006; pp. 369–376.
106. Xing, T.; Sandha, S.S.; Balaji, B.; Chakraborty, S.; Srivastava, M. Enabling Edge Devices that Learn from Each Other: Cross Modal Training for Activity Recognition. In Proceedings of the 1st International Workshop on Edge Systems, Analytics and Networking, Munich, Germany, 10–15 June 2018; ACM: New York, NY, USA, 2018; pp. 37–42.

| Models | Descriptions and Advantages |
|---|---|
| CNN | capturing the spatial relationship by multiple convolutional layers, often utilized as an excellent localized feature extractor |
| RNN | exploring the temporal relationship in data, variants are often utilized, such as LSTM |
| Auto-encoder | a feed-forward neural network that learns deep features in an unsupervised fashion |
| Hybrid deep models | the combination of some deep models, built on each model’s own strength to obtain better performance |

| Radar System | Type | Descriptions and Advantages |
|---|---|---|
| CW radar | Doppler radar | sending out single-tone radio waves, able to acquire the Doppler/radial velocity information of targets |
| | FMCW radar | providing range and speed information of targets simultaneously, suitable for scenarios with multiple targets present |
| | Interferometry radar | obtaining the angular velocity of the target regardless of the target’s moving direction, with the outputs of two antennas cross-correlated |
| UWB radar | | providing fine range resolution, able to distinguish the major scattering centers of the target |
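The radar properties summarized above can be made concrete with two textbook relations: the two-way Doppler shift of a monostatic radar, f_d = 2·v·f_c/c, and the range resolution set by the signal bandwidth, ΔR = c/(2B). The Python sketch below is illustrative only; the function names, the 1 m/s velocity, and the 1 GHz bandwidth are assumptions chosen for the example, not values taken from the survey.

```python
C = 299_792_458.0  # speed of light in m/s


def doppler_shift_hz(radial_velocity_mps, carrier_hz):
    """Two-way Doppler shift of a monostatic radar: f_d = 2 * v * f_c / c."""
    return 2.0 * radial_velocity_mps * carrier_hz / C


def range_resolution_m(bandwidth_hz):
    """Range resolution from the signal bandwidth: dR = c / (2 * B)."""
    return C / (2.0 * bandwidth_hz)


# Hypothetical example values: a limb moving at 1 m/s toward a 24 GHz radar,
# and a waveform with 1 GHz of bandwidth.
shift = doppler_shift_hz(1.0, 24e9)
resolution = range_resolution_m(1e9)
print(f"Doppler shift: {shift:.1f} Hz, range resolution: {resolution:.3f} m")
```

Under these assumed values the Doppler shift is on the order of 160 Hz and the range resolution about 15 cm, which suggests why higher carrier frequencies help separate slow limb motions and why wide bandwidth is needed to resolve individual scattering centers.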

| Echo Form | Map Type | Literature | Radar Type | Central Frequency | Deep Model |
|---|---|---|---|---|---|
| 3D echoes | time–range–Doppler maps | [50,51] | FMCW radar | 60 GHz | CNN + LSTM |
| 2D echoes | time–Doppler maps | [89] | CW radar | 4 GHz | CNN |
| | | [45] | CW radar | 8 GHz | CNN |
| | | [48] | CW radar | 24 GHz | CNN |
| | | [54] | CW radar | 8 GHz | LSTM |
| | | [56] | CW radar | 6 GHz | SAE |
| | | [52] | CW radar | 4 GHz | CAE |
| | | [41] | Doppler radar | 2.4 GHz | CNN |
| | | [90] | Doppler radar | 7.3 GHz | CNN |
| | | [47] | Doppler radar | 24 GHz | CNN |
| | | [49] | Doppler radar | 5.8 GHz | CNN |
| | | [69] | Doppler radar | 25 GHz | LSTM |
| | | [20] | Doppler radar | 24 GHz | SAE |
| | | [42] | pulse Doppler radar | 5.8 GHz | CNN |
| | | [43] | pulse Doppler radar | 5.8 GHz | CNN |
| | | [84] | UWB radar | 4 GHz | CNN |
| | | [85] | UWB radar | 4.3 GHz | CNN |
| | | [83] | UWB radar | 7.3 GHz | CNN |
| | | [46] | UWB radar | 4 GHz | CNN |
| | | [44] | UWB radar | 4 GHz | CNN |
| | | [91] | FMCW radar | 24 GHz | CNN |
| | | [21] | FMCW radar | 5.8 GHz | CNN |
| | time–range maps | [22] | UWB radar | 3.9 GHz | CNN |
| | | [28] | FMCW radar | 24 GHz | 3D CNN + LSTM |
| | range–Doppler maps | [74] | FMCW radar | 24 GHz | 3D CNN |
| | time–Doppler maps and time–range maps | [58] | FMCW radar | 25 GHz | SAE |
| | time–Doppler maps, time–range maps and range–Doppler maps | [57] | FMCW radar | 24 GHz | SAE |
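Most entries in the table above operate on time–Doppler maps. As a rough illustration of how such a map can be formed, the following Python sketch applies a short-time Fourier transform to a complex baseband echo using NumPy only. It is a minimal sketch under stated assumptions, not the processing chain of any cited work: the function name `time_doppler_map`, the window length, the hop size, the sampling rate, and the synthetic 160 Hz tone standing in for a target echo are all hypothetical.

```python
import numpy as np


def time_doppler_map(iq, fs, win_len=128, hop=32):
    """Short-time Fourier transform of a complex echo.

    Returns (freqs_hz, times_s, magnitude_db), where magnitude_db has shape
    (win_len, n_frames): Doppler frequency along rows, time along columns.
    """
    window = np.hanning(win_len)
    n_frames = 1 + (len(iq) - win_len) // hop
    # Slice the echo into overlapping, windowed frames.
    frames = np.stack(
        [iq[i * hop : i * hop + win_len] * window for i in range(n_frames)]
    )
    # FFT each frame and centre zero Doppler in the middle of the axis.
    spec = np.fft.fftshift(np.fft.fft(frames, axis=1), axes=1)
    freqs = np.fft.fftshift(np.fft.fftfreq(win_len, d=1.0 / fs))
    times = (np.arange(n_frames) * hop + win_len / 2) / fs
    magnitude_db = 20.0 * np.log10(np.abs(spec).T + 1e-12)
    return freqs, times, magnitude_db


# Synthetic stand-in for a radar echo: a constant 160 Hz Doppler tone.
fs = 1000.0                      # assumed sampling rate in Hz
t = np.arange(2000) / fs         # 2 s of samples
iq = np.exp(2j * np.pi * 160.0 * t)

freqs, times, mag_db = time_doppler_map(iq, fs)
peak_hz = freqs[np.argmax(mag_db.mean(axis=1))]
print(mag_db.shape, peak_hz)
```

The resulting two-dimensional array can be treated like an image, which is what makes it a natural input for the CNN-based approaches listed in the table; sequence models such as LSTM would instead consume it column by column along the time axis.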

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Li, X.; He, Y.; Jing, X. A Survey of Deep Learning-Based Human Activity Recognition in Radar. Remote Sens. 2019, 11, 1068. https://doi.org/10.3390/rs11091068