Dual Learning-Based Siamese Framework for Change Detection Using Bi-Temporal VHR Optical Remote Sensing Images

Abstract

1. Introduction

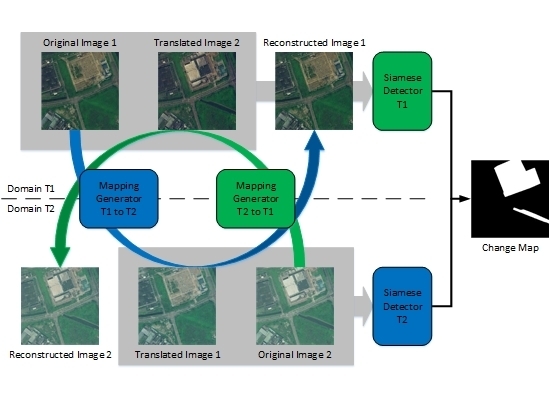

- We propose a novel hybrid end-to-end framework that integrates dual learning and a Siamese network to achieve supervised change detection directly from bi-temporal VHR optical remote sensing images, without any pre- or post-processing.

- To the best of our knowledge, this is the first application of dual learning to change detection, where it is used to achieve cross-domain translation between bi-temporal images.

- The CDLF, with its two conditional discriminators, is designed to ensure complete translation of paired images from the source domain to the target domain, specifically in the unchanged regions.

- We adopt a weight-sharing strategy for the discriminators and the detectors to improve training speed and efficiency.

- We design a new loss function comprising adversarial, cross-consistency, self-consistency, and contrastive losses, which acts as the decision maker for training the dual learning-based Siamese framework (DLSF) for change detection.
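The contrastive term listed above is commonly written in the form of Hadsell et al. [50]: for two features at Euclidean distance d, the loss is 0.5 * (1 - y) * d^2 + 0.5 * y * max(0, m - d)^2, where y = 1 marks a dissimilar (here, changed) pair and m is a margin. The pure-Python sketch below applies that standard form pixel-wise to the outputs of two weight-shared Siamese branches. It is an illustration under assumptions, not the DLSF implementation: the function names, the input layout (one feature vector per pixel), and the margin m = 2.0 are all hypothetical.

```python
import math
from typing import List, Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(feats_t1: List[Sequence[float]],
                     feats_t2: List[Sequence[float]],
                     labels: List[int],
                     margin: float = 2.0) -> float:
    """Mean pixel-wise contrastive loss in the Hadsell et al. form.

    feats_t1, feats_t2: per-pixel feature vectors from the two
    weight-shared Siamese branches (hypothetical input layout).
    labels: 1 = changed pixel, 0 = unchanged pixel.
    margin: hypothetical value, not taken from the paper.
    """
    total = 0.0
    for f1, f2, y in zip(feats_t1, feats_t2, labels):
        d = euclidean(f1, f2)
        if y == 0:
            # Unchanged: penalize any distance, pulling the features together.
            total += 0.5 * d ** 2
        else:
            # Changed: penalize only distances smaller than the margin.
            total += 0.5 * max(0.0, margin - d) ** 2
    return total / len(labels)


t1 = [[0.0, 0.0], [1.0, 1.0]]
t2 = [[0.0, 1.0], [4.0, 5.0]]
print(contrastive_loss(t1, t2, [0, 1]))  # 0.25
```

In the example, the unchanged pixel (distance 1) contributes 0.5, while the changed pixel is already farther apart than the margin (distance 5) and contributes nothing, so the mean is 0.25. The margin is what keeps changed pairs from being pushed apart indefinitely.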

2. Background

2.1. Dual Learning Framework

2.2. Fully Convolutional Siamese Network

3. Methodology

3.1. Problem Formulation

3.2. Framework Architecture

3.2.1. Domain Transfer Stream

3.2.2. Change Decision Stream

3.3. Loss Function

3.3.1. Adversarial Loss

3.3.2. Cross-Consistency Loss

3.3.3. Self-Consistency Loss

3.3.4. Contrastive Loss

3.4. Implementation

3.4.1. Network Architecture

3.4.2. Training Procedure

3.4.3. Predicting Detail

4. Experiments

4.1. Datasets Description

4.2. Methods Comparison

- CVA [19]: Derived from the simple image-difference algorithm, CVA is a classic method for unsupervised change detection in remote sensing. It uses the magnitude of the difference (change) vector at each pixel to achieve pixel-level change detection. In our comparison, we determine the change threshold by applying K-means clustering to the pixel-level change vectors.

- SVM [28]: One of the most typical machine learning methods, SVM performs generalized linear classification by finding a decision boundary in a high-dimensional feature space. It has been used for both supervised and unsupervised change detection. In our comparison, we use a Gaussian radial basis function (RBF) kernel and select the SVM hyper-parameters by three-fold cross-validation.

- CNN [34]: Convolutional neural networks are used not only for image classification but also for extracting effective, meaningful features. With a purpose-designed network architecture and loss function, the features extracted by the CNN guide supervised change detection.

- GAN [35]: Building on a basic CNN, GAN adds a discriminator for adversarial learning. In many image processing tasks, GAN generalizes better than CNN and achieves nearly the same performance with fewer training samples.

- DSCN [38]: Derived from the Siamese network, DSCN extracts robust features from the two paired images with a single shared CNN that has no down- or up-sampling layers. Once trained to convergence with a contrastive loss, the model detects changed regions by computing the pairwise Euclidean distance between the features of the paired images.

- SCCN [39]: An extension of the Siamese network, SCCN is designed specifically for supervised change detection on heterogeneous remote sensing images. It maps the two input images into the same feature space with a deep neural network composed of one convolutional layer and several coupling layers, and then detects changed regions by computing the distance between the paired images in that feature space.
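A minimal sketch of the CVA baseline as described above: compute the change-vector magnitude at each pixel, split the magnitudes into two groups with one-dimensional K-means (K = 2), and take the midpoint between the two cluster centers as the change threshold. The helper names, the initialization of the centers at the extreme values, and the midpoint rule are assumptions for illustration, not details of the experimental setup.

```python
import math


def change_magnitudes(img_t1, img_t2):
    """Magnitude of the spectral change vector at each pixel.

    img_t1, img_t2: lists of per-pixel band vectors (same pixel order, same bands).
    """
    return [math.sqrt(sum((b2 - b1) ** 2 for b1, b2 in zip(p1, p2)))
            for p1, p2 in zip(img_t1, img_t2)]


def kmeans_threshold(values, iterations=50):
    """1-D K-means with K = 2; returns the midpoint between the two centers."""
    lo, hi = min(values), max(values)  # assumption: initialize at the extremes
    for _ in range(iterations):
        mid = (lo + hi) / 2.0
        # In 1-D, assigning each value to its nearest center is a split at the midpoint.
        low_group = [v for v in values if v <= mid]
        high_group = [v for v in values if v > mid]
        if not low_group or not high_group:
            break  # all magnitudes identical: no split possible
        new_lo = sum(low_group) / len(low_group)
        new_hi = sum(high_group) / len(high_group)
        if new_lo == lo and new_hi == hi:
            break  # converged
        lo, hi = new_lo, new_hi
    return (lo + hi) / 2.0


def cva_change_map(img_t1, img_t2):
    """Binary change map: 1 where the magnitude exceeds the K-means threshold."""
    mags = change_magnitudes(img_t1, img_t2)
    t = kmeans_threshold(mags)
    return [1 if m > t else 0 for m in mags]


before = [[10, 10], [12, 11], [50, 60], [11, 10]]
after = [[11, 10], [12, 12], [10, 20], [10, 10]]
print(cva_change_map(before, after))  # [0, 0, 1, 0]
```

Three pixels change by only one digital number in one band (magnitude 1), while the third pixel changes by 40 in both bands, so the two clusters separate cleanly and only that pixel is flagged. Because the threshold is derived from the data itself, no labels are needed, which is what makes CVA unsupervised.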

4.3. Evaluation Metrics

4.4. Experimental Setup

4.5. Results Presentation

5. Discussion

5.1. Effect of Model Architectures

5.1.1. Mapping Generator

5.1.2. Conditional Discriminator

5.1.3. Siamese Detector

5.2. Effect of Loss Functions

6. Conclusions

Author Contributions

Conflicts of Interest

Appendix

References

1. Singh, A. Review article digital change detection techniques using remotely-sensed data. Int. J. Remote Sens. 1989, 10, 989–1003.

2. Lu, D.; Mausel, P.; Brondizio, E.; Moran, E. Change detection techniques. Int. J. Remote Sens. 2004, 25, 2365–2401.

3. Radke, R.J.; Andra, S.; Al-Kofahi, O.; Roysam, B. Image change detection algorithms: A systematic survey. IEEE Trans. Image Process. 2005, 14, 294–307.

4. Demir, B.; Bovolo, F.; Bruzzone, L. Updating land-cover maps by classification of image time series: A novel change-detection-driven transfer learning approach. IEEE Trans. Geosci. Remote Sens. 2013, 51, 300–312.

5. Jin, S.; Yang, L.; Danielson, P.; Homer, C.; Fry, J.; Xian, G. A comprehensive change detection method for updating the national land cover database to circa 2011. Remote Sens. Environ. 2014, 29, 78–92.

6. Kennedy, R.E.; Townsend, P.A.; Gross, J.E.; Cohen, W.B.; Bolstad, P.; Wang, Y.Q.; Adams, P.; Gross, J.E.; Goetz, S.J.; Cihlar, J. Remote sensing change detection tools for natural resource managers: Understanding concepts and tradeoffs in the design of landscape monitoring projects. Remote Sens. Environ. 2009, 113, 1382–1396.

7. Rokni, K.; Ahmad, A.; Selamat, A.; Hazini, S. Water feature extraction and change detection using multitemporal Landsat imagery. Remote Sens. 2016, 6, 4173–4189.

8. Awad, M. Sea water chlorophyll a estimation using hyperspectral images and supervised artificial neural network. Ecol. Inform. 2014, 24, 60–68.

9. Singh, D.; Chamundeeswari, V.V.; Singh, K.; Wiesbeck, W. Monitoring and Change Detection of Natural Disaster (like Subsidence) Using Synthetic Aperture Radar (SAR) Data. In Proceedings of the International Conference on Recent Advances in Microwave Theory and Applications, Jaipur, India, 21–24 November 2008; pp. 419–421.

10. Hu, T.; Huang, X.; Li, J.; Zhang, L. A novel co-training approach for urban land cover mapping with unclear Landsat time series imagery. Remote Sens. Environ. 2018, 217, 144–157.

11. Ridd, M.K.; Liu, J. A comparison of four algorithms for change detection in an urban environment. Remote Sens. Environ. 1998, 63, 95–100.

12. Malmir, M.; Zarkesh, M.M.K.; Monavari, S.M.; Jozi, S.A.; Sharifi, E. Urban development change detection based on multi-temporal satellite images as a fast tracking approach: A case study of Ahwaz county, southwestern Iran. Environ. Monit. Assess. 2015, 187, 4295.

13. Bruzzone, L.; Bovolo, F. A novel framework for design of change-detection systems for very-high-resolution remote sensing images. Proc. IEEE 2013, 101, 609–630.

14. Bovolo, F.; Bruzzone, L. A detail-preserving scale-driven approach to change detection in multitemporal SAR images. IEEE Trans. Geosci. Remote Sens. 2005, 43, 2963–2972.

15. Inglada, J.; Mercier, G. A new statistical similarity measure for change detection in multitemporal SAR images and its extension to multiscale change analysis. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1432–1445.

16. Kittler, J.; Illingworth, J. Minimum error thresholding. Pattern Recognit. 1986, 19, 41–47.

17. Yang, W.; Yang, X.; Yan, T.; Song, H.; Xia, G.S. Region-based change detection for polarimetric SAR images using Wishart mixture models. IEEE Trans. Geosci. Remote Sens. 2016, 54, 6746–6756.

18. Wu, C.; Du, B.; Zhang, L. Slow feature analysis for change detection in multispectral imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 2858–2874.

19. Malila, W.A. Change Vector Analysis: An Approach for Detecting Forest Changes with Landsat. LARS Symposia 1980, 385.

20. Bovolo, F.; Bruzzone, L. A theoretical framework for unsupervised change detection based on change vector analysis in the polar domain. IEEE Trans. Geosci. Remote Sens. 2007, 45, 218–236.

21. Liu, S.; Bruzzone, L.; Bovolo, F.; Du, P. A Novel Sequential Spectral Change Vector Analysis for Representing and Detecting Multiple Changes in Hyperspectral Images. In Proceedings of the IEEE Geoscience and Remote Sensing Symposium, Quebec City, QC, Canada, 13–18 July 2014; pp. 4656–4659.

22. Nielsen, A.A.; Conradsen, K.; Simpson, J.J. Multivariate alteration detection (MAD) and MAF postprocessing in multispectral, bitemporal image data: New approaches to change detection studies. Remote Sens. Environ. 1998, 64, 1–19.

23. Nielsen, A.A. The regularized iteratively reweighted MAD method for change detection in multi- and hyperspectral data. IEEE Trans. Image Process. 2007, 16, 463–478.

24. Feng, W.; Sui, H.; Tu, J.; Huang, W.; Sun, K. A novel change detection approach based on visual saliency and random forest from multi-temporal high-resolution remote-sensing images. Int. J. Remote Sens. 2018, 39, 7998–8021.

25. Bueno, I.T.; Junior, F.W.A.; Silveira, E.M.O.; Mello, J.M.; Carvalho, L.M.T.; Gomide, L.R.; Withey, K.; Scolforo, J.R.S. Object-based change detection in the Cerrado biome using Landsat time series. Remote Sens. 2019, 11, 570.

26. Benedek, C.; Sziranyi, T. Change detection in optical aerial images by a multilayer conditional mixed Markov model. IEEE Trans. Geosci. Remote Sens. 2009, 47, 3416–3430.

27. Moser, G.; Angiati, E.; Serpico, S.B. Multiscale unsupervised change detection by Markov random fields and wavelet transforms. IEEE Geosci. Remote Sens. Lett. 2011, 8, 725–729.

28. Volpi, M.; Tuia, D.; Bovolo, F.; Kanevski, M.; Bruzzone, L. Supervised change detection in VHR images using contextual information and support vector machines. Int. J. Appl. Earth Obs. Geoinform. 2013, 20, 70–85.

29. Deng, J.; Wang, K.; Deng, Y.; Qi, G. PCA-based land-use change detection and analysis using multitemporal and multisensor satellite data. Int. J. Remote Sens. 2008, 29, 4823–4838.

30. Wang, X.; Liu, S.; Du, P.; Liang, H.; Xia, J.; Li, Y. Object-based change detection in urban areas from high spatial resolution images based on multiple features and ensemble learning. Remote Sens. 2018, 10, 276.

31. Tan, K.; Zhang, Y.; Wang, X.; Chen, Y. Object-based change detection using multiple classifiers and multi-scale uncertainty analysis. Remote Sens. 2019, 11, 359.

32. Lyu, H.; Lu, H.; Mou, L. Learning a transferable change rule from a recurrent neural network for land cover change detection. Remote Sens. 2016, 8, 506.

33. Gong, M.; Zhao, J.; Liu, J.; Miao, Q.; Jiao, L. Change detection in synthetic aperture radar images based on deep belief networks. IEEE Trans. Neural Netw. Learn. Syst. 2016, 27, 125–138.

34. Wang, Q.; Yuan, Z.; Du, Q.; Li, X. GETNET: A general end-to-end 2-D CNN framework for hyperspectral image change detection. IEEE Trans. Geosci. Remote Sens. 2019, 57, 3–13.

35. Gong, M.; Niu, X.; Zhang, P.; Li, Z. Generative adversarial networks for change detection in multispectral imagery. IEEE Geosci. Remote Sens. Lett. 2017, 14, 2310–2314.

36. Gong, M.; Yang, Y.; Zhan, T.; Niu, X.; Li, S. A generative discriminatory classified network for change detection in multispectral imagery. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2019, 12, 321–333.

37. Niu, X.; Gong, M.; Zhan, T.; Yang, Y. A conditional adversarial network for change detection in heterogeneous images. IEEE Geosci. Remote Sens. Lett. 2019, 16, 45–49.

38. Zhan, Y.; Fu, K.; Yan, M.; Sun, X.; Wang, H.; Qiu, X. Change detection based on deep Siamese convolutional network for optical aerial images. IEEE Geosci. Remote Sens. Lett. 2017, 14, 1845–1849.

39. Liu, J.; Gong, M.; Qin, K.; Zhang, P. A deep convolutional coupling network for change detection based on heterogeneous optical and radar images. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 545–559.

40. Yu, L.; Xie, J.; Chen, S.; Zhu, L. Generating labeled samples for hyperspectral image classification using correlation of spectral bands. Front. Comput. Sci. 2016, 10, 292–301.

41. Xia, Y.; He, D.; Qin, T.; Wang, L.; Yu, N.; Liu, T.; Ma, W. Dual Learning for Machine Translation. arXiv 2016, arXiv:1611.00179.

42. Yi, Z.; Zhang, H.; Tan, P.; Gong, M. DualGAN: Unsupervised Dual Learning for Image-to-Image Translation. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2849–2857.

43. Kim, T.; Cha, M.; Kim, H.; Lee, J.; Kim, J. Learning to Discover Cross-Domain Relations with Generative Adversarial Networks. In Proceedings of the 34th International Conference on Machine Learning (ICML), Sydney, Australia, 6–11 August 2017; pp. 1857–1865.

44. Zhu, J.; Park, T.; Isola, P.; Efros, A. Unpaired Image-to-Image Translation Using Cycle-Consistent Adversarial Networks. In Proceedings of the IEEE International Conference on Computer Vision (ICCV), Venice, Italy, 22–29 October 2017; pp. 2223–2232.

45. Bertinetto, L.; Valmadre, J.; Henriques, J.; Vedaldi, A.; Torr, P. Fully-Convolutional Siamese Networks for Object Tracking. In Proceedings of the European Conference on Computer Vision (ECCV), Amsterdam, The Netherlands, 8–16 October 2016; pp. 850–865.

46. Daudt, R.C.; Saux, B.L.; Boulch, A.; Gousseau, Y. Urban Change Detection for Multispectral Earth Observation Using Convolutional Neural Networks. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Valencia, Spain, 22–27 July 2018; pp. 22–27.

47. Daudt, R.C.; Saux, B.L.; Boulch, A. Fully Convolutional Siamese Networks for Change Detection. arXiv 2018, arXiv:1810.08462.

48. Goodfellow, I.J.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative Adversarial Nets. In Proceedings of the International Conference on Neural Information Processing Systems (NIPS), Montreal, QC, Canada, 8–13 December 2014.

49. Long, J.; Shelhamer, E.; Darrell, T. Fully Convolutional Networks for Semantic Segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 640–651.

50. Hadsell, R.; Chopra, S.; LeCun, Y. Dimensionality Reduction by Learning an Invariant Mapping. In Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR), New York, NY, USA, 17–22 June 2006; 100.

51. Isola, P.; Zhu, J.; Zhou, T.; Efros, A.A. Image-to-Image Translation with Conditional Adversarial Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; p. 632.

52. Kingma, D.P.; Ba, J.L. Adam: A Method for Stochastic Optimization. In Proceedings of the International Conference on Learning Representations, San Diego, CA, USA, 7–9 May 2015; pp. 1–13.

53. Benedek, C.; Sziranyi, T. A mixed Markov model for change detection in aerial photos with large time differences. Int. Conf. Pattern Recognit. 2008, 12, 8–11.

| Category | Feature Extraction | Decision Making |
|---|---|---|
| Conventional | Image differences [14]; ratios [15]; image transformation; pixel vectors | Otsu [16]; KL [17]; SFA [18]; CVA [19,20,21] |
| Machine learning based | MAD [22]; IRMAD [23]; image saliency; wavelet transform; contexts; PCA; segments | CCA; EM; RF [24,25]; MRF [26,27]; SVM [28]; multiple classification [29,30,31] |
| Deep learning based | LSTM [32]; CNN [34]; GAN [35,36,37]; DSCN [38]; SCCN [39] | Regression; softmax loss; contrastive loss |

| Step | Description |
|---|---|
| Inputs | Paired images in domain …; paired images in domain …; corresponding binary change-map references |
| Forwards | |
| Backwards | Update … and … with … and …; update … and … with … and …; update … and … with … |
| Outputs | Well-trained mapping generators … and …; well-trained Siamese detectors … and … |

| Dataset | Metric | CVA | SVM | CNN | GAN | DSCN | SCCN | Ours |
|---|---|---|---|---|---|---|---|---|
| SZTAKI | OA | 0.6223 | 0.7569 | 0.8239 | 0.7914 | 0.8127 | 0.8396 | 0.8672 |
| SZTAKI | KC | 0.2705 | 0.4617 | 0.6501 | 0.6568 | 0.7516 | 0.7751 | 0.7905 |
| SZTAKI | F1 | 0.4406 | 0.5830 | 0.6942 | 0.6935 | 0.7692 | 0.7970 | 0.8061 |
| Shenzhen | OA | 0.5109 | 0.7982 | 0.8350 | 0.8407 | 0.8263 | 0.8537 | 0.8986 |
| Shenzhen | KC | 0.2367 | 0.4855 | 0.6743 | 0.7356 | 0.7250 | 0.7541 | 0.7712 |
| Shenzhen | F1 | 0.4054 | 0.5901 | 0.7214 | 0.7591 | 0.7394 | 0.7805 | 0.8149 |
| | Model size (MB) | - | - | 102.564 | 57.189 | 21.042 | 43.898 | 28.630 |
| | Rate (s/patch) | 0.17 | 1.14 | 0.70 | 0.56 | 0.19 | 0.21 | 0.25 |
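The table reports overall accuracy (OA), the kappa coefficient (KC), and the F1 score. Assuming these follow their standard definitions for a binary change map, with "changed" as the positive class, they can be computed from the confusion-matrix counts as in the pure-Python sketch below; `binary_metrics` is a hypothetical helper name, and the flat-list input format is an assumption.

```python
def binary_metrics(pred, ref):
    """OA, Cohen's kappa (KC) and F1 for binary change maps (1 = changed).

    pred, ref: flat lists of 0/1 labels for the same pixels.
    """
    tp = sum(1 for p, r in zip(pred, ref) if p == 1 and r == 1)
    tn = sum(1 for p, r in zip(pred, ref) if p == 0 and r == 0)
    fp = sum(1 for p, r in zip(pred, ref) if p == 1 and r == 0)
    fn = sum(1 for p, r in zip(pred, ref) if p == 0 and r == 1)
    n = tp + tn + fp + fn

    # Overall accuracy: fraction of correctly labeled pixels.
    oa = (tp + tn) / n

    # Chance agreement from the marginal totals of prediction and reference.
    pe = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / (n * n)
    # Kappa is undefined when pe == 1; return 0.0 in that case (assumption).
    kc = (oa - pe) / (1 - pe) if pe < 1 else 0.0

    # F1: harmonic mean of precision and recall on the "changed" class.
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return oa, kc, f1


pred = [1, 1, 0, 0, 1, 0, 0, 0]
ref = [1, 0, 0, 0, 1, 1, 0, 0]
print(binary_metrics(pred, ref))
```

In this example, 6 of 8 pixels agree, so OA = 0.75, but the chance agreement is 34/64, which lowers KC to 7/15 (about 0.47). This gap is why KC and F1 are reported alongside OA: when unchanged pixels dominate a scene, OA alone can look high even when the changed class is detected poorly.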

| Loss terms | SZTAKI OA (training) | SZTAKI OA (testing) | Shenzhen OA (training) | Shenzhen OA (testing) |
|---|---|---|---|---|
| con | 0.9536 | 0.8127 | 0.9650 | 0.8263 |
| GAN+con | 0.9815 | 0.8256 | 0.9903 | 0.8421 |
| GAN+self+con | 0.9673 | 0.8381 | 0.9724 | 0.8517 |
| GAN+cross+con | 0.9208 | 0.8605 | 0.9409 | 0.8850 |
| GAN+cross+self+con | 0.9140 | 0.8672 | 0.9377 | 0.8986 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fang, B.; Pan, L.; Kou, R. Dual Learning-Based Siamese Framework for Change Detection Using Bi-Temporal VHR Optical Remote Sensing Images. Remote Sens. 2019, 11, 1292. https://doi.org/10.3390/rs11111292
