A Hybrid Vehicle Detection Method Based on Viola-Jones and HOG + SVM from UAV Images

Abstract

1. Introduction

- (1)

- Both V-J and HOG + SVM are sensitive to in-plane rotation of objects, so they can detect vehicles only when the vehicle orientations are known and horizontal. Because vehicle orientations in UAV images are usually unknown and often changing, the detection accuracy (i.e., effectiveness) of both methods drops significantly. As shown in Figure 1a, when the original V-J method is applied to a UAV image with non-horizontal roadways, many vehicles are missed. Several methods have been proposed to address this issue (e.g., Jones and Viola [18], Cao et al. [14], Leitloff et al. [15], Moranduzzo and Melgani [19,20], and Liu and Mattyus [21]), but most of them are either time-consuming or require extra resources, which limits their applicability.

- (2)

- The efficiency (i.e., detection speed) of both V-J and HOG + SVM degrades as the detection load (i.e., the number of vehicles to be detected in a frame) increases. Our tests, summarized in Figure 1b, show that the detection speeds of both methods decrease monotonically with the number of detected vehicles, but at different rates. The V-J method slows down faster overall, yet it is much faster than HOG + SVM when the number of detected vehicles is relatively small. By contrast, HOG + SVM is slower than V-J when few vehicles are present but performs much better when the number of detected vehicles is large. These complementary characteristics suggest that overall efficiency could be improved by switching between the two methods according to the number of vehicles to be detected, as indicated by the black line in Figure 1b.

- (1)

- To address the sensitivity of both V-J and HOG + SVM to the in-plane rotation of on-road vehicles, we adopt a roadway orientation adjustment method. The basic idea is to first measure the orientation of the road and then rotate the image according to the detected orientation, so that the road and the on-road vehicles become horizontal and the original V-J or HOG + SVM method can be applied for fast and accurate detection. More importantly, unlike some existing solutions to the problem of unknown road orientation (e.g., [14,15,18]), the proposed method needs no extra resources and rotates the image only once, which significantly reduces computation time and the false detection rate.

- (2)

- To address the decline in detection speed of both V-J and HOG + SVM and achieve better efficiency, we integrate the two methods on the basis of their different speed-versus-load trends and propose a hybrid, adaptive switching strategy. The strategy searches for an improved, if not optimal, solution by switching between the V-J and HOG + SVM detectors according to how their detection speeds change during detection. Combined with the road orientation adjustment method, this switching strategy significantly improves both the efficiency and the effectiveness of vehicle detection from UAV images.

2. Background

2.1. Viola-Jones Object Detection Scheme

2.2. Linear SVM Classifier with HOG Feature

- Step 1:

- Gradient computation. Step 1 computes the gradient magnitude and orientation at every pixel of the image by applying the 1-D centered discrete derivative mask with the filter kernel [−1, 0, 1] in one or both of the horizontal and vertical directions.

- Step 2:

- Orientation binning. Step 2 creates the cell histograms. The image is divided into cells, and a 1-D histogram is generated for each cell by binning the local gradients according to their orientation. Each pixel within a cell casts a weighted vote for an orientation-based histogram channel, with the weight based on the values found in the gradient computation.

- Step 3:

- Descriptor blocks. Step 3 groups cells together into larger, spatially connected blocks.

- Step 4:

- Block normalization. Step 4 normalizes the blocks to account for changes in illumination and contrast. Because blocks overlap and each block consists of a group of cells, a cell can take part in several block normalizations. The HOG descriptor is the feature vector obtained by concatenating the normalized cell histograms from all block regions.

- Step 5:

- SVM classifier. The final step is to feed the descriptors into the SVM classifier. SVM is a binary classifier which looks for an optimal hyperplane as a decision function.
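The five steps above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the implementation used in the paper: the cell size (8 pixels), block size (2 × 2 cells), number of bins (9), unsigned orientations, and L2 block normalization are common defaults assumed here, and `hog_descriptor` and `linear_svm_score` are hypothetical names. The weight vector `w` and bias `b` would come from a trained linear SVM.

```python
import numpy as np


def hog_descriptor(image, cell=8, block=2, bins=9, eps=1e-6):
    """Minimal HOG sketch; all parameter defaults are assumptions."""
    img = np.asarray(image, dtype=np.float64)

    # Step 1: gradients with the centered [-1, 0, 1] mask (borders left at 0).
    gx = np.zeros_like(img)
    gy = np.zeros_like(img)
    gx[:, 1:-1] = img[:, 2:] - img[:, :-2]
    gy[1:-1, :] = img[2:, :] - img[:-2, :]
    magnitude = np.hypot(gx, gy)
    angle = np.degrees(np.arctan2(gy, gx)) % 180.0  # unsigned orientation

    # Step 2: per-cell orientation histograms, votes weighted by magnitude.
    n_cy, n_cx = img.shape[0] // cell, img.shape[1] // cell
    bin_index = np.minimum((angle / (180.0 / bins)).astype(int), bins - 1)
    hist = np.zeros((n_cy, n_cx, bins))
    for cy in range(n_cy):
        for cx in range(n_cx):
            rows = slice(cy * cell, (cy + 1) * cell)
            cols = slice(cx * cell, (cx + 1) * cell)
            hist[cy, cx] = np.bincount(
                bin_index[rows, cols].ravel(),
                weights=magnitude[rows, cols].ravel(),
                minlength=bins,
            )

    # Steps 3 and 4: overlapping blocks of cells, each L2-normalized.
    features = []
    for by in range(n_cy - block + 1):
        for bx in range(n_cx - block + 1):
            v = hist[by:by + block, bx:bx + block].ravel()
            features.append(v / np.sqrt(np.sum(v ** 2) + eps ** 2))
    return np.concatenate(features)


def linear_svm_score(w, b, descriptor):
    """Step 5: signed distance-like score; positive means 'vehicle'."""
    return float(np.dot(w, descriptor) + b)
```

For a 32 × 64 window with these defaults, the descriptor has 3 × 7 blocks of 36 values each, i.e., 756 entries.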

2.3. Limitations

3. Methodology

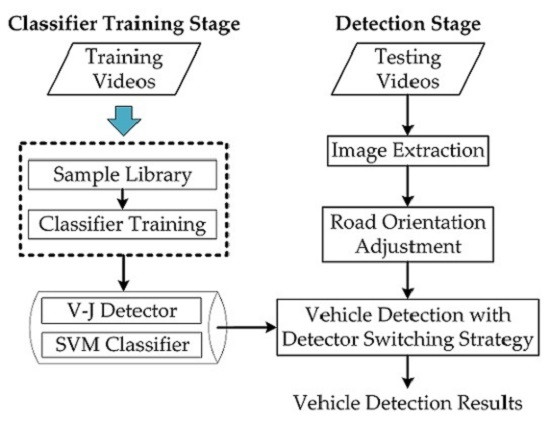

3.1. Overall Framework

- (1)

- A roadway orientation adjustment method. The proposed detection scheme adopts a roadway orientation adjustment method to address the roadway rotation issue. The idea is straightforward: first, measure the orientation of the road using the line segment detector (LSD) [27]; second, rotate the image according to the detected orientation so that the road and on-road vehicles become horizontal; and third, apply the original V-J or HOG + SVM method to achieve fast and accurate detection. A highlight of this approach is that it rotates the image only once and needs no extra resources. Therefore, it significantly reduces computation time and the false detection rate.

- (2)

- A detector switching strategy. The proposed scheme further develops an adaptive switching strategy that integrates the V-J and HOG + SVM methods on the basis of their different speed-versus-load trends in order to improve efficiency. Basically, this strategy “intelligently” switches between V-J and HOG + SVM, choosing whichever currently detects faster. As shown in Figure 1b, the detection speed is essentially determined by the workload, i.e., the number of vehicles to be detected, and V-J and HOG + SVM show different speed characteristics. The proposed switching method therefore measures the detection speeds of both methods periodically and chooses the faster one.
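The switching idea described above can be sketched as follows. This is a minimal illustration under assumptions, not the authors' algorithm: the detectors are treated as opaque callables, the probe simply runs every detector on one frame per interval and times it, and the default interval of 24 frames is borrowed from the smallest setting in the switching-interval table. The names `detect_with_switching`, `detectors`, `interval`, and `clock` are hypothetical.

```python
import time
from typing import Callable, Dict, List, Sequence, Tuple

Box = Tuple[int, int, int, int]            # (x, y, width, height)
Detector = Callable[[object], List[Box]]   # frame -> detected boxes


def detect_with_switching(
    frames: Sequence[object],
    detectors: Dict[str, Detector],
    interval: int = 24,
    clock: Callable[[], float] = time.perf_counter,
) -> List[Tuple[str, List[Box]]]:
    """Return (detector name, boxes) per frame, re-probing every `interval` frames."""
    results = []
    active = None
    for i, frame in enumerate(frames):
        if i % interval == 0:
            # Probe frame: run every detector once and time each run.
            elapsed, outputs = {}, {}
            for name, detect in detectors.items():
                start = clock()
                outputs[name] = detect(frame)
                elapsed[name] = clock() - start
            # Keep the detector that was fastest on the probe frame.
            active = min(elapsed, key=elapsed.get)
            results.append((active, outputs[active]))
        else:
            # Between probes, only the currently selected detector runs.
            results.append((active, detectors[active](frame)))
    return results
```

In this sketch each probe frame costs one extra detector run, so a longer interval lowers the probing overhead but reacts more slowly to changes in traffic load.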

3.2. Road Orientation Adjustment Method

- (1)

- First, the original color images are extracted from the aerial videos and converted to grayscale images (Figure 7a);

- (2)

- (3)

- Third, the orientation of each detected line segment is calculated and the relative histogram of these line orientations is derived (Figure 7c). The angle with the highest relative frequency in this histogram is taken as the orientation of the road;

- (4)

- Last, to minimize in-plane rotation jitter, the final rotation angle for the current frame is smoothed by a first-order lag filter, which takes into account the rotation angle of the previous frame. After rotation, the roads and on-road vehicles are aligned with the horizontal direction of the image (Figure 7d). Some key techniques are elaborated in the following.
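The smoothing in the last step can be illustrated with a generic first-order lag filter. The filter coefficient and exact formula of the paper are not available in this text, so the weight `alpha` below is a hypothetical parameter, and the wrap-around handling (road orientations are equivalent modulo 180°) is an added assumption rather than something the source states.

```python
def smooth_rotation_angle(previous_deg, measured_deg, alpha=0.5):
    """First-order lag filter on a road orientation given in degrees.

    previous_deg: smoothed angle used for the last frame.
    measured_deg: raw orientation measured on the current frame.
    alpha: weight of the new measurement (hypothetical value, 0 < alpha <= 1).
    """
    # Shortest signed difference between the two orientations, in [-90, 90).
    delta = (measured_deg - previous_deg + 90.0) % 180.0 - 90.0
    # Move only a fraction alpha of the way toward the new measurement.
    return (previous_deg + alpha * delta) % 180.0
```

With `alpha = 1` the filter passes the measurement through unchanged; smaller values suppress frame-to-frame jitter at the cost of a slower response when the road direction really changes.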

- Step 1:

- Identify n: the total number of lines;

- Step 2:

- Define 180 class intervals: ;

- Step 3:

- Determine the frequency of each class, i.e., the number of lines whose angle falls within the angle interval of that class;

- Step 4:

- Calculate the relative frequency (i.e., proportion) of each class by dividing the class frequency by the total number n of lines in the sample;

- Step 5:

- Draw a rectangle for each class with the class interval as the base and the height equal to the relative frequency of the class to form a relative histogram (Figure 7c);

- Step 6:

- Identify the angle that corresponds to the highest rectangle in the relative histogram; this angle is taken as the orientation of the road.
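Steps 1 to 6 amount to taking the mode of a relative frequency histogram of line angles. The sketch below illustrates this under assumptions: the line segments are given as endpoint tuples (for example, the output of a line segment detector), orientations are folded into the range 0° to 180°, and the 180 class intervals are taken to be 1° wide, which the text does not state explicitly. `road_orientation` is a hypothetical helper name.

```python
import numpy as np


def road_orientation(segments, n_classes=180):
    """Estimate road orientation from line segments (x1, y1, x2, y2).

    Returns the lower edge (in degrees) of the most frequent class and the
    relative frequency of every class.
    """
    seg = np.asarray(segments, dtype=float)
    n = len(seg)                      # Step 1: total number of lines
    if n == 0:
        raise ValueError("at least one line segment is required")

    # Orientation of each segment, folded to [0, 180) because a line has no
    # direction. The sign convention depends on the image coordinate system.
    dx = seg[:, 2] - seg[:, 0]
    dy = seg[:, 3] - seg[:, 1]
    angles = np.degrees(np.arctan2(dy, dx)) % 180.0

    # Steps 2 and 3: class intervals and class frequencies.
    freq, edges = np.histogram(angles, bins=n_classes, range=(0.0, 180.0))
    # Step 4: relative frequency of each class.
    rel_freq = freq / n
    # Step 6: the class with the highest bar gives the road orientation.
    k = int(np.argmax(rel_freq))
    return float(edges[k]), rel_freq
```

Step 5 (drawing the histogram) is omitted because only the location of the highest bar is needed to rotate the image.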

3.3. Detector Switching Strategy

4. Evaluation

4.1. UAV Data Collection

4.2. Performance Evaluation

4.3. Results and Comparison

- (1)

- ViBe, a universal background subtraction algorithm [31];

- (2)

- (3)

- (4)

- (5)

- The original V-J method combined only with the proposed road orientation adjustment method (referred to as V-J + R in Table 2);

- (6)

- (7)

- (8)

- The original HOG + SVM method combined only with the proposed road orientation adjustment method (referred to as SVM + R in Table 2);

- (9)

- The proposed vehicle detector switching strategy applied to integrate V-J and HOG + SVM, without road orientation adjustment (referred to as V-J + SVM + S in Table 2);

- (10)

- The proposed vehicle detector switching strategy and road orientation adjustment method applied together to integrate V-J and HOG + SVM (the proposed method, V-J + SVM + R + S).

- (1)

- ViBe & Frame Difference: These two methods achieve fast detection speeds but low Quality (54.24% and 49.03%, respectively), which is too low to be acceptable for real-world applications. The reason is that some non-vehicle objects (such as tricycles and moving pedestrians) cause many false positives. In addition, slow-moving or stopped vehicles, and some black vehicles whose color is similar to that of the road surface, cannot be detected.

- (2)

- V-J vs. V-J + 9: The Completeness of V-J (76.91%) is low because many vehicles that are not horizontal cannot be detected, which generates many false negatives. The Completeness of V-J + 9 (92.31%) is significantly higher than that of the original V-J: because each image is rotated in 20° steps from 0° to 180° and detection is run 9 times, vehicles of different orientations can be detected. However, repeated detection on the same image leads to more false positives and greatly increases detection time. The Correctness of V-J + 9 (76.10%) is lower than that of the original V-J (83.22%), and the detection speed of V-J + 9 (0.079 f/s) is also significantly slower than that of V-J (1.14 f/s).

- (3)

- SVM vs. SVM + 9: The comparison of SVM and SVM + 9 shows results similar to those of V-J vs. V-J + 9. SVM + 9 achieves higher Completeness (89.21%) than SVM (73.01%), but with lower Correctness and detection speed.

- (4)

- V-J vs. V-J + R: V-J + R achieves higher Completeness (92.37%) than V-J (76.91%) because, with road orientation adjustment, on-road vehicles of unknown orientation are aligned with the horizontal direction and can then be detected by the original V-J detector. V-J + R also achieves higher Quality (82.09%) than V-J (66.79%), but its detection speed is slower than that of the original V-J for two reasons: (1) the road orientation adjustment step takes some time for road orientation detection and image rotation; and (2) after image rotation and road alignment, many more vehicles in the UAV image need to be detected.

- (5)

- SVM vs. SVM + R: SVM + R also achieves higher Quality (81.53%) than the original SVM method (64.28%). As with V-J + R, the detection speed of SVM + R is slower than that of the original SVM.

- (6)

- V-J + R vs. V-J + 9: V-J + R achieves slightly higher Completeness (92.37%) than V-J + 9 (92.31%), because the rotated vehicles in V-J + 9 are in fact not exactly aligned with the horizontal direction and therefore may not suit the original V-J detector well. V-J + R also achieves a faster detection speed (0.88 f/s) than V-J + 9 (0.079 f/s). These comparisons demonstrate that the proposed road orientation adjustment method improves both Completeness and Quality compared with the original V-J and HOG + SVM methods, at the cost of a slightly slower detection speed.

- (7)

- SVM + R vs. SVM + 9: Similarly, SVM + R achieves higher Completeness (91.13%) than SVM + 9 (89.21%) and a higher detection speed.

- (8)

- V-J vs. SVM vs. V-J + SVM + S: The proposed switching method (i.e., V-J + SVM + S) achieves a faster detection speed (1.27 f/s) than both the original V-J (1.14 f/s) and SVM (1.07 f/s) methods, because the switching strategy automatically chooses the faster of V-J and HOG + SVM during detection. Note that, without road orientation adjustment, the switching method achieves only low Quality (65.08%), similar to that of V-J (66.79%) and SVM (64.28%).

- (9)

- V-J + SVM + R + S: Our method, which also incorporates the road orientation adjustment method, achieves the highest Quality (82.32%) of the ten methods. Its detection speed is slower than that of V-J + SVM + S, which reflects a trade-off between high Quality and fast detection. However, our method is still faster than the original V-J and SVM methods. The detection speed of 1.17 f/s is acceptable for real-time applications.
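The comparison above rests on Correctness, Completeness, and Quality. The definitions of these metrics are not included in this text, so the sketch below assumes the definitions commonly used in detection evaluation: Correctness = TP / (TP + FP), Completeness = TP / (TP + FN), and Quality = TP / (TP + FP + FN). The counts in the usage note are made up for illustration and are not data from the paper; they were chosen because they yield the same percentages as the ViBe column of the Freeway scene in Table 2, which suggests the assumed definitions are consistent with the reported numbers.

```python
def detection_metrics(tp, fp, fn):
    """Return (correctness, completeness, quality) as fractions.

    tp: correctly detected vehicles (true positives)
    fp: detections that are not vehicles (false positives)
    fn: vehicles that were missed (false negatives)
    """
    correctness = tp / (tp + fp)        # share of detections that are vehicles
    completeness = tp / (tp + fn)       # share of vehicles that were detected
    quality = tp / (tp + fp + fn)       # penalizes both kinds of error
    return correctness, completeness, quality


# Illustrative counts only (not taken from the paper).
cor, com, qua = detection_metrics(tp=13, fp=7, fn=2)
print(f"Cor. {cor:.2%}  Com. {com:.2%}  Qua. {qua:.2%}")
```

Under these definitions Quality can never exceed either Correctness or Completeness, which is why V-J + 9 gains Completeness but still ends up with lower Quality than V-J + R.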

5. Discussion

5.1. Road Orientation Adjustment Method for Roadways with More Than One Orientation

5.2. Straight Line Detection Using Other Algorithms

5.3. Road Orientation Adjustment on Imagery with Low Radiometric Quality

5.4. Detection Using Oriented and Mosaicked Images

5.5. Vehicle Detection for Turning Vehicles

5.6. Sensitivity Analysis of Switching Interval

6. Concluding Remarks

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| UAV | unmanned aerial vehicle |
| SVM | support vector machine |
| HOG | histogram of oriented gradients |
| LSD | line segment detector |
| DPM | deformable part model |
| V-J | Viola-Jones object detection scheme |
| HOG + SVM | linear SVM classifier with HOG feature |
| Cor. | Correctness |
| Com. | Completeness |
| Qua. | Quality |

References

1. Rosser, K.; Pavey, K.; Fitzgerald, N.; Fatiaki, A.; Neumann, D.; Carr, D.; Hanlon, B.; Chahl, J. Autonomous Chemical Vapour Detection by Micro UAV. Remote Sens. 2015, 7, 16865–16882.

2. Gonzalez, L.F.; Montes, G.A.; Puig, E.; Johnson, S.; Mengersen, K.; Gaston, K.J. Unmanned Aerial Vehicles (UAVs) and Artificial Intelligence Revolutionizing Wildlife Monitoring and Conservation. Sensors 2016, 16, 97.

3. Boccardo, P.; Chiabrando, F.; Dutto, F.; Tonolo, F.G.; Lingua, A. UAV Deployment Exercise for Mapping Purposes: Evaluation of Emergency Response Applications. Sensors 2015, 15, 15717–15737.

4. Agrawal, A.; Hickman, M. Automated extraction of queue lengths from airborne imagery. In Proceedings of the International IEEE Conference on Intelligent Transportation Systems, Washington, DC, USA, 3–6 October 2004; pp. 297–302.

5. Coifman, B.; McCord, M.; Mishalani, R.G.; Iswalt, M. Roadway traffic monitoring from an unmanned aerial vehicle. IEE Proc. Intell. Transp. Syst. 2006, 153, 11–20.

6. Yu, G.; Zhou, B.; Wang, Y.; Wu, X.; Wang, P. Measuring algorithm for the distance to a preceding vehicle on curve road using on-board monocular camera. Int. J. Bifurc. Chaos 2015, 25, 1540038.

7. Angel, A.; Hickman, M.; Mirchandani, P.; Chandnani, D. Methods of analyzing traffic imagery collected from aerial platforms. IEEE Trans. Intell. Transp. Syst. 2003, 4, 99–107.

8. Azevedo, C.L.; Cardoso, J.L.; Ben-Akiva, M.; Costeira, J.P.; Marques, M. Automatic Vehicle Trajectory Extraction by Aerial Remote Sensing. Procedia Soc. Behav. Sci. 2014, 111, 849–858.

9. Shastry, A.C.; Schowengerdt, R.A. Airborne video registration and traffic-flow parameter estimation. IEEE Trans. Intell. Transp. Syst. 2005, 6, 391–405.

10. Yalcin, H.; Hebert, M.; Collins, R.; Black, M.J. A Flow-Based Approach to Vehicle Detection and Background Mosaicking in Airborne Video. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005.

11. Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001; pp. 511–518.

12. Dalal, N.; Triggs, B. Histograms of oriented gradients for human detection. In Proceedings of the 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 20–25 June 2005; Volume 1, pp. 886–893.

13. Cao, X.; Wu, C.; Yan, P.; Li, X. Linear SVM classification using boosting HOG features for vehicle detection in low-altitude airborne videos. In Proceedings of the IEEE International Conference on Image Processing, Brussels, Belgium, 11–14 September 2011; pp. 2421–2424.

14. Cao, X.; Wu, C.; Lan, J.; Yan, P. Vehicle Detection and Motion Analysis in Low-Altitude Airborne Video Under Urban Environment. IEEE Trans. Circuits Syst. Video Technol. 2011, 21, 1522–1533.

15. Leitloff, J.; Rosenbaum, D.; Kurz, F.; Meynberg, O.; Reinartz, P. An Operational System for Estimating Road Traffic Information from Aerial Images. Remote Sens. 2014, 6, 11315–11341.

16. Tuermer, S.; Kurz, F.; Reinartz, P.; Stilla, U. Airborne Vehicle Detection in Dense Urban Areas Using HoG Features and Disparity Maps. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 2327–2337.

17. Xu, Y.; Yu, G.; Wang, Y.; Wu, X. Vehicle Detection and Tracking from Airborne Images. In Proceedings of the 15th COTA International Conference of Transportation Professionals, Beijing, China, 24–27 July 2015; pp. 641–649.

18. Jones, M.; Viola, P. Fast Multi-view Face Detection. In Proceedings of the 2003 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Madison, WI, USA, 16–22 June 2003; Volume 11, pp. 276–286.

19. Moranduzzo, T.; Melgani, F. Detecting cars in UAV images with a catalog-based approach. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6356–6367.

20. Moranduzzo, T.; Melgani, F. Automatic car counting method for unmanned aerial vehicle images. IEEE Trans. Geosci. Remote Sens. 2014, 52, 1635–1647.

21. Liu, K.; Mattyus, G. Fast Multiclass Vehicle Detection on Aerial Images. IEEE Geosci. Remote Sens. Lett. 2015, 12, 1938–1942.

22. Felzenszwalb, P.F.; Girshick, R.B.; McAllester, D.; Ramanan, D. Object Detection with Discriminatively Trained Part Based Models. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 1627–1645.

23. Friedman, J.; Hastie, T.; Tibshirani, R. Additive Logistic Regression: A Statistical View of Boosting. Ann. Stat. 2000, 28, 374–376.

24. Cucchiara, R.; Piccardi, M.; Mello, P. Image analysis and rule-based reasoning for a traffic monitoring system. IEEE Trans. Intell. Transp. Syst. 2000, 1, 119–130.

25. Tao, H.; Sawhney, H.; Kumar, R. Object tracking with Bayesian estimation of dynamic layer representations. IEEE Trans. Pattern Anal. Mach. Intell. 2002, 24, 75–89.

26. Ma, Y.; Wu, X.; Yu, G.; Xu, Y.; Wang, Y. Pedestrian Detection and Tracking from Low-Resolution Unmanned Aerial Vehicle Thermal Imagery. Sensors 2016, 16, 446.

27. Grompone von Gioi, R.; Jakubowicz, J.; Morel, J.M.; Randall, G. LSD: A Fast Line Segment Detector with a False Detection Control. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 722–732.

28. Ballard, D.H. Generalizing the Hough Transform to Detect Arbitrary Shapes. Pattern Recognit. 1981, 13, 111–122.

29. Grompone von Gioi, R.; Jakubowicz, J.; Morel, J.M.; Randall, G. LSD: A Line Segment Detector. Image Process. On Line 2012, 2, 35–55.

30. Maerivoet, S.; De Moor, B. Traffic Flow Theory. Physics 2005, 1, 5–7.

31. Barnich, O.; Van Droogenbroeck, M. ViBe: A universal background subtraction algorithm for video sequences. IEEE Trans. Image Process. 2011, 20, 1709–1724.

32. Faugeras, O.; Deriche, R.; Mathieu, H.; Ayache, N.J.; Randall, G. The Depth and Motion Analysis Machine. Int. J. Pattern Recognit. Artif. Intell. 1992, 6, 353–385.

33. Burns, J.B.; Hanson, A.R.; Riseman, E.M. Extracting Straight Lines. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 425–455.

34. Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. 1986, 8, 679–698.

35. Uyttendaele, M.; Eden, A.; Szeliski, R. Eliminating Ghosting and Exposure Artifacts in Image Mosaics. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001; pp. 509–516.

36. Dollár, P.; Tu, Z.; Perona, P.; Belongie, S. Integral Channel Features. In Proceedings of the British Machine Vision Conference, London, UK, 7–10 September 2009.

37. Chen, X.; Xiang, S.; Liu, C.L.; Pan, C.H. Vehicle detection in satellite images by hybrid deep convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1797–1801.

38. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards Real-Time Object Detection with Region Proposal Networks. In Proceedings of the Neural Information Processing Systems Conference, Montreal, QC, Canada, 7–12 December 2015.

39. Faster R-CNN. Available online: https://github.com/rbgirshick/py-faster-rcnn (accessed on 20 June 2016).

| Scenario | Traffic Condition | Weather | Location | Time | Flight Altitude |
|---|---|---|---|---|---|
| Freeway | Non-congested | Cloudy | G6 Jingzang Expressway | 15:40–16:40, 18 November 2014 | 150 m |
| Urban road 1 | Non-congested | Foggy | Xueyuan Road | 15:30–16:30, 26 March 2014 | 170 m |
| Urban road 2 | Congested | Cloudy | North Fourth Ring Road | 16:20–17:20, 6 November 2014 | 150 m |
| Urban road 3 | Non-congested | Cloudy | Xueyuan Road | 15:10–16:10, 12 August 2014 | 100 m |
| Urban road 4 | Congested | Cloudy | North Fourth Ring Road | 16:30–17:30, 5 May 2015 | 115 m |

| Scene | Metrics | ViBe | Frame Diff | V-J | V-J + 9 | V-J + R | SVM | SVM + 9 | SVM + R | V-J + SVM + S | V-J + SVM + R + S |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Freeway | Cor. (%) | 65.00% | 61.11% | 85.71% | 87.50% | 88.57% | 91.30% | 88.24% | 92.27% | 89.01% | 89.37% |
| | Com. (%) | 86.67% | 78.57% | 75.00% | 92.11% | 93.94% | 55.26% | 85.71% | 87.04% | 66.76% | 94.24% |
| | Qua. (%) | 59.09% | 52.38% | 66.67% | 81.40% | 83.78% | 52.50% | 76.92% | 81.13% | 61.67% | 84.74% |
| | Speed (f/s) | 5.59 | 9.96 | 1.16 | 0.064 | 0.96 | 1.17 | 0.083 | 1.03 | 1.17 | 1.08 |
| Urban road 1 | Cor. (%) | 49.06% | 77.78% | 89.47% | 73.53% | 88.00% | 82.05% | 80.81% | 85.64% | 88.86% | 87.89% |
| | Com. (%) | 66.67% | 48.84% | 70.83% | 92.59% | 91.67% | 69.57% | 87.43% | 90.96% | 70.73% | 91.46% |
| | Qua. (%) | 39.39% | 42.86% | 65.38% | 69.44% | 81.48% | 60.38% | 72.40% | 78.92% | 62.89% | 81.23% |
| | Speed (f/s) | 6.64 | 10.40 | 2.08 | 0.16 | 1.57 | 1.15 | 0.079 | 1.03 | 2.01 | 1.80 |
| Urban road 2 | Cor. (%) | 77.87% | 75.26% | 85.06% | 92.16% | 95.65% | 98.67% | 96.34% | 98.01% | 98.04% | 96.39% |
| | Com. (%) | 86.36% | 68.87% | 89.16% | 94.95% | 94.62% | 77.08% | 91.20% | 92.96% | 77.74% | 94.24% |
| | Qua. (%) | 69.34% | 56.15% | 77.08% | 87.85% | 90.72% | 76.29% | 88.14% | 91.25% | 76.55% | 91.02% |
| | Speed (f/s) | 6.17 | 10.01 | 0.50 | 0.049 | 0.43 | 1.02 | 0.065 | 0.87 | 1.00 | 0.94 |
| Urban road 3 | Cor. (%) | 51.11% | 53.49% | 64.29% | 40.54% | 77.78% | 66.39% | 61.45% | 75.94% | 64.43% | 78.77% |
| | Com. (%) | 79.31% | 85.19% | 69.23% | 88.24% | 92.72% | 84.21% | 89.94% | 90.70% | 71.01% | 92.16% |
| | Qua. (%) | 45.10% | 48.94% | 50.00% | 38.46% | 73.30% | 59.04% | 57.50% | 70.46% | 51.01% | 73.82% |
| | Speed (f/s) | 6.22 | 10.26 | 1.37 | 0.063 | 0.97 | 1.16 | 0.077 | 1.04 | 1.36 | 1.25 |
| Urban road 4 | Cor. (%) | 67.89% | 77.53% | 91.59% | 86.76% | 90.32% | 90.99% | 86.99% | 90.92% | 91.05% | 90.09% |
| | Com. (%) | 80.43% | 51.49% | 80.33% | 93.65% | 88.89% | 78.91% | 91.73% | 93.96% | 78.98% | 88.64% |
| | Qua. (%) | 58.27% | 44.81% | 74.81% | 81.94% | 81.16% | 73.19% | 80.67% | 85.90% | 73.29% | 80.76% |
| | Speed (f/s) | 5.27 | 9.64 | 0.60 | 0.056 | 0.46 | 0.85 | 0.066 | 0.75 | 0.83 | 0.79 |
| Average | Cor. (%) | 62.19% | 69.03% | 83.22% | 76.10% | 88.06% | 85.88% | 82.76% | 88.56% | 86.28% | 88.50% |
| | Com. (%) | 79.89% | 66.59% | 76.91% | 92.31% | 92.37% | 73.01% | 89.21% | 91.13% | 73.04% | 92.15% |
| | Qua. (%) | 54.24% | 49.03% | 66.79% | 71.82% | 82.09% | 64.28% | 75.13% | 81.53% | 65.08% | 82.32% |
| | Speed (f/s) | 5.98 | 10.05 | 1.14 | 0.079 | 0.88 | 1.07 | 0.074 | 0.942 | 1.27 | 1.17 |

| Scene | Metrics | T = 24 (1 s) | T = 120 (5 s) | T = 240 (10 s) | T = 360 (15 s) | T = 480 (20 s) | T = 600 (25 s) | T = 720 (30 s) |
|---|---|---|---|---|---|---|---|---|
| Freeway | Cor. (%) | 89.37% | 88.76% | 89.60% | 88.96% | 88.72% | 89.66% | 90.52% |
| | Com. (%) | 94.24% | 94.34% | 93.37% | 94.16% | 93.72% | 93.04% | 91.57% |
| | Qua. (%) | 84.74% | 84.27% | 84.24% | 84.30% | 83.74% | 84.03% | 83.55% |
| | Speed (f/s) | 1.0843 | 1.0811 | 1.0800 | 1.0847 | 1.0882 | 1.0730 | 1.0763 |
| Urban road 1 | Cor. (%) | 87.89% | 87.21% | 88.00% | 87.84% | 87.17% | 87.50% | 87.21% |
| | Com. (%) | 91.46% | 91.65% | 91.67% | 91.23% | 91.07% | 91.42% | 91.09% |
| | Qua. (%) | 81.23% | 80.79% | 81.48% | 81.00% | 80.30% | 80.86% | 80.36% |
| | Speed (f/s) | 1.8014 | 1.8255 | 1.8285 | 1.8329 | 1.8334 | 1.8337 | 1.8339 |
| Urban road 2 | Cor. (%) | 96.39% | 96.31% | 96.90% | 97.22% | 96.59% | 96.51% | 96.36% |
| | Com. (%) | 94.24% | 94.13% | 93.98% | 93.58% | 93.10% | 92.28% | 92.87% |
| | Qua. (%) | 91.02% | 90.86% | 91.24% | 91.15% | 90.14% | 89.30% | 89.72% |
| | Speed (f/s) | 0.9352 | 0.9406 | 0.9442 | 0.9475 | 0.9434 | 0.9453 | 0.9463 |
| Urban road 3 | Cor. (%) | 78.77% | 78.33% | 78.75% | 78.49% | 77.65% | 77.72% | 77.47% |
| | Com. (%) | 92.16% | 91.26% | 92.36% | 91.53% | 91.45% | 91.78% | 91.56% |
| | Qua. (%) | 73.82% | 72.87% | 73.94% | 73.18% | 72.40% | 72.66% | 72.31% |
| | Speed (f/s) | 1.2505 | 1.2519 | 1.2372 | 1.2608 | 1.2610 | 1.2453 | 1.2454 |
| Urban road 4 | Cor. (%) | 90.09% | 90.41% | 89.93% | 90.26% | 90.30% | 90.33% | 90.52% |
| | Com. (%) | 88.64% | 89.42% | 90.19% | 91.13% | 91.17% | 91.80% | 92.70% |
| | Qua. (%) | 80.76% | 81.68% | 81.91% | 82.97% | 83.04% | 83.58% | 84.49% |
| | Speed (f/s) | 0.7893 | 0.7918 | 0.7946 | 0.7981 | 0.7957 | 0.7977 | 0.7984 |
| Average | Cor. (%) | 88.50% | 88.20% | 88.64% | 88.55% | 88.09% | 88.34% | 88.41% |
| | Com. (%) | 92.15% | 92.16% | 92.31% | 92.32% | 92.10% | 92.06% | 91.96% |
| | Qua. (%) | 82.32% | 82.09% | 82.56% | 82.52% | 81.92% | 82.08% | 82.09% |
| | Speed (f/s) | 1.1722 | 1.1782 | 1.1769 | 1.1848 | 1.1843 | 1.1790 | 1.1801 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Xu, Y.; Yu, G.; Wang, Y.; Wu, X.; Ma, Y. A Hybrid Vehicle Detection Method Based on Viola-Jones and HOG + SVM from UAV Images. Sensors 2016, 16, 1325. https://doi.org/10.3390/s16081325
