Distributed Fast Self-Organized Maps for Massive Spectrophotometric Data Analysis †

Abstract

1. Introduction

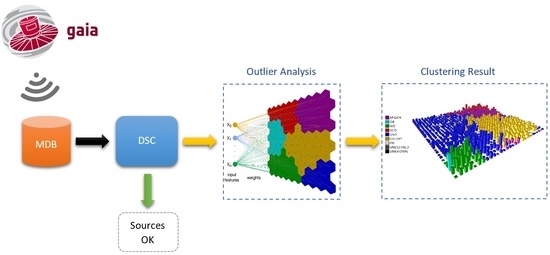

- Apsis is devoted to the classification of all the observed sources [11,12,13] by means of several work packages enclosed within this CU, the Discrete Source Classifier (DSC) [13] being the main one. DSC processes the whole Gaia dataset in order to classify the sources into known astronomical types (star, quasar, galaxy, etc.) by means of Support Vector Machines (SVM) [14], tagging as outliers those sources that do not appropriately fit these models. The rest of the modules are aimed at more specific classification tasks for subgroups of sources that satisfy certain requirements.

  This article is associated with one of these packages, Outlier Analysis (OA, Figure 2), which applies unsupervised Artificial Intelligence methods to the spectrophotometric data (BP/RP spectra) of the sources tagged as outliers by the DSC module [13]. A source is tagged either because it cannot be fitted by any of the models used by DSC (a photometric outlier) or because it cannot be classified with enough probability (a weakly classified source).

  These outlier sources are expected to be of the order of 10% of the whole Gaia dataset, i.e., approximately $10^{8}$ sources (100 Terabytes). They will be processed by the OA module in order to shed some light on their nature, with the aim of providing not only plain astronomical object types (stars, ultra-cool dwarfs, white dwarfs, planetary nebulae, quasars, and galaxies), but also sub-types [11].

- The Catalog Access unit aims to design and implement the Gaia Archive, providing tools for the astronomical community to access the Gaia catalog, visualize data, or even perform data processing tasks. A Data Mining tool based on the OA module will be published, so that scientists can run their own unsupervised analysis on Gaia data. Finally, a visualization tool (Section 4) will also be released to ease the post-analysis stage for OA-based results.

- Dimensionality reduction lowers the number of features that is used to explore the data, making the process lighter and therefore faster without losing too much information. Principal Component Analysis (PCA) [15] and Linear Discriminant Analysis (LDA) [16] are the most popular algorithms for dimensionality reduction.

- Clustering organizes objects into a number of groups with no prior information, just according to the nature of the data. There are many different algorithms available, and they must be carefully selected according to the particular problem under study [17].

2. The Gaia Data

BP/RP Spectra Preprocessing for Outlier Analysis

- The low signal-to-noise ratio (SNR) pixels that lie on the extremes of BP/RP spectra are discarded due to the low efficiency of the passbands (less than 5%). It may be remarked that this is a common step for almost all the CU8 algorithms.

- The BP/RP spectra are sanitized, interpolating those pixels where the passband efficiency is acceptable and whose flux value is missing or wrong, such as negative values.

- GOG data is oversampled, and a downsampling is done on the given BP/RP spectra in order to reduce their dimensionality from 180 pixels to 60 pixels. Despite losing some information, it does save up to 60% of processing time and the clustering quality is not significantly affected by this decision (less than 1% effect in the tests conducted). Apart from OA, a number of modules are also taking similar approaches to speed up their execution.

- Both BP and RP spectra are joined into a single spectrum, removing the overlapping region to avoid redundant wavelengths. The cut point is determined empirically by a domain expert according to the response of the satellite’s instrument. Currently, the same cut point is used for all the objects, although this may change in the future since the flux and wavelength calibration for the spectrophotometry is not final yet.

- The Cardelli extinction model [32] is applied to the joint spectrum in order to minimize the impact of interstellar reddening on the classification.

- Finally, the treated spectrum is scaled to fix its area to one unit, so that sources with different brightness can be compared using similarity distance functions on their spectra:

  $$\hat{f}_i = \frac{f_i}{\sum_{j \in S} f_j}$$

  where $f_i$ is the flux of a spectrum in band $i$, and $S$ represents the bands of the spectrum.
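The preprocessing steps above can be sketched in a few lines of plain Python. This is only a minimal illustration, not the OA module's code: the function names and the parameters `trim`, `factor`, `bp_cut`, and `rp_cut` are hypothetical, downsampling is done here by simple block averaging (the text does not state which method is used), and the extinction correction is omitted.

```python
import math


def is_valid(f):
    """A pixel is usable if it is present, finite, and non-negative."""
    return f is not None and math.isfinite(f) and f >= 0.0


def sanitize(flux):
    """Replace invalid pixels by linear interpolation between valid neighbors."""
    good = [i for i, f in enumerate(flux) if is_valid(f)]
    if not good:
        raise ValueError("spectrum has no valid pixels")
    out = []
    for i, f in enumerate(flux):
        if is_valid(f):
            out.append(float(f))
            continue
        left = max((g for g in good if g < i), default=None)
        right = min((g for g in good if g > i), default=None)
        if left is None:        # leading gap: copy the nearest valid pixel
            out.append(float(flux[right]))
        elif right is None:     # trailing gap: copy the nearest valid pixel
            out.append(float(flux[left]))
        else:
            w = (i - left) / (right - left)
            out.append((1 - w) * flux[left] + w * flux[right])
    return out


def downsample(flux, factor):
    """Average consecutive groups of `factor` pixels (e.g. 180 -> 60 for factor 3)."""
    if len(flux) % factor:
        raise ValueError("length must be a multiple of factor")
    return [sum(flux[i:i + factor]) / factor for i in range(0, len(flux), factor)]


def normalize_area(flux):
    """Scale the spectrum so that its pixel fluxes sum to one."""
    total = sum(flux)
    if total <= 0:
        raise ValueError("cannot normalize a spectrum with non-positive total flux")
    return [f / total for f in flux]


def preprocess(bp, rp, trim, factor, bp_cut, rp_cut):
    """Trim, sanitize, downsample, join, and normalize one BP/RP pair.

    All numeric parameters are hypothetical placeholders, and the
    extinction correction step is deliberately left out.
    """
    parts = []
    for flux in (bp, rp):
        flux = flux[trim:len(flux) - trim]      # drop low-SNR edge pixels
        parts.append(downsample(sanitize(flux), factor))
    joined = parts[0][:bp_cut] + parts[1][rp_cut:]  # remove the overlapping region
    return normalize_area(joined)


# Toy spectra: one negative pixel in BP and one missing pixel in RP.
bp = [1.0, 2.0, -1.0, 4.0, 5.0, 6.0, 7.0, 8.0]
rp = [8.0, 7.0, 6.0, None, 4.0, 3.0, 2.0, 1.0]
spectrum = preprocess(bp, rp, trim=1, factor=3, bp_cut=2, rp_cut=1)
print(spectrum)  # [0.25, 0.5, 0.25]
```

Normalizing last means that any similarity distance computed afterwards compares spectral shapes rather than brightness, which is the purpose stated for the final step.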

3. Analyzing Gaia Outliers by Means of Self-Organized Maps

3.1. A Parallel Self-Organized Maps Learning Algorithm
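As a generic illustration of why SOM training lends itself to distributed execution, one epoch of the textbook batch SOM can be split into a "map" step that accumulates partial sums over each data partition and a "reduce" step that adds those sums and recomputes the neuron weights. The sketch below is an assumption-laden example in plain Python: it is neither the FastSOM algorithm nor the Apache Hadoop or Apache Spark code discussed in this article, and all function names, the Gaussian neighborhood, and the toy data are hypothetical.

```python
import math


def best_matching_unit(weights, x):
    """Index of the neuron whose weight vector is closest to x (squared Euclidean)."""
    return min(range(len(weights)),
               key=lambda k: sum((w - v) ** 2 for w, v in zip(weights[k], x)))


def neighborhood(grid, k, j, sigma):
    """Gaussian neighborhood between neurons k and j on the 2-D map grid."""
    d2 = (grid[k][0] - grid[j][0]) ** 2 + (grid[k][1] - grid[j][1]) ** 2
    return math.exp(-d2 / (2.0 * sigma ** 2))


def map_partition(weights, grid, partition, sigma):
    """'Map' step: accumulate neighborhood-weighted sums for one data partition."""
    dim = len(weights[0])
    num = [[0.0] * dim for _ in weights]   # per-neuron weighted sum of samples
    den = [0.0] * len(weights)             # per-neuron sum of neighborhood values
    for x in partition:
        bmu = best_matching_unit(weights, x)
        for j in range(len(weights)):
            h = neighborhood(grid, bmu, j, sigma)
            den[j] += h
            for d in range(dim):
                num[j][d] += h * x[d]
    return num, den


def reduce_partials(partials, weights):
    """'Reduce' step: add the partial sums and compute the updated weights."""
    dim = len(weights[0])
    updated = []
    for j in range(len(weights)):
        den = sum(p[1][j] for p in partials)
        if den == 0.0:                     # neuron received no contribution
            updated.append(list(weights[j]))
        else:
            updated.append([sum(p[0][j][d] for p in partials) / den
                            for d in range(dim)])
    return updated


# Toy example: a 1x2 map, four 2-D samples split into two partitions.
grid = [(0, 0), (0, 1)]
weights = [[0.0, 0.0], [1.0, 1.0]]
data = [[0.1, 0.0], [0.9, 1.0], [0.2, 0.1], [1.0, 0.8]]
partials = [map_partition(weights, grid, part, sigma=0.5)
            for part in (data[:2], data[2:])]
updated = reduce_partials(partials, weights)
print(updated)
```

Because the partial sums are additive, the result does not depend on how the data are partitioned (up to floating-point rounding), so each partition can be processed on a different worker.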

3.2. Integration into SAGA Software Pipeline

4. A Visualization Tool to Explore Astronomical Self-Organized Maps

- Catalog labels (Figure 8a) show the most probable astronomical class associated with each cluster, according to an offline cross-match performed on external astronomical databases, such as Simbad [38]. The cross-match uses the web service provided by the database to perform a radial search around each source's celestial position (right ascension and declination), keeping just the closest match. The application can handle different cross-matches simultaneously, so that the user can visualize and compare them.

- Color distribution (Figure 8b) displays how the sources are distributed among the neurons according to their color. Magnitude differences at blue and red wavelengths are depicted in the map using a color gradient.

- Category distribution (Figure 8c) represents a particular type of astronomical object (e.g., stars, white dwarfs, or quasars), displaying how such sources are distributed among the neurons.

- Combined visualizations (Figure 9) allow the user to explore different views by means of three-dimensional graphs, where a qualitative property (labels, category, etc.) is shown as the baseline, and a quantitative property (hits, distance, etc.) is represented as height bars. Information displayed from different perspectives allows the user to discover new relationships within the data.

- Template labels (Figure 8d) allow the user to observe the representative label associated with each neuron. These classes are determined by a template matching procedure on different pre-built model sets, and the user can select which one to display. In Figure 8d, a set of reference models based on Gaia simulations is displayed on top of the original dataset described in Section 2.
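The catalog-label view described above relies on a radial search that keeps only the closest match, followed by a per-cluster label. A minimal sketch of that logic, working on local lists rather than on any real web service, might look as follows. The function names, the dictionary layout, the search radius, and the majority-vote rule used to pick the "most probable" class are all assumptions made for illustration.

```python
import math
from collections import Counter


def angular_separation_deg(ra1, dec1, ra2, dec2):
    """Great-circle separation in degrees (haversine formula); inputs in degrees."""
    ra1, dec1, ra2, dec2 = map(math.radians, (ra1, dec1, ra2, dec2))
    a = (math.sin((dec2 - dec1) / 2) ** 2
         + math.cos(dec1) * math.cos(dec2) * math.sin((ra2 - ra1) / 2) ** 2)
    return math.degrees(2 * math.asin(min(1.0, math.sqrt(a))))


def closest_match(source, candidates, radius_deg):
    """Return the candidate nearest to `source` within `radius_deg`, or None."""
    best, best_sep = None, radius_deg
    for cand in candidates:
        sep = angular_separation_deg(source["ra"], source["dec"],
                                     cand["ra"], cand["dec"])
        if sep <= best_sep:
            best, best_sep = cand, sep
    return best


def cluster_label(labels):
    """Most frequent non-missing label among the sources of one neuron, or None."""
    counts = Counter(label for label in labels if label is not None)
    return counts.most_common(1)[0][0] if counts else None


# Hypothetical source and catalog entries (positions in degrees).
source = {"ra": 10.0, "dec": 20.0}
candidates = [
    {"ra": 10.0, "dec": 20.001, "label": "star"},
    {"ra": 10.0005, "dec": 20.0, "label": "quasar"},
    {"ra": 11.0, "dec": 20.0, "label": "galaxy"},
]
match = closest_match(source, candidates, radius_deg=0.002)
print(match["label"])                                    # quasar
print(cluster_label(["star", "quasar", "star", None]))   # star
```

Sources without any match inside the radius contribute `None`, which the label vote ignores, so sparsely matched neurons are still labeled by whatever matches they do have.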

5. Performance Evaluation

6. Conclusions

- The FastSOM algorithm considerably speeds up the execution, saving up to 60% of the run time compared to the regular implementation, with a loss of precision below 5% on the reference datasets.

- A scalable and distributed design was achieved for the SOM learning algorithm, allowing for the analysis of very large data volumes in a reasonable time (Section 5).

- The Apache Spark implementation was found to be very beneficial for small and medium-sized datasets. However, for enormous volumes of data, the intensive memory usage makes the algorithm unstable and resource dependent, so fine-tuning is needed to avoid excessive memory consumption that could eventually make it crash.

- The Apache Hadoop version is capable of handling and processing huge datasets in reasonable times, providing a scalable and stable solution for processing vast volumes of information.

- The OA module has been successfully integrated into the CU8 software pipeline (SAGA), and it is planned to produce its first scientific results for the third Gaia Data Release around 2020. According to the tests conducted during the validation stage, OA is expected to take approximately six days to process a hundred million sources (about 10% of the whole Gaia dataset).

- The Apache Spark implementation will be available to the astronomical community as a DPAC CU9 Data Mining tool, so that astronomers can conduct their own analyses on Gaia data.

- Finally, a visualization tool to explore astronomical SOMs will be published along with the Gaia Data Releases, so that the OA module results, as well as other samples of Gaia data processed using the CU9 Data Mining tool, can be further analyzed by the community.
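As a rough sanity check on the run-time estimate quoted above for the OA module, and assuming the six days are wall-clock time spent uniformly over $10^{8}$ sources, the implied average throughput is

$$\frac{10^{8}\ \text{sources}}{6 \times 86\,400\ \text{s}} \approx 193\ \text{sources/s}.$$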

7. Future Work

- Regarding the quality of the scientific results, alternative preprocessing stages are being studied, as well as different postprocessing methods oriented to the visualization tool (Section 4).

- The overhead caused by SAGA internal operations is expected to be significantly reduced in the upcoming SAGA implementations, so that it will speed up the execution of the modules, including the OA module.

- In order to improve the performance of the algorithm, some very promising CPU/GPU mixed computing tests have been conducted for an implementation based on Nvidia Compute Unified Device Architecture (CUDA) (Santa Clara, CA, USA) [40]. Although SAGA does not support GPU computing, this paradigm may be suitable for both Apache Hadoop and Apache Spark and it is currently being studied.

- Our clustering analysis tool based on Self-Organized Maps is being applied to study outlier sources in the Gaia mission, but it could be used to analyze other complex databases, even from other domains. Very promising results have been found for intrusion detection over communication networks, as well as for user profile identification in online marketing environments.

Author Contributions

Funding

Conflicts of Interest

References

1. Karau, H.; Konwinski, A.; Wendell, P.; Zaharia, M. Learning Spark: Lightning-Fast Big Data Analytics, 1st ed.; O’Reilly Media, Inc.: Newton, MA, USA, 2015.

2. White, T. Hadoop: The Definitive Guide; O’Reilly Media Inc.: Newton, MA, USA, 2015.

3. Blanton, M.R.; Bershady, M.A.; Abolfathi, B.; Albareti, F.D.; Prieto, C.A.; Almeida, A.; Alonso-García, J.; Anders, F.; Anderson, S.F.; Andrews, B.; et al. Sloan digital sky survey IV: Mapping the Milky Way, nearby galaxies, and the distant universe. Astron. J. 2017, 154, 28.

4. Gaia Collaboration; Prusti, T.; de Bruijne, J.H.J.; Brown, A.G.A.; Vallenari, A.; Babusiaux, C.; Bailer-Jones, C.A.L.; Bastian, U.; Biermann, M.; Evans, D.W.; et al. The Gaia mission. Astron. Astrophys. 2016, 595, A1.

5. LSST Science Collaboration; Abell, P.A.; Allison, J.; Anderson, S.F.; Andrew, J.R.; Angel, J.R.P.; Armus, L.; Arnett, D.; Asztalos, S.J.; Axelrod, T.S.; et al. LSST Science Book, Version 2.0. arXiv, 2009; arXiv:0912.0201.

6. Jordi, C.; Gebran, M.; Carrasco, J.M.; de Bruijne, J.; Voss, H.; Fabricius, C.; Knude, J.; Vallenari, A.; Kohley, R.; Mora, A. Gaia broad band photometry. Astron. Astrophys. 2010, 523, A48.

7. De Bruijne, J.H.J. Science performance of Gaia, ESA’s space-astrometry mission. Astrophys. Space Sci. 2012, 341, 31–41.

8. Gaia Collaboration; Brown, A.G.A.; Vallenari, A.; Prusti, T.; de Bruijne, J.H.J.; Babusiaux, C.; Bailer-Jones, C.A.L.; et al. Gaia Data Release 2. Summary of the contents and survey properties. arXiv, 2018; arXiv:1804.09365.

9. Gaia Collaboration; Brown, A.G.A.; Vallenari, A.; Prusti, T.; de Bruijne, J.H.J.; Babusiaux, C.; Bailer-Jones, C.A.L. Gaia Data Release 1. Summary of the astrometric, photometric, and survey properties. Astron. Astrophys. 2016, 595, A2.

10. Bailer-Jones, C.A.L.; Andrae, R.; Arcay, B.; Astraatmadja, T.; Bellas-Velidis, I.; Berihuete, A.; Bijaoui, A.; Carrión, C.; Dafonte, C.; Damerdji, Y. The Gaia astrophysical parameters inference system (Apsis). Pre-launch description. Astron. Astrophys. 2013, 559, A74.

11. Manteiga, M.; Carricajo, I.; Rodríguez, A.; Dafonte, C.; Arcay, B. Starmind: A fuzzy logic knowledge-based system for the automated classification of stars in the MK system. Astron. J. 2009, 137, 3245–3253.

12. Ordóñez, D.; Dafonte, C.; Arcay, B.; Manteiga, M. HSC: A multi-resolution clustering strategy in Self-Organizing Maps applied to astronomical observations. ASOC Elsevier 2012, 12, 204–215.

13. Smith, K.W. The discrete source classifier in Gaia-apsis. In Astrostatistics and Data Mining; Sarro, L.M., Eyer, L., O’Mullane, W., De Ridder, J., Eds.; Springer: New York, NY, USA, 2012; p. 239.

14. Cortes, C.; Vapnik, V. Support-Vector Networks. Mach. Learn. 1995, 20, 273–297.

15. Jolliffe, I. Principal Component Analysis; Springer: New York, NY, USA, 2002.

16. Fisher, R.A. The use of multiple measurements in taxonomic problems. Ann. Eugen. 1936, 7, 179–188.

17. Xu, R.; Wunsch, D. Survey of clustering algorithms. IEEE Trans. Neural Netw. 2005, 16, 645–678.

18. Kohonen, T. Self-organized formation of topologically correct feature maps. Biol. Cybern. 1982, 43, 59–69.

19. Kohonen, T. Self-Organizing Maps; Springer: Berlin/Heidelberg, Germany, 2001.

20. Fustes, D.; Dafonte, C.; Arcay, B.; Manteiga, M.; Smith, K.; Vallenari, A.; Luri, X. SOM ensemble for unsupervised outlier analysis. Application to outlier identification in the Gaia astronomical survey. ESWA 2013, 40, 1530–1541.

21. Fustes, D.; Manteiga, M.; Dafonte, C.; Arcay, B.; Ulla, A.; Smith, K.; Borrachero, R.; Sordo, R. An approach to the analysis of SDSS spectroscopic outliers based on self-organizing maps: Designing the outlier analysis software package for the next Gaia survey. Astron. Astrophys. 2013, 559, A7.

22. Geach, J.E. Unsupervised self-organized mapping: A versatile empirical tool for object selection, classification and redshift estimation in large surveys. MNRAS 2012, 419, 2633–2645.

23. Way, M.J.; Gazis, P.R.; Scargle, J.D. Structure in the Three-dimensional galaxy distribution. I. Methods and example results. Astrophys. J. 2011, 727, 48.

24. Way, M.J.; Klose, C.D. Can self-organizing maps accurately predict photometric redshifts? Publ. Astron. Soc. Pac. 2012, 124, 274–279.

25. Süveges, M.; Barblan, F.; Lecoeur-Taïbi, I.; Prša, A.; Holl, B.; Eyer, L.; Kochoska, A.; Mowlavi, N.; Rimoldini, L. Gaia eclipsing binary and multiple systems. Supervised classification and self-organizing maps. Astron. Astrophys. 2017, 603, A117.

26. Armstrong, D.J.; Pollacco, D.; Santerne, A. Transit shapes and self-organizing maps as a tool for ranking planetary candidates: Application to Kepler and K2. MNRAS 2017, 465, 2634–2642.

27. Valette, V.; Amsif, K. CNES Gaia Data Processing Centre: A Complex Operation Plan; American Institute of Aeronautics and Astronautics: Reston, VA, USA, 2012.

28. Brunet, P.; Montmorry, A.; Frezouls, B. Big data challenges, an insight into the GAIA Hadoop solution; American Institute of Aeronautics and Astronautics: Reston, VA, USA, 2012.

29. Tsalmantza, P.; Karampelas, A.; Kontizas, M.; Bailer-Jones, C.A.L.; Rocca-Volmerange, B.; Livanou, E.; Bellas-Velidis, I.; Kontizas, E.; Vallenari, A. A semi-empirical library of galaxy spectra for Gaia classification based on SDSS data and PÉGASE models. Astron. Astrophys. 2012, 537, A42.

30. Isasi, Y.; Figueras, F.; Luri, X.; Robin, A.C. GUMS & GOG: Simulating the universe for Gaia. In Highlights of Spanish Astrophysics V; Springer: Berlin, Germany, 2010; Volume 14, p. 415.

31. Luri, X.; Palmer, M.; Arenou, F.; Masana, E.; de Bruijne, J.; Antiche, E.; Babusiaux, C.; Borrachero, R.; Sartoretti, P.; et al. Overview and stellar statistics of the expected Gaia Catalogue using the Gaia Object Generator. Astron. Astrophys. 2014, 566, A119.

32. Cardelli, J.A.; Clayton, G.C.; Mathis, J.S. The relationship between infrared, optical, and ultraviolet extinction. Astrophys. J. 1989, 345, 245–256.

33. Garabato, D.; Dafonte, C.; Manteiga, M.; Fustes, D.; Álvarez, M.A.; Arcay, B. A distributed learning algorithm for Self-Organizing Maps intended for outlier analysis in the GAIA—ESA mission. In Proceedings of the 2015 Conference of the International Fuzzy Systems Association and the European Society for Fuzzy Logic and Technology, Gijón, Spain, 30 June–3 July 2015.

34. Thorndike, R.L. Who belongs in the family? Psychometrika 1953, 18, 267–276.

35. Lusk, E.; Doss, N.; Skjellum, A. A high-performance, portable implementation of the MPI message passing interface standard. Parallel Comput. 1996, 22, 789–828.

36. Dean, J.; Ghemawat, S. MapReduce: Simplified data processing on large clusters. Commun. ACM 2008, 51, 107–113.

37. Álvarez, M.A.; Dafonte, C.; Garabato, D.; Manteiga, M. Analysis and knowledge discovery by means of self-organizing maps for Gaia data releases. In Neural Information Processing, Proceedings of the 23rd International Conference on Neural Information Processing ICONIP, Kyoto, Japan, 16–21 October 2016; Springer International Publishing: Cham, Switzerland, 2016; pp. 137–144.

38. Wenger, M.; Ochsenbein, F.; Egret, D.; Dubois, P.; Bonnarel, F.; Borde, S.; Genova, F.; Jasniewicz, G.; Laloë, S.; Lesteven, S.; et al. The SIMBAD astronomical database: The CDS reference database for astronomical objects. Astron. Astrophys. Suppl. Ser. 2000, 143, 9–22.

39. Taylor, M.; Boch, T.; Taylor, J. SAMP, the simple application messaging protocol: Letting applications talk to each other. Astron. Comput. 2015, 11, 81–90.

40. Sanders, J.; Kandrot, E. CUDA by Example: An Introduction to General-Purpose GPU Programming, 1st ed.; Addison-Wesley Professional: Boston, MA, USA, 2010.

| | # of Cores | Memory (GB) |
|---|---|---|
| Local single machine | 32 | 128 |
| Local cluster | 104 | 392 |
| SAGA-CNES cluster | ∼1100 | 6050 |

| | 10 k | 100 k | 1 M | 10 M | 100 M |
|---|---|---|---|---|---|
| Regular | | | | | |
| Fast | | | | | |

| | 10 k | 100 k | 1 M | 10 M | 100 M |
|---|---|---|---|---|---|
| Local Sequential | | | | | |
| Local Apache Hadoop | | | | | |
| Local Apache Spark | | | | | |
| SAGA-CNES | - | - | | | |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Dafonte, C.; Garabato, D.; Álvarez, M.A.; Manteiga, M. Distributed Fast Self-Organized Maps for Massive Spectrophotometric Data Analysis †. Sensors 2018, 18, 1419. https://doi.org/10.3390/s18051419