Abstract

Looking at another person’s facial expression of emotion can trigger the same neural processes involved in producing the expression, and such responses play a functional role in emotion recognition. Disrupting individuals’ facial action, for example, interferes with verbal emotion recognition tasks. We tested the hypothesis that facial responses also play a functional role in the perceptual processing of emotional expressions. We altered the facial action of participants with a gel facemask while they performed a task that involved distinguishing target expressions from highly similar distractors. Relative to control participants, participants in the facemask condition demonstrated inferior perceptual discrimination of facial expressions, but not of nonface stimuli. The findings suggest that somatosensory/motor processes involving the face contribute to the visual perceptual—and not just conceptual—processing of facial expressions. More broadly, our study contributes to growing evidence for the fundamentally interactive nature of the perceptual inputs from different sensory modalities.

Recognizing facial expressions of emotion accurately and efficiently is arguably one of the most important perceptual tasks we encounter as social creatures. The difference between an eyebrow raise of derision and one of sadness is perceptually subtle, but conceptually and behaviorally consequential. Growing evidence suggests that the sensorimotor activity in facial muscles that occurs automatically during perception of a facial expression may contribute to the efficient and accurate processing of the visual input (Ponari, Conson, D’Amico, Grossi, & Trojano, 2012; Rychlowska et al., 2014).

Indeed, people automatically and regularly mimic facial expressions (Carr, Iacoboni, Dubeau, Mazziotta, & Lenzi, 2003). This facial mimicry can be visible or nonvisible to onlookers, occurs even in response to expressions of which the perceiver is unaware (Dimberg, Thunberg, & Elmehed, 2000), and may reflect activation of the same neural processes involved in producing a facial expression (Niedenthal, Mermillod, Maringer, & Hess, 2010).

How and to what extent such facial mimicry contributes to expression perception is unclear. At the very least, facial sensorimotor information seems to influence verbal conceptual judgments about facial expressions (e.g., when applying emotion labels to faces; Ponari et al., 2012). The present research was designed to examine the possibility that such sensorimotor resonance also modulates more basic visual perceptual processes.

Facial mimicry and emotion recognition

Evidence that facial mimicry facilitates the processing of facial expressions has come largely from studies in which perceivers’ spontaneous facial movements have been disrupted by either mechanical or chemical manipulation. Such disruption appears to compromise both the accuracy and the speed with which the perceiver identifies the emotion expressed by the stimulus face, especially for subtle or ambiguous expressions (Rychlowska et al., 2014).

To date, the tasks used to document effects of facial mimicry on expression processing have involved the generation of emotion word labels for the face percepts (Neal & Chartrand, 2011), evaluation of the emotional meanings of expressions (Rychlowska et al., 2014; Stel & van Knippenberg, 2008), or explicit identification of the onset or offset of an emotional expression (Niedenthal, Brauer, Halberstadt, & Innes-Ker, 2001). However, by measuring “emotion recognition” with verbal judgment tasks, existing research is unable to separate the potential roles of the sensorimotor processes for visual processing and for higher-level linguistic/conceptual processing of emotion expressions.

A possible interpretation of these previous findings is that automatic facial mimicry activates high-level emotion concepts, which acts to improve performance on labeling tasks. According to this account, sensorimotor activity may strengthen the higher-level activation of the emotion concept, but it does not affect perceptual processing or perceptual memory for facial expressions.

We propose an alternative account in which automatic sensorimotor activation can directly modulate visual accuracy for facial expressions without requiring the prior activation of higher-order conceptual knowledge. Here, visual accuracy refers to the ability to perceive and remember the facial expression. In the present study, we therefore investigated whether facial feedback can affect performance on a simple visual-matching task.

The present research

To test whether facial feedback affects performance on a visual-matching task, we assigned participants either to a condition in which their facial action was disrupted by the application of a gel that dried and became a constricting facemask, or to a control condition. They then completed a visual discrimination task with images of facial expressions that included within-emotion category judgments (e.g., two versions of a sadness expression), which were not aided by labeling of the emotion expression (see the Discussion section). Performance in the discrimination task therefore required perceptual rather than conceptual judgments. Participants also completed a separate verbal identification task that required them to label the visual stimuli.

To ensure that the gel facemask affected task performance by altering facial feedback, rather than by disrupting other perceptual or cognitive processes (e.g., via distraction or the prevention of brow furrowing, which might impair concentration; Waterink & van Boxtel, 1994), participants also completed a visual-matching task involving nonface images that were not expected to elicit facial mimicry. We predicted that the gel facemask would affect accuracy on the visual-matching task specifically for facial expression stimuli, but not for the control images.

Method

In the following section, we report how we determined our sample size, all data exclusions, all manipulations, and all measures. The experiment and stimuli files are available online (https://osf.io/d364f/?view_only=c51c07e3ebdb45e884a2d0b700283a09).

Participants and procedure

On the basis of previous findings that interfering with facial feedback has a greater effect on the emotion processing for females than for males (e.g., Stel & van Knippenberg, 2008), we maximized power by recruiting exclusively females in this experiment. The participants were female college students from an introductory psychology subject pool working for class credit who had lived in the United States for at least 10 years. Although we did not have a priori effect size estimates, we aimed to collect data from 120 participants, ultimately recruiting a total of 124. One recruited candidate elected not to participate, and another participant’s accuracy on the perceptual discrimination task was so far below chance (39.44 %) that we concluded she had misunderstood the task; after excluding their data, 122 participants remained.

Participants completed the study alone or worked independently in pairs (in private testing booths). At the outset, they were told that the experiment concerned “the role of skin conductance in perception” and that they would be asked to apply a product to their faces that “blocks skin conductance” before they completed a series of computer-based tasks. Participants were then randomly assigned to apply either the gel (n = 64) or, as a control condition, lotion (n = 58) to their faces, and completed a distractor task while the product dried. They then performed the XAB task (so named because on each trial participants see target image X and then indicate whether image A or B is identical to X) and a forced choice identification task. At the end of the experimental session, participants completed several individual differences questionnaires, received full debriefing, and were given credit.

Gel mask manipulation

The participants in both the gel and control conditions were asked to apply the product to their entire faces in a thick layer. The gel condition product was a cosmetic peel-off mask (DaVinci Peel-Off Mask for Men) that dries within minutes of application to become a plastic and somewhat stiff mask. Self-reported feedback from early pilot tests had suggested that the gel exaggerates feedback from slight facial movements, producing feedback “noise” and constricting larger movements. The control condition participants applied a moisturizer (Cetaphil Moisturizing Lotion) that simply absorbed into their skin, to control for possible increases in self-awareness and attention to the face. Participants were then given several distractor maze puzzles to complete for 5 min while the gel/lotion dried.

Stimuli

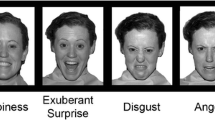

The facial expression stimuli consisted of images of a female model (original images developed by Niedenthal, Halberstadt, Margolin, & Innes-Ker, 2000) expressing various morphed combinations of sadness and anger. Because our analyses required many trials for each stimulus, we used only one actor’s images, acknowledging the limitations of this approach. The nonface control images were selected from a morph of a horse and a cow that had maximally similar postures (see Note 1). Each morph continuum was constructed using morphing software (Morph Age Express 4.1.3) that rendered 11 grayscale images (see the supplemental materials). For instance, the sad–angry continuum began at 100 % sad and 0 % angry and transitioned in 10 % increments to 0 % sad and 100 % angry. All images were resized to subtend a visual angle between 10 and 12 deg. Participants were seated about 60 cm away from the screen. The experiment was created using PsychoPy (version 1.80.06; Peirce, 2009) and was presented on Dell Optiplex 7010 computers with 55-cm ASUSTeK LCD monitors.
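As a rough illustration of the viewing geometry described above, the following Python sketch converts the reported visual angles into on-screen sizes at the reported 60-cm viewing distance and enumerates the 11 morph levels. The function names are hypothetical, and the calculation assumes a flat stimulus centered on the line of sight; it is not taken from the experiment files.

```python
import math


def stimulus_size_cm(visual_angle_deg: float, viewing_distance_cm: float) -> float:
    """Physical extent that subtends `visual_angle_deg` at `viewing_distance_cm`.

    Uses size = 2 * d * tan(angle / 2), assuming the stimulus is flat and
    centered on the observer's line of sight.
    """
    half_angle_rad = math.radians(visual_angle_deg) / 2.0
    return 2.0 * viewing_distance_cm * math.tan(half_angle_rad)


def morph_levels(n_images: int = 11):
    """(percent sad, percent angry) for each image on the sad-angry continuum."""
    step = 100 // (n_images - 1)  # 10 % increments for 11 images
    return [(100 - i * step, i * step) for i in range(n_images)]


VIEWING_DISTANCE_CM = 60.0  # "about 60 cm" in the text
smallest_cm = stimulus_size_cm(10.0, VIEWING_DISTANCE_CM)
largest_cm = stimulus_size_cm(12.0, VIEWING_DISTANCE_CM)
levels = morph_levels()

print(f"10 deg at 60 cm: {smallest_cm:.2f} cm")
print(f"12 deg at 60 cm: {largest_cm:.2f} cm")
print(levels[0], levels[-1])
```

Under these assumptions the images would span roughly 10.5 to 12.6 cm on the screen.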

XAB perceptual discrimination task

The XAB task required participants to discriminate a target from a perceptually similar distractor. To minimize explicit category priming, no labels were provided (e.g., “sad” and “angry”). Participants performed eight practice trials using morphed images of a vase and a bottle, followed by the horse–cow and angry–sad versions of the task, in that order, in separate blocks of trials. Each trial (see Fig. 1) began with a 1,000-ms fixation cross, followed by the target image (X) for 750 ms, followed by a 300-ms noise mask. The target image reappeared alongside a distractor, with left–right locations counterbalanced across trials. The target and distractor remained on the screen for 3,000 ms or until participants had made a response. They pressed a key (“f” = left, “j” = right) to indicate which image matched the target image. Note that the short delay (300 ms) between the target and test images limited the extent to which participants could use explicit mnemonic strategies for remembering the target image.

Fig. 1. Sample trials depicting the perceptual discrimination XAB task (top) and the forced choice identification task (bottom) with angry–sad sample stimuli. In the XAB task, a target image was presented and then reappeared next to a highly similar distractor. Participants then indicated with a keypress (“f” for left, “j” for right) which image matched the first image they had seen. In the identification task, participants saw the same images as before and indicated with a keypress (“f” for left, “j” for right) which of two category labels (representing either end of the morph continua) they thought best described each image.

The target and distractor images were always 20 % apart on the morph continuum, yielding nine image pairs for each continuum. Every pair was presented five times in all order combinations (counterbalancing the target image and side of presentation), for a total of 20 trials/pair, 180 trials/continuum. The trial order was randomized across participants, and trials were separated into five blocks per continuum with self-paced breaks.
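The trial counts above follow from a fully crossed design, which can be sketched in Python as follows. This is an illustrative reconstruction under the stated counts (nine pairs, five repetitions, two target assignments, two screen sides); the field names and the `build_trials` helper are hypothetical and are not drawn from the PsychoPy experiment files.

```python
import itertools
import random

MORPH_STEPS = list(range(0, 101, 10))  # 11 images: 0 %, 10 %, ..., 100 %
PAIR_GAP = 20                          # target and distractor are 20 % apart
REPETITIONS = 5                        # each order combination shown five times


def image_pairs(steps, gap):
    """All (lower, upper) image pairs separated by `gap` on the continuum."""
    return [(lo, lo + gap) for lo in steps if lo + gap in steps]


def build_trials(seed=None):
    """Cross pairs x target identity x target side, repeat, then shuffle."""
    trials = []
    combos = itertools.product(
        image_pairs(MORPH_STEPS, PAIR_GAP),  # 9 pairs
        (True, False),                       # is the lower image the target?
        ("left", "right"),                   # side on which the target reappears
    )
    for (lo, hi), target_is_lo, target_side in combos:
        target, distractor = (lo, hi) if target_is_lo else (hi, lo)
        for _ in range(REPETITIONS):
            trials.append({
                "pair": (lo, hi),
                "target": target,
                "distractor": distractor,
                "target_side": target_side,
            })
    random.Random(seed).shuffle(trials)  # one random order per participant
    return trials


pairs = image_pairs(MORPH_STEPS, PAIR_GAP)
trials = build_trials(seed=1)
trials_per_pair = len([t for t in trials if t["pair"] == (0, 20)])
print(len(pairs), trials_per_pair, len(trials))
```

The three printed numbers correspond to the nine pairs, 20 trials per pair, and 180 trials per continuum reported in the text.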

Forced choice identification task

In the identification task, participants chose one of two labels that best described each image from the horse–cow and angry–sad continua. Participants completed eight practice trials using morphed images of a vase and a bottle, followed by the horse–cow and angry–sad versions of the task (in that order) in separate sets of blocks. Each trial began with a 1,000-ms fixation cross, followed by the target image for 750 ms. The target image disappeared and was replaced by two word labels. Participants pressed a key (“f” = left, “j” = right) to indicate which word (“horse”/“cow,” “angry”/“sad”) best described the image. We presented ten trials for each of the 11 images on a continuum (the order of the word labels was counterbalanced across trials), resulting in 110 trials, separated into five blocks.

Individual differences questionnaires

Following the XAB and identification tasks, participants completed two questionnaires (see the online supplemental materials for descriptions of the scales and the corresponding analyses).

Results

We hypothesized that gel and control participants would differ in their accuracies (proportions of trials on which the correct image was selected) on the XAB perceptual discrimination task for the angry–sad stimuli, but not for the horse–cow stimuli. All analyses were conducted using the R environment (R Development Core Team, 2014; all package versions used were from Dec. 2014 or earlier) and the lme4 package (Bates, Mächler, Bolker, & Walker, 2015). All of the analyses and data files are available online (https://osf.io/452e7/?view_only=bd5d58f5061246f785310365fb721834).

XAB perceptual discrimination

We excluded XAB trials with reaction times shorter than 150 ms (0.1 % of all trials), which did not alter our results. We estimated generalized linear mixed-effect binomial models, since the outcome variable (correct/incorrect response) was dichotomous.

Calculating the slope of the categorical perception effect

Categorical perception is demonstrated when perceptual discrimination is greatest near the subjective boundary between the two categories (e.g., where a person perceives the offset of sadness and the onset of anger; Roberson, Damjanovic, & Pilling, 2007). For the present analyses, we wanted to account for the variance in accuracy due to categorical perception and to test whether disrupting facial feedback affected such perception. Therefore, for each of the participants, we identified the locations on both continua where their accuracy was greatest (if a participant had multiple “peaks” in accuracy, we used the average of those locations as the single peak). We then recentered the continua around each participant’s peak (average locations across participants: angry–sad = 58.09 %, horse–cow = 53.05 %). We then calculated the absolute values of the recentered continuum locations and included these distance from peak scores in the subsequent XAB analyses.
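The recentering procedure can be illustrated with a short Python sketch. The accuracy values below are invented for a single hypothetical participant, and indexing each image pair by its midpoint on the continuum (e.g., 10 for the 0–20 pair) is an assumption made for illustration; the original analysis was carried out in R.

```python
def peak_location(accuracy_by_location):
    """Continuum location with the highest accuracy.

    If several locations tie for the maximum, their average is used as the
    single peak, as described in the text.
    """
    best = max(accuracy_by_location.values())
    peaks = [loc for loc, acc in accuracy_by_location.items() if acc == best]
    return sum(peaks) / len(peaks)


def distance_from_peak(location, peak, step=10.0):
    """Absolute distance from the peak, in units of 10 % morph steps."""
    return abs(location - peak) / step


# Hypothetical accuracies for one participant; keys are pair midpoints (%).
accuracy = {10: 0.60, 20: 0.65, 30: 0.70, 40: 0.85, 50: 0.80,
            60: 0.85, 70: 0.70, 80: 0.65, 90: 0.60}

peak = peak_location(accuracy)  # two tied peaks (40 and 60) are averaged
distances = {loc: distance_from_peak(loc, peak) for loc in accuracy}
print(peak, distances)
```

In this made-up example the tied peaks at 40 and 60 average to 50, so the 0–20 pair (midpoint 10) lies four steps from the peak.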

Interaction between condition and XAB continuum type

We predicted an interaction between condition (gel = –.5, control = .5) and XAB continuum type (horse–cow = –.5, angry–sad = .5). To control for potential practice effects and the categorical perception effect, we included trial number (centered) and distance from peak (every unit increase represented a 10 % step from the peak), as well as all possible interactions, in this first model (see Note 2). We included continuum type, trial number, and distance from peak as participant-level random effects.

The participants in the control condition (M = .74, SD = .44) were more accurate than the gel participants (M = .71, SD = .45), b = 0.235, SE = 0.090, 95 % CI [0.06, 0.41], z = 2.60, p < .01. However, the effect was qualified by a marginal Continuum × Condition interaction, controlling for all other variables and interactions, b = 0.256, SE = 0.132, 95 % CI [0.00, 0.52], z = 1.94, p = .053, suggesting that the difference in accuracy across conditions tended to be greater on the angry–sad than on the horse–cow trials (Fig. 2). We next analyzed the data from the angry–sad and horse–cow trials separately to determine the simple effects of condition on accuracy.

Fig. 2. Average accuracies of participants in the control and gel facemask conditions on the angry–sad (top) and horse–cow (bottom) XAB tasks. The x-axis indicates the location of a trial’s image pair on the morphed continua, with values representing the percentage presence of sadness (top graph) or of cow (bottom graph). For instance, “10–30” on the top graph refers to a trial involving the 10 % sadness image and the 30 % sadness image. Participants in the gel condition performed significantly worse than control participants on the angry–sad trials, but not on the horse–cow trials. Error bars represent standard errors of the means.

Angry–sad XAB accuracy

We regressed participants’ responses for angry–sad trials on the same set of regressors as in the first model (except for continuum and its interaction terms). As expected, the effect of condition was significant when we controlled for all other variables, such that the participants in the control condition (M = .78, SD = .41) had 1.42 times the odds of making a correct response relative to participants wearing the gel facemask (M = .74, SD = .44), b = 0.354, SE = 0.125, 95 % CI [0.11, 0.60], z = 2.84, p = .005. We observed a significant effect of distance from peak, controlling for all other variables, such that accuracy was worse the farther an XAB image pair was from the peak, b = –0.173, SE = 0.011, 95 % CI [–0.19, –0.15], z = –15.86, p < .001. The Condition × Distance From Peak interaction approached significance, with control participants demonstrating a slightly steeper decline in accuracy as image pairs moved farther from the accuracy peaks, b = –0.037, SE = 0.021, 95 % CI [–0.08, 0.004], z = –1.75, p = .080.
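The reported factor of 1.42 can be recovered from the logit coefficient, because exponentiating a logistic regression coefficient yields an odds ratio. The Python sketch below reproduces that value, together with a Wald-type interval and a two-sided p value, from the rounded estimates given in the text. It assumes a normal approximation, and small discrepancies from the published z value are expected because the inputs here are rounded.

```python
import math

Z_CRIT_95 = 1.959964  # two-sided 95 % critical value of the standard normal


def odds_ratio(b):
    """Odds ratio implied by a logit coefficient for a one-unit contrast."""
    return math.exp(b)


def wald_ci(b, se, z_crit=Z_CRIT_95):
    """Wald confidence interval on the logit scale."""
    return (b - z_crit * se, b + z_crit * se)


def two_sided_p(z):
    """Two-sided p value under a standard normal reference distribution."""
    return math.erfc(abs(z) / math.sqrt(2.0))


b, se = 0.354, 0.125  # condition effect on angry-sad accuracy, as reported
ratio = odds_ratio(b)
ci_low, ci_high = wald_ci(b, se)
p_value = two_sided_p(2.84)  # reported z statistic

print(f"odds ratio = {ratio:.2f}")
print(f"95% CI = [{ci_low:.2f}, {ci_high:.2f}]")
print(f"p = {p_value:.3f}")
```

Because condition was coded –.5 versus .5, the two conditions differ by exactly one unit, so exp(b) is the control-versus-gel odds ratio.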

Horse–cow XAB accuracy

We then performed the same analysis on the horse–cow data subset. Importantly, here we found no effect of condition, with performance in the gel (M = .68, SD = .47) and control (M = .69, SD = .46) conditions not being significantly different, z = 1.10, p > .250. An effect of distance from peak also emerged, controlling for all other variables, b = –0.145, SE = 0.010, 95 % CI [–0.17, –0.13], z = –14.30, p < .001. This was not qualified by a Distance From Peak × Condition interaction, z = –0.53, p > .250.

Angry–sad XAB reaction time

We next examined reaction times to ensure that the effect of condition on angry–sad accuracy was not simply due to a speed–accuracy trade-off in the gel condition. Using linear mixed-effect modeling, we regressed the reaction times for angry–sad trials on distance from peak, trial number, condition, and all possible interaction terms, with random slopes for distance from peak and trial number. The effect of condition on reaction times was not significant, suggesting that the gel condition participants’ reduced accuracy was not merely due to increased speed in the task: b = 0.019, SE = 0.036, t(118.85) = 0.54, p > .250.

To summarize, participants wearing the gel facemask performed significantly worse than control participants on the XAB task that involved facial expressions. The gel facemask had no effect on people’s performance on the horse–cow XAB task.

Forced choice identification task

For the identification task, we calculated the proportion of trials on which a participant selected one label (“angry” or “horse”) over the other (“sad” or “cow”) for each of the 11 target images on a continuum. To test for an effect of condition on the slope of the shift from one label to another (as an indicator of how “categorical” participants’ responses were), we fit sigmoid function curves to each participant’s identification responses and determined whether the shapes of those curves differed significantly for the gel and control participants. We used nonlinear regression with brute force (Grothendieck, 2013) to estimate for each participant two sigmoid function coefficients, θ₁ and θ₂, in the model y ~ .03 + (.97 – .03)/{1 + exp[(x – θ₁)/θ₂]} (see Note 3). We then regressed these coefficients on condition to test whether the shapes of the curves for gel and control participants differed.
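To make the curve-fitting step concrete, the Python sketch below evaluates the sigmoid with the .03 and .97 bounds and recovers its two coefficients by an exhaustive grid search over candidate values. The grid search is a simple stand-in for the brute-force nonlinear regression cited above, not a reimplementation of the nls2 package; the data are synthetic, and the "true" parameter values and grid ranges are hypothetical choices made only for illustration.

```python
import math

LOWER, UPPER = 0.03, 0.97  # bounds of the sigmoid, as given in the text


def sigmoid(x, theta1, theta2):
    """Proportion of first-label responses at continuum location x (0 to 1).

    theta1 is the location of the category boundary; theta2 controls how
    gradual the transition is (smaller values give a steeper curve).
    """
    return LOWER + (UPPER - LOWER) / (1.0 + math.exp((x - theta1) / theta2))


def brute_force_fit(xs, ys, theta1_grid, theta2_grid):
    """Return the (theta1, theta2) grid point with the smallest squared error."""
    best = None
    for t1 in theta1_grid:
        for t2 in theta2_grid:
            sse = sum((y - sigmoid(x, t1, t2)) ** 2 for x, y in zip(xs, ys))
            if best is None or sse < best[0]:
                best = (sse, t1, t2)
    return best[1], best[2]


# Synthetic, noise-free responses for one hypothetical participant.
xs = [i / 10 for i in range(11)]         # 11 images, 0 % to 100 %
true_theta1, true_theta2 = 0.45, 0.07    # hypothetical generating values
ys = [sigmoid(x, true_theta1, true_theta2) for x in xs]

theta1_grid = [i / 100 for i in range(20, 81)]  # candidate boundaries
theta2_grid = [i / 100 for i in range(1, 21)]   # candidate slopes
theta1_hat, theta2_hat = brute_force_fit(xs, ys, theta1_grid, theta2_grid)
print(theta1_hat, theta2_hat)
```

With real, noisy responses the recovered values would depend on the grid resolution, so a finer grid or a subsequent local optimizer would typically follow.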

Fitting sigmoid curves to the angry–sad responses

The estimates for θ₁ did not differ between control (θ₁ = .445) and gel (θ₁ = .452) participants, t < 1, p > .250. The two groups also did not differ in their θ₂ estimates (control θ₂ = .074, gel θ₂ = .068), t < 1, p > .250. The shapes of the identification response curves did not change across conditions, suggesting that participants in both conditions applied the labels “angry” and “sad” similarly (see Fig. 3).

Fig. 3. Estimated sigmoid functions and average responses (with error bars representing the standard errors of the means) of participants in the control and gel facemask conditions on the angry–sad (top) and horse–cow (bottom) identification tasks. The x-axis indicates the location of a trial’s image on the morphed continua, with values representing the percentage presence of sadness (top graph) or of cow (bottom graph). The y-axis represents the proportion of responses in which participants selected one label (“angry” or “horse”) versus the alternative (“sad” or “cow”). The estimates for the sigmoid functions did not vary as a function of condition, suggesting that the facemask did not change how “categorically” participants explicitly labeled the stimuli (a steeper curve suggests more categorical responses, but the curves did not vary across conditions).

Fitting sigmoid curves to the horse–cow responses

The estimated θ₁ values for control (θ₁ = .542) and gel (θ₁ = .552) participants did not differ significantly, t < –1, p > .250, nor did the estimates for θ₂ (control θ₂ = .052, gel θ₂ = .047), t < 1, p > .250. Thus, condition did not modulate the rate at which participants transitioned from using “horse” to using “cow” to label the images.

Discussion

The present work is the first to demonstrate that altering facial sensorimotor processes specifically impacts the visual processing of facial expressions, reducing people’s ability to discriminate facial expressions but not control stimuli. We acknowledge that the XAB task does not clearly distinguish between the effects on facial expression perception, per se (e.g., the clarity of the initial input), and any effects on perceptual memory (the fidelity of the percept maintained over the course of a trial; see Pasternak & Greenlee, 2005), although virtually all perceptual tasks rely to some degree on memory. Perhaps the gel facemask generates noise in somatosensory/motor regions of the brain, interfering with the perceptual memory stored in those modalities. Whatever the exact mechanism, it appears that facial somatosensory/motor activity can play a role in how faces are processed in the service of a visual matching task.

Although it appears that relevant categories are activated during tasks like ours (Roberson, Damjanovic, & Pilling, 2007), we argue that the effect of the gel facemask on performance in the present work was not verbally mediated. First, the gel facemask, which is thought to disrupt facial sensorimotor processes, had no effect on responses on the emotion-labeling task. Second, disrupting facial feedback reduced accuracy across the entire angry–sad morph continuum, regardless of whether the target and distractor expressions were members of the same conceptual category (“sad”–“sad”) or different categories (“angry”–“sad”). Previous work (Roberson, Damjanovic, & Pilling, 2007) has suggested that the strategy of mentally categorizing or labeling the target and distractor images is only useful when the expressions fall into separate subjective categories, so a labeling strategy cannot account for the within-category deficit. Since only female participants and one female model were included in the present study, it will be crucial to conduct future studies with male participants and models to unpack gender differences in mimicry and emotion recognition (e.g., Stel & van Knippenberg, 2008).

An unanswered question is how the gel facemask alters sensorimotor processes during emotion perception. One possibility is that the gel constricts and reduces movements of the facial muscles, not unlike Botox, which has been shown to disrupt emotion recognition (Neal & Chartrand, 2011, Study 1). However, one study (Neal & Chartrand, 2011, Study 2) revealed that the gel facemask improved performance on a high-level theory of mind task called the Reading the Mind in the Eyes Test (RMET). The RMET is a forced choice labeling task that involves making complex and subtle mental state attributions (e.g., “desire,” “arrogant,” and “comforting”) that may often rely more on the direction of the expressers’ eye gaze than on actual facial muscle movement (for a discussion of the task, see Dal Monte et al., 2014). The authors proposed that the gel “amplifies” the somatosensory feedback generated with each movement, essentially increasing the emotion recognition benefits of facial mimicry. We argue that our findings can be reconciled with those of Neal and Chartrand. Perhaps accuracy in the RMET task relies less on perceptual clarity and precision (which are required for our XAB task) and more on generalizing subtle expressions of the eyes and brows to the rest of the face. If the gel exaggerates the somatosensory feedback from the facial skin and muscles, then it could facilitate performance on the RMET by providing the perceiver with a stronger, if distorted, signal from the face. On a lower-level perceptual task like the XAB task, in which differences between the stimuli are minimal and an exaggerated signal is actually incorrect, the gel mask would reduce accuracy. In this case, the “amplified” feedback from the face due to the gel mask adds noise that could interfere with working memory or the perception of the target expression that sensorimotor processes might be facilitating. 
The sensorimotor system functions optimally when somatosensory feedback converges with predictions about the expected effects of motor output (Wolpert & Flanagan, 2001), and the gel mask may cause a mismatch between sensorimotor predictions and feedback. These are only conjectures, and further research into whether the amplification of facial mimicry is ever perceptually advantageous is warranted.

The present work contributes more broadly to growing evidence that input from one sensory modality, in this case sensorimotor, can alter percepts in another modality, such as vision (see also Hu & Knill, 2010; Salomon, Lim, Herbelin, Hesselmann, & Blanke, 2013). The present and other cross-modal interactions suggest that inputs from separate modalities are combined to produce estimates of an external property of the stimulus (Driver & Noesselt, 2008). By involving multiple sensory modalities, complex percepts such as facial expressions can be processed more accurately.

In sum, recent findings suggest that the perception of another person’s facial expression of emotion triggers the same processes involved in producing the expression, and such responses play a functional role in emotion recognition. Our findings provide evidence in support of the hypothesis that facial responses also play a functional role in the perceptual processing of emotional expressions.

Notes

1. Ideal comparison stimuli would involve morphed images of faces that could not be mimicked. However, an early pilot test with a male–female morph continuum suggested that even nonemotional face stimuli can indeed be mimicked, as long as the facial morphology is being changed (e.g., the brow lowers as a face becomes more prototypically masculine).

2. To save space, additional coefficient estimates for all models are available online.

3. The constants of .03 and .97 were selected as appropriate lower and upper bounds for the sigmoid curve because those were the mean response rates at the extreme ends of the morph continua.

References

Bates, D., Mächler, M., Bolker, B., & Walker, S. (2015). Fitting linear mixed-effects models using lme4. Journal of Statistical Software, 67(1), 1–48. doi:10.18637/jss.v067.i01

Carr, L., Iacoboni, M., Dubeau, M. C., Mazziotta, J. C., & Lenzi, G. L. (2003). Neural mechanisms of empathy in humans: A relay from neural systems for imitation to limbic areas. Proceedings of the National Academy of Sciences, 100, 5497–5502. doi:10.1073/pnas.0935845100

Dal Monte, O., Schintu, S., Pardini, M., Berti, A., Wassermann, E. M., Grafman, J., & Krueger, F. (2014). The left inferior frontal gyrus is crucial for reading the mind in the eyes: Brain lesion evidence. Cortex, 58, 9–17. doi:10.1016/j.cortex.2014.05.002

R Development Core Team. (2014). R: A language and environment for statistical computing. Vienna: R Foundation for Statistical Computing. Retrieved from www.R-project.org

Dimberg, U., Thunberg, M., & Elmehed, K. (2000). Unconscious facial reactions to emotional facial expressions. Psychological Science, 11, 86–89. doi:10.1111/1467-9280.00221

Driver, J., & Noesselt, T. (2008). Multisensory interplay reveals crossmodal influences on “sensory-specific” brain regions, neural responses, and judgments. Neuron, 57, 11–23. doi:10.1016/j.neuron.2007.12.013

Grothendieck, G. (2013). nls2: Non-linear regression with brute force [Software]. Retrieved from https://cran.r-project.org/web/packages/nls2/index.html

Hu, B., & Knill, D. C. (2010). Kinesthetic information disambiguates visual motion signals. Current Biology, 20, R436–R437. doi:10.1016/j.cub.2010.03.053

Neal, D. T., & Chartrand, T. L. (2011). Embodied emotion perception: Amplifying and dampening facial feedback modulates emotion perception accuracy. Social Psychological and Personality Science, 2, 673–678. doi:10.1177/1948550611406138

Niedenthal, P. M., Brauer, M., Halberstadt, J. B., & Innes-Ker, Å. H. (2001). When did her smile drop? Facial mimicry and the influences of emotional state on the detection of change in emotional expression. Cognition and Emotion, 15, 853–864. doi:10.1080/02699930143000194

Niedenthal, P. M., Halberstadt, J. B., Margolin, J., & Innes-Ker, Å. H. (2000). Emotional state and the detection of change in facial expression of emotion. European Journal of Social Psychology, 30, 211–222. doi:10.1002/(SICI)1099-0992(200003/04)30:2<211::AID-EJSP988>3.0.CO;2-3

Niedenthal, P. M., Mermillod, M., Maringer, M., & Hess, U. (2010). The Simulation of Smiles (SIMS) model: Embodied simulation and the meaning of facial expression. Behavioral and Brain Sciences, 33, 417–433. doi:10.1017/s0140525x10000865

Pasternak, T., & Greenlee, M. W. (2005). Working memory in primate sensory systems. Nature Reviews Neuroscience, 6, 97–107. doi:10.1038/nrn1637

Peirce, J. W. (2009). Generating stimuli for neuroscience using PsychoPy. Frontiers in Neuroinformatics, 2, 10. doi:10.3389/neuro.11.010.2008

Ponari, M., Conson, M., D’Amico, N. P., Grossi, D., & Trojano, L. (2012). Mapping correspondence between facial mimicry and emotion recognition in healthy subjects. Emotion, 12, 1398. doi:10.1037/a0028588

Roberson, D., Damjanovic, L., & Pilling, M. (2007). Categorical perception of facial expressions: Evidence for a “category adjustment” model. Memory & Cognition, 35, 1814–1829. doi:10.3758/BF03193512

Rychlowska, M., Cañadas, E., Wood, A., Krumhuber, E. G., Fischer, A., & Niedenthal, P. M. (2014). Blocking mimicry makes true and false smiles look the same. PLoS ONE, 9, e90876. doi:10.1371/journal.pone.0090876

Salomon, R., Lim, M., Herbelin, B., Hesselmann, G., & Blanke, O. (2013). Posing for awareness: Proprioception modulates access to visual consciousness in a continuous flash suppression task. Journal of Vision, 13(7), 2. doi:10.1167/13.7.2

Stel, M., & van Knippenberg, A. (2008). The role of facial mimicry in the recognition of affect. Psychological Science, 19, 984–985. doi:10.1111/j.1467-9280.2008.02188.x

Waterink, W., & van Boxtel, A. (1994). Facial and jaw-elevator EMG activity in relation to changes in performance level during a sustained information processing task. Biological Psychology, 37, 183–198. doi:10.1016/0301-0511(94)90001-9

Wolpert, D. M., & Flanagan, J. R. (2001). Motor prediction. Current Biology, 11, R729–R732. doi:10.1016/s0960-9822(01)00432-8

Author note

A.W. was supported by National Science Foundation (NSF) Grant GRFP 2012148522, G.L. by NSF Grant BCS-1331293, S.S. by NSF Grant IGERT DGE-0903495, and P.N. by NSF Grant BCS-1251101. We thank Eliot Smith, Magdalena Rychlowska, William Cox, Jared Martin, Lyn Abramson, and Piotr Winkielman for their invaluable feedback. Many thanks to Crystal Hanson, Anand Raman, Emma Phillips, Katelyn Glenn, Claire Skille, Clara Henkes, Ian Kuhn, and the rest of the Niedenthal Emotions Lab for their help in conducting this research.

Electronic supplementary material

Below is the link to the electronic supplementary material.

ESM 1

(PDF 73 kb)

Cite this article

Wood, A., Lupyan, G., Sherrin, S. et al. Altering sensorimotor feedback disrupts visual discrimination of facial expressions. Psychon Bull Rev 23, 1150–1156 (2016). https://doi.org/10.3758/s13423-015-0974-5

DOI: https://doi.org/10.3758/s13423-015-0974-5