Abstract

River basin management, or Integrated Water Resources Management (IWRM), is subject to the European Directive 2000/60/EC, related Directives and amendments (2008/105/EC). These Directives define management “objectives” such as: preventing and reducing pollution; promoting sustainable water usage; environmental protection; improving aquatic ecosystems; and mitigating the effects of floods and droughts. The dominant objective is to achieve “good ecological and chemical status” for all Community waters by 2015. These are all noble and laudable goals, hard to disagree with, but they do not necessarily cover everything that “stakeholders” in water resources management (which is more or less everybody) really want. I claim that what we really want is to maximize net benefit from the operation and use of any water resources system, that is, to get as close to some “Utopia” as possible. Net benefit here is not meant in any narrow economic, monetary sense, but as a measure of all the goods and benefits, countable and monetary but also qualitative, perceived, spiritual: whatever is “worth” anything to us. Utopia is the (theoretical) state of a system that combines all the “best values” achievable for all criteria considered. Implementation of this view revolves around the simple concept of mass conservation: dynamic water budget modelling that tells us how much water is or will be available where and when. The model describes the dynamic balance of demand and supply, reliability of supply, various efficiencies etc., together with water quality and whatever “valued” attribute of the system the model can describe. This yields the metric for the degree to which various objectives are met. A multi-attribute approach measures how close we can get to Utopia in a “normalized” achievement space of any number of criteria. The optimization tries to find the set of policies, measures, and technologies, with their costs and efficiencies, that will get us as close to Utopia as possible.
This involves trade-offs between objectives (and stakeholders), give and take, that should lead to cooperative games that should in turn (given reasonable stakeholders) result in win-win solutions. This assumes that “to win” in some tangible sense, to be better off than “before”, is what we really want, and that we can learn and agree on what “better” means.

1 Introduction

We start with naïve expectations: we manage river basins so that we have enough water of “sufficient quality” when we need it, where we need it, for a range of “useful” purposes. These range, inter alia, from drinking and domestic requirements to agricultural and industrial use, to generating electricity where feasible, and to environmental uses: keeping enough water in the system to maintain environmental quality or enable “environmental services”, or keeping the water “enjoyable” for recreational use (and the eventual fish or bird). And we want to protect ourselves and our property from floods.

In the European Union, the so-called Water Framework Directive 2000/60/EC, amended by 2008/105/EC, “establishes a framework for Community action in the field of water policy”. Its primary objective is pollution control, with the ultimate target to achieve “good ecological and chemical status” for all Community waters by 2015. Availability, issues of scarcity and pricing, public participation, and River Basin Management plans as a unifying framework are also referred to, though somewhat less prominently than the central issue of “ecological status”.

A somewhat different orientation underlies the so-called Dublin Statement (ICWE 1992), where water is primarily seen as an economic good; this should lead to its protection as a vulnerable and often scarce resource, and to its efficient use. Central topics in the four statements also include public participation in planning and management, and the role of women.

The basic instruments we have available are “water rights” that define who can use water (where, when, how much); regulations that define who can pollute water (and for how much), or that limit the purposes water can be used for at times of scarcity; the storage of water in reservoirs to buffer its time-variable availability; and treatment in treatment plants to maintain its usefulness (and re-use) for different purposes. Metering and pricing are used to influence consumption by rational consumers, and are supposed to recover the “true or full cost” of water (supply). Treating water as an economic good (the Dublin principles, ICWE 1992) versus a “human right”, and questions of public ownership versus privatization of water infrastructure and services, are an open field of ultimately political discussion (e.g., Savenije and van der Zaag 2002).

To meet the ever-growing expectations, we have to plan and manage river basins, or more generally, water resources systems, for more “efficiency”: doing “more” useful things with the same (if not shrinking) amount of water. This means deciding between alternative courses of action, and designing these alternatives in the first place, basically as combinations of regulations and infrastructure investments.

1.1 The Decisionmaking Process

Decision making processes can involve a single decision maker or, better, a group (both the WFD and the Dublin Statement put emphasis on public participation).

What this needs is an open framework for the preference structure, where we agree on what we want (the objectives), and how we can measure whether we have achieved it (the criteria). Simple and directly obvious criteria would be the availability of water (flow, level, supply) or quality parameters. Cost and benefit functions are defined that help to translate more complex, non-linear issues into the (pseudo-simplicity of) unifying monetary terms. These functions may be simple, outright naïve, and subjective, as long as the decision makers can agree: they are part of the language for communication (the DSS ontology) rather than applied economics. Then, we try to determine the consequences of different strategies: we use “a model” and run it many times, in fact many thousands of times, for a water year at a time. The computer varies the decision variables: the water technologies or policies applied, allocating and scaling them to the individual nodes where they affect the system behaviour and performance. We may also try some sensitivity analysis, e.g., vary the meteorology, precipitation and temperature that drive evaporative losses. Climate Change impacts, robustness and resilience, and of course sustainability are key concepts here (Fedra 2004).

This allocation of technologies can be done with simple naïve Monte Carlo initially. Analysing first results will help to fine-tune the technology selections and the range of application scales by eliminating choices and combinations that tend to violate the constraints. Once a promising core of alternatives has been found, genetic algorithms, neighbourhood search, machine learning, SNN or any combination of methods can be used to increase the efficiency of generating feasible alternatives (Michalewicz and Fogel 2000).
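The initial Monte Carlo step can be sketched as follows. This is a minimal illustration under stated assumptions, not the sampler used in the project: the technology catalogue, the node names, the scale ranges, and the probability `p_apply` are all hypothetical placeholders.

```python
import random

# Hypothetical catalogue: technology -> (min, max) application scale,
# read here as the fraction of a node's demand the technology affects.
TECHNOLOGIES = {
    "drip_irrigation": (0.1, 1.0),
    "leak_repair": (0.05, 0.5),
    "wastewater_reuse": (0.0, 0.3),
}
NODES = ["city", "farm_north", "farm_south"]  # hypothetical demand nodes


def sample_alternative(rng, p_apply=0.5):
    """Draw one random alternative: each node may receive one technology at a random scale."""
    alternative = {}
    for node in NODES:
        if rng.random() < p_apply:
            tech = rng.choice(sorted(TECHNOLOGIES))
            low, high = TECHNOLOGIES[tech]
            alternative[node] = (tech, rng.uniform(low, high))
    return alternative


def generate(n, seed=0):
    """Generate n alternatives reproducibly from a fixed seed."""
    rng = random.Random(seed)
    return [sample_alternative(rng) for _ in range(n)]


alternatives = generate(1000)
```

Each sampled alternative would then be passed to the water budget model for one water year; narrowing the scale ranges or dropping technologies after a first analysis corresponds to the fine-tuning described above.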

If no feasible alternatives that meet all constraints are found, constraints need to be relaxed. We know which constraints have been violated most often, so we know where to start. Eventually, we end up with a set of feasible solutions. From these the dominated solutions can be eliminated: an alternative is dominated if some other alternative is at least as good in all criteria and strictly better in at least one. The remaining subset is Pareto optimal, i.e., we can always find a good (and perfectly rational) reason for selecting any one of them.
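The elimination of dominated solutions can be sketched in a few lines. The example assumes that every criterion has already been expressed so that larger values are better; the sample points are invented for illustration.

```python
def dominates(a, b):
    """True if a is at least as good as b in every criterion and strictly better in one.

    Assumes all criteria are to be maximized.
    """
    return all(x >= y for x, y in zip(a, b)) and any(x > y for x, y in zip(a, b))


def pareto_front(points):
    """Keep only the points that no other point dominates."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


# Invented feasible alternatives described by two criteria.
points = [(0.9, 0.2), (0.5, 0.5), (0.4, 0.4), (0.2, 0.9), (0.5, 0.3)]
front = pareto_front(points)
```

Here `(0.4, 0.4)` and `(0.5, 0.3)` are both dominated by `(0.5, 0.5)` and drop out. The pairwise comparison is quadratic in the number of alternatives, which is acceptable for a sketch but may need a faster scheme for very large sets.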

The analysis of the results generated supports relaxing or tightening of constraints: if everything is feasible, we can probably do better! The analysis will show which of the criteria and constraints are violated most often (or never): primary candidates to be ignored, tightened or relaxed. We can also learn about coincidences, i.e., how much a given structure or activity (a decision variable) contributes to specific criteria and overall system performance, and thus learn to concentrate the search on the more promising regions emerging in the decision space.
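Counting how often each constraint is violated is straightforward. The sketch below assumes each model run is summarized as a dictionary of criteria values; the criterion names and bounds are hypothetical.

```python
from collections import Counter

# Hypothetical constraints: criterion -> (lower bound, upper bound); None means unbounded.
CONSTRAINTS = {
    "supply_reliability": (0.90, None),
    "min_flow_m3s": (2.0, None),
    "annual_cost_meur": (None, 50.0),
}


def violated(result, constraints):
    """Return the names of all constraints that one model result violates."""
    names = []
    for name, (low, high) in constraints.items():
        value = result[name]
        if (low is not None and value < low) or (high is not None and value > high):
            names.append(name)
    return names


def violation_counts(results, constraints):
    """Count violations per constraint; never-violated constraints appear with zero."""
    counts = Counter({name: 0 for name in constraints})
    for result in results:
        counts.update(violated(result, constraints))
    return counts


# Invented results of three model runs.
results = [
    {"supply_reliability": 0.95, "min_flow_m3s": 1.5, "annual_cost_meur": 40.0},
    {"supply_reliability": 0.85, "min_flow_m3s": 1.0, "annual_cost_meur": 60.0},
    {"supply_reliability": 0.92, "min_flow_m3s": 2.5, "annual_cost_meur": 45.0},
]
counts = violation_counts(results, CONSTRAINTS)
```

In this invented sample the minimum flow constraint is violated most often, so it would be the first candidate for relaxation.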

The second major concept in the decision making process is trade-off, or compromise. We cannot have it all. As soon as we deal (realistically) with more than a single criterion, we have to weigh criteria against each other and trade off one against the other, which is an everyday, and more often than not subconscious, process in any decision making.

How to compare and trade off criteria against each other is one of the continuing challenges, if not near-religious aspects, of multi-attribute decision theory. Since one of the aims beyond the support for interactive group decision making is to use the methods for more or less unsupervised operational management (Rubicon and ESS 2010), we use a simple approach that is easy to computerize. This is based on a “normalization” of the criteria between Nadir (theoretical worst) and Utopia (theoretical best) in terms of “degree of achievement”. To nevertheless express specific preferences (often formulated as “weight factors”, derived, e.g., from pairwise comparisons and a partial ranking or subjective weight assignment; e.g., ELECTRE, Roy 1968; Saaty 1995), users can move the default target solution (Utopia) towards some set of values that they believe represent a more desirable or realistic, achievable target (the reference point), which implies “weights” (explicit relative importance) for individual criteria.

2 A Formal Approach

To express what we want in some formal way (always a good idea for communication to use well defined, measurable concepts) we select and define a set of criteria. These are measurable (in the widest sense including psychometric tools to determine individual, subjective assessment) attributes of the system, and relevant for the objectives defined.

Examples could be various water quality parameters and indicators, but also the overall demand/supply balance in a basin, the reliability of supply, the overall content change (a good measure of sustainability), minima and maxima of flow for specific reaches, the minimum and maximum storage level of reservoirs, the price of water, annual investment and operating costs of water infrastructure, and the net economic benefit generated from the use of water; and all this not only for the water year and the entire basin, but for any temporal, spatial, or sectoral subset, down to the individual water user and limited, critical periods.

For each of the criteria, we need to define a method of measurement, units, and the related objectives (minimize, maximize, or minimize the deviation from a given (regulatory?) reference value). While this sounds very rational and fair, keep in mind that the selection of the criteria is already an important, value-laden part of the planning and decision making process. Should we include in-stream benefits, environmental water demands and allocations? Hydropower? Cost of supply, the price of water? The beauty of a lakeshore? Happy fishermen? Happy fish?

Measurement of criteria means two things: first, they have to represent some meaningful property of the system that we could obtain, in principle, in the field; second, in the context of model based analysis, it must be possible to derive them from our water budget modelling. And, in theory, they should be independent. Since this is indeed rather theoretical in a complex system where most everything affects everything else (the extra water that makes the fish and fishermen happy upstream also contributes to the dilution of waste downstream, toward better water quality), we try to work around that requirement by using many criteria, so they will tend to “even out” bias. And: there is nothing wrong with bias (compared to what?) as long as it is open, well understood and agreed upon!

The approach is meant to be very generous and inclusive in the definition of criteria, since the final DSS step supports toggling them in and out of consideration easily (provided they are defined and available in the first place).

Another important aspect of the preference structure is the set of constraints: we define upper and lower bounds for criteria that define acceptable, feasible solutions. Solutions outside these bounds are ignored until we relax any or all of the constraints, for example, if no feasible solution can be generated otherwise.

And who is “we”? One or more stakeholders and decision makers, public participation in the decision making process being among the principles the WFD and the Dublin Rules advocate. Web-based asynchronous access for distributed stakeholders supports that very well (Fedra 2005; Fedra and Harmancioglu 2005).

2.1 DSS: Concepts and Terminology

There is no single accepted definition of what constitutes a Decision Support System (DSS) in the technical literature (Sprague and Carlson 1982). For a collection of state-of-the-art reviews on Multi-criteria Decision Analysis, see Figueira et al. (2005).

“A decision support system (DSS) is both a process and a tool for solving problems that are too complex for humans alone, but usually too qualitative for only computers. Multiple objectives will complicate the task of decision-making, especially when the objectives conflict. As a process, a DSS is a systematic method of leading decision-makers and other stakeholders through the task of considering all objectives and then evaluating options to identify a solution that best solves an explicit problem while satisfying as many objectives as possible to as high a degree as possible” (Fedra and Jamieson 1996a; Westphal et al. 2003).

“Decision support systems” use an interactive, flexible, and adaptable computer-based information system especially developed for supporting the solution of a specific non-structured management problem (Fedra and Loucks 1985). Efficient data management, an intuitive user interface, support for the easy (and preferably consistent) editing of the decision maker’s expectations and constraints, and the use of models of a wide range of complexity to translate the decision variables into system response are all useful and common components. Finally, a DSS can be used by a single user, or it can be Web-based for distributed groups of users (Turban 1995).

The emphasis of DSS is on the support aspects. The main function is support for the understanding of the decision problem, to eventually find a compromise solution. Identification of the problem structure, the (formal) definition of preferences and constraints, and the set of available options with their costs and benefits are the key elements. Once the alternatives are well defined and the trade-offs well understood, finding the “best” alternative is comparatively simple. To “know” what we really want, it is helpful to know what we can get, and what it will cost.

In a system as complex as a river basin, we will always deal with several if not many stakeholders and decision makers, multiple and conflicting objectives, and multiple criteria. To address a very complex, dynamic, spatially distributed and highly non-linear system, we generate discrete alternatives (no smooth, differentiable functions linking the decision variables to the system performance exist), leading to a Discrete Multi-criteria Optimization problem.

A conceptually simple approach is the reference point methodology of multi-attribute theory (Wierzbicky 1998). The basic advantage is simplicity: the use of a minimum set of assumptions and a preference structure that can be expressed in terms of any number of criteria with their natural units. The system performance is described as a set of points (the alternatives) in an N-dimensional behavior space (the dimensions are defined by the criteria, and bounded by the constraints). An alternative is better the closer it is located to Utopia or a user defined target or reference point. To make criteria directly comparable, we normalize them, replacing their natural units and values (for the internal calculations; the interface retains the natural units) by the relative position between Nadir and Utopia as a degree or percentage of achievement. This makes it possible to trade off criteria without eliciting complicated weights or preferences from the user.
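The normalization and the distance to Utopia or a reference point can be illustrated with a short sketch. The Euclidean distance is an assumption made here for illustration (the text does not fix a particular metric), and the criteria names and values are invented.

```python
import math


def achievement(value, nadir, utopia):
    """Relative position between Nadir (0.0) and Utopia (1.0).

    Works for maximized and minimized criteria alike, because the
    direction is encoded in which bound is called Utopia.
    """
    return (value - nadir) / (utopia - nadir)


def normalize(alternative, nadir, utopia):
    """Replace natural units by degrees of achievement for every criterion."""
    return {c: achievement(alternative[c], nadir[c], utopia[c]) for c in nadir}


def distance(alternative, nadir, utopia, reference=None):
    """Euclidean distance (an assumed metric) to the reference point; default is Utopia."""
    norm = normalize(alternative, nadir, utopia)
    if reference is None:
        ref = {c: 1.0 for c in nadir}
    else:
        ref = normalize(reference, nadir, utopia)
    return math.sqrt(sum((norm[c] - ref[c]) ** 2 for c in nadir))


# Invented example: reliability is maximized, cost is minimized.
nadir = {"reliability": 0.80, "cost": 60.0}
utopia = {"reliability": 1.00, "cost": 20.0}
alternatives = {
    "A": {"reliability": 0.90, "cost": 30.0},
    "B": {"reliability": 0.95, "cost": 50.0},
}

best_default = min(alternatives, key=lambda k: distance(alternatives[k], nadir, utopia))

# A user who accepts a higher cost moves the reference point accordingly.
reference = {"reliability": 1.00, "cost": 50.0}
best_with_reference = min(
    alternatives, key=lambda k: distance(alternatives[k], nadir, utopia, reference)
)
```

With Utopia as the target, the cheaper alternative A is nearest; moving the reference point towards a higher acceptable cost makes B the preferred alternative, which is how a reference point implies relative weights without asking for them explicitly.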

2.1.1 Multiple and Conflicting Objectives

Objectives are what a decision maker wants to accomplish. One or more of the criteria can contribute to an objective. Any individual decision maker (and certainly a group of decision makers) will have more than one objective (including a few undisclosed ones).

Multiple objectives are usually competitive or conflicting: i.e., the improvement in one of them leads to a deterioration in others: there is a price to pay. We can speak of a conflict and about conflicting objectives whether there is one or more decision makers who have (within or between them) different objectives and who act on the same system or share the same resources. A river basin is a perfect example.

2.1.2 Multi-Attribute Theory

Making decisions is a choice between alternatives. This choice is based on some ranking, which is trivial with a single criterion (like cost), and complex with many. The choice between alternatives also needs an effective design of a repertoire of possible alternative decisions likely to contain attractive candidates.

The first step is based on satisficing: finding “good enough” (feasible) candidates. The design process (our model) will generate one or more feasible solutions. If there is only one feasible solution, Hobson’s choice suggests itself, as well as a redefinition of the preference structure and the design process for alternatives. If there are many feasible alternatives to choose from, a second phase selection process is used to identify a preferred solution (accepted by all or a qualified majority of stakeholders).

Any non-trivial real-world decision problem (like managing a river basin) involves multiple criteria that describe the alternatives and are used to express constraints and objectives; these are usually also multiple, and often conflicting (Bell et al. 1977).

The set of decision-relevant attributes or criteria is not given a priori but is itself part of the decision making process. The selection and definition of criteria is one of the most critical steps together with the definition of “feasible” (setting the constraints). Adding or deleting criteria and moving constraints is a very powerful way to influence decisions.

A major concern is to make sure the criteria are open and relevant, that is, they describe aspects of the decision problem that are indeed meaningful and relevant to (and understood and agreed upon by) all stakeholders and actors. This invariably means that they are incommensurable, i.e., they have very different units that cannot be readily compared. The criteria will also include intangibles that are not amenable to direct measurement but may reflect psychological or aesthetic considerations, beliefs, fears, and perceptions, which are extremely difficult to measure or elicit and scale. The problem of environmental valuation, but also the cost or the value of human life, are typical, and usually controversial, examples.

Water resources management strategies and associated decisions may have a considerable lifetime (e.g., building a reservoir). Their consequences evolve over time. Beyond introducing uncertainties about future boundary conditions (for example, demographic development, food prices, or the climate), this leads to considerations of intergenerational equity or trade-offs. Requirements for long-term equity and sustainability will add further dimensions.

2.1.3 Goal Programming, The Satisficing Paradigm

Reference point approaches are a generalization of goal programming. They were developed, starting with research done at the International Institute for Applied Systems Analysis (IIASA) in Laxenburg, Austria, specifically as a tool of environmental model and decision analysis (Wierzbicky 1998; Nakayama and Sawaragi 1983).

The main advantages of goal programming are related to the psychologically appealing idea that we should set a goal in objective space and try to come close to it. Coming close to a goal suggests minimizing a distance measure between an attainable objective vector (decision outcome) and the goal vector.

According to Simon (1957, 1996), real decision makers do not optimize their “utility” when making decisions. Simon postulated that actual decision makers, through learning, adaptively develop aspiration levels for various important outcomes of their decisions: they seek decisions as close as possible to the aspiration levels and “good enough”.

For a more detailed discussion of the reference point idea and implementation for water resources management, please refer to ESS (2006a), technical reports and project deliverables from the EU INCO-MPC project OPTIMA on water resources management optimization (http://www.ess.co.at/OPTIMA).

2.1.4 Discrete Multi-Criteria Methods

The basic optimization problem can be summarized as (for more detailed treatment, see: ESS 2006b)

where

is the vector of decision variables (the scenario parameters), and

defines the objective function. X0 defines the set of feasible alternatives that satisfy the constraints:

In the case of numerous scenarios with multiple criteria, we can define the partial ordering

where at least one of the inequalities is strict. A solution for the overall problem is a Pareto-optimal solution:
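The formulation described in this subsection can be written in a standard form as follows. This is a hedged sketch rather than a quotation: only $X_0$ is named in the text, while $n$, $p$, $m$, the constraint functions $g_j$, and the minimization convention are assumptions made for illustration.

$$
\min_{x \in X_0} f(x), \qquad x \in \mathbb{R}^n, \qquad f : \mathbb{R}^n \to \mathbb{R}^p
$$

$$
X_0 = \{\, x \in \mathbb{R}^n : g_j(x) \le 0,\ j = 1, \dots, m \,\}
$$

$$
f(x^1) \preceq f(x^2) \iff f_i(x^1) \le f_i(x^2) \quad \forall\, i = 1, \dots, p
$$

$$
x^* \in X_0 \text{ is Pareto-optimal} \iff \nexists\, x \in X_0 : f(x) \preceq f(x^*),\ f(x) \ne f(x^*)
$$

The last condition states that no feasible alternative is at least as good as $x^*$ in every criterion and strictly better in at least one.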

Since decision and solution space are of relatively high dimensionality, the direct comparison of a larger number of alternatives becomes difficult in cognitive terms. The data sets describing the scenarios can be displayed in simple scattergrams (Fig. 1) with a user defined selection of criteria for the (normalized) axes. Along these axes, constraints in terms of minimal and maximal acceptable values of the performance variable in question can be set, leading to a screening and reduction of alternatives.

As an implicit reference point, the utopia point can be used. Consequently, and unless the user overrides this default by specifying an explicit reference point, the system always has a solution (the feasible alternative nearest to the reference point) that can be indicated and highlighted on the scattergrams and in a listing of named alternatives. A web-based implementation makes access for a large and distributed group of decision makers feasible, also asynchronously.

However, both the procedure and the underlying concepts are somewhat complex, involved, and not easily used other than with a captive audience of students of decision theory.

2.2 The Satisficing Concept: Good Enough

The basic idea is simple enough: rather than looking for “best” in some sense, define “good enough”, i.e., acceptable in terms of constraints. Expressing aspirations as a set of constraints is simple, intuitively understandable, and lends itself well to a participatory approach, whether as a group of decision makers together in front of the computer screen or with distributed web access.

An initial set of reasonable (agreed upon) constraint values is defined in the natural units of the criteria, which makes the procedure easy to understand: stakeholders can easily express what they want. A set of feasible solutions that meets the constraints is “found” in the set of available alternatives or generated, e.g., by simulation modelling. If the set is empty, the constraints are relaxed; the sequence and degree of relaxation are a reflection of the DM’s preferences (and negotiation skills). Criteria can be added or deleted, and new strategies tried in the design of alternatives.

If the set includes very many solutions, the constraints can be tightened in the same interactive and iterative procedure as above, but in the opposite direction. What is important is the feedback from what is possible on the definition of the preferences. The procedure ends whenever the decision makers are all satisfied.
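The iterative relaxation can be sketched as a simple loop. The relaxation order stands in for the decision makers' priorities; the step size, the rule of widening both bounds symmetrically, and all names and values are assumptions for illustration only.

```python
def feasible(results, constraints):
    """Return all results that satisfy every (lower, upper) constraint."""
    return [
        r for r in results
        if all(low <= r[c] <= high for c, (low, high) in constraints.items())
    ]


def relax_until_feasible(results, constraints, relax_order, step=0.1, max_rounds=20):
    """Widen one constraint per round, in the given order, until something is feasible.

    Each relaxation widens the interval by `step` times its current width
    on both sides (an assumed rule, chosen for simplicity).
    """
    current = dict(constraints)
    for round_no in range(max_rounds):
        found = feasible(results, current)
        if found:
            return found, current
        name = relax_order[round_no % len(relax_order)]
        low, high = current[name]
        width = (high - low) * step
        current[name] = (low - width, high + width)
    return [], current


# Invented model results and constraints in natural units.
results = [
    {"reliability": 0.88, "cost": 48.0},
    {"reliability": 0.80, "cost": 30.0},
]
constraints = {"reliability": (0.90, 1.00), "cost": (0.0, 50.0)}

found, relaxed = relax_until_feasible(results, constraints, relax_order=["reliability"])
```

In this example the reliability bound has to be relaxed twice before the first alternative becomes feasible, while the cost constraint stays untouched. Tightening works the same way with a negative step.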

From a game theoretical point of view (e.g., Gibbons 1997), we aim at a transition from a perceived zero-sum game, or a version of the prisoner’s dilemma with dominant strategies and a possibly suboptimal equilibrium, to a cooperative game. In terms of water use, while allocation for consumptive use may indeed be understood as a zero-sum game, total extractions are not. The possibility for recycling and re-use, but even more so a “water market” (selling water rights towards more efficient and beneficial uses), requires the introduction of the appropriate coordination and exchange mechanisms: the possibility to trade water in some sense so that the overall benefits of use can be increased together with individual user benefits.

In terms of consumptive use, managing a water resources system is a zero-sum game if getting more water is the only form of pay-off. Allocating water to the most “beneficial” use is obvious and needs some compensation mechanism (the “water market”). But “leaving” more water in the system has other benefits. These benefits, however, are generally shared between all stakeholders (whether they save water or not) and we get to a multiplayer form of the well known prisoner’s dilemma. Here the payoff depends on the coordinated (or at least cumulative) action of several if not all players (Power 2009).
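The structure of this multiplayer dilemma can be made concrete with a toy payoff function. The linear public-goods form and the numbers (four players, private cost 1.0 per unit saved, shared benefit 3.0 per unit saved) are assumptions chosen for illustration, not values from the case study.

```python
def payoffs(saves, cost=1.0, shared_benefit=3.0):
    """Payoff per player: an equal share of the common benefit minus the private cost.

    saves[i] is the amount of water player i leaves in the system.
    """
    n = len(saves)
    common = shared_benefit * sum(saves) / n
    return [common - cost * s for s in saves]


all_save = payoffs([1, 1, 1, 1])     # everybody leaves water in the system
none_save = payoffs([0, 0, 0, 0])    # nobody does
one_defects = payoffs([0, 1, 1, 1])  # player 0 free-rides on the others
```

The free-rider does better than under full cooperation, yet everybody is better off when all save than when nobody does. This is the dilemma that coordination or compensation mechanisms are meant to resolve.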

3 Optimization: Only the Best

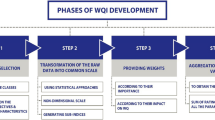

To find the “best” strategy for river basin management, a two-phased approach is used:

- The design phase, generating a large number of feasible and eventually non-dominated alternatives: satisficing.
- The selection phase, selection of a preferred, best solution, applying the multi-criteria methods described above.

A central concept here is that there is no such thing as “best” unless a decision maker or group of decision makers declares something as “best”: the outcome, or even better, the (however adaptable) transparent selection rules.

Another consideration leading to the two-phased approach is that the two steps, satisficing and multi-criteria selection, can deal with any level of complexity, dynamics, spatial distribution, non-linearity, thresholds, bifurcation, cusps and singularities in the underlying model representation. There is no (or rather less) need for model simplification as the price for being able to optimize; this is paid for by a much lower “efficiency” in the search and no guarantee of ever finding a “global optimum”.

There is no direct functional relationship between decision variables and system performance: the relationship is based on an “inverse solution”. We sample the decision space, generate a point in performance space from each sample, and based on the classification of system performance (feasible/infeasible, dominated/non-dominated, achievement or distance to Utopia or a reference point) we can now map the successful solutions back into decision space to identify regions there that lead to “good” solutions with a higher probability.
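Mapping successful solutions back into decision space can be as simple as estimating, for each decision option, the share of samples that turned out feasible. The sketch below assumes discrete options per decision variable; the variable and option names are invented.

```python
from collections import defaultdict


def success_rate_by_choice(samples):
    """Estimate the share of feasible outcomes for every (variable, option) pair.

    samples: list of (decision, is_feasible), where decision maps a
    decision variable to the option chosen in that sample.
    """
    counts = defaultdict(lambda: [0, 0])  # (variable, option) -> [feasible, total]
    for decision, ok in samples:
        for variable, option in decision.items():
            counts[(variable, option)][1] += 1
            if ok:
                counts[(variable, option)][0] += 1
    return {key: good / total for key, (good, total) in counts.items()}


# Invented samples: decision vector and the feasibility classification of its result.
samples = [
    ({"farm": "drip", "city": "leak_repair"}, True),
    ({"farm": "drip", "city": "none"}, True),
    ({"farm": "furrow", "city": "leak_repair"}, False),
    ({"farm": "furrow", "city": "none"}, False),
    ({"farm": "drip", "city": "none"}, False),
]
rates = success_rate_by_choice(samples)
```

Options with a high success rate mark the regions of decision space worth sampling more densely in the next round; the same bookkeeping works for non-dominated instead of feasible solutions.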

3.1 Design: Alternatives to Choose From

The basic logic of a discrete (multi-criteria) decision support approach is conceptually simple: a set of possible (discrete) alternative system behaviours is generated (by various modelling techniques), each representing an alternative control or management strategy leading to a corresponding performance of the system. This performance is described in terms of any number of criteria that can be evaluated and compared (explicit or implicit trade-offs) to arrive at a final preference ranking of the alternatives and an eventual choice of a preferred alternative as the solution of the Decision Process. For the selection process, we deal with the sub-set of feasible solutions (a very evolutionary strategy) that are “good enough” and, if sufficiently numerous (again at the price of considerable computational effort), stand a good chance to include some attractive improvements. And, as will be discussed below, the basic idea is not so much to find the best, but to understand what that means, and what it might cost.

As a consequence of that indirect (inverse) approach, the model that generates system performance from decision vectors can be as complex as needed for a faithful representation of the system (including all the criteria/aspects that stakeholders value).

Nodes that correspond to stakeholder activities can be split or aggregated with arbitrary “resolution” to make sure all stakeholders find themselves represented in the model.

3.1.1 Dynamic Water Budget Modelling

The very basis for the evaluation of any and all “performance” criteria that describe how the basin management meets our expectations is a dynamic water budget model: this is supposed to tell us how much water, and (with the integration of a water quality model) of which quality, we can expect where and when (ESS 2006c; Fedra et al. 2007; Cetinkaya et al. 2007).

The model uses a network of Reaches (open channels and pipes) that connect any number of Nodes (Fig. 2). The Nodes represent sources of water (sub-catchments, springs, wells, inter-basin transfers, desalination plants), storage (reservoirs or lakes), and demand points (cities, industries, farms/irrigation districts, environmental water demands for wetlands). Reaches have a multi-segment geometry, take care of the routing, accept lateral inflow, interact with the groundwater, and lose water by evaporation and evapotranspiration of bank vegetation. Nodes generate benefits based on the amount of water allocated with a non-linear “cost and benefit” function (cost of water, revenue or benefit generated with the water). For any of the Nodes or Reaches, arbitrary non-linear penalties or payoffs can be defined as a function of inflow (or shortfall) or level, instantaneous or averaged over some “observation period”. The network communicates with one or more aquifers. Auxiliary models are a rainfall-runoff model that represents ungauged subcatchments (the model calculates the flow from an input Node), and an irrigation water demand model that estimates the (daily) demand for supplementary irrigation. A simple reservoir model runs at an hourly time step to simulate a specific release rule. The model is run for annual (historical or planning) scenarios, driven by a prognostic meteorological model like MM5 or WRF, used for dynamic downscaling of GFS or FNL global forecasts or re-analysis data. Alternatively, the model can be run in real-time for today (with data assimilation where available) and a few days of forecasts.

Fig. 2 Water resources dynamic budget model network: Gediz baseline scenario from the OPTIMA project http://www.ess.c.at/OPTIMA

Every model run (usually over a water year) now generates a (large) set of criteria, physical (hydrological such as flows, levels/storage) and derived “economic” criteria based on cost and benefit functions related to flow.
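The mass conservation principle behind such a model can be shown for a single storage node with a downstream demand. This is a deliberately minimal sketch, not the model described above: it ignores routing, groundwater and water quality, and the fixed daily evaporation loss and all numbers are assumptions.

```python
def simulate_storage_node(inflow, demand, capacity, storage0, evaporation=0.0):
    """Daily mass balance for one storage node.

    Per time step: add inflow, subtract evaporation, supply the demand as
    far as water is available, and spill whatever exceeds the capacity.
    Returns final storage and the daily supply, spill and evaporation series.
    """
    storage = storage0
    supplied, spilled, evaporated = [], [], []
    for q_in, q_dem in zip(inflow, demand):
        storage += q_in
        loss = min(evaporation, storage)  # cannot evaporate more than is stored
        storage -= loss
        supply = min(q_dem, storage)      # shortfall occurs if storage runs out
        storage -= supply
        spill = max(storage - capacity, 0.0)
        storage -= spill
        supplied.append(supply)
        spilled.append(spill)
        evaporated.append(loss)
    return storage, supplied, spilled, evaporated


# Invented four-day example in arbitrary volume units.
inflow = [5.0, 0.0, 10.0, 0.0]
demand = [4.0, 4.0, 4.0, 4.0]
final, supplied, spilled, evaporated = simulate_storage_node(
    inflow, demand, capacity=8.0, storage0=2.0, evaporation=0.5
)

# Derived criteria: volumetric reliability of supply and the closure of the budget.
reliability = sum(supplied) / sum(demand)
balance = 2.0 + sum(inflow) - final - sum(supplied) - sum(spilled) - sum(evaporated)
```

The `balance` term must be zero for any input: everything that enters the node is either stored, supplied, spilled or lost. Criteria such as the reliability of supply are then simple functions of the simulated series.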

3.1.2 Economic Criteria: Valuation

These economic criteria combine traditional monetization (based on market prices or some of the tools of environmental economics) with the more or less arbitrary values of penalties, thus yielding a multi-criteria description of the alternatives.

Part of the performance (vector) description is derived from the cost (investment and operating costs) that the optimization process assigns to individual nodes. The technologies (also including strategies, policies) provide some water saving potential versus the (annualized) investment and operating costs.

The penalties defined for violations of some (regulatory or freely defined) threshold values are purely based on individual or group preferences, arbitrary, defined by the user(s). They are not “objective” in any sense, but open for inspection, discussion, and agreement.

When analysing more traditional methods of valuation, a critical review will show that this is not that much different from what textbooks teach. One of the generally accepted valuation methods for non-market environmental goods and services is the so-called “travel cost method”, which equates the “value” of some environmental “object” to the total amount of money spent (the travel costs in a very inclusive sense) by its visitors and “users” (Bromley 1995).

Consider the following example “field experiment” from the literature (IHT, December 5, 1998). “An experimental program … allowing US National Parks to raise their fees has significantly boosted revenues without affecting the number of visitors … Four agencies reported recreational fee revenues nearly doubled from 93 M US$ in 1996 to 179 M US$ in 1998. At the same time, … the number of visitors to sites with higher fees increased by 5 %.” So, evaluation in environmental economics is not that simple after all. Either the parks have been significantly undervalued, or their value doubled overnight. In any case, it makes arbitrary valuation (or, more scientifically, contingent valuation, expressed willingness to pay) look good.

3.1.3 Generating Alternatives: Beating Combinatorics

The basic water resources and linked water quality model generates a dynamic water budget (daily resolution) over a water year with the direct and derived criteria values defined in the preference structure. To generate alternatives, we introduce “water technologies” that can be attributed to the individual Nodes of the model to effect changes in a Node’s (or a Reach’s) behaviour. The latter can be used to represent alternative network topologies. The simplest changes would be a reduction of consumptive water use or a change in water quality, but technologies can also describe reservoir release rules, evaporative losses along a reach, or reduced seepage losses, e.g., from an urban distribution network.

Each of the technologies is associated with costs (annualized investment and operating costs) and the change in system behaviour that this “buys”.
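How an investment is annualized is not specified here; a common choice is the standard capital recovery factor, sketched below. The discount rate, the lifetime and the function names `annualized_cost` and `cost_per_unit_saved` are assumptions for illustration.

```python
def annualized_cost(investment, annual_operating_cost, discount_rate, lifetime_years):
    """Annualized investment (capital recovery factor) plus annual operating cost."""
    if lifetime_years <= 0:
        raise ValueError("lifetime_years must be positive")
    if discount_rate == 0:
        capital = investment / lifetime_years        # simple straight-line case
    else:
        growth = (1 + discount_rate) ** lifetime_years
        capital = investment * discount_rate * growth / (growth - 1)
    return capital + annual_operating_cost


def cost_per_unit_saved(annual_cost, water_saved_per_year):
    """What a technology costs per unit of water it saves in a year."""
    return annual_cost / water_saved_per_year
```

Dividing the annualized cost by the water saved gives one simple way to compare technologies with very different lifetimes and cost structures.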

A simple example would be an irrigation system, where we can test the effect of changing the irrigated area, the crop and cropping pattern, and the irrigation technology used.

The first step is simple satisficing: we split the set of results into feasible and infeasible results. Whatever solution meets all the constraints is feasible, and will be subjected to the next step in the analysis.
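The satisficing step can be sketched as a simple filter. The representation of a model result as a dictionary of criteria values and of constraints as (lower, upper) bounds is an assumption for illustration, as is the name `split_feasible`.

```python
def split_feasible(results, constraints):
    """Split model results into feasible and infeasible sets.

    results:     list of dicts mapping criterion name -> value
    constraints: dict mapping criterion name -> (lower, upper);
                 None means that side is unbounded
    """
    feasible, infeasible = [], []
    for result in results:
        ok = all(
            (lower is None or result[name] >= lower)
            and (upper is None or result[name] <= upper)
            for name, (lower, upper) in constraints.items()
        )
        (feasible if ok else infeasible).append(result)
    return feasible, infeasible
```

A result is kept only if it meets every constraint; tightening or relaxing the bounds changes the size of the feasible set without re-running the model.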

The number of elements we may wish to modify, the very large number of possible “levels of introduction” for each of the technologies, and the possibility in many cases to combine several technologies yield a practically infinite number of possibilities. We can, for example, change the irrigated area and at the same time change crop and irrigation technology, which combines small numbers of discrete choices with continuous choices over a more or less well defined range. Sampling that “model performance space” requires a complex approach to obtain a reasonable yield of feasible solutions.

A first set of runs uses naïve Monte Carlo, possibly combined with pre-defined heuristics (simple production rules) that exploit “obvious” known relationships between decision and performance variables. Once a basic set of solutions has been generated, the a priori set of constraints can be adjusted in reaction to too many or not enough feasible solutions. To increase the efficiency of the search and steer the selection towards Utopia, the original heuristics can be extended by machine learning algorithms or concepts of Genetic Algorithms. These are based on the emerging coincidence of decision and performance parameters, or on favouring more successful sub-populations in the decision space.
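The naïve Monte Carlo stage can be sketched for the irrigation example, mixing one continuous decision (irrigated area) with two discrete ones (crop, irrigation technology). All numbers, the crop and technology tables, and the one-line demand formula standing in for the water budget model are hypothetical and serve only to show the sampling pattern.

```python
import random

# Hypothetical seasonal net water demand per hectare, by crop
CROPS = {"cotton": 9.0, "wheat": 4.5, "olive": 3.0}
# Hypothetical application efficiencies, by irrigation technology
TECHNOLOGIES = {"furrow": 0.55, "sprinkler": 0.75, "drip": 0.90}


def sample_decision(rng, max_area):
    """Draw one random combination of continuous and discrete choices."""
    return {
        "area": rng.uniform(0.0, max_area),
        "crop": rng.choice(sorted(CROPS)),
        "technology": rng.choice(sorted(TECHNOLOGIES)),
    }


def water_demand(decision):
    """Toy stand-in for a full model run: gross irrigation demand."""
    net = decision["area"] * CROPS[decision["crop"]]
    return net / TECHNOLOGIES[decision["technology"]]


def monte_carlo(n_runs, max_area, max_water, seed=0):
    """Sample n_runs decisions and keep those meeting the water constraint."""
    rng = random.Random(seed)   # seeded so that a batch of runs is reproducible
    feasible = []
    for _ in range(n_runs):
        decision = sample_decision(rng, max_area)
        if water_demand(decision) <= max_water:
            feasible.append(decision)
    return feasible
```

The ratio `len(feasible) / n_runs` is the yield of feasible solutions; if it is too low or too high, the constraint `max_water` can be adjusted before the next batch of runs.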

However, the generation of alternatives is perfectly “task parallel” and thus can be very efficiently implemented in modern, shared memory, multi-CPU multiple core architectures.

3.2 SELECTION: Conflict, Trade-Off, Pareto Efficiency

The final step of multi-criteria optimization is conflict resolution, finding an acceptable compromise. The different objectives are competing or conflicting, so moving from one solution to another always involves a trade-off between criteria and objectives, and thus give and take between stakeholders or interest groups.

For any improvement in one of the criteria, we must be ready to accept some loss in another.

This final stage pre-supposes that the alternatives considered are non-dominated or Pareto-optimal or Pareto efficient. Pareto optimality, named after Italian economist Vilfredo Pareto (Pareto 1971), is a measure of efficiency in multi-criteria decision situations. In multi-criteria problems there are two or more criteria measured in different units (“apples and oranges”), and no agreed-upon conversion factor exists to convert all criteria into a single agreed-upon metric that would make ranking and thus selection trivial.

Given a set of alternatives and several stakeholders, a move from one alternative to another that makes at least one stakeholder better off without making any other stakeholder worse off is called a Pareto improvement or Pareto optimization. A solution is Pareto efficient or Pareto optimal when no further Pareto improvements can be made, similar (but not equivalent) to a Nash equilibrium (Nash 1950).

In simple language and in terms of criteria (ESS 2006b), a solution is Pareto optimal if there is no other solution that performs at least as well on all criteria and strictly better on at least one of the criteria. A Pareto-optimal solution cannot be improved upon without giving up something else. A solution is dominated (not Pareto-optimal) if there is another that is at least as good in all criteria, and better in at least one of them.
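This definition translates directly into a dominance test. The sketch below assumes that every solution is a tuple of criteria values and that all criteria are to be minimized (criteria to be maximized can be negated first); the function names are chosen for illustration.

```python
def dominates(a, b):
    """True if a is at least as good as b on all criteria and strictly
    better on at least one (all criteria minimized)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_front(solutions):
    """Return the non-dominated solutions, in their original order."""
    return [
        s for s in solutions
        if not any(dominates(other, s) for other in solutions)
    ]
```

The pairwise comparison is quadratic in the number of solutions, which is acceptable for the few hundred or thousand feasible alternatives that typically survive the satisficing step.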

Pareto optimality can be shown in a scatter-plot of solutions projected in planes of two criteria at a time (Fig. 1). Each criterion is graphed on a separate axis. It is easy to visualize a problem with only two criteria, but much more difficult with three or more criteria. In a problem with two criteria, both of which are to be minimized (for example, consider the number of violations of environmental standards versus the costs of wastewater treatment as candidate criteria), Pareto-optimal solutions are those in the scatter-plot with no points down and to the left of them. Dominated solutions are those that lie up and to the right of at least one point on the “Pareto frontier” or surface.

It seems obvious that a rational decision maker would not consider a solution that is at best equal in most criteria, but worse in at least one, compared to some other solution, or in other terms, one that (at least theoretically) could be improved upon without extra cost. This, however, assumes that the set of criteria chosen is complete and representative, and that there are no “hidden agendas” not represented in the formal decision making process. In practice, this is a situation frequently encountered, but easily identified.
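Among the non-dominated solutions, one candidate compromise is the solution closest to Utopia, the theoretical point that combines the best value achieved for every criterion. The sketch below normalizes each criterion to the range between best and worst value in the set and uses the Euclidean distance; both the normalization and the distance measure are assumptions for illustration, and all criteria are assumed to be minimized.

```python
import math


def closest_to_utopia(solutions):
    """Return the solution with the smallest normalized distance to Utopia."""
    n_criteria = len(solutions[0])
    best = [min(s[i] for s in solutions) for i in range(n_criteria)]   # Utopia
    worst = [max(s[i] for s in solutions) for i in range(n_criteria)]

    def distance(solution):
        total = 0.0
        for i in range(n_criteria):
            span = worst[i] - best[i]
            # a criterion with identical values everywhere contributes nothing
            normalized = 0.0 if span == 0 else (solution[i] - best[i]) / span
            total += normalized ** 2
        return math.sqrt(total)

    return min(solutions, key=distance)
```

Such a default is only a starting point for discussion: weighting the criteria differently, or moving the reference point, will generally select a different compromise.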

And it is worthwhile repeating that the entire methodology is not so much about finding the best solution (as there is hardly ever an undisputed one) as about improving the process by which a satisfactory solution for all legitimately involved actors and stakeholders can be found, making the process and its rules transparent and open to criticism.

4 Discussion

Decision support, realistically, does not primarily make or help to make final decisions; it is (or should be) designed to help us understand the decision problem and the decision making process.

The very core of a DSS is support for structuring the problem well, i.e., the information system components (Fedra and Loucks 1985; Fedra and Jamieson 1996b) and defining the selection rules. In practice, any set of pre-defined rules is subject to change if it does not yield the desired solution. But in a formal, computer based DSS, changes of the rules and all valuation functions must be made explicit, which is the most important function of a DSS. It does not produce a “best” (one can argue that there is no such thing) solution like a rabbit from the proverbial hat. What a DSS can do is teach us what we really want, individually and as a group (and what we are prepared to pay for it).

In the EU sponsored RTD project OPTIMA with partners from around the Mediterranean (http://www.ess.co.at/OPTIMA) these tools were tried across river basins in Turkey, Cyprus, Lebanon, Palestine/Israel, Tunisia and Morocco, involving local stakeholders. The optimization experiments show improvements of up to 10 % in net benefits. While these were based on simple assumptions and limited data, this was enough to get stakeholders interested and start discussions.

Ultimately, we want a compromise solution that is acceptable to whatever qualified majority of stakeholders rather than satisfying some preconceived mathematical notion, however clever. This is about learning and understanding, and agreement. Introducing the computer (Fedra and Loucks 1985) aims at reducing the dominance of loud words and hand waving, by making the elements of the preference structure and the trade-offs involved more transparent and open, minimizing undisclosed “hidden agendas” so that we may better know why we get what we get - or do not get.

References

Bell DE, Keeney RL, Raiffa H (1977) Conflicting objectives in decisions. Wiley, Chichester, 442 pp

Bromley DW (ed) (1995) The handbook of environmental economics, Blackwell handbooks in economics. Blackwell, Cambridge, 705 pp

Cetinkaya CP, Fistikoglu O, Fedra K, Harmancioglu N (2007) Optimisation methods applied for sustainable management of water-scarce basins. J Hydroinformatics IWA Publ 2008 10/1:69–95

ESS GmbH (2006a) D03.2, Implementation report: basic optimization report. INCO-CT-2004-509091, OPTIMA, Optimisation for Sustainable Water Resources Management, 26 pp., http://www.ess.co.at/REPORTS/OPTIMAD3.2.pdf

ESS GmbH (2006b) D03.3, Implementation report: discrete multi-criteria optimization. INCO-CT-2004-509091, OPTIMA, Optimisation for Sustainable Water Resources Management, p 18, http://www.ess.co.at/REPORTS/OPTIMAD3.3.pdf

ESS GmbH (2006c) D03.1, Implementation report: water resources modeling framework. INCO-CT-2004-509091, OPTIMA, Optimisation for Sustainable Water Resources Management, p 34, http://www.ess.co.at/REPORTS/OPTIMAD3.3.pdf

Fedra K (2004) Water resources management in the coastal zone: issues of sustainability. In: Harmancioglu NB, Fistikoglu O, Dlkilic Y, Gul A (eds) Water resources management: risks and challenges for the 21st century. Proceedings of the EWRA Symposium, September 2–4, vol I. Izmir, Turkey, p 23–38

Fedra K (2005) Water resources simulation and optimization: a web based approach. IASTED/SMO 2005, Oranjestad, Aruba, August 2005, 6 pp. http://www.ess.co.at/OPTIMA/PUBS/fedra-aruba.pdf

Fedra K, Harmancioglu N (2005) A web-based water resource simulation and optimization system. In: Savic D, Walters G, King R, Khu A-T (eds) Proceedings of CCWI 2005, Water management for the 21st century, vol II. Center of Water Systems, University of Exeter, pp 167–172

Fedra K, Jamieson DG (1996a) The WaterWare decision-support system for river basin planning: II. Planning capability. J Hydrol 177(1996):177–198

Fedra K, Jamieson DG (1996b) An object-oriented approach to model integration: a river basin information system example. In: Kovar K, Nachtnebel HP (eds), IAHS Publ. no 235, pp. 669–676

Fedra K, Loucks DP (1985) Interactive computer technology for planning and policy modeling. Water Resour Res 21(2):114–122

Fedra K, Kubat M, Zuvela-Aloise M (2007) Web-based water resources management: economic valuation and participatory multi-criteria optimization. Proceedings of the Second IASTED International Conference WATER RESOURCES MANAGEMENT August 20–22, 2007, Honolulu, Hawaii, USA ISBN Hardcopy: 98-0-88986-679-9 CD: 978-0-88986-680-5

Figueira J, Greco S, Ehrgott M (2005) Multiple criteria decision analysis: state of the art surveys. Springer Science + Business Media, Inc., New York. ISBN 0-387-23081-5

Gibbons R (1997) An introduction to applicable game theory. J Econ Perspect 11(1):127–149, Winter 1997

ICWE (1992) The Dublin statement and report of the conference. International Conference on Water and the Environment: development Issues for the 21st century. 26–31 January, Dublin. http://www.wmo.int/pages/prog/hwrp/documents/english/icwedece.html, accessed 20150424

Michalewicz Z, Fogel DB (2000) How to solve it: modern heuristics. Springer, Berlin, 467pp

Nakayama H, Sawaragi Y (1983) Satisficing trade-off method for multi-objective programming. In: Grauer M, Wierzbicki AP (eds) Interactive decision analysis. Springer Verlag, Berlin - Heidelberg

Nash J (1950) Equilibrium points in n-person games. Proc Natl Acad Sci 36(1):48–49

Pareto V (1971) Manual of political economy. Translated by AS Schwier, 261 pp. Augustus M. Kelley Publishers, NY. (original Italian: Pareto (1906) Manuale di Economia Politica. Societa Editrice Libraria, Milano)

Power C (2009) A spatial agent-based model of N-person prisoner’s dilemma cooperation in a socio-geographic community. Journal of Artificial Societies and Social Simulation, 12/1/8 http://jasss.soc.surrey.ac.uk/12/1/8.html

Roy B (1968) Classement et choix en présence de points de vue multiples (la méthode ELECTRE). La Rev d’Informatique et Rech Opérationelle (RIRO) 8:57–75

RUBICON Ltd. & ESS GmbH (2010) Computer operated river project – proof of concept. Final Report to New South Wales, State Water. 78pp. http://www.ess.co.at/PDF/COR_FinalReport.pdf

Saaty TL (1995) Decision making for leaders: the analytic hierarchy process for decisions in a complex world, 3rd edn. RWS Publication, PA

Savenije H, van der Zaag P (2002) Water as an economic good and demand management: paradigms with pitfalls. Water Int 27(1):98–104

Simon HA (1957) Models of man. Social and rational. John Wiley and Sons, Inc., NY, 279 pp

Simon HA (1996) The science of the artificial, 3rd edn. MIT Press, Cambridge, 241 pp

Sprague RH, Carlson ED (1982) Building effective decision support systems. Prentice Hall, Englewood Cliffs, 329 pp

Turban E (1995) Decision support and expert systems: management support systems. Prentice Hall, Englewood Cliffs, 930 pp

Westphal KS, Vogel RM, Kirshen P, Chapra S (2003) Decision support system for adaptive water supply management. J Water Resour Plan Manag 129(3):165–177

Wierzbicki A (1998) Reference point methods in vector optimization and decision support. International Institute for Applied Systems Analysis, Laxenburg, IR-98-017, 43 pp

Fedra, K. River Basin Management: What do we Really Want?. Environ. Process. 2, 511–525 (2015). https://doi.org/10.1007/s40710-015-0084-4

DOI: https://doi.org/10.1007/s40710-015-0084-4